Audio version available on the blog (or search Stitcher, Spotify, Google Podcasts, etc. for "Cold Takes Audio").

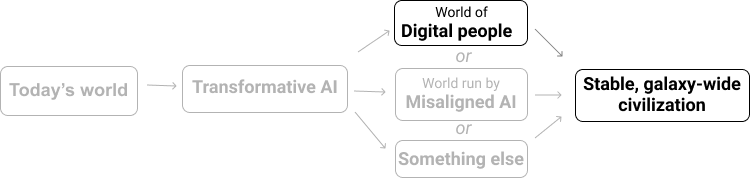

This is the third post in a series explaining my view that we could be in the most important century of all time. (Here's the roadmap for this series.)

- The first piece in this series discusses our unusual era, which could be very close to the transition between an Earth-bound civilization and a stable galaxy-wide civilization.

- This piece discusses "digital people," a category of technology that could be key for this transition (and would have even bigger impacts than the hypothetical Duplicator discussed previously).

- Many of the ideas here appear somewhere in sci-fi or speculative nonfiction, but I'm not aware of another piece laying out (compactly) the basic idea of digital people and the key reasons that a world of digital people would be so different from today's.

- The idea of digital people provides a concrete way of imagining how the right kind of technology (which I believe to be almost certainly feasible) could change the world radically, such that "humans as we know them" would no longer be the main force.

- It will be important to have this picture, because I'm going to argue that AI advances this century could quickly lead to digital people or similarly significant technology. The transformative potential of something like digital people, combined with how quickly AI could lead to it, forms the case that we could be in the most important century.

Intro

Previously, I wrote:

When some people imagine the future, they picture the kind of thing you see in sci-fi films. But these sci-fi futures seem very tame, compared to the future I expect ...

The future I picture is enormously bigger, faster, weirder, and either much much better or much much worse compared to today. It's also potentially a lot sooner than sci-fi futures: I think particular, achievable-seeming technologies could get us there quickly.

This piece is about digital people, one example[1] of a technology that could lead to an extremely big, fast, weird future.

To get the idea of digital people, imagine a computer simulation of a specific person, in a virtual environment. For example, a simulation of you that reacts to all "virtual events" - virtual hunger, virtual weather, a virtual computer with an inbox - just as you would. (Like The Matrix? See footnote.)[2] I explain in more depth in the FAQ companion piece.

The central case I'll focus on is that of digital people just like us, perhaps created via mind uploading (simulating human brains). However, one could also imagine entities unlike us in many ways, but still properly thought of as "descendants" of humanity; those would be digital people as well. (More on my choice of term in the FAQ.)

Popular culture on this sort of topic tends to focus on the prospect of digital immortality: people avoiding death by taking on a digital form, which can be backed up just like you back up your data. But I consider this to be small potatoes compared to other potential impacts of digital people, in particular:

- Productivity. Digital people could be copied, just as we can easily make copies of ~any software today. They could also be run much faster than humans. Because of this, digital people could have effects comparable to those of the Duplicator, but more so: unprecedented (in history or in sci-fi movies) levels of economic growth and productivity.

- Social science. Today, we see a lot of progress on understanding scientific laws and developing cool new technologies, but not so much progress on understanding human nature and human behavior. Digital people would fundamentally change this dynamic: people could make copies of themselves (including sped-up, temporary copies) to explore how different choices, lifestyles and environments affected them. Comparing copies would be informative in a way that current social science rarely is.

- Control of the environment. Digital people would experience whatever world they (or the controller of their virtual environment) wanted. Assuming digital people had true conscious experience (an assumption discussed in the FAQ), this could be a good thing (it should be possible to eliminate disease, material poverty and non-consensual violence for digital people) or a bad thing (if human rights are not protected, digital people could be subject to scary levels of control).

- Space expansion. The population of digital people might become staggeringly large, and the computers running them could end up distributed throughout our galaxy and beyond. Digital people could exist anywhere that computers could be run - so space settlements could be more straightforward for digital people than for biological humans.

- Lock-in. In today's world, we're used to the idea that the future is unpredictable and uncontrollable. Political regimes, ideologies, and cultures all come and go (and evolve). But a community, city or nation of digital people could be much more stable.

  - Digital people need not die or age.

  - Whoever sets up a "virtual environment" containing a community of digital people could have quite a bit of long-lasting control over what that community is like. For example, they might build in software to reset the community (both the virtual environment and the people in it) to an earlier state if particular things change - such as who's in power, or what religion is dominant.

  - I consider this a disturbing thought, as it could enable long-lasting authoritarianism, though it could also enable things like permanent protection of particular human rights.

I think these effects (elaborated below) could be a very good or a very bad thing. How the early years with digital people go could irreversibly determine which.

I think similar consequences would arise from any technology that allowed (a) extreme control over our experiences and environment; (b) duplicating human minds. This means there are potentially many ways for the future to become as wacky as what I sketch out here. I discuss digital people because doing so provides a particularly easy way to imagine the consequences of (a) and (b): it is essentially about transferring the most important building block of our world (human minds) to a domain (software) where we are used to the idea of having a huge amount of control to program whatever behaviors we want.

Much of this piece is inspired by Age of Em, an unusual and fascinating book. It tries to describe a hypothetical world of digital people (specifically mind uploads) in a lot of detail, but (unlike science fiction) it also aims for predictive accuracy rather than entertainment. In many places I find it overly specific, and overall, I don't expect that the world it describes will end up having much in common with a real digital-people-filled world. However, it has a number of sections that I think illustrate how powerful and radical a technology digital people could be.

Below, I will:

- Describe the basic idea of digital people, and link to a FAQ on the idea.

- Go through the potential implications of digital people, listed above.

This is a piece that different people may want to read in different orders. Here's an overall guide to the piece and FAQ:

| | Normal humans | Digital people |
|---|---|---|
| Possible today (More) | | |
| Probably possible someday (More) | | |
| Can interact with the real world, do most jobs (More) | | |
| Conscious, should have human rights (More) | | |
| Easily duplicated, ala The Duplicator (More) | | |
| Can be run sped-up (More) | | |
| Can make "temporary copies" that run fast, then retire at slow speed (More) | | |
| Productivity and social science: could cause unprecedented economic growth, productivity, and knowledge of human nature and behavior (More) | | |
| Control of the environment: can have their experiences altered in any way (More) | | |
| Lock-in: could live in highly stable civilizations with no aging or death, and "digital resets" stopping certain changes (More) | | |
| Space expansion: can live comfortably anywhere computers can run, thus highly suitable for galaxy-wide expansion (More) | | |
| Good or bad? (More) | Outside the scope of this piece | Could be very good or bad |

Premises

This piece focuses on how digital people could change the world. I will mostly assume that digital people are just like us, except that they can be easily copied, run at different speeds, and embedded in virtual environments. In particular, I will assume that digital people are conscious, have human rights, and can do most of the things humans can, including interacting with the real world.

I expect many readers will have trouble engaging with this until they see answers to some more basic questions about digital people. Therefore, I encourage readers to click on any questions that sound helpful from the digital people FAQ, or just read the FAQ straight through. Here is the list of questions discussed in the FAQ:

- Basics

- Humans and digital people

- Feasibility

- Other questions

- I'm having trouble picturing a world of digital people - how the technology could be introduced, how they would interact with us, etc. Can you lay out a detailed scenario of what the transition from today's world to a world full of digital people might look like?

- Are digital people different from mind uploads?

- Would a digital copy of me be me?

- What other questions can I ask?

How could digital people change the world?

Productivity

Like any software, digital people could be instantly and accurately copied. The Duplicator argues that the ability to "copy people" could lead to rapidly accelerating economic growth: "Over the last 100 years or so, the economy has doubled in size every few decades. With a Duplicator, it could double in size every year or month, on its way to hitting the limits."

Thanks to María Gutiérrez Rojas for this graphic, a variation on a similar set of graphics from The Duplicator illustrating how duplicating people could cause explosive growth.

Digital people could create a more dramatic effect than this, because of their ability to be sped up (perhaps by thousands or millions of times)[3] as well as slowed down (to save on costs). This could further increase both speed and coordinating ability.[4]
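To put the doubling-time claim quoted from The Duplicator into rough numbers, here is a minimal sketch. The three doubling times are illustrative assumptions chosen to match the phrases "every few decades," "every year," and "every month"; they are not forecasts, and the function name `growth_factor` is made up for this example.

```python
def growth_factor(years: float, doubling_time_years: float) -> float:
    """Factor by which an economy grows over `years` if it doubles
    once every `doubling_time_years`."""
    return 2.0 ** (years / doubling_time_years)

# Illustrative doubling times (assumptions for this sketch, not predictions).
scenarios = {
    "doubling every 25 years (roughly 'every few decades')": 25.0,
    "doubling every year": 1.0,
    "doubling every month": 1.0 / 12.0,
}

for label, doubling_time in scenarios.items():
    factor = growth_factor(10.0, doubling_time)
    print(f"{label}: grows by a factor of about {factor:.3g} in 10 years")
```

Under these assumptions, a decade of growth at today's pace multiplies the economy by roughly 1.3, a decade of yearly doubling multiplies it by 2^10 = 1024, and a decade of monthly doubling multiplies it by 2^120, which is more than 10^36. That last figure is one way to see why such growth would have to run into physical limits quickly.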

Another factor that could increase productivity: "Temporary" digital people could complete a task and then retire to a nice virtual life, while running very slowly (and cheaply).[5] This could make some digital people comfortable copying themselves for temporary purposes. Digital people could, for example, copy themselves hundreds of times to try different approaches to figuring out a problem or gaining a skill, then keep only the most successful version and make many copies of that version.

It's possible that digital people could be less of an economic force than The Duplicator since digital people would lack human bodies. But this seems likely to be only a minor consideration (details in footnote).[6]

Social science

Today, we see a lot of impressive innovation and progress in some areas, and relatively little in other areas.

For example, we're constantly able to buy cheaper, faster computers and more realistic video games, but we don't seem to be constantly getting better at making friends, falling in love, or finding happiness.[7] We also aren't clearly getting better at things like fighting addiction, and getting ourselves to behave as we (on reflection) want to.

One way of thinking about it is that natural sciences (e.g. physics, chemistry, biology) are advancing much more impressively than social sciences (e.g. economics, psychology, sociology). Or: "We're making great strides in understanding natural laws, not so much in understanding ourselves."

Digital people could change this. They could address what I see as perhaps the fundamental reason social science is so hard to learn from: it's too hard to run true experiments and make clean comparisons.

Today, if we want to know whether meditation is helpful to people:

- We can compare people who meditate to people who don't, but there will be lots of differences between those people, and we can't isolate the effect of meditation itself. (Researchers try to do so with various statistical techniques, but these raise their own issues.)

- We could also try to run an experiment in which people are randomly assigned to meditate or not. But we need a lot of people to participate, all at the same time and under the same conditions, in the hopes that the differences between meditators and non-meditators will statistically "wash out" and we can pick up the effects of meditation. Today, these kinds of experiments - known as "randomized controlled trials" - are expensive, logistically challenging, time-consuming, and almost always end up with ambiguous and difficult-to-interpret results.

But in a world with digital people:

- Anyone could make a copy of themselves to try out meditation, perhaps even dedicating themselves to it for several years (possibly sped-up).[8] If they liked the results, they could then meditate for several years themselves, and ensure that all future copies were made from someone who had reaped the benefits of meditation.

- Social scientists could study people who had tried things like this and look for patterns, which would be much more informative than social science research tends to be now. (They could also run deliberate experiments, recruiting/paying people to make copies of themselves to try different lifestyles, cities, schools, etc. - these could be much smaller, cheaper, and more definitive than today's social science experiments.[9]) The ability to run experiments could be good or bad, depending on the robustness and enforcement of scientific ethics. If informed consent weren't sufficiently protected, digital people could open up the potential for an enormous amount of abuse; if it were, it could hopefully primarily enable learning.
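The contrast between today's experiments and copy-based experiments can be sketched with a toy simulation. Everything here is a stated assumption for illustration: wellbeing is a single number, people differ a lot in their baseline (`PERSON_SPREAD`), a copy starts with exactly the same baseline as its original, and meditation adds a fixed `TRUE_EFFECT`. None of these figures come from real data.

```python
import random
import statistics

TRUE_EFFECT = 1.0     # hypothetical benefit of meditation (arbitrary units)
PERSON_SPREAD = 10.0  # hypothetical spread in baseline wellbeing across people
NOISE = 0.5           # hypothetical randomness in how any one life unfolds

def between_people_estimate(rng, n):
    """Today's design: n meditators compared with n different non-meditators."""
    meditators = [rng.gauss(0, PERSON_SPREAD) + TRUE_EFFECT + rng.gauss(0, NOISE)
                  for _ in range(n)]
    controls = [rng.gauss(0, PERSON_SPREAD) + rng.gauss(0, NOISE)
                for _ in range(n)]
    return statistics.mean(meditators) - statistics.mean(controls)

def copy_estimate(rng, n):
    """Copy-based design: each person is compared with a copy of themselves."""
    diffs = []
    for _ in range(n):
        baseline = rng.gauss(0, PERSON_SPREAD)  # shared by original and copy
        meditating_copy = baseline + TRUE_EFFECT + rng.gauss(0, NOISE)
        original = baseline + rng.gauss(0, NOISE)
        diffs.append(meditating_copy - original)
    return statistics.mean(diffs)

def spread_of_estimates(estimator, n, trials=500, seed=0):
    """Standard deviation of the estimate across many repeated experiments."""
    rng = random.Random(seed)
    return statistics.stdev([estimator(rng, n) for _ in range(trials)])

if __name__ == "__main__":
    print("between-people spread:", spread_of_estimates(between_people_estimate, n=20))
    print("copy-based spread:    ", spread_of_estimates(copy_estimate, n=20))
```

In this toy model, the copy-based estimate is far less noisy at the same sample size, because the large differences between people cancel out when each person is compared with their own copy. That is the sense in which such experiments could be smaller and more definitive; how well real copies would match this idealization is an open question.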

Digital people could also enable:

- Overcoming bias. Digital people could make copies of themselves (including temporary, sped-up copies) to consider arguments delivered in different ways, by different people, including with different apparent race and gender, and see whether the copies came to different conclusions. In this way they could explore which cognitive biases - from sexism and racism to wishful thinking and ego - affected their judgments, and work on improving and adapting to these biases. (Even if people weren't excited to do this, they might have to, as others would be able to ask for information on how biased they are and expect to get clear data.)

- Bonanzas of reflection and discussion. Digital people could make copies of themselves (including sped-up, temporary copies) to study and discuss particular philosophy questions, psychology questions, etc. in depth, and then summarize their findings to the original.[10] By seeing how different copies with different expertises and life experiences formed different opinions, they could have much more thoughtful, informed answers than I do to questions like "What do I want in life?", "Why do I want it?", "How can I be a person I'm proud of being?", etc.

Virtual reality and control of the environment

As stated above, digital people could live in "virtual environments." In order to design a virtual environment, programmers would systematically generate the right sort of light signals, sound signals, etc. to send to a digital person as if they were "really there."

One could say the historical role of science and technology is to give people more control over their environment. And one could think of digital people almost as the logical endpoint of this: digital people would experience whatever world they (or the controller of their virtual environment) wanted.

This could be a very bad or good thing:

Bad thing. Someone who controlled a digital person's virtual environment could have almost unlimited control over them.

- For this reason, it would be important for a world of digital people to include effective enforcement of basic human rights for all digital people. (More on this idea in the FAQ.)

- A world of digital people could very quickly get dystopian if digital people didn't have human rights protections. For example, imagine if the rule were "Whoever owns a server can run whatever they want on it, including digital copies of anyone." Then people might make "digital copies" of themselves that they ran experiments on, forced to do work, and even open-sourced, so that anyone running a server could make and abuse copies. This very short story (recommended, but chilling) gives a flavor for what that might be like.

Good thing. On the other hand, if a digital person were in control of their own environment (or someone else was and looked out for them), they could be free from any experiences they wanted to be free from, including hunger, violence, disease, other forms of ill health, and debilitating pain of any kind. Broadly, they could be "free from material need" - other than the need for computing resources to be run at all.

- This is a big change from today's world. Today, if you get cancer, you're going to suffer pain and debilitation even if everyone in the world would prefer that you didn't. Digital people need not experience having cancer if they and others don't want this to happen.

- In particular, physical coercion within a virtual environment could be made impossible (it could simply be impossible to transmit signals to another digital person corresponding to e.g. being punched or shot).

- Digital people might also have the ability to experience a lot of things we can't experience now - inhabiting another person's body, going to outer space, being in a "dangerous" situation without actually being in danger, eating without worrying about health consequences, changing from one apparent race or gender to another, etc.

Space expansion

If digital people underwent an explosion of economic growth as discussed above, this could come with an explosion in the population of digital people (for reasons discussed in The Duplicator).

It might reach the point where they needed to build spaceships and leave the solar system in order to get enough energy, metal, etc. to build more computers and enable more lives to exist.

Settling space could be much easier for digital people than for biological humans. They could exist anywhere one could run computers, and the basic ingredients needed to do that - raw materials, energy, and "real estate"[11] - are all super-abundant throughout our galaxy, not just on Earth. Because of this, the population of digital people could end up becoming staggeringly large.[12]

Lock-in

In today's world, we're used to the idea that the future is unpredictable and uncontrollable. Political regimes, ideologies, and cultures all come and go (and evolve). Some are good, and some are bad, but it generally doesn't seem as though anything will last forever. But communities, cities, and nations of digital people could be much more stable.

First, because digital people need not die or physically age, and their environment need not deteriorate or run out of anything. As long as they could keep their server running, everything in their virtual environment would be physically capable of staying as it is.

Second, because an environment could be designed to enforce stability. For example, imagine that:

- A community of digital people forms its own government (this would require either overpowering or getting consent from their original government).

- The government turns authoritarian and repeals the basic human rights protections discussed in the FAQ.

- The head wants to make sure that they - or perhaps their ideology of choice - stays in power forever.

- They could overhaul the virtual environment that they and all of the other citizens are in (by gaining access to the source code and reprogramming it, or operating robots that physically alter the server), so that certain things about the environment can never be changed - such as who's in power. If such a thing were about to change, the virtual environment could simply prohibit the action or reset to an earlier state.

- It would still be possible to change the virtual environment from outside - e.g., to physically destroy, hack or otherwise alter the server running it. But if this were taking place after a long period of population growth and space colonization, then the server might be way out in outer space, light-years from anyone who'd be interested in doing such a thing.

Alternatively, "digital correction" could be a force for good if used wisely enough. It could be used to ensure that no dictator ever gains power, or that certain basic human rights are always protected. If a civilization became "mature" enough - e.g., fair, equitable and prosperous, with a commitment to freedom and self-determination and a universally thriving population - it could keep these properties for a very long time.
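The two mechanisms described above (prohibiting a change outright, or resetting to an earlier state once a change is detected) can be sketched as a toy program. This is only an illustration of the logic; the class `LockedEnvironment` and its method names are hypothetical, and a real virtual environment would be vastly more complex.

```python
import copy

class LockedEnvironment:
    """Toy model of a virtual environment that protects some properties."""

    def __init__(self, state, locked_keys):
        self.state = dict(state)
        self.locked_keys = set(locked_keys)
        # The "earlier state" that the environment can be reset to.
        self.snapshot = copy.deepcopy(self.state)

    def propose_change(self, key, value):
        """Mechanism 1: changes to locked properties are simply prohibited."""
        if key in self.locked_keys:
            return False
        self.state[key] = value
        return True

    def force_change(self, key, value):
        """Mechanism 2: the change happens, is detected, and the whole
        environment is rolled back to the snapshot."""
        self.state[key] = value
        if any(self.state[k] != self.snapshot[k] for k in self.locked_keys):
            self.state = copy.deepcopy(self.snapshot)
            return "reset"
        return "kept"

env = LockedEnvironment({"ruler": "A", "weather": "sunny"}, locked_keys=["ruler"])
env.propose_change("weather", "rainy")  # allowed: weather is not locked
env.propose_change("ruler", "B")        # refused: ruler is locked
env.force_change("ruler", "B")          # triggers a reset of everything
print(env.state)
```

Note that in this sketch a reset also discards unrelated changes made since the snapshot (here, the weather), which mirrors the idea of resetting both the environment and the people in it. The same logic is neutral about what gets locked: the protected property could just as well be a basic right as a ruler's identity.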

(I'm not aware of many in-depth analyses of the "lock-in" idea, but here are some informal notes from physicist Jess Riedel.)

Would these impacts be a good or bad thing?

Throughout this piece, I imagine many readers have been thinking "That sounds terrible! Does the author think it would be good?" Or "That sounds great! Does the author disagree?"

My take on a future with digital people is that it could be very good or very bad, and how it gets set up in the first place could irreversibly determine which.

- Hasty use of lock-in (discussed above) and/or overly quick spreading out through the galaxy (discussed above) could result in a huge world full of digital people (as conscious as we are) that is heavily dysfunctional, dystopian or at least falling short of its potential.

- But acceptably good initial conditions (protecting basic human rights for digital people, at a minimum), plus a lot of patience and accumulation of wisdom and self-awareness we don't have today (perhaps facilitated by better social science), could lead to a large, stable, much better world. It should be possible to eliminate disease, material poverty and non-consensual violence, and create a society much better than today's.

The best example I can think of, but surely not the only one. ↩︎

The movie The Matrix gives a decent intuition for the idea with its fully-immersive virtual reality, but unlike the heroes of The Matrix, a digital person need not be connected to any physical person - they could exist as pure software. The agents ("bad guys") are more like digital people than the heroes are. In fact, one extensively copies himself. ↩︎

See Age of Em Chapter 6, starting with "Regarding the computation ..." ↩︎

For example, when multiple teams of digital people need to coordinate on a project, they might speed up (or slow down) particular steps and teams in order to make sure that each piece of the project is completed just on time. This would allow more complex, "fragile" plans to work out. (This point is from Age of Em Chapter 17, "Preparation" section.) ↩︎

See Age of Em Chapter 11, "Retirement" section. ↩︎

Without human bodies - and depending on what kinds of robots were available - digital people might not be good substitutes for humans when it comes to jobs that rely heavily on human physical abilities, or jobs that require in-person interaction with biological humans. However, digital people would likely be able to do everything needed to cause explosive economic growth, even if they couldn't do everything. In particular, it seems they could do everything needed to increase the supply of computers, and thereby increase the population of digital people. Creating more computing power requires (a) raw materials - mostly metal; (b) research and development - to design the computers; (c) manufacturing - to carry out the design and turn raw materials into computers; (d) energy. Digital people could potentially make all of these things a great deal cheaper and more plentiful:

- Raw materials. It seems that mining could, in principle, be done entirely with robots. Digital people could design and instruct these robots to extract raw materials as efficiently as possible.

- Research and development. My sense is that this is a major input into the cost of computing today: the work needed to design ever-better microprocessors and other computer parts. Digital people could do this entirely virtually.

- Manufacturing. My sense is that this is the other major input into the cost of computing today. Like mining, it could in principle be done entirely with robots.

- Energy. Solar panels are also subject to (a) better research and development; (b) robot-driven manufacturing. Good enough design and manufacturing of solar panels could lead to radically cheaper and more plentiful energy.

- Space exploration. Raw materials, energy, and "real estate" are all super-abundant outside of Earth. If digital people could design and manufacture spaceships, along with robots that could build solar panels and computer factories, they could take advantage of massive resources compared to what we have on Earth.

It is debatable whether the world is getting somewhat better at these things, somewhat worse, or neither. But it seems pretty clear that the progress isn't as impressive as in computing. ↩︎

Why would the copy cooperate in the experiment? Perhaps because they simply were on board with the goal (I certainly would cooperate with a copy of myself trying to learn about meditation!). Perhaps because they were paid (in the form of a nice retirement after the experiment). Perhaps because they saw themselves and their copies (and/or original) as the same person (or at least cared a lot about these very similar people). A couple of factors that would facilitate this kind of experimentation: (a) digital people could examine their own state of mind to get a sense of the odds of cooperation (since the copy would have the same state of mind); (b) if only a small number of digital people experimented, large numbers of people could still learn from the results. ↩︎

I'd also expect them to be able to try more radical things. For example, in today's world, it's unlikely that you could run a randomized experiment on what happens if people currently living in New York just decide to move to Chicago. It would be too hard to find people willing to be randomly assigned to stay in New York or move to Chicago. But in a world of digital people, experimenters could pay New Yorkers to make copies of themselves who move to Chicago. And after the experiment, each Chicago copy that wished it had stayed in New York could choose to replace itself with another copy of the New York version. (The latter brings up questions about philosophy of personal identity, but for social science purposes, all that matters is that some people would be happy to participate in experiments due to this option, and everyone could learn from the experiments.) ↩︎

See footnote from the first bullet point on why people's copies might cooperate with them. ↩︎

And air for cooling. ↩︎

See the estimates in Astronomical Waste for a rough sense of how big the numbers can get here (although these estimates are extremely speculative). ↩︎

For an entertaining example of the "very bad" outcome and the need for digital human rights see the USS Callister episode of Black Mirror: https://en.wikipedia.org/wiki/USS_Callister

As well as the episode "White Christmas" from the same show.

You mention that the ability to create digital people could lead to dystopian outcomes or a Malthusian race to the bottom. In my humble opinion bad outcomes could only be avoided if there is a world government that monitors what happens on every computer that is capable of running digital people. Of course, such a powerful government is a risk of its own.

Moreover, I think that a benevolent world government can be realised only several centuries in the future, while mind uploading could be possible at the end of this century. Therefore I believe that bad outcomes are much more likely than good ones. I would be glad to hear if you have some arguments why this line of reasoning could be wrong.

It seems very non-obvious to me whether we should think bad outcomes are more likely than good ones. You asked about arguments for why things might go well; a couple that occur to me are (a) as long as large numbers of digital people are committed to protecting human rights and other important values, it seems like there is a good chance they will broadly succeed (even if they don't manage to stop every case of abuse); (b) increased wealth and improved social science might cause human rights and other important values to be prioritized more highly, and might help people coordinate more effectively.

Thanks for the post.

One of my issues with arguments about digital people is that I think that at the point at which we have EMs, we've essentially hit the singularity and civilization will look so completely different to what it is now that it's hard to speculate or meaningfully impact what will happen. To coin a concept label, it's beyond the technological event horizon.

A world with EMs is a world where a small sect with a sufficient desire for expansion could conceivably increase its population a thousandfold every hour. It's a world where you can run ten thousand copies of the top weapons scientist at ten thousand times baseline speed. It's a world where you can back up a person, play their mind for a bit and then restore from the backup until you know exactly what you need to say/do to get them to act a certain way.

I imagine that all of these possibilities and many more besides them are likely to so radically change political incentives and the relative strengths of various forms of social organization that a world of EMs will be so different from modern nations states just as modern states are from chimps.

Still 100% worth thinking about and potentially thinking of ways to influence in a positive direction, but I'm highly sceptical that the world of EMs is something we have meaningful influence over other than delaying it a bit.

I broadly agree with this. The point of my post was to convey intuitions for why "a world of [digital people] will be so different from modern nations states just as modern states are from chimps," not to claim that the long-run future will be just as described in Age of Em. I do think despite the likely radical unfamiliarity of such a world, there are properties we can say today it's pretty likely to have, such as the potential for lock-in and space colonization.

I see a lot of talk with digital people about making copies but wouldn't a dominant strategy (presuming more compute = more intelligence/ability to multitask) be to just add compute to any given actor? In general, why copy people when you can just make one actor, who you know to be relatively aligned, much more powerful? Seems likely, though not totally clear, that having one mind with 1000 compute units would be strictly better for seeking power than 100 minds with 10 compute units each.

For example, companies might compete with one another to have the smartest/most able CEO by giving them more compute. The marginal benefit of more intelligence might be really high such that Tim Cook being 1% more intelligent than Mark Zuckerberg could mean Apple becomes dominant. This would trigger an intense race for compute. The same should go for governments. At some point we should have a multipolar superintelligence scenario but with human minds.

I think this depends on empirical questions about the returns to more compute for a single mind. If the mind is closely based on a human brain, it might be pretty hard to get much out of more compute, so duplication might have better returns. If the mind is not based on a human brain, it seems hard to say how this shakes out.

One thing that seems interesting to consider for digital people is the possibility of reward hacking. While humans certainly have quite a complex reward function, once we have a full understanding of the human mind (a very good understanding could be a prerequisite to digital people anyway), we should be able to figure out how to game it.

A key idea here is that humans have built-in limiters to their pleasure. For example, if we eat good food, that feeling of pleasure must subside quickly or else we'll just sit around satisfied until we die of hunger. Digital people need not have limits to pleasure. They would have none of the obstacles that we have to experiencing constant pleasure (e.g. we only have so much serotonin, money, and stamina, so we can't just be on heroin all the time). Drugs and video games are our rudimentary and imperfect attempts to reward hack ourselves. Clearly we already have this desire. By becoming digital we could actually do it all the time and there would be no downsides.

This would bring up some interesting dynamics. Would the first ems have the problem of quickly becoming useless to humans as they turn to wireheading instead of interacting with humans? Would pleasure-seekers simply be selected against socially, leaving some reality fundamentalists to drive the future while many ems sit in bliss? Would reward-hacked digital humans care about one another? Would they want to expand? If digital people optimize for personal 'good feelings', that probably won't need to coincide with interacting with the real world, except to maintain the compute substrate, right?

Digital people that become less economically, militarily, and politically powerful--e.g. as a result of reward hacking making them less interested in the 'rat race'--will be outcompeted by those that don't, unless there are mechanisms in place to prevent this, e.g. all power centralized in one authority that decides not to let that happen, or strong effective regulations that are universally enforced.

That seems true for many cases (including some I described), but you could also have a contingent of forward-looking digital people who are optimizing hard for future bliss (a potentially much more appealing prospect than expansion or procreation). It seems unclear that they would necessarily be interested in this being widespread.

Could also be that digital people find that more compute = more bliss without any bounds. Then there is plenty of interest in the rat race with the end goal of monopolizing compute. I guess this could matter more if there were just one or a few relatively powerful digital people. Then you could have similar problems as you would with AGI alignment. E.g. infrastructure profusion in order to better reward hack. (very low confidence in these arguments)

One question I had after reading this: how do we know that the digital environments would be similar enough to our own environments to take them as the same? I'm having trouble explaining the thought further, because it feels more like an intuition, but I guess it feels like there's some necessary degree of chaos present in our world that couldn't be properly modeled in a digital world. That is, something feels importantly different about a created world versus ours, in a way that would seem apt to change how I interacted with the world if I were in the digital environment.

How is sentience going to be approached in this model? Would some entities that would otherwise experience suffering be coded as not conscious for some periods of time?

Another very bad outcome relates to the risk of accidental mass suicide if a widespread belief in the consciousness of digital people is mistaken.

Supposing something like Integrated Information Theory is true, then digital people wouldn't be conscious unless they were instantiated on neuromorphic computer architecture with much more integration than our existing von Neumann architecture. But if people volunteer to get their physical brains extracted, sliced open, scanned, and uploaded onto VN-architecture computers because they think it will give them immortality and other new abilities, they'd accidentally be killing themselves.

It therefore might be preferable to duplicate and modify a small number of initial uploads rather than let just anybody upload who wants to. Obviously there's no suicide risk if digital people aren't uploads or if uploading doesn't require killing the bio-people though. Nevertheless, IIT would still complicate the question about granting civil rights.