robertskmiles

My perspective, I think, is that most of the difficulties that people think of as being the extra, hard part of one->many alignment are already present in one->one alignment. A single human is already a barely coherent mess of conflicting wants and goals interacting chaotically, and the strong form of "being aligned to one human" requires a solution that can resolve value conflicts between incompatible 'parts' of that human and find outcomes that are satisfactory to all interests. Expanding this to more than one person is a change of degree but not kind.

There is a weaker form of "being aligned to one human" that's just like "don't kill that human and follow their commands in more or less the way they intend", and if that's all we can get then that only translates to "don't drive humanity extinct and follow the wishes of at least some subset of people", and I'd consider that a dramatically suboptimal outcome. At this point I'd take it though.

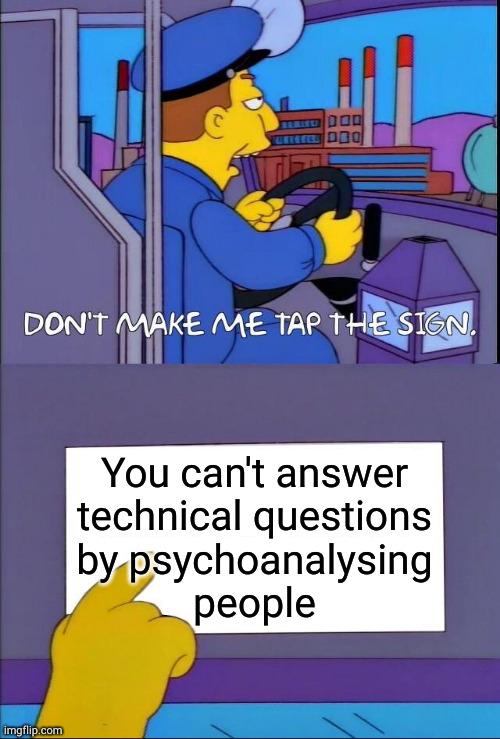

To avoid lowering the quality of discussion by just posting snarky memes, I'll explain my actual position:

"People may have bad reasons to believe X" is a good counter against the argument "People believe X, therefore X". So for anyone whose thought process is "These EAs are very worried about AI so I am too", I agree that there's a worthwhile discussion to be had about why those EAs believe what they do, what their thought process is, and the track record both of similar claims and of claims made by people using similar thought processes. This is because you're using their reasoning as a proxy - the causal chain to reality routes through those people's reasoning methods. Like, "My doctor says this medicine will help me, so it will" is an argument that works because it routes through the doctor having access to evidence and arguments that you don't know about, and you have a reason to believe that the doctor's reasoning connects with reality well enough to be a useful proxy.

However, the fact that some EAs believe certain things about AI is not the only, nor the main, nor even a major component of the evidence and argument available. You can look at the arguments those people make, and the real world evidence they point to. This is so much stronger than just looking at their final beliefs that it makes those final beliefs basically irrelevant. Say you go outside and the sun is out, the sky is clear, and there's a pleasantly cool breeze, and you bump into the world's most upbeat and optimistic man, who says "We're having lovely weather today". If you reason that this man's thought process is heavily biased and he's the kind of person who's likely to say the weather is nicer than it is, and therefore you're suspicious of his claim that the weather is nice, I'd say you're making some kind of mistake.

This sounds like a really useful thing to make!

Do you think there would be value in using the latest language models to do semantic search over this set of (F)AQs, so people can easily see if a question similar to theirs has already been answered? I ask because I'm thinking of doing this for AI Safety questions, in which case it probably wouldn't be far out of my way to do it for librarian questions as well.

I see several comments here expressing an idea like "Perhaps engaging writing is better, but is it worth the extra effort?", and I just don't think that that trade-off is actually real for most people. I think a more conversational and engaging style is quicker and easier to write than the slightly more formal and serious tone which is now the norm. Really good, polished, highly engaging writing may be more work, but on the margin I think there's a direction we can move that is downhill from here on both effort and boringness.

Exciting! But where's the podcast's URL? All I can find is a Spotify link.

Edit: I was able to track it down. Here it is: https://spkt.io/f/8692/7888/read_8617d3aee53f3ab844a309d37895c143

A minor point, but I think this overestimates the extent to which a small number of people with an EA mindset can help in crowded cause areas that lack such people. Like, I don't think PETA's problem is that there's nobody there talking about impact and effectiveness. Or rather, that is their problem, but adding a few people to do that wouldn't help much, because they wouldn't be listened to. The existing internal political structures and discourse norms of these spaces aren't going to let these ideas gain traction, so while EAs in these areas might be able to be more individually effective than non-EAs, I think it's mostly going to be limited to projects they can do more or less on their own, without much support from the wider community of people working in the area.

Edited to add TL;DR: If we assume all the quality factors are correct, I think these numbers overestimate my funding by a factor of 3.3, and don't seem to include my Computerphile videos, underestimating my impact by a factor of 3.8 to 4.5, depending on what you want to count, in effect underestimating my cost effectiveness by a factor of 12.5 to 14.8.

Oh, I somehow missed your messages, what platform were they on?

Anyway, I haven't been keeping track of my grants very well, but I don't think my funding could possibly add up to $1M? My early stuff was self-funded, I only started asking for money in (I think) 2018, and that was only like $40k for a year IIRC. That went up over time obviously, but in retrospect I clearly should have been spending more money! I can try to go through the history of grants and figure it out.

Edit: Ok I just asked Deep Research and it says the total I've received is only ~$300K. I uh, I did think it was more than that, but apparently I am not good at asking for money.

Edit2: Also, which videos are you including for that ~32M minutes estimate? My total channel watch time is 773,130 hours, or 46,387,800 minutes. That doesn't include the Computerphile videos though, which are most of the view minutes. I'll see if I can collect them up.

Edit3: Ok, I got Claude to modify your script to use a list of video URLs I provided (basically all my Computerphile videos, excluding some that weren't really about AI), and that's an additional 97,285,888 estimated viewer minutes, for a total of 143,673,688. Or if you want to exclude my earliest stuff (which was I think impactful but wasn't really causally downstream of being funded), it's 74,841,928 for a total of 121,229,728.
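For anyone who wants to check the arithmetic in the edits above, here is a minimal sketch that recomputes the totals and correction factors from the quoted figures. It is not the original estimation script. The constant and function names are made up for illustration, and the "~32M", "$1M" and "~$300K" inputs are treated as exact round numbers, which is an assumption. With unrounded inputs the combined factor comes out near 12.6 to 15.0, so the 12.5 to 14.8 in the TL;DR appears to come from multiplying the rounded factors (3.3 × 3.8 and 3.3 × 4.5).

```python
# Figures quoted in the comment above. The three "approximate" values are
# treated as exact round numbers here, which is an assumption.
CHANNEL_WATCH_HOURS = 773_130              # total channel watch time, in hours
COMPUTERPHILE_MINUTES_ALL = 97_285_888     # all listed Computerphile videos
COMPUTERPHILE_MINUTES_LATER = 74_841_928   # excluding the earliest videos
ORIGINAL_MINUTES_ESTIMATE = 32_000_000     # "~32M minutes" (approximate)
ORIGINAL_FUNDING_ESTIMATE = 1_000_000      # "$1M" (approximate)
REPORTED_FUNDING = 300_000                 # "~$300K" (approximate)


def correction_factors(computerphile_minutes):
    """Return (total_minutes, impact_factor, funding_factor, combined_factor)."""
    channel_minutes = CHANNEL_WATCH_HOURS * 60
    total_minutes = channel_minutes + computerphile_minutes
    # How much the original estimate undercounts viewer minutes.
    impact_factor = total_minutes / ORIGINAL_MINUTES_ESTIMATE
    # How much the original estimate overcounts funding.
    funding_factor = ORIGINAL_FUNDING_ESTIMATE / REPORTED_FUNDING
    # Cost effectiveness scales with minutes per dollar, so the factors multiply.
    return total_minutes, impact_factor, funding_factor, impact_factor * funding_factor


for label, minutes in [
    ("all Computerphile videos", COMPUTERPHILE_MINUTES_ALL),
    ("excluding earliest videos", COMPUTERPHILE_MINUTES_LATER),
]:
    total, impact, funding, combined = correction_factors(minutes)
    print(f"{label}: total={total:,} min, impact x{impact:.2f}, "
          f"funding x{funding:.2f}, combined x{combined:.2f}")
```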