Crossposted to LessWrong.

Summary:

I apply the self-indication assumption (a theory of anthropics) and some non-causal decision theories to the question of how common spacefaring civilisations are in the Universe. These theories push strongly towards civilisations being very common, and combining them with the observation that we haven’t seen any extraterrestrial life yields a quite specific estimate of how common such civilisations are. If you accept the self-indication assumption, you should be almost certain that we’ll encounter other civilisations if we leave the galaxy. In this case, 95 % of the reachable universe will already be colonised when Earth-originating intelligence arrives, in expectation. Of the remaining 5 %, around 70 % would eventually be reached by other civilisations, while 30 % would have remained empty in our absence. Even if you don’t accept the self-indication assumption, most non-causal decision theories have the same practical implications. If you believe that other civilisations colonising the Universe is positive, this provides some reason to prefer interventions that increase the quality of the future over reducing non-AI extinction risk; if you think that other civilisations colonising the universe is negative, the opposite is true.

Introduction

There are billions of stars in the Milky Way, and billions of trillions of stars in the observable universe. The Fermi observation is the surprising observation that not a single one of them shows any signs of life, named after the Fermi paradox. There are several possible explanations for the Fermi observation: perhaps life is very unlikely to arise from any particular planet, perhaps life has just recently begun emerging in the Universe, or perhaps there is some reason that life never leaves the solar system in which it emerges. Without any further information, it may seem difficult to figure out which one it is: but the field of anthropics has a lot to say about this kind of situation.

Anthropics is the study of what we should do and what we should believe as a consequence of observing that we exist. For example, we can observe that life and civilisation appeared on Earth. Should we interpret this as strong evidence that life appears frequently? After all, life is far more likely to arise on this planet if life appears frequently than if it doesn’t; thus, life arising on this planet is evidence for life appearing frequently. On the other hand, some civilisation like ours is likely to exist regardless of how common life is, and all such civilisations will obviously find that life appeared on their planet: otherwise they wouldn’t have existed. No consensus has yet emerged on the correct approach to these questions, but a number of theories have been put forward.

This post is inspired by the recent Dissolving the Fermi Paradox (Sandberg, Drexler and Ord, 2018), which doesn’t draw any special conclusion from our existence, and thus concludes that life is likely to be very uncommon.[1] Here, I investigate the implications of theories that interpret our existence as strong reason to act as if life appears frequently. Specifically, the self-indication assumption implies that life should be quite common, and decision theories such as Evidential Decision Theory, Functional Decision Theory and Updateless Decision Theory give similar results (anthropics and decision theories are explained in Updating on anthropics). However, the Fermi observation provides a strong upper bound on how common life can be, given some assumptions about the possibility of space travel. The result of combining the self-indication assumption with the Fermi observation gives a surprisingly specific estimate of how common life is in the Universe. The decision theories mentioned above yield different probabilities, but it can be shown that they always give the same practical implications as the self-indication assumption, given some assumptions about what we value.

Short illustration of the argument

In this section, I demonstrate how the argument works by applying it to a simple example.

In the observable universe, there are approximately stars. Now consider 3 different hypotheses about how common civilisations are:

- Civilisations are very common: on average, a civilisation appears once every stars.

- Civilisations are common: on average, a civilisation appears once every stars.

- Civilisations are uncommon: on average, a civilisation appears once every stars.

Under the first hypothesis, we would expect there to be roughly civilisations in the observable universe. Assuming that intergalactic travel is possible, this is very unlikely. The Sun seems to have formed fairly late compared with most stars in the Universe, so most of these civilisations would have appeared before us. All civilisations might not want to travel to different galaxies, and some of these would have formed so far away that they wouldn’t have time to reach us; but at least one out of civilisations would almost certainly have tried to colonise the Milky Way. Since we haven’t seen any other civilisations, the first hypothesis is almost certainly false.

The question, then, is how we should compare the second and the third hypothesis. Consider an extremely large (but finite) part of the Universe. In this region, a large number of copies of our civilisation will exist regardless of whether life is common or uncommon. However, if civilisations tend to appear once every stars, we can expect there to be more copies of our civilisation than if civilisations appear once every stars. Thus, when thinking about what civilisations such as ours should do, we must consider that our decisions will be implemented times as many times if civilisations are common compared with if they’re uncommon (according to some decision theories). If we’re total consequentialists,[2] this means that any decision we make will matter times as much if civilisations are common compared with if they’re uncommon (disregarding interactions between civilisations). Thus, if we assign equal prior probability to civilisations being common and civilisations being uncommon, and we care equally much about each civilisation regardless, we should act as if it’s almost certain that life is common.

Of course, the same argument applies to civilisations being very common: decisions are times more important if civilisations appear once every stars as if they appear once every stars. However, the first argument is stronger: given some assumptions, the probability that we wouldn’t have seen any civilisation would be less than , if civilisations were very common.

That civilisations appear once every stars implies that there should be about civilisations in the observable universe. Looking at details of when civilisations appear and how fast they spread, it isn’t that implausible that we wouldn’t have seen any of these; so the argument against the first hypothesis doesn’t work. However, as time goes by, and Earth-originating and other civilisations expand, it’s very likely that they will encounter each other, and that most space that Earth-originating intelligence colonises would have been colonised by other civilisations if we didn’t expand.

Cosmological assumptions

Before we dive into the emergence and spread of life, there are some cosmological facts that we must know.

First, we must know how the emergence of civilisations varies across time. One strikingly relevant factor for this is the rate of formation for stars and habitable planets. While there aren’t particularly strong reasons to expect the probability that life arises on habitable planets to vary with time, cosmological data suggests that the rate at which such planets are created has varied significantly.

Second, we must know how fast such civilisations can spread into space. This is complicated by the expansion of the Universe, and depends somewhat on what speed we think that future civilisations will be able to travel.

Planet formation

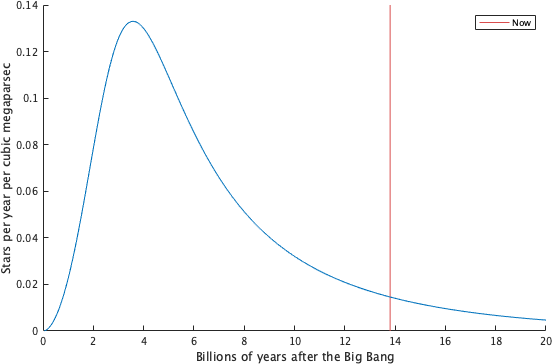

The rate of star formation is a relatively well studied area, with good access to past data. Star formation rate peaked a few billion years after the Big Bang, and has been exponentially declining ever since. Madau and Dickinson (2014) provide a fit to the past rates as a function of redshift z (an astronomical observable related to time), expressed in stars per year per cubic megaparsec. It’s a bit unclear how this rate will change into the future, but a decent guess is to simply extrapolate it (Sandberg, personal communication). Translating the redshift z to time, the star formation rate turns out to be log-normal, with an exponentially decreasing tail. This is depicted in figure 1.

Figure 1: Number of stars formed per year per cubic megaparsec in the Universe. The red line marks the present, approximately 13.8 billion years after the Big Bang.
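As a rough illustration of the fit mentioned above, the sketch below evaluates the best-fit star formation rate density from Madau and Dickinson (2014). Two things are assumptions rather than statements from this post: that this widely quoted fit is the one used here, and the units, which that paper gives as solar masses (not stars) per year per cubic megaparsec. The function names are made up for the example.

```python
import math

def star_formation_rate(z):
    """Best-fit cosmic star formation rate density at redshift z.

    Assumed form: Madau & Dickinson (2014), in solar masses per year
    per cubic megaparsec. Not confirmed to be the exact fit used in the post.
    """
    x = 1.0 + z
    return 0.015 * x**2.7 / (1.0 + (x / 2.9) ** 5.6)

def peak_redshift(z_max=8.0, steps=8000):
    """Locate the redshift of maximum star formation on a simple grid."""
    grid = [z_max * i / steps for i in range(steps + 1)]
    return max(grid, key=star_formation_rate)

z_peak = peak_redshift()
print(f"peak at z = {z_peak:.2f}")
print(f"rate today (z = 0): {star_formation_rate(0.0):.4f}")
print(f"rate at peak:       {star_formation_rate(z_peak):.4f}")
```

Converting redshift to time requires a cosmological model, which is left out here to keep the sketch short.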

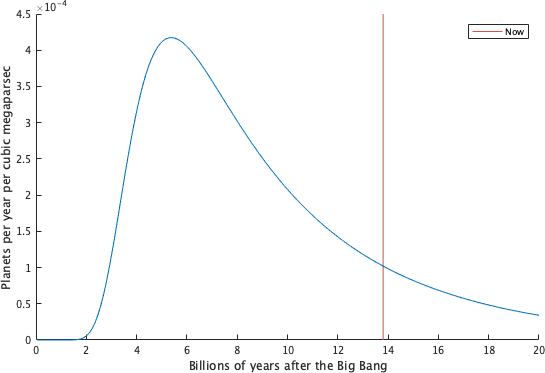

The formation rate of planets similar to the Earth is a bit more uncertain. In general, it seems like it should be similar to that of star formation, but with some delay due to needing heavier metals that weren’t available when the Universe was young. Behroozi and Peeples (2015) think that this delay is negligible, while Lineweaver (2001) thinks that it’s quite large. Lineweaver’s model assumes that the fraction of metal in the Universe is proportional to the number of stars that have already formed (since the production of heavy metals happens in stars), and that the probability of forming an Earth-like planet is proportional to the logarithm of this fraction.[3] Overall, this results in the peak of planet-formation happening about 2 billion years after the peak of star-formation, as depicted in figure 2.

Figure 2: Number of Earth-like planets formed per year per cubic megaparsec in the Universe. The red line marks the present.

This is the planet-formation rate I will be using for the most part. I discuss some other choices in Appendix C.

Speed of travel

The universe is expanding: distant galaxies are receding from us at a fast pace. Moreover, these galaxies are accelerating away from us; as time goes by, most of these will accelerate to velocities so fast that we will never be able to catch up with them. This implies that early civilisations can spread significantly farther than late civilisations. A civilisation arising 5 billion years ago would have been able to reach 2.7 times as much volume as we can reach, and a civilisation arising in another 10 billion years will only be able to reach 15 % of the volume that we can reach (given the assumptions below).

This gradual reduction of reachable galaxies will continue until about 100 billion years from now; at that point, civilisations will only be able to travel within the groups of galaxies that they are gravitationally bound to, since all other galaxies will be gone. The group that we’re a part of, the Local Group, contains a bit more than 50 galaxies, which is quite typical. Star formation will continue for 1 to 100 trillion years after this, but it will have declined so much that it’s largely negligible.

Because of the expansion of the Universe, probes will gradually lose velocity relative to their surroundings. A probe starting out at 80 % of the speed of light would only be going at 56 % of the speed of light after 10 billion years. Due to relativistic effects, very high initial speeds can counteract this: light won’t lose velocity at all, and a probe starting out at 99.9 % of the speed of light would still be going at 99.6 % after 10 billion years. If there’s a limit to the initial speed of probes, though, another way to counteract this deceleration is to periodically reaccelerate to the initial speed (Sandberg, 2018). This could be done by stopping at various galaxies along the way to gather energy and accelerate back to initial speed. As long as the stops are short enough, this could significantly increase how far a probe would be able to go. Looking at the density of galaxies across the Universe, there should be no problem in stopping every billion light-years; and it may be possible to reaccelerate even more frequently than that by stopping at the odd star in otherwise empty parts of the Universe, or by taking minor detours (Sandberg, personal communication).
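The deceleration figures above can be reproduced with a short sketch. It relies on the standard result that a free particle's momentum relative to its surroundings falls in inverse proportion to the growth of the Universe's scale factor. The growth factor of 1.97 over 10 billion years is an assumption, chosen here because it reproduces the numbers in the text; it is not taken from the post, and the function name is illustrative.

```python
import math

def speed_after_expansion(beta0, growth):
    """Speed (as a fraction of c) of a coasting probe after the Universe
    has expanded by the factor `growth` = a(end) / a(start).

    Uses the fact that gamma * beta (momentum per unit mass, in units of c)
    scales as 1 / a for a freely moving particle.
    """
    momentum0 = beta0 / math.sqrt(1.0 - beta0**2)  # gamma * beta at launch
    momentum1 = momentum0 / growth                  # redshifted momentum
    return momentum1 / math.sqrt(1.0 + momentum1**2)

# Assumed expansion factor over the next 10 billion years (hypothetical value,
# picked to match the figures quoted in the text).
GROWTH_10_GYR = 1.97

slow = speed_after_expansion(0.8, GROWTH_10_GYR)
fast = speed_after_expansion(0.999, GROWTH_10_GYR)
print(f"0.800c -> {slow:.3f}c")
print(f"0.999c -> {fast:.4f}c")
```

The same function shows why very fast probes barely slow down: at high speeds most of the momentum sits in the Lorentz factor, so even a halving of momentum leaves the speed close to that of light.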

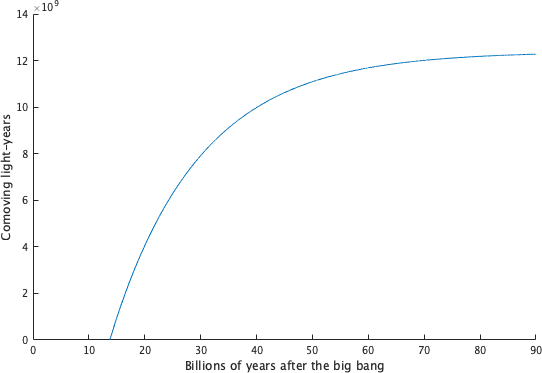

Given some assumptions about potential future technology, it seems plausible that most civilisations will be able to send out probes going at 80 % of the speed of light (Armstrong and Sandberg, 2013). For most calculations, this is the speed I’ve used. I’ve also assumed that probes can reaccelerate every 300 million years, which roughly corresponds to reaccelerating every 300 million light-years. These choices are by no means obvious, but most reasonable alternatives give the same results, as I show in Appendix C.

To calculate the distances reachable at various points in time, I use equations from Armstrong and Sandberg (2013).[4] These are quite complicated, but when a probe moves at 80 % of the speed of light, any case where reacceleration happens more than once every few billion years will be very similar to continuous reacceleration. During continuous reacceleration, some of the strange effects from the expansion of the Universe disappear, and the probe’s velocity relative to its present surroundings will always equal the initial velocity.

However, to take into account the expansion of the rest of the Universe, it is convenient to measure the velocity in comoving coordinates. Comoving coordinates use a coordinate frame that moves with the expansion of the Universe, such that the coordinates of galaxies remain constant even as they move away from the Earth. At any point in time, the comoving distance between two points is equal to the distance between those points today, regardless of how far they have expanded from each other. As a consequence, a probe with constant real velocity will move slower and slower in comoving coordinates as the real distance between galaxies grows, even if the probe is continuously reaccelerating. If it is reaccelerating often, or moving so fast that the deceleration is negligible, the velocity measured in comoving coordinates will at any time $t$ be $v / a(t)$, where $v$ is the initial velocity and $a(t)$ is the real length of one comoving unit at time $t$. The distance travelled at time $t$ by a probe launched at time $t_0$ is $d(t_0, t) = \int_{t_0}^{t} \frac{v}{a(t')}\,dt'$, in comoving coordinates. Figure 3 depicts this distance as a function of $t$, for a probe launched from the Earth around now.

Figure 3: Distance that a probe leaving Earth around now could reach, if it reaccelerated to 80 % of the speed of light every 300 million years. The distance corresponds to how far away any reached point is today, measured in light-years. Since the Universe is expanding and accelerating, it will take disproportionally longer for the probe to reach points that are farther away.

Further references to distance, volume and velocity should be interpreted in comoving coordinates, unless they are explicitly about the expansion of the Universe.
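To make the comoving-distance calculation concrete, here is a minimal sketch that integrates the comoving velocity of a continuously reaccelerating probe. Everything about the cosmology is an assumption for illustration: a flat universe containing only matter and a cosmological constant, with a Hubble constant of 67.7 km/s/Mpc and a dark energy fraction of 0.69. These are not the equations from Armstrong and Sandberg (2013), and the names are hypothetical.

```python
import math

# Assumed cosmological parameters (illustrative, not from the post).
H0 = 67.7 / 977.8          # Hubble constant in 1/Gyr (977.8 converts from km/s/Mpc)
OMEGA_L = 0.69             # dark energy fraction
OMEGA_M = 1.0 - OMEGA_L    # matter fraction (radiation ignored)

_K = 1.5 * math.sqrt(OMEGA_L) * H0
# Present age of the Universe in Gyr, defined so that scale_factor(T_NOW) == 1.
T_NOW = math.asinh(math.sqrt(OMEGA_L / OMEGA_M)) / _K

def scale_factor(t):
    """Real length of one comoving unit at cosmic time t (in Gyr)."""
    return (OMEGA_M / OMEGA_L) ** (1.0 / 3.0) * math.sinh(_K * t) ** (2.0 / 3.0)

def comoving_distance(t_launch, t_end, v=0.8, steps=20000):
    """Comoving distance (billions of light-years) covered between t_launch
    and t_end by a probe kept at speed v (fraction of c) by continuous
    reacceleration. Trapezoidal integration of v / a(t)."""
    dt = (t_end - t_launch) / steps
    total = 0.5 * (1.0 / scale_factor(t_launch) + 1.0 / scale_factor(t_end))
    for i in range(1, steps):
        total += 1.0 / scale_factor(t_launch + i * dt)
    return v * total * dt

reach = comoving_distance(T_NOW, T_NOW + 200.0)
print(f"age of the Universe in this model: {T_NOW:.2f} Gyr")
print(f"reach of a 0.8c probe launched now: {reach:.1f} billion light-years")
```

Because the integrand shrinks as the Universe expands, extending the end time beyond a couple of hundred billion years adds almost nothing to the reach, which mirrors the flattening of the curve described for figure 3.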

Civilisations in the reachable universe

Prior probability distribution

In the classical Drake equation, the expected number of detectable civilisations in the Milky Way is produced by multiplying 7 different estimates together:

- $R_*$, the rate of star formation in the Milky Way

- $f_p$, the fraction of stars that have planets around them

- $n_e$, the number of Earth-like planets for each such system

- $f_l$, the fraction of such planets on which life actually evolves

- $f_i$, the fraction of life-filled planets where intelligence eventually appears

- $f_c$, the fraction of intelligent civilisations which are detectable

- $L$, the average longevity of such civilisations

I will use a modified version of this equation, where I consider the spread of intergalactic civilisations across the Universe rather than the number of detectable civilisations in the Milky Way. Instead of $R_*$, $f_p$, and $n_e$, I will use the planet formation rate described in Planet formation. For the rest of this post, I will use “planet” and “Earth-like planet” interchangeably. Since what I care about is how intelligent life expands across the Universe over time, rather than how many detectable civilisations exist, I will replace the fraction $f_c$ with a fraction $f_s$, the fraction of intelligent civilisations that eventually decide to send probes to other galaxies. I assume that it's impossible to go extinct once intergalactic colonisation has begun,[5] and will therefore not use $L$.

Thus, the remaining uncertain parameters are $f_l$, $f_i$ and $f_s$. Multiplying $f_l$, $f_i$ and $f_s$ together, we get the expected fraction of Earth-like planets that eventually yield an intergalactic civilisation, which I will refer to as $f$.

For the prior probability of $f_l$ and $f_i$, I will use the distributions from Sandberg et al. (2018) with only small modifications.

The fraction of planets that yield life is calculated as $f_l = 1 - e^{-r}$ using the number of times that life is likely to emerge on a given planet, $r$, which is in turn modelled as a lognormal distribution with a standard deviation of 50 orders of magnitude.[6] Sandberg et al. (2018) use a conservatively high median of life arising 1 time per planet. Since my argument is that anthropics should make us believe that life is relatively common, rather than rare, I will use a conservatively low median of life appearing once every planets.

I will use the same $f_i$ as Sandberg et al., i.e., a log-uniform distribution between and .

$f_s$ corresponds to the so-called late filter, and is particularly interesting since our civilisation hasn’t passed it yet. While the anthropic considerations favor larger values of $f_l$ and $f_i$, the Fermi observation favors smaller values of at least one of $f_l$, $f_i$, and $f_s$, making $f_s$ the one value which is only affected by one of them. The total effect is that anthropic adjustments put a lot of weight on very small $f_s$ (which corresponds to a large late filter): Katja Grace has described how the self-indication assumption strongly predicts that we will never leave the solar system, and the decision-theoretic approaches ask us to consider that our actions can be replicated across a huge number of planets if other civilisations couldn’t interfere with ours (since this would make our observations consistent with civilisations being very common).

Looking at this from a total consequentialist perspective, the implications aren’t actually too big, if we believe that the majority of all value and disvalue will exist in the future. Consider the possibility that no civilisation can ever leave their solar system. In this case, our actions can be replicated across a vast number of planets: since no civilisations ever leave their planet, life can be very common without us noticing anything strange in the skies. However, if this is true, no civilisation will ever reach farther than its solar system, strongly reducing the impact we can have on the future. This reduction of impact is roughly proportional to the additional number of replications, and thus, the total impact that we can have is roughly as large in both cases.[7] To see why, consider that the main determinant of our possible impact is the fraction of the Universe that civilisations like us will eventually control, i.e. the fraction of the Universe that we can affect. This fraction doesn’t vary much by whether the Universe will be colonised by a large number of civilisations that never leave their solar systems, or a small number of civilisations that colonise almost all space they can reach. Since the amount of impact doesn’t vary much, the main thing determining what we should focus on is our anthropic-naïve estimates of what scenarios are likeliest.[8]

I won’t pay much more attention to the late filter in this article, since it isn’t particularly relevant for how life will spread across the Universe. To see why, consider that the Fermi observation and the anthropic update together will push the product $f_l f_i f_s$ to a relatively specific value (roughly the largest value that doesn’t make the Fermi observation too unlikely, as shown in the next two sections). As long as $f_s$ is significantly larger than the final product should be, it doesn’t matter what $f_s$ is: the updates will adjust $f_l$ and $f_i$ so that the product remains approximately the same. A 10 times larger $f_s$ implies a 10 times smaller $f_l f_i$, so the distribution of $f$ will look very similar. While our near term future will look different, the (anthropic-adjusted) probability that a given planet will yield an intergalactic civilisation will remain about the same, so the long term future of the Universe will be very similar. If, however, $f_s$ is so small that it alone explains why we haven’t seen any other civilisations (which is plausible if we assign non-negligible probability to space colonisation being impossible) the long term future of the Universe will look very different. The anthropic update will push $f_l$ and $f_i$ towards 1, and the probability distribution of $f$ will roughly equal the distribution of $f_s$. In this case, however, humans cannot (or are extraordinarily unlikely to) affect the long term future of the Universe, and whether the Universe will be filled with life or not is irrelevant to our plans. For this reason I will ignore scenarios where life is extraordinarily unlikely to colonise the Universe, by making $f_s$ loguniform between and . How anthropics and the Fermi observation should affect our beliefs about late filters is an interesting question, but it’s not one that I’ll expand on in this post.

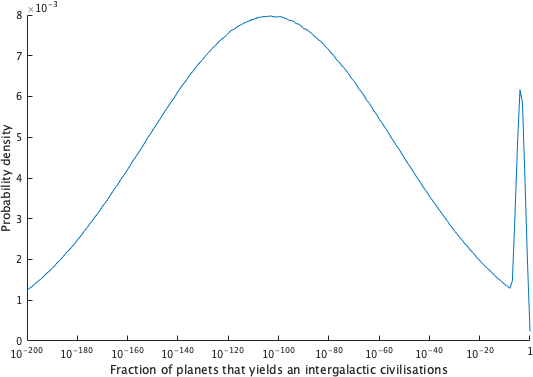

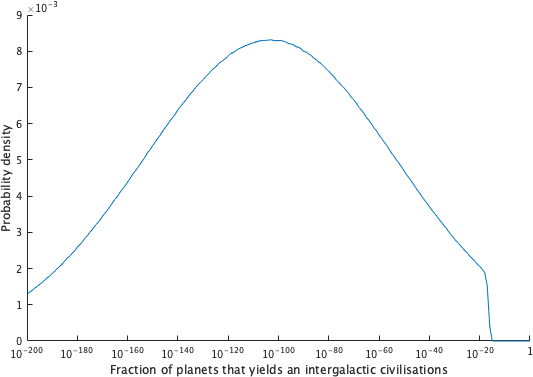

All taken together, the prior of $f$ is depicted in figure 4. This and all subsequent distributions were generated using Monte Carlo simulations, i.e., by generating large amounts of random numbers from the distributions and multiplying them together.

Figure 4: Prior probability distribution over the fraction of planets from which an intergalactic civilisation will emerge. The probability density measures the probability per order of magnitude. Since $f_l$ extends from $1$ to below , while neither $f_i$ nor $f_s$ varies more than 4 orders of magnitude, most of the shape of the graph is explained by $f_l$. The number of times that life is likely to arise on a given planet, $r$, has some probability mass on numbers above $1$. For all such $r$, $f_l \approx 1$, which explains the increase in probability just at the end.

My conclusions hold for any prior that puts non-negligible probability on life being common, so the details don’t actually matter that much. This is discussed in Appendix C.
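The Monte Carlo procedure described above can be sketched as follows. The only parameter taken from the text is the 50 orders of magnitude of spread in the number of times life arises per planet. Everything else is a placeholder: the median of one origin of life per planet is the Sandberg et al. value quoted above (the post itself uses a lower one), and the log-uniform ranges for the other two fractions are hypothetical. The relation between the life fraction and the number of origins, $1 - e^{-r}$, is assumed to follow Sandberg et al. (2018).

```python
import math
import random

SIGMA_LOG10_R = 50.0             # from the text: 50 orders of magnitude
MEDIAN_LOG10_R = 0.0             # placeholder: Sandberg et al.'s median, not the post's
LOG10_F_I_RANGE = (-3.0, 0.0)    # hypothetical log-uniform range for f_i
LOG10_F_S_RANGE = (-4.0, 0.0)    # hypothetical log-uniform range for f_s

def log10_f_l(log10_r):
    """log10 of f_l = 1 - exp(-r), computed without overflow or underflow."""
    if log10_r > 2.0:        # r > 100: f_l equals 1 to machine precision
        return 0.0
    if log10_r < -15.0:      # tiny r: 1 - exp(-r) is r to machine precision
        return log10_r
    return math.log10(-math.expm1(-(10.0 ** log10_r)))

def sample_log10_f(rng):
    """Draw one sample of log10(f), with f = f_l * f_i * f_s."""
    log10_r = rng.gauss(MEDIAN_LOG10_R, SIGMA_LOG10_R)
    return (log10_f_l(log10_r)
            + rng.uniform(*LOG10_F_I_RANGE)
            + rng.uniform(*LOG10_F_S_RANGE))

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_log10_f(rng) for _ in range(20_000)]
share_common = sum(s > -7.0 for s in samples) / len(samples)
print(f"share of samples with f above 1e-7: {share_common:.2f}")
```

Working in log space is a design choice forced by the width of the distribution: values of $r$ hundreds of orders of magnitude below 1 cannot be represented as ordinary floating-point numbers.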

Updating on the Fermi observation

In this analysis, the Fermi observation is the observation that no alien civilisation has reached our galaxy yet. I will take this observation at face value, and neglect the possibility that alien civilisations are here but remain undetectable (known as the Zoo hypothesis).

In order to understand how strong this update is, we must understand how many planets there are that could have yielded intergalactic civilisations close enough to reach us, and yet didn’t. To get this number, we need to know how many civilisations could have appeared in a given year, given information about the planet formation rate during all past years. Thus, we need an estimate of the time it takes for a civilisation to appear on a planet after it has been formed, on the planets where civilisations emerge. I will assume that this time is distributed as a normal distribution with mean 4.55 billion years (since this is the time it took for our civilisation to appear) and standard deviation 1 billion years, truncated (and renormalised) so that there is 0 % chance of appearing in either less than 2 billion years or more than 8 billion years.[9] Thus, the number of planets per volume per year from which civilisations could arise at time $t$ is

$$n(t) = \int_0^{t} p(t')\, \mathcal{N}_{t'}(t)\, dt'$$

where $p(t')$ is the planet formation rate per volume per year at time $t'$ and $\mathcal{N}_{t'}(t)$ is the probability density at $t$ of a normal distribution described as above, centered around $t' + 4.55 \cdot 10^9$ years.
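The delay distribution and the resulting appearance rate can be sketched in a few lines. The mean, standard deviation and truncation bounds are the ones stated above, in billions of years. The planet formation rate is passed in as an arbitrary function, since the model behind figure 2 is not reproduced here; the function names and the constant test rate are hypothetical.

```python
import math

MEAN, SD = 4.55, 1.0      # Gyr, from the text
LOW, HIGH = 2.0, 8.0      # truncation bounds in Gyr, from the text

def _normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

# Probability mass of the untruncated normal inside [LOW, HIGH], used to renormalise.
_MASS = _normal_cdf((HIGH - MEAN) / SD) - _normal_cdf((LOW - MEAN) / SD)

def delay_pdf(tau):
    """Density of the time (Gyr) between planet formation and civilisation."""
    if tau < LOW or tau > HIGH:
        return 0.0
    z = (tau - MEAN) / SD
    return math.exp(-0.5 * z * z) / (SD * math.sqrt(2.0 * math.pi) * _MASS)

def civilisation_rate(t, planet_rate, steps=600):
    """Planets per volume per year that could yield a civilisation at time t.

    Convolves planet_rate (a function of time in Gyr) with the delay density,
    integrating over the delay with the midpoint rule.
    """
    upper = min(HIGH, t)
    if upper <= LOW:
        return 0.0
    width = (upper - LOW) / steps
    total = 0.0
    for i in range(steps):
        tau = LOW + (i + 0.5) * width
        total += planet_rate(t - tau) * delay_pdf(tau)
    return total * width

constant = lambda t: 1.0  # hypothetical flat planet formation rate
print(civilisation_rate(1.0, constant))
print(round(civilisation_rate(5.0, constant), 3))
print(round(civilisation_rate(10.0, constant), 3))
```

With a flat formation rate the output simply traces the cumulative delay distribution, which is a convenient sanity check before plugging in a realistic rate.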

Using $n(t)$ as the number of possible civilisations appearing per volume per year, all we need is an estimate of the volume of space, $V(t)$, from which civilisations could have reached the Milky Way if they left their own galaxy at time $t$. A probe leaving at time $t$ can reach a distance of $d(t, 13.8 \cdot 10^9)$ before the present time, $13.8 \cdot 10^9$ years after the Big Bang, with $d$ defined as in Speed of travel. Probes sent out at time $t$ could therefore have reached us from any point in a sphere with volume

$$V(t) = \frac{4\pi}{3}\, d(t, 13.8 \cdot 10^9)^3$$

Using $n(t)$ and $V(t)$, the expected number of planets from which intergalactic civilisations could have reached us, if they appeared, is

$$N = \int_0^{13.8 \cdot 10^9} n(t)\, V(t)\, dt$$

What does this tell us about the probability that such a civilisation appears on such a planet? Let $P(f)$ denote the probability that $f$ is the fraction of planets that yield intergalactic civilisations, and $O$ denote the observation that none of $N$ planets developed an intergalactic civilisation. Bayes' theorem tells us that:

$$P(f \mid O) = \frac{P(O \mid f)\, P(f)}{P(O)}$$

$P(f)$ is the prior probability distribution described in the above segment, and we can divide by $P(O)$ by normalising all values of $P(f \mid O)$. Thus, the only new information we need is $P(O \mid f)$, i.e., the probability that none of the $N$ planets would have yielded an intergalactic civilisation given that an expected fraction $f$ of such planets yield such civilisations. Since each of the $N$ planets has a $1 - f$ chance to not yield an intergalactic civilisation, the probability that none of them does is $(1 - f)^N$.[10] In this case, this means that $P(O \mid f) = (1 - f)^N$, with $N$ as calculated above. Updating the prior distribution according to this, and normalising, gives the posterior distribution in figure 5.

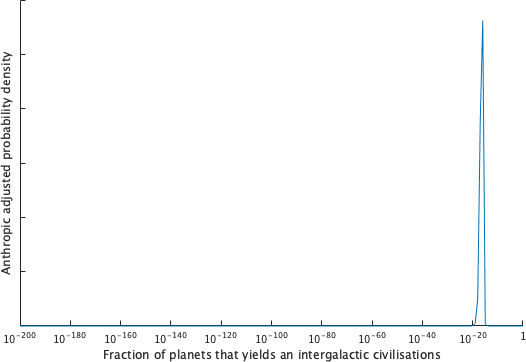

Figure 5: Probability distribution over the fraction of planets from which an intergalactic civilisations will emerge, after updating on the Fermi observation. The probability density measures the probability per order of magnitude.

The posterior assigns close to 0 probability to any fraction larger than , since it’s very unlikely that no civilisation would have reached us if that was the case. Any fraction smaller than is mostly unaffected, however, since us being alone is the most likely outcome under all of them. In the next section, I will use “posterior” to refer to this new distribution.
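The sharp cut-off described above follows directly from the form of the likelihood: if a number of planets could each independently have produced a civilisation that reached us, the chance that none did falls off steeply once the per-planet fraction exceeds one over that number. The sketch below shows this with a made-up planet count; the real value comes from the integral over planet formation and reachable volume and is not reproduced here.

```python
import math

def log_fermi_likelihood(fraction, n_planets):
    """Log-probability that none of n_planets yielded an intergalactic
    civilisation, if each does so independently with probability `fraction`.
    log1p keeps precision when the fraction is tiny."""
    return n_planets * math.log1p(-fraction)

N_PLANETS = 1e15  # hypothetical count, for illustration only

for multiple in (0.01, 1.0, 100.0):
    fraction = multiple / N_PLANETS
    likelihood = math.exp(log_fermi_likelihood(fraction, N_PLANETS))
    print(f"f = {multiple:>6} / N  ->  P(empty sky) = {likelihood:.3g}")
```

Fractions well below one over the planet count are left almost untouched, while fractions a hundred times larger are suppressed by dozens of orders of magnitude, which is the shape seen in the posterior.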

Updating on anthropics

As stated above, I will be using anthropic theories that weigh hypotheses by the number of copies of us that exist. This isn’t uncontroversial. If we consider a part of the Universe that’s very large, but finite,[11] then some copy of us is likely to exist regardless of how improbable life is (as long as it’s not impossible). The question, then, is how we should reason about the fact that we exist. I’d say that the three most popular views on how to think about this are:

- The self-sampling assumption[12] (SSA): We should reason as if we are a randomly selected observer from all actually existing observers in our reference class, and only update our beliefs on the fact that at least one copy in that reference class exists.[13] Since at least one copy of us would exist regardless of how uncommon civilisations are, the fact that we exist doesn’t provide us with any evidence. Thus, we should stick to our priors.

- The self-indication assumption[14] (SIA): We should reason as if we are a randomly selected observer from all possible observers across all imaginable worlds, weighted by their probabilities. Probabilities are updated by multiplying the prior probability of being in each world with the number of copies of us in each such world, and then normalising. Thus, we are more likely to be in a world where life is common, since life being common implies that more copies of us arise across the multiverse.

- Anthropic Decision Theory[15] (ADT): Epistemically, we should only update on the fact that at least one copy of us exists, as SSA does. However, we should take into account that any action that we perform will be performed by all of our copies as well; since there is no way that our copies will do anything differently than we do, we’re effectively making a decision for all of our copies at once. This means that we should care proportionally more about making the right decisions in the case where there are more copies of us, if we accept some form of total consequentialism. For each possible world, the expected impact that we will have is the prior probability of that world multiplied with the number of copies in that world.[16] Despite their differences, SIA and ADT will always agree on the relative value of actions and make the same decisions,[17] given total consequentialism (Armstrong, 2017).

Personally, I think that ADT is correct, and I will assume total consequentialism in this post.[18] However, since SIA and ADT both multiply the prior probability of each possible world with the number of copies in that world, they will always agree on what action to take. The fact that SIA normalises all numbers and treats them as probabilities is irrelevant, since all we’re interested in is the relative importance of actions.

So how does anthropics apply to this case? Disregarding the effects from other civilisations interfering, we should expect the number of copies of us to be proportional to the probability that we in particular would arise from a given Earth-like planet. This is true as long as we hold the number of planets constant, and consider a very large universe. We can disregard the effects from other civilisations since any copy of us would experience an empty universe as well, which we’ve already accounted for in the above section.

The question, then, is how the sought quantities $f_l$, $f_i$ and $f_s$ relate to the probability that we in particular arise from a given planet. As detailed in Appendix A, $f_l$ and $f_i$ are proportional to the probability that our civilisation in particular appears on Earth. On the other hand, we shouldn’t expect $f_s$ to have any special relation to the probability of us existing (except that it affects the probability of the Fermi observation, which we’ve already considered). $f_s$ is concerned with what happens after this moment in time, not before, so the probability that a civilisation like ours is created should be roughly the same no matter what $f_s$ is. Assuming that the number of copies of us is exactly proportional to $f_l$ and $f_i$, and independent of $f_s$, we can call the number of copies of us in a large, finite world $k f_l f_i$, where $k$ is some constant.

Thus, the anthropic adjusted probability that some values of $f_l$, $f_i$ and $f_s$ are correct is the prior probability that they’re correct multiplied with $k f_l f_i$. If we use SIA, $k$ disappears after normalising, since we’re just multiplying the probability of every event with the same constant. If we use the decision theoretic approach, we’re only interested in the relative value of our actions, so $k$ doesn’t matter.
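A toy version of this reweighting may help. The sketch below takes two hypothetical worlds with equal prior probability, weights each by its number of copies of us, and normalises. The world names, priors and copy counts are invented for the example; the point is only that the proportionality constant cancels.

```python
def anthropic_weights(prior, copies):
    """Weight each world by prior probability times number of copies of us,
    then normalise (the SIA update; ADT uses the same relative weights)."""
    raw = {world: prior[world] * copies[world] for world in prior}
    total = sum(raw.values())
    return {world: weight / total for world, weight in raw.items()}

def copies_per_world(k):
    """Hypothetical copy counts: k times the chance that a planet yields us."""
    return {"life common": k * 1e-2, "life uncommon": k * 1e-4}

prior = {"life common": 0.5, "life uncommon": 0.5}

small_k = anthropic_weights(prior, copies_per_world(1.0))
large_k = anthropic_weights(prior, copies_per_world(1e6))
print(small_k)
print(large_k)
```

Both calls give the same weights, roughly 0.99 for the world where life is common, regardless of the constant used.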

Using this to update on the posterior we got from updating on the Fermi observation, we get the distribution in figure 5.

Figure 5: Anthropic adjusted probability distribution over the fraction of planets from which an intergalactic civilisation will emerge, taking into account the Fermi observation. If you endorse the self-indication assumption, the anthropic-adjusted probability density corresponds to normal probability density. If you endorse one of the decision-theoretic approaches, it measures the product of probability density and the number of times that your actions are replicated across the Universe.

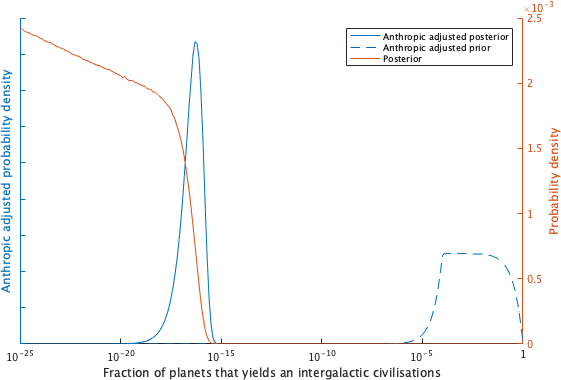

As you can see, the anthropic update is very strong, and the combination of the Fermi observation and the anthropic update yields a relatively small range for non-negligible values of $f$. A more zoomed-in version shows what value of $f$ should be expected. To see the interaction between the Fermi observation and the anthropic update, the original posterior and the anthropic-adjusted prior are also depicted in figure 6.

Figure 6: The red line depicts the probability distribution over the fraction of planets from which an intergalactic civilisation will emerge, after updating on the Fermi observation, zoomed in on the smaller interval. The solid blue line depicts the same distribution after adjusting for anthropics. The dashed blue line depicts the anthropic adjusted prior distribution, without taking into account the Fermi observation.

Adjusting for anthropics strongly selects for large values of and , which would imply that almost all planets develop civilisation. Therefore, the anthropic-adjusted prior probability that a given planet yields an intergalactic civilisation (the dashed blue line in figure 6) is very similar to the probability that an existing civilisation becomes intergalactic: . Thus, the distribution looks very similar to the log-uniform distribution of . However, for values of smaller than , either or has to be smaller than . Since the anthropic adjustment is made by multiplying with and , this means that the anthropic-adjusted probability declines proportionally below . This looks like an exponential decline in figure 6, since the x-axis is logarithmic.

Updating the prior on the Fermi observation without taking anthropics into account yields the posterior (the red line in figure 6) that strongly penalises any hypothesis that would make intergalactic civilisations common. The update from the Fermi observation is proportional to . Since is very large, and the anthropic update is proportional to , the update from the Fermi observation is significantly stronger than the anthropic update for moderately large values of . Thus, updating the anthropic adjusted distribution on the Fermi observation yields the blue line in figure 6.
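The interplay between the two updates can be illustrated with a one-dimensional toy model. This is a rough sketch under loudly stated assumptions, not the calculation behind figure 6: it assumes a log-uniform prior over the fraction f, an anthropic weight that grows in proportion to f up to a hypothetical `THRESHOLD`, and a Fermi likelihood of (1 - f)^N for a hypothetical number `N_PLANETS` of planets that could have reached us. All names and numbers are placeholders.

```python
import numpy as np

def log_adjusted_density(log10_f, n_planets, threshold):
    """Log of the unnormalised density per unit log10(f) in the toy model."""
    f = 10.0 ** np.asarray(log10_f, dtype=float)
    # Anthropic weight: proportional to f below the threshold, flat above it.
    log_anthropic = np.log(np.minimum(f, threshold))
    # Fermi observation: none of the n_planets planets produced a visible civilisation.
    log_fermi = n_planets * np.log1p(-f)
    # The log-uniform prior is flat in log10(f), so it adds only a constant.
    return log_anthropic + log_fermi

N_PLANETS = 1e15   # hypothetical placeholder, not a figure from this post
THRESHOLD = 1e-3   # hypothetical placeholder, not a figure from this post

grid = np.linspace(-25.0, -5.0, 20001)
peak_log10_f = grid[np.argmax(log_adjusted_density(grid, N_PLANETS, THRESHOLD))]
print(peak_log10_f)
```

In this toy model the density peaks where f is about one over the number of planets: the anthropic weight pushes f upwards until the Fermi likelihood, which falls off much faster, takes over.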

The median of that distribution is that each planet has a probability of yielding an intergalactic civilisation; the peak is around .

Simulating civilisations’ expansion

Insofar as we trust these numbers, they give us a lot of information about whether intergalactic civilisations are likely to arise from the planets that we haven’t directly observed. This includes both planets that will be created in the future and planets so far away that any potential civilisations haven’t been able to reach us yet. In order to find out what this implies for our future, I run a simulation of how civilisations spread across the Universe.

To do this, I consider all galaxies close enough that a probe sent from that galaxy could someday encounter a probe sent from Earth. Probes sent from Earth today could reach galaxies that are presently about light-years away; since civilisations that start colonisation earlier would be able to reach farther, I consider all galaxies less than light-years away.

I then run the simulation from the Big Bang to about 60 billion years after the Big Bang, at which point planet formation is negligible (about as many planets per year as now). For every point in time, there is some probability that a civilisation arises from among a group of galaxies, calculated from and the present planet formation rate. If one does, it spreads outwards at 80 % of the speed of light, colonising each galaxy that it passes. The details of how this is simulated are described in Appendix B.

By the end of the simulation, each group of galaxies either has a time at which they were first colonised, or they are still empty. We can then compare this to the time at which Earth-originating probes would have reached the galaxies, if they were to leave now. Assuming that Earth claims every point which it reaches before other civilisations, we get an estimate of the amount of space that Earth would get for a certain . Additionally, we learn what fraction of that space would be colonised by other civilisations in our absence, and what fraction would have remained empty.

For , the median from the previous section, Earth-originating probes arrive first at only 0.5 % of the space that they’re able to reach. Other civilisations eventually arrive at almost all of that space; probes from Earth don’t lead to any extra space being colonised.

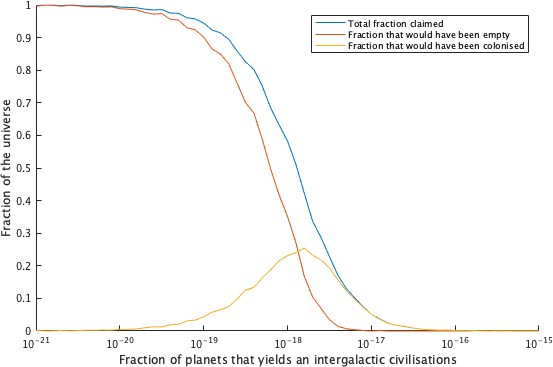

However, we can do better than using point estimates for . Figure 7 is a graph of the fraction of the reachable universe that Earth-originating probes get as a function of .

Figure 7: The red line depicts the expected fraction of the reachable universe that will only be reached by Earth-originating intelligence. The yellow line depicts the expected fraction of the reachable universe that Earth-originating intelligence reaches some time before other civilisations arrive. The blue line is the sum of these: it depicts the total expected fraction of the reachable universe that Earth-originating intelligence will find empty when it arrives.

As you can see, Earth-originating probes get a significantly larger share of the Universe for smaller . The anthropic-adjusted probability mass on those estimates isn’t negligible, so they must be taken into account. Summing over the probability and the fraction of the Universe that we get for different values of , Earth-originating intelligence gets 5 % of the reachable universe on average. 64 % of this is space that would have otherwise been occupied by alien civilisations, while 36 % is space that would have remained empty in our absence.

These numbers denote the expected values for a random copy of Earth chosen from among all possible copies of Earth weighted by prior probability. Using SIA, this is equivalent to the expected value of what will happen on our Earth. Using ADT, this is the expected value weighted by the number of copies that our decisions affect.

Appendix C describes how these numbers vary for different choices of parameters. The results are surprisingly robust. For the scenarios I consider, the average fraction of space that would be occupied by other civilisations in our absence varies from roughly 50 % to roughly 80 %.

Implications

These results affect the value of colonising the Universe in a number of ways.

Most obviously, the fact that humans won’t get to colonise all the galaxies in reach diminishes the size of our future. This effect is mostly negligible. According to these assumptions, we will get about 5 % of the reachable universe in expectation. Since uncertainty about the size of the far future spans tens of orders of magnitude, a factor of 20 isn’t really relevant for cause prioritisation today.

The effect that our space colonisation might have on other civilisations is more relevant.

Displacing other civilisations

Us claiming space will lead to other civilisations getting less space. If is the fraction of space that we are likely to take from other civilisations, rather than take from empty space, one can express the expected value of the far future as , where is the total volume of space that we are likely to get, is the probability that Earthly life will survive long enough to get it, is the value of Earth-originating intelligence acquiring one unit of volume and is the value of an alien civilisation acquiring one unit of volume.[19] For the reasons mentioned above, I doubt that us displacing aliens would change anyone's mind about the value of focusing on the long term. However, it might play a small role in determining where long-termists should allocate their efforts.

In a simple model, most long-termist causes focus on either increasing , the probability that Earth-originating intelligence survives long enough to colonise space, or on increasing , the value of space colonisation. Some examples of the former are work against risks from biotechnology and nuclear war. Some examples of the latter are AI alignment (since unaligned AI is also likely to colonise space) and spreading good values. The value of increasing is unaffected by the fact that we will displace other civilisations, since increasing by one unit yields value, which is independent of . However, increasing generates value, which decreases as increases.

How much one should value Earth-originating and alien civilisations is very unclear. If you accept moral anti-realism, one reason to expect aliens to be less valuable than Earth-originating civilisations is that humans are more likely to share your values, since you are a human. However, there might be some convergence among goals, so it’s unclear how strong this effect is.

Consider the case where , i.e., both Earth-originating and alien civilisations are net-positive, but Earth-originating civilisations are better. The extremes are at , where we don’t care at all that we’re displacing other civilisations, and , where we value all civilisations equally, in expectation. With the estimate of , work to reduce extinction is 36 % as good if as it is if . If is in the middle, say at , reducing the risk of extinction is about 70 % as valuable as otherwise.

Now consider the case where . If you believe that alien civilisations are more likely to create harm than good, the value of increasing is greater than it would be otherwise. If you think that alien civilisations cause about as much harm as humans create good, for example, then increasing is 1.64 times as good as it would have been otherwise.

A more interesting case is where you believe that and , either because you’re pessimistic about the future or because you hold values that prioritise the reduction of suffering. If you also believe that , the fact that we’re displacing aliens could make space colonisation net-positive, if aliens colonising space is more than 1.4 times as bad as Earth-originating intelligence colonising space (and if you are confident in the assumptions and accuracy of these results). Whether this is likely to be the case is discussed by Jan Brauner and Friederike Grosse-Holz in section 2.1 of this article and by Brian Tomasik here.
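The ratios in this subsection follow from simple arithmetic, sketched below. The sketch assumes that the value of raising the survival probability is proportional to (value of Earth-originating colonisation) minus (displaced fraction) times (value of alien colonisation), which matches the verbal description above; the function and variable names are my own labels, not notation from the post.

```python
def survival_value_ratio(displaced_fraction, alien_to_earth_value):
    """Value of raising our survival probability, relative to the case where
    displaced alien civilisations are worth nothing.

    alien_to_earth_value is the value of alien colonisation per unit volume
    divided by the value of Earth-originating colonisation per unit volume.
    """
    return 1.0 - displaced_fraction * alien_to_earth_value

def break_even_badness_ratio(displaced_fraction):
    """If both kinds of colonisation are negative, colonisation by us is
    net-positive when aliens are more than this many times as bad."""
    return 1.0 / displaced_fraction

F = 0.64  # share of our space that aliens would otherwise have taken (from the text)

print(survival_value_ratio(F, 1.0))    # aliens exactly as valuable as us
print(survival_value_ratio(F, 0.5))    # aliens half as valuable as us
print(survival_value_ratio(F, -1.0))   # aliens as harmful as we are good
print(break_even_badness_ratio(F))     # using the 64 % estimate
print(break_even_badness_ratio(0.7))   # using the rounded 70 % figure
```

With the 64 % estimate the first three ratios come out at 0.36, 0.68 and 1.64. The break-even ratio is one over the displaced fraction, so it is sensitive to which estimate is used: about 1.56 for 64 % and about 1.43 for 70 %.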

Cooperation or conflict

If we loosen the assumption that whoever gets to a galaxy first gets to keep it, we can see that there are possibilities for conflict, which seems very bad, and cooperation, which seems valuable.

It’s unclear exactly how likely conflict would be on intergalactic scales, in the cases where different civilisations encounter each other. To a large degree, this rests on whether defense or offense is likely to dominate on an intergalactic scale. Phil Torres seems to think that most civilisations will want to attack their neighbours just to make sure that their neighbours don’t attack them first, while Anders Sandberg suggests that future technology might enable a perfect scorched earth strategy, removing any incentive to attack. Furthermore, there is a question of how bad conflict would be. There are multiple ways in which conflicts could plausibly waste resources or lead to suffering, but I won't list them all here.

The positive version of this would be to trade and cooperate with neighbouring civilisations, instead. This could take the form of trading various resources with each other, for mutual gain, though this increase in resources seems unlikely to dominate the size of our future. Another case is where neighbouring civilisations are doing something that we don’t want them to do. If, for example, another civilisation is experimenting on various minds with no regard for their suffering, there might be a mutually beneficial deal where we pay them to use the future equivalent of anaesthesia.

So how much space would these considerations affect? Here, considerations of how exactly an extra civilisation might impact the intergalactic gameboard get quite complicated. Of the 5 % of reachable space that Earth-originating intelligence would be able to claim, all of the 64 % that we get to before other civilisations will be space that the other civilisations might want to fight about when they eventually get there, so such considerations might be quite important. Similarly, the 95 % of space that Earth-originating intelligence won’t arrive at until after other civilisations have claimed it is space that the future rulers of Earth might decide to fight about, or space that the future rulers of Earth might benefit from trading with.

If you believe that conflict is likely to create large disvalue regardless of whether the fight is between Earth-originating civilisations and alien civilisations, or between alien civilisations, one potentially interesting metric is the amount of space that both we and others will eventually arrive at, that would otherwise only ever have been reached by one civilisation. Under the base assumptions, about 1.7 % of all the space that we would be able to reach is space that would only have been reached by one civilisation, if Earth hadn’t existed. This is roughly 36 % as large as all of the space that we'll get to first (which is about 4.7 % of the reachable universe).

Further research

These results also suggest that a few other lines of research might be more valuable than expected.

One potentially interesting question is how other explanations of the Fermi paradox change the results of these calculations, and to what extent they’re affected by anthropic updates. In general, most explanations of the Fermi paradox either don’t allow a large number of civilisations like us to exist (e.g. a berserker civilisation kills all nascent life), which would imply a weak anthropic update, or don’t give us particularly large power over the reachable universe (e.g. the Zoo hypothesis). As a result, such hypotheses wouldn’t have a particularly large effect on the expected impact we’ll have, as long as their prior probabilities are low.

However, there are some exceptions. Hypotheses which posit that early civilisations are very unlikely, while civilisations emerging around now are likely, would systematically allow a larger fraction of the Universe to go to civilisations like ours. An example of a hypothesis like this is neocatastrophism, which asserts that life has been hindered by gamma ray bursts up until now. As gamma ray bursts get less common when the star formation rate declines, neocatastrophism could account for the lack of life in the past while allowing for a large number of civilisations appearing around now. I haven’t considered this explicitly here (although variations with later life-formation touch on it) since it seems unlikely that a shift from life being very unlikely to life being likely could happen particularly fast across galaxies: there would be large variation in gamma ray bursts between different types of galaxies. However, I might be wrong about the prior implausibility, and the anthropic update seems like it might be quite large.[20] In any case, there may be similar explanations of the Fermi paradox which would be more probable on priors, while receiving a similarly strong anthropic update. There are also some explanations that don’t neatly fit into this framework: I discuss the implications of the simulation hypothesis in Appendix D.

It might also be more important than expected to figure out what might happen if we encounter other civilisations. Specifically, research could target how valuable other civilisations are likely to be when compared with Earth-originating civilisations; whether interactions with such civilisations are likely to be positive or negative; and whether there is something we should do to prepare for such encounters today.[21]

There are also a few large uncertainties remaining in this analysis. One big uncertainty concerns how anthropics interacts with infinities. Additionally, all of this analysis assumes total consequentialism without diminishing marginal returns to resources, and I’m unsure how much of it applies to other, more complicated theories.

Acknowledgments

I’m grateful to Max Daniel and Hjalmar Wijk for comments on the final draft. Thanks also to Max Dalton, Parker Whitfill, Aidan Goth and Catherine Scanlon for discussion and comments on earlier versions, and to Anders Sandberg and Stuart Armstrong for happily answering questions and sharing research with me.

Many of these ideas were first mentioned by Brian Tomasik in the final section of Ranking Explanations of the Fermi Paradox, which served as a major source of inspiration.

Most of this project was done during a research internship at the Centre for Effective Altruism. Views expressed are entirely my own.

References

Armstrong, S. (2017). Anthropic Decision Theory. arXiv preprint arXiv:1110.6437.

Armstrong, S., & Sandberg, A. (2013). Eternity in 6 hours: intergalactic spreading of intelligent life and sharpening the Fermi paradox. Acta Astronautica, 89, 1-13.

Behroozi, P., & Peeples, M. S. (2015). On the history and future of cosmic planet formation. Monthly Notices of the Royal Astronomical Society, 454(2), 1811-1817.

Bostrom, N. (2011). Infinite Ethics. Analysis & Metaphysics, 10.

Lineweaver, C. H. (2001). An estimate of the age distribution of terrestrial planets in the universe: quantifying metallicity as a selection effect. Icarus, 151(2), 307-313.

Madau, P., & Dickinson, M. (2014). Cosmic star-formation history. Annual Review of Astronomy and Astrophysics, 52, 415-486.

Sandberg, A. (2018). Space races: settling the universe fast. Technical Report #2018-01. Future of Humanity Institute. University of Oxford.

Sandberg, A., Drexler, E., & Ord, T. (2018). Dissolving the Fermi Paradox. arXiv preprint arXiv:1806.02404.

Appendix A: How and relate to our existence

In this appendix, I discuss whether it’s justified to directly update and when updating on our existence. denotes the probability that life appears on an Earth-like planet, and denotes the probability that such life eventually becomes intelligent and develops civilisation.

In order to directly update and on our existence, the probability of us appearing needs to be k times as high if either or is k times as high, for all relevant values of and . If this is the case, then there should be k times as many copies of us in worlds where or is k times as high, for all values of and . Because of this, we can multiply with the number of planets to get the expected number of copies of us on those planets, up to a constant factor. We can then use this value to perform the anthropic update, as described in Updating on anthropics.

This does not mean that the probability of us existing needs to be proportional to , in every possible world. Every value of and includes a large number of possible worlds: some of those worlds will have copies of us on a larger fraction of planets, while some will have copies of us on a smaller fraction of planets. All we need is that for every value of and , the weighted average probability of our existence across all possible worlds is proportional to . The probability that we weigh the different worlds by is the prior probability distribution, i.e., the probability distribution that we have before updating on the fact that we exist.

Before taking into account that we exist, it doesn’t seem like our particular path to civilisation is in any way special. If is k times as high, the average path to civilisation must be k times as likely. If we have no reason to believe that our path to civilisation is different from the average, then our particular path to life should also be k times as likely. For every value of and , is therefore proportional to the expected number of copies of us that exist (as long as we sum over all worlds with those particular values of and ). Thus, we can directly update and when updating on our existence.

Note that this isn’t the only conclusion we can draw from our existence. The fact that life emerged through RNA is good evidence that life is likely to emerge through RNA on other planets as well, and the fact that we’ve had a lot of wars is some evidence that other intelligent species are also likely to engage in war, etc.[22] By contrast, our existence is weak or no evidence for life being able to appear through, for example, a silicon base instead of a carbon base. When we update towards life being more common, most of the probability mass comes from worlds where life is likely to emerge in broadly similar ways to how it emerged here on Earth. This is a reason to expect that we’ll encounter civilisations that arose in a similar fashion to how we arose, but it doesn’t change how the basic updating of and works.

In general, the mechanism of the anthropic update isn’t that weird, so it shouldn’t give particularly counter-intuitive conclusions in mundane cases.[23] With the self-indication assumption, updating on our existence on Earth is exactly equivalent to noticing that life appeared on a specific planet, and updating on that. In that case, we’d conclude that it must be relatively common for life to appear in roughly the way that it did, so that is exactly what the anthropic update should make us believe. As always, Anthropic Decision Theory gets the same answer as the self-indication assumption: the likely worlds that contain the most copies of us are going to be the worlds in which it’s relatively common for life to appear in roughly the way that it did here.

Appendix B: Details about the simulation

The simulation simulates the space inside a sphere with radius light years, which contains roughly 400 billion galaxies. Keeping track of that many galaxies is computationally intractable. Thus, I split the space into a much smaller number of points: about 100 000 points randomly distributed in space.

I then run the simulation from the Big Bang to about 60 billion years after the Big Bang. For every time interval , centered around , each point has a probability of generating an intergalactic civilisation equal to

where is the fraction of planets that yields intergalactic civilisations and is the volume that each point represents (equal to the total volume divided by the number of points). is the number of planets per volume per year from which civilisations could arise at time , as described in Updating on the Fermi observation.

Any point which yields an intergalactic civilisation at time is deemed to be colonised at time . For every other point close enough to to be reachable, I then calculate the time at which a probe leaving at reaches . If is earlier than the earliest known time at which becomes colonised, is stored as the time at which becomes colonised. One exception to this is if the civilisation at p would reach Earth before today (13.8 billion years after the Big Bang): since we have already conditioned on this not happening (in Updating on the Fermi observation), the simulation is allowed to proceed under the assumption that p didn’t generate a civilisation at time t.

This process is then repeated for , for every point that isn’t already colonised at or earlier. The simulation continues like this until the end, when every point either has a first time at which it was colonised, or remains empty. These times can then be compared with the times at which Earth-originating probes would reach the various points, to calculate the variables that we care about.
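The procedure described above can be sketched roughly as follows. This is a simplified illustration rather than the actual simulation code: it assumes a static Euclidean space (no cosmic expansion), a constant travel speed, times in billions of years and distances in billions of light-years, and it leaves out the exception for civilisations that would already have reached Earth. The function names, parameters and toy inputs are hypothetical.

```python
import numpy as np

def simulate(points, f, planet_rate, volume_per_point, t_end, dt, speed, rng):
    """Return the first colonisation time of every point (np.inf if never reached).

    points: (n, 3) array of positions; planet_rate(t): planets per volume per
    unit time from which civilisations could arise at time t.
    """
    n = len(points)
    colonised_at = np.full(n, np.inf)
    for t in np.arange(dt / 2, t_end, dt):
        # Probability that one point yields a civilisation in this time interval.
        p_emerge = min(1.0, f * volume_per_point * planet_rate(t) * dt)
        still_empty = colonised_at > t
        born = still_empty & (rng.random(n) < p_emerge)
        for i in np.flatnonzero(born):
            # Probes leave point i at time t and reach other points later.
            arrival = t + np.linalg.norm(points - points[i], axis=1) / speed
            colonised_at = np.minimum(colonised_at, arrival)
    colonised_at[colonised_at > t_end] = np.inf  # not reached before the end
    return colonised_at

def earth_share(points, colonised_at, earth, t_now, speed):
    """Fraction of points that probes leaving Earth at t_now reach first."""
    earth_arrival = t_now + np.linalg.norm(points - earth, axis=1) / speed
    return float(np.mean(earth_arrival < colonised_at))

# Toy usage with hypothetical numbers.
rng = np.random.default_rng(0)
toy_points = rng.uniform(-10.0, 10.0, size=(200, 3))
constant_rate = lambda t: 1.0
empty_run = simulate(toy_points, 0.0, constant_rate, 1.0, 5.0, 1.0, 0.8, rng)
crowded_run = simulate(toy_points, 1e9, constant_rate, 1.0, 5.0, 1.0, 0.8, rng)
print(earth_share(toy_points, empty_run, np.zeros(3), 0.0, 0.8))
```

Tracking only the earliest arrival time per point is what keeps the bookkeeping cheap: a later civilisation can only change a point's entry if its probes get there sooner.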

For most variations mentioned in Appendix C, I’ve run this simulation 100 times for each value of , and taken the average of the interesting variables. I’ve used values of from to , with 0.4 between the logarithms of adjacent values of . To get the stated results, I take the sum of the results at each , weighted by the normalised anthropic adjusted posterior. For the base case, I’ve run 500 simulations for each , and used a distance of 0.1 between the logarithms of the values of .
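The final weighting step can be sketched as below, assuming the grid of values is equally spaced in log space (as described above), so that the grid spacing cancels when the posterior is normalised. The arrays are made-up placeholders that only show the mechanics; they are not results from the simulation.

```python
import numpy as np

def posterior_weighted_average(posterior_density, simulated_values):
    """Average simulation outputs over a log-spaced grid, weighted by the
    (unnormalised) anthropic-adjusted posterior density at each grid point."""
    weights = np.asarray(posterior_density, dtype=float)
    weights = weights / weights.sum()
    return float(np.dot(weights, np.asarray(simulated_values, dtype=float)))

# Hypothetical placeholder inputs, one entry per grid value.
placeholder_density = np.array([0.1, 1.0, 3.0, 1.0, 0.1])
placeholder_share = np.array([0.90, 0.40, 0.05, 0.01, 0.00])
print(posterior_weighted_average(placeholder_density, placeholder_share))
```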

Appendix C: Variations

This appendix discusses the effects of varying a number of different parameters, to assess robustness. Some parts might be difficult to understand if you haven’t read all sections up to and including Simulating civilisations’ expansion.

Speed of travel

Under the assumption of continuous reacceleration, the initial velocity doesn’t change the final results. This is because the initial velocity both affects the fraction of planets that yield intergalactic civilisations, and affects the number of planets that are reachable by Earth. A 10 times greater velocity means that the volume from which planets could have reached us is times greater. If the prior over is similar between to , this means that the estimate of will be times lower. During the simulation, the volume reachable by Earth will be times greater, and at any point, the number of planets that could have reached any given point will be times greater. This exactly cancels out the difference in , yielding exactly the same estimate of the fraction of the Universe that probes from Earth can get.
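The cancellation can be spelled out with a back-of-the-envelope sketch. It assumes that the reachable volume grows with the cube of the travel speed and that the estimated fraction is inversely proportional to the number of planets that could have reached us; both are simplifications I am assuming for illustration, and the function names are hypothetical.

```python
def relative_planets_in_reach(speed_factor):
    """Planets within reach scale with volume, i.e. with the cube of the speed."""
    return speed_factor ** 3

def relative_fraction_estimate(speed_factor):
    """The Fermi observation pushes the estimated fraction down in proportion
    to the number of planets that could have reached us."""
    return 1.0 / relative_planets_in_reach(speed_factor)

def relative_civilisations_in_reach(speed_factor):
    """Expected number of civilisations able to reach a given point, relative
    to the baseline speed."""
    return relative_planets_in_reach(speed_factor) * relative_fraction_estimate(speed_factor)

print(relative_planets_in_reach(10.0))
print(relative_fraction_estimate(10.0))
print(relative_civilisations_in_reach(10.0))
```

Under these assumptions, a tenfold speed increase multiplies the planets in reach by a thousand and divides the estimated fraction by a thousand, so the expected number of civilisations in reach of any point is unchanged.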

However, if we assume no reacceleration, the speed does matter.

Sufficiently high initial velocities give the same answer as continuous reacceleration. The momentum is so high that the probes never experience any noticeable deceleration, so the lack of reacceleration doesn’t matter. This situation is quite plausible. If there’s no practical limit to how high initial velocities you can use, the optimal strategy is simply to send away probes at a speed so fast that they won’t need to stop before reaching their destination.

Lower initial velocities mean substantially smaller momentum, which means that probes will slow down after a while. This affects the number of probes that will reach our part of the universe in the next few billion years substantially more than it affects the number of probes that should have been able to reach us before now, since probes slowing down becomes more noticeable during longer timespans. Thus, there will be somewhat fewer other civilisations than in other scenarios. With an initial velocity of 80 % of the speed of light, only 51 % of the space that Earth-originating intelligence gets to is space that other civilisations would eventually reach. This scenario is less plausible, since periodically reaccelerating would provide large gains with lower speeds, and I don’t know any specific reasons why it should be impossible.

Visibility of civilisations

So far, I have assumed that we wouldn’t notice other civilisations until their probes reached us. However, there is a possibility that any intergalactic civilisations would try to contact us by sending out light, or that they would otherwise do things that we would be able to see from afar. In this case, the fact that we can’t see any civilisation has stronger implications, since there are more planets from which light has reached us than there are planets from which probes could have reached us. This would make the Bayesian update stronger and yield a lower estimate of : we would control more of the reachable universe and the Universe would be less likely to be colonised without us. This effect isn’t noticeable on the results if civilisations travel at 80 % of light speed (or faster) while continuously reaccelerating. However, in this case the speed of travel matters somewhat. If civilisations could only travel at 50 % of light speed, 56 % of the space that Earth-originating civilisation gets is space where other civilisations would eventually arrive.

Priors

Since the Bayesian and the anthropic updates are so strong, any prior that assigns non-negligible probability to life appearing on to of planets will yield broadly similar results. Given today’s great uncertainty about how life and civilisations emerge, I think all reasonable priors should do this.

One thing that can affect the conclusions somewhat is the relative probabilities inside that interval. For example, a log-normal distribution with standard deviation 50 and with center around assigns greater probabilities to life being less common, in the relevant interval, while a similar distribution centered around 100 assigns greater probabilities to life being more common. This difference is very small: the median in the former case is 3, the median in the latter case is . Therefore, it doesn’t significantly affect the conclusion.

Time to develop civilisation

The time needed for a civilisation to appear after a planet has been formed matters somewhat for the results. Most theories of anthropics agree that we have one datapoint telling us that 4.55 billion years is likely to be a typical time, but we can’t deduce a distribution from one datapoint. To take two extremes, I have considered a uniform distribution between 2 billion years and 4.55 billion years, as well as a uniform distribution between 4.55 billion years and 8 billion years. In the first case, where civilisations appear early, only 60 % of the space that we can claim would have otherwise been claimed by other civilisations. In the latter case, the number is instead 73 %.

Planet formation rate

Multiplying the planet formation rate across all ages of the Universe by the same factor does not change the results. The Fermi observation implies that intergalactic civilisations can’t be too likely to arise within the volume close to us, so more planets per volume just means that a lower fraction of planets yield intergalactic civilisations, and vice versa. As long as our prior doesn’t significantly distinguish between different values of , the number of civilisations per volume of space will be held constant.

However, varying the planet formation rate at particular times in the history of the Universe can make a large difference. For example, if the number of habitable planets were likely to increase in the future, rather than decrease, the Universe would be very likely to be colonised in our absence. Similarly, if the Universe became hospitable to life much earlier than believed, we would have much stronger evidence that intergalactic civilisations are unlikely to arise on a given planet, and life might be much less common in the Universe. These effects can also act together with uncertainty about the time it takes for civilisations to emerge from habitable planets, to further vary the timing of life.

One case worth considering is if planet formation rate follows star formation rate, i.e., there is no delay from metallicity.[24] In order to consider an extreme, we can combine this with the case where civilisations take somewhere between 2 and 4.55 billion years to form. In this case, we have good evidence that intergalactic civilisations are unlikely to appear, and only 49 % of the space that Earth-originating intelligence claims is likely to be colonised in its absence.

The opposite scenario is where the planet formation rate peaks 2 billion years later than Lineweaver’s (2001) model predicts, i.e., the need for metallicity induces a delay of 4 billion years instead of 2 billion years. To consider the extreme, I have combined this with the case where civilisations take between 4.55 and 8 billion years to form. In this case, we are at the peak of civilisation formation right now, and extraterrestrial life is likely to be quite common. In expectation, 81 % of the space that Earth-originating intelligence gets to first would have been colonised in its absence.

Anthropic updates on variations

Just as anthropic considerations can affect our beliefs about the likelihood of civilisations appearing, they can affect our beliefs about which of these variations should receive more weight. Similarly, the variations imply differences in how much space Earth-originating intelligence is likely to affect, and thus how much impact we can have. Taking both of these into account, the variations that get more weight are, in general, those that lead to civilisations appearing at the same time as us (emerging 13.8 billion years after the Big Bang, on a planet that has existed for 4.55 billion years) getting a larger fraction of the Universe.[25] Most importantly, this implies that theories where planet formation happens later are favored; in the cases I’ve considered above, the hypothesis that planet formation happens later gets about 7.5 times as much weight as the base hypothesis, which gets about 6 times as much weight as the case where planet formation happens early. Whether this is a dominant consideration depends on how strong the scientific evidence is, but in general, it’s a reason to lend more credence to the case where extraterrestrial life is more common.

Appendix D: Interactions with the simulation hypothesis

The simulation hypothesis is the hypothesis that our entire civilisation exists inside a computer simulation designed by some other civilisation in the real world.[26]

For simulated civilisations, a natural explanation of the Fermi paradox is that the ones simulating us would prefer to watch what we do without others interfering. I expect most simulated civilisations to exist in simulations where space is faked, since this seems much cheaper (although just a few simulations that properly simulate a large amount of space might contain a huge number of civilisations, so this point isn’t obvious).

If we think that there’s at least a small probability that civilisations will create a very large number of simulated civilisations (e.g. a 1 % chance that 1 % of civilisations eventually create a billion simulations each), a majority of all civilisations will exist in simulations, in expectation. Since the majority of our expected copies exist in simulations, our anthropic theories imply that we should act as if we’re almost certain that we are in a simulation. However, taking into account that the copies living in the real world can have a much larger impact (since ancestor simulations can be shut down at any time, and are likely to contain fewer resources than basement reality), a majority of our impact might still come from the effects that our real-world copies have on the future (assuming total consequentialism). This argument is explored by Brian Tomasik here.
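The arithmetic behind the example can be sketched as follows. This is an illustration under stated assumptions, not part of the original analysis: it assumes each simulation contains exactly one civilisation and that nobody runs simulations outside the 1 % scenario, and the function name `simulated_share` is hypothetical. It also reads "in expectation" as a ratio of expected counts, which is the quantity that matters when copies are weighted by how many of them exist.

```python
def simulated_share(p_scenario, frac_simulating, sims_per_civ):
    """Summarise how many civilisations are simulated, in two ways.

    ASSUMPTIONS (not from the post): each simulation contains exactly one
    civilisation, and outside the scenario nobody runs simulations at all.
    """
    # Expected number of simulated civilisations per real civilisation.
    expected_sims_per_real = p_scenario * frac_simulating * sims_per_civ

    # Ratio of expected counts: expected simulated / expected total.
    ratio_of_expectations = expected_sims_per_real / (1 + expected_sims_per_real)

    # Expected value of the simulated fraction itself, for contrast:
    # with probability p_scenario almost everyone is simulated, else no one is.
    sims_if_scenario = frac_simulating * sims_per_civ
    expectation_of_ratio = p_scenario * sims_if_scenario / (1 + sims_if_scenario)

    return expected_sims_per_real, ratio_of_expectations, expectation_of_ratio


# The example from the text: a 1 % chance that 1 % of civilisations
# each create a billion simulations.
per_real, ratio, expected_fraction = simulated_share(0.01, 0.01, 1e9)
print(f"expected simulated civilisations per real one: {per_real:,.0f}")
print(f"share of expected civilisations that are simulated: {ratio:.6f}")
print(f"expected simulated fraction (for contrast): {expected_fraction:.4f}")
```

The contrast between the last two numbers is the reason the small probability still dominates: the scenario is unlikely, but it contains almost all of the expected copies.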

That line of reasoning is mostly unaffected by the analysis in this post, but there might be some interactions, depending on the details. If we think that the majority of our simulated copies are simulated by civilisations very similar to us, which also emerged around 13.8 billion years after the Big Bang, the fact that we’ll only get 5 % of the reachable universe is compensated by the fact that our simulators also only have 5 % as many resources to run simulations with, in expectation. However, if we think that most species will simulate ancestor civilisations in proportion to how common they are naturally, most of our simulations are run by civilisations who arrived early and got a larger fraction of the Universe. Thus, the fact that we only get 5 % of the reachable universe isn’t necessarily compensated by a corresponding lack of resources to simulate us. Since we’re relatively late, I’d expect us to have relatively little power compared with how frequently we appear, so this would strengthen the case for focusing on the short term. I haven’t quantified this effect.

Footnotes

[1] No anthropic theories are named in the paper, but one way to get this result would be to use the self-sampling assumption on a large, finite volume of space.

[2] Total consequentialism denotes any ethical theory which asserts that moral rightness depends only on the total net good in the consequences (as opposed to the average net good per person) (https://plato.stanford.edu/entries/consequentialism/). It also excludes bounded utility functions, and other theories which assign different value to identical civilisations because of external factors.

[3] The fraction of metals is normalised to today’s levels. For every star in the same weight-class as the Sun (calculated as 5 % of the total number of stars), the probability of forming an Earth-like planet is chosen to be 0 if the fraction of metals is less than of that of the Sun, and 1 if the fraction is more than 4 times that of the Sun. For really high amounts of metal, there is also some probability that the Earth-like planet is destroyed by the formation of a so-called hot Jupiter.

[4] Specifically, I use the differential equations from section 4.4.1. I calculate the distance (measured in comoving coordinates) travelled by a probe launched at as , where .

[5] Some authors, such as Phil Torres (https://www.sciencedirect.com/science/article/pii/S0016328717304056), have argued against the common assumption that space colonisation would decrease existential risk. However, it seems particularly unlikely that a species that has started intergalactic colonisation would go extinct all at once, since the distances involved are so great.

[6] is the quantity calculated as in Sandberg et al. (2018).

[7] If you’re a total consequentialist who only cares about presently existing people and animals, however, this isn’t true. In that case, us never leaving the Solar System doesn’t make much of a difference, but the additional number of replications would still increase your impact (although this might depend on the details of your anthropic and ethical beliefs). As a result, I think that the majority of your expected impact comes from scenarios where we’re very unlikely to leave the Solar System, either because of large extinction risks, or because space colonisation is extremely difficult. I have no idea what the practical consequences of this would be.

[8] There does, however, exist some variation in impact, and those variations can matter when we’re not confident of the right answer. For example, the case for the possibility of intergalactic travel isn’t airtight, and there may be some mechanism that makes civilisations like ours get a larger fraction of the Universe if intergalactic travel is impossible: one example would be that we seem to have emerged fairly late on a cosmological scale, and late civilisations get a larger disadvantage if intergalactic travel is possible, since the expansion of the Universe means that we can’t reach as far as earlier civilisations. On the other hand, if intergalactic travel is impossible, it’s likely to be because technological progress stopped earlier than expected, which probably means that the future contains less value and disvalue than otherwise. I haven’t tried quantifying any of these effects.

[9] This choice is quite arbitrary, but the results aren’t particularly dependent on the details of the distribution. See Appendix C for more discussion.

[10] Since is very tiny and is very large, this is approximately equal to . When running the calculations in MATLAB, results in a smaller error, so that’s the version I’ve used.

[11] As far as I know, there is no theory of anthropics that works well in infinite cases (except possibly UDASSA, which I’m not sure how to apply in practice). Since the recommendations of ADT and SIA are the same under any large finite size, the simplest thing to do seems to be to extrapolate the same conclusions to infinite cases, hoping that future philosophers will figure out whether there’s any more rigorous justification for that. This is somewhat similar to how many ethical theories don’t work in an infinite universe (Bostrom, 2011); the safest thing to do for now seems to be to act according to their finite recommendations.

[12] Known as the ‘halfer position’ when speaking about the Sleeping Beauty Problem.

[13] There is no clear answer to exactly how we should define our reference class, which is one of the problems with SSA.

[14] Known as the ‘thirder position’ when speaking about the Sleeping Beauty Problem.

[15] Anthropic Decision Theory is simply non-causal decision theories such as Evidential Decision Theory, Functional Decision Theory, and Updateless Decision Theory applied to anthropic problems. The anthropic reasoning follows directly from the premises of these theories, so if you endorse any of them, this is probably the anthropic theory you want to be using (unless you prefer UDASSA, which handles infinities better).

[16] Note that ADT (in its most extreme form) never updates on anything epistemically, if it’s in a large enough universe. If you perform an experiment, there will always be some copy in the Universe that hallucinated each possible outcome, so you can’t conclude anything from observing an outcome. However, there are more copies of you if your observation was the most common observation, so you should still act exactly like someone who updated on the experiment.

[17] Note that this is only true for anthropic dilemmas. Since ADT is a non-causal decision theory, it may recommend entirely different actions in e.g. Newcomb’s problem.

[18] If you use ADT or SIA and are an average utilitarian, you will act similarly to a total consequentialist using SSA, in this case. If you use SSA and are an average utilitarian, you should probably act as if life was extremely uncommon, since you have a much greater control over the average in that case. I have no idea what a bounded utility function would imply for this.

[19] This model assumes a linear relationship between civilisations’ resources and value; thus, it won’t work if you e.g. care more about the difference between humanity having access to 0 and 1000 galaxies than the difference between 1000 and 3000 galaxies. It’s a somewhat stronger assumption than total consequentialism, since total consequentialism only requires you to have a linear relationship between number of civilisations and value.

[20] If essentially all space went to civilisations arising in a 2 billion year period around now, I’d guess that the anthropic update in favor of that theory would be one or two orders of magnitude.

[21] While this last question might seem like the most important one, there’s a high probability that any future rulers of Earth who care about the answer to such research could do it better themselves.

[22] At the extreme, our existence is evidence for some very particular details about our planet. For example, the fact that we have a country named Egypt is some evidence that other civilisations are likely to have countries named Egypt. However, the prior improbability of civilisations having a country named Egypt outweighs this, so we don’t expect most other civilisations to be that similar to us.

[23] This has previously been asserted by Stuart Armstrong. Note that I don’t use his method of assuming a medium universe in this post, but my method should always yield the same result.

[24] From what I can gather, this view isn’t uncommon. The metallicity delays that Behroozi and Peeples (2015) consider aren’t significantly different from no delay at all.

[25] To see why, consider that twice as many civilisations appearing at the same time as us implies twice the anthropic update, and that each civilisation like us getting twice as much space implies that we will affect twice as much space. Multiplying these with each other, the amount of space that we will affect is proportional to the amount of space that civilisations appearing at the same time as us will affect.

[26] This should not be confused with the simulation that I use to get my results, which (hopefully) contains no sentient beings.

Thank you for this excellent and detailed post, I expect to use it in the future as a go-to reference for explaining this point. You might be interested in an old paper where Nick Bostrom and I went through some of this reasoning (with similar conclusions but much less explanation) in the course of discussing the implications of anthropic theories for the possible difficulty of evolving intelligence.

I am not so sure about the specific numerical estimates you give, as opposed to the ballpark being within a few orders of magnitude for SIA and ADT+total views (plus auxiliary assumptions), i.e. the vicinity of "(roughly the largest value that doesn’t make the Fermi observation too unlikely, as shown in the next two sections". But that's compatible with much or most of our expected impact on the total view coming from scenarios where we don't overlap with aliens much.

"However, varying the planet formation rate at particular times in the history of the Universe can make a large difference."

We also update our uncertainty about this sort of temporal structure to some extent from our observation of late existence. Ideally we would want to let as much as possible vary so that we don't asymmetrically immunize some parameters against update.

"For this reason I will ignore scenarios where life is extraordinarily unlikely to colonise the Universe, by making fs loguniform between 10^−4 and 1."

This seems overall too pessimistic to me as a pre-anthropic prior for colonization (~10% credence).

I definitely agree about some numbers. Maybe I should have been more explicit about this in the post, but I have low credence in the exact distribution of f (as well as fl, fi, and fs): it depends far too much on the absolute rate of planet formation and the speed at which civilisations travel.

However, I'm much more willing to believe that the average fraction of space that would be occupied by alien civilisations in our absence is somewhere between 30 % and 95 %, or so. A lot of the arbitrary assumptions that affect f cancel out when running the simulation, and the remaining parameters affect the result surprisingly little. My main (known) uncertainties are

Do you agree, or do you have other reasons to doubt the 30%-95% number?

I agree that the mean is too pessimistic. The distribution is too optimistic about the impossibility of lower numbers, though, which is what matters after the anthropic update. I mostly just wanted a distribution that illustrated the idea about the late filter without having it ruin the rest of the analysis. f has almost exactly the same distribution after updating, anyway, as long as fs assigns negligible probability to numbers below 10^−10.

Interesting! And nice to see ADT make an appearance ^_^

I want to point to where ADT+total utilitarianism diverges from SIA. Basically, SIA has no problem with extreme "Goldilocks" theories - theories that imply that only worlds almost exactly like the Earth have inhabitants. These theories are a priori unlikely (complexity penalty) but SIA is fine with them (if h1 is "only the Earth has life, but has it with certainty", while h2 is "every planet has life with 50% probability", then SIA loves h1 twice as much as h2).

ADT+total ut, however, cares about agents that reason similarly to us, even if they don't evolve in exactly the same circumstances. So h2 weights much more than h1 for that theory.

This may be relevant to further developments of the argument.
