When choosing an option from a set of options with uncertain utility, an optimizer will tend to overestimate the utility of the option they choose. Estimation error can make an option seem better (or worse) than it is. An option with error "playing in its favor" is more likely to be chosen, leaving the optimizer short of what they were expecting. This is the optimizer's curse *in the strict sense*. For a more detailed description and a great discussion of the implications for effective altruism see here.

I don’t think of the optimizer’s curse as a specific result, but more as a type of dynamic. We can ask, for example, what happens if you choose among options from *different classes* with *different patterns of uncertainty*?

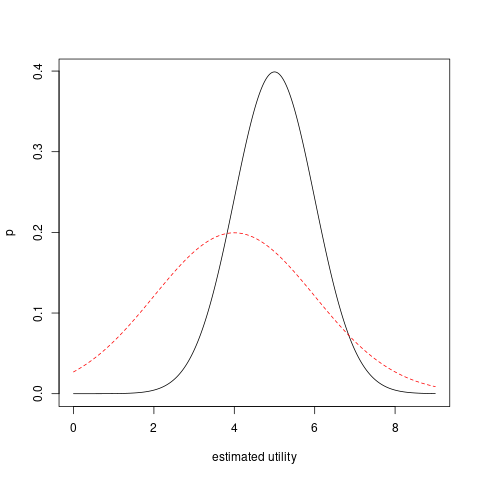

I'll illustrate with an example. For simplicity's sake let's assume two classes of options whose members have exactly the same utility within their class. But the classes differ, let's say utility 4 and 5. Now assume the class with lower expected utility has more uncertainty associated with it. I'll model the perceived utility as two normal distributions with different levels of variance (mean=5 with sd=1, mean=4 with sd=2). The estimated expected utility distributions look something like this:

We might try to find the best option in a naive way, by examining many of them and picking the best-seeming one. However, that option is more likely to come from the worse class. An estimated utility larger than 7 is almost three times more likely to come from the high-variance distribution than from the low-variance one, and a value larger than 8 is more than 16 times likelier. The more options we examine, the stronger the effect and the likelier we are to pick an option from the worse class.
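The numbers above can be checked with a few lines of Python. This is only a sketch under my own assumptions: the function names are made up for illustration, and the simulation assumes we examine the same number of options from each class, which the example does not strictly require.

```python
import math
import random


def normal_tail(threshold, mean, sd):
    """P(X > threshold) for X ~ Normal(mean, sd)."""
    z = (threshold - mean) / sd
    return 0.5 * math.erfc(z / math.sqrt(2))


def tail_ratio(threshold):
    """How many times likelier the noisy class (mean 4, sd 2) is to
    exceed the threshold than the reliable class (mean 5, sd 1)."""
    return normal_tail(threshold, 4, 2) / normal_tail(threshold, 5, 1)


def share_of_picks_from_worse_class(n_per_class, trials=2000, seed=0):
    """Fraction of trials in which the best-seeming option comes from
    the worse (mean 4, sd 2) class.

    Assumption: n_per_class options are examined from each class.
    """
    rng = random.Random(seed)
    worse_wins = 0
    for _ in range(trials):
        best_reliable = max(rng.gauss(5, 1) for _ in range(n_per_class))
        best_noisy = max(rng.gauss(4, 2) for _ in range(n_per_class))
        if best_noisy > best_reliable:
            worse_wins += 1
    return worse_wins / trials
```

`tail_ratio(7)` and `tail_ratio(8)` come out at roughly 2.9 and 16.9. Comparing `share_of_picks_from_worse_class(1)` with `share_of_picks_from_worse_class(100)` shows the worse class winning more often as more options are examined.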

The kind of interventions that EAs contemplate have exactly this dynamic, though it's obviously more complicated. In a more realistic model each class would have to have a distribution of utilities rather than a fixed value, and a random error over that. And the uncertainty we would be dealing with is much more extreme. It gets even worse when we consider distributions that aren't even unimodal.

EAs often argue that interventions targeting the long-term future have higher expected value than more short-termist ones. But long-termist options will always have huge uncertainty associated with the relevant, hard-to-estimate parameters. So the problem is that long-termist interventions will appear to have the highest utility regardless of whether we live in a world where they actually do. The fact that there are no good feedback loops on our reasoning about them does not help the situation.

What is even more perverse is that, because more of the "promising" options come from the high-uncertainty class, we might redouble our efforts and sample from this class even more heavily, intensifying the curse.
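Here is a rough sketch of that feedback loop, reusing the two classes from the example. Everything about the update rule is my own assumption for illustration: each round we examine a batch of options, look at which class the top-looking ones came from, and then sample the next batch in those proportions. Real prioritization is obviously not this mechanical.

```python
import random


def adaptive_search(rounds=5, batch=2000, top_k=100, seed=0):
    """Return the share of sampling effort given to the noisy class
    at the start and after each round.

    Hypothetical update rule: the next round samples from the noisy
    class in proportion to its share of the current top_k estimates.
    """
    rng = random.Random(seed)
    p_noisy = 0.5  # start by sampling both classes equally
    history = [p_noisy]
    for _ in range(rounds):
        estimates = []
        for _ in range(batch):
            if rng.random() < p_noisy:
                # worse class: true utility 4, noisy estimate
                estimates.append((rng.gauss(4, 2), "noisy"))
            else:
                # better class: true utility 5, more reliable estimate
                estimates.append((rng.gauss(5, 1), "reliable"))
        top = sorted(estimates, reverse=True)[:top_k]
        p_noisy = sum(1 for _, label in top if label == "noisy") / top_k
        history.append(p_noisy)
    return history
```

Under these assumptions, `adaptive_search()` returns a list in which the share of effort going to the worse, noisier class climbs from 0.5 toward 1, even though every option in that class has lower true utility.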

Conclusion: the optimizer's curse is even nastier than you thought if you assume multiple classes with different patterns of uncertainty, especially if your exploration of options is informed by your initial cursed results.