0. Introduction

An essay being worth $50,000 is a bold claim, so here is another: the person best equipped to adjust your AI existential risk predictions is the 18th-century Scottish historian David Hume.

Eliezer Yudkowsky has used Hume’s is-ought problem to argue that it’s possible, in principle, for powerful AI agents to have any goals. Even a system with cognitive mastery over “is” data still needs a framework that defines its “ought” drives, because the move from factual knowledge to value-driven objectives is not inherently determined. Bluntly, Eliezer writes that “the world is literally going to be destroyed because people don't understand Hume's is-ought divide. Philosophers, you had ONE JOB.”

As a student of Hume, I think this is a limited picture of what he has to offer this conversation. My goal, however, is not to refute Eliezer’s example, or even to engage in x-risk debates at the level of a priori reasoning. Hume’s broader epistemological and historical writing critiques that very method.

Hume was not a lowercase-r rationalist. He thought knowledge was derived from experience and warned that it’s easy to lead yourself astray with plausible-sounding abstractions. If you are a capital-R Rationalist, I will argue that you should review your foundational epistemological assumptions, because even a small update may ripple out into significantly greater uncertainty about existential risk from AI.

The central premises in Hume’s thought that I think you should consider are:

- All knowledge is derived from impressions of the external world. Our ability to reason is limited, particularly about cause and effect where we have little empirical experience.

- History shows that societies develop through an emergent process, evolving like an organism into an unknown and unknowable future. History has been shaped less by far-seeing individuals guided by reason than by contexts far too complex to grasp at the time.

In this essay, I will argue that these premises are true, or at least truer than the average person concerned about existential risk from AI holds them to be. I hope David Hume can serve as a guide to the limits of “arguing yourself” into any strong view of the future based on a priori reasoning. These premises do not mean that AI safety should be ignored, but they should unsettle strong or certain views.

The best practical example of premise #1 is Anthropic’s description of “empiricism in AI safety.” Anthropic does argue that there is evidence AI will have a large impact, that we do not know how to train systems to robustly behave well, and that “some scary, speculative problems might only crop up” once AI systems are very advanced. Yet they caution that “the space of possible AI systems, possible safety failures, and possible safety techniques is large and difficult to traverse from the armchair alone.” Anthropic is committed to AI safety, but within an empiricist epistemology. Their portfolio approach is built upon uncertainty: “Some researchers who care about safety are motivated by a strong opinion on the nature of AI risks. Our experience is that even predicting the behavior and properties of AI systems in the near future is very difficult. Making a priori predictions about the safety of future systems seems even harder.”

The best practical example of premise #2 is one you may have found frustrating: Tyler Cowen’s “radical agnosticism” on the question of AI existential risk. Cowen’s style irks many in this community, but I believe the tension stems from a real methodological disagreement that is worth considering. If a deep study of history shows it to be incredibly complex and context-dependent, then our ability to “forecast” complex social developments may be overestimated. Laura Duffy of Rethink Priorities has made a similar point about Hayek and the information problem, arguing that longtermist EAs should be more skeptical of the ability of individuals or groups to reliably model the future of civilization. Yet Hayek was influenced by David Hume’s view of emergent social order, and I think Hume makes the epistemological and historical case more holistically.

In response to Question 2 of OpenPhil’s AI Worldviews Contest, I will argue that the above premises should lead you to increase your error bars and widen your probability distribution over whether a pre-2070 AGI would necessarily spell existential catastrophe. I will consider my essay a success if you move in this direction at all, rate other essays in this contest that use empirical rather than a priori methods more highly, or consider, for the length of a single deep breath, that this question might be unanswerable with any reasonable degree of confidence. Uncertainty does not mean inaction, but it should shift your priorities, which I briefly suggest in my conclusion.

1. Empiricism

To make this concrete, here is an example from a recent 80,000 Hours Podcast interview with Tom Davidson, a Senior Policy Analyst at Open Philanthropy. I don’t mean to single out Davidson in particular, and I am aware he spoke more about timelines than x-risks. Still, I think it is representative of the way many AI conversations develop. I also think it would be fruitful to engage with a segment of OpenPhil’s own thinking for the contest.

Here is his response to Luisa Rodriguez’s claim that his arguments seem unintuitive. I have included his full response and highlighted key sections to avoid quoting him out of context:

- “I agree it seems really crazy, and I think it’s very natural and understandable to just not believe it when you hear the arguments. That would have been my initial reaction.

- In terms of why I do now believe it, there’s probably a few things which have changed. Probably I’ve just sat with these arguments for a few years, and I just do believe it. I have discussions with people on either side of the debate, and I just find that people on one side have thought it through much more.

- I think what’s at the heart of it for me is that the human brain is a physical system. There’s nothing magical about it. It isn’t surprising that we develop machines that can do what the human brain can do at some point in the process of technological discovery. To be honest, that happening in the next couple of decades is when you might expect it to happen, naively. We’ve had computers for 70-odd years. It’s been a decade since we started pouring loads and loads of compute into training AI systems, and we’ve realised that that approach works really, really well. If you say, “When do you think humans might develop machines that can do what the human brain can do?” you kind of think it might be in the next few decades.

- I think if you just sit with that fact — that there are going to be machines that can do what the human brain can do; and you’re going to be able to make those machines much more efficient at it; and you’re going to be able to make even better versions of those machines, 10 times better versions; and you’re going to be able to run them day and night; and you’re going to be able to build more — when you sit with all that, I do think it gets pretty hard to imagine a future that isn’t very crazy.”

This is a lowercase-r rationalist epistemology. In this view, new knowledge is derived from “sitting with” arguments and thinking them through, following chains of a priori reasoning to their logical conclusions. Podcasts are a limited medium, but in his Report on Semi-informative Priors for AI Timelines, Davidson presents a similar approach in his framing question:

“Suppose you had gone into isolation when AI R&D began and only received annual updates about the inputs to AI R&D (e.g., researchers, computation) and the binary fact that we have not yet built AGI. What would be a reasonable pr(AGI by year X) to have at the start of 2021?”

This is significantly closer to Descartes’ meditative contemplation than to Hume’s empiricist critique of the limits of reason. Davidson literally describes someone thinking in isolation from limited data. The assumption is that knowledge of future AI capabilities can be usefully derived through reason, and that is the assumption I think we should challenge.
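To make the framing question concrete, here is a minimal sketch of the simplest possible answer to it: Laplace's rule of succession, which treats each year as a trial and assumes a uniform prior on the per-year chance of AGI. Davidson's report uses a more elaborate prior, so this is not his model and its outputs are not his estimates. The start year (1956), the one-trial-per-year convention, and the function name `prob_agi_within` are illustrative assumptions. The point is only to show how much of the answer comes from choices made in the armchair rather than from evidence about AI systems themselves.

```python
from fractions import Fraction


def prob_agi_within(years_without_agi: int, horizon: int) -> Fraction:
    """Illustrative only: probability of at least one 'success' (AGI) in the next
    `horizon` yearly trials, given `years_without_agi` failed trials so far,
    under Laplace's rule of succession (uniform prior on the per-year chance)."""
    if years_without_agi < 0 or horizon < 0:
        raise ValueError("counts must be non-negative")
    p_none = Fraction(1)  # running probability that no year in the horizon yields AGI
    n = years_without_agi
    for _ in range(horizon):
        p_next = Fraction(1, n + 2)  # rule of succession: P(success next trial | n failures)
        p_none *= 1 - p_next         # this year also passes without AGI
        n += 1                       # one more observed failure for the next update
    return 1 - p_none


if __name__ == "__main__":
    # Hypothetical setup: one trial per calendar year, 1956 through 2020 (65 years),
    # asking about the 16 years 2021 through 2036.
    p = prob_agi_within(65, 16)
    print(p, float(p))  # 8/41, roughly 0.195
```

The year-by-year loop mirrors the "annual updates" in the framing question; it telescopes to the closed form k / (n + k + 1). Moving the assumed start year shifts the answer considerably (starting in 1990 instead of 1956 raises the same 16-year figure to one third), even though nothing about AI itself has changed.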

The statement “a sufficiently intelligent AI system would cause an existential catastrophe” is much more like a claim about observable reality (what Hume called a matter of fact) than an a priori truth such as the relationship between the angles of a perfect triangle. It makes a claim about cause and effect, and Hume doubted that we can know cause and effect by anything other than association and past experience. I know the sun will almost certainly rise tomorrow because I have formed an association through experience. If Hume is right, we can form no such association about how a superintelligence would behave without empirical evidence, either from existing systems or from future ones. Hume writes:

“As the power, by which one object produces another, is never discoverable merely from their idea, cause and effect are relations of which we receive information from experience and not from any abstract reasoning or reflection.”

Hume went further. Not only should we prioritize empirical evidence over rational abstractions, but even when we try, we can never step outside of our impressions and experiences. We inevitably reason through analogy, allegory, and impressions of the world. If we “chase our imagination to the heavens, or to the utmost limits of the universe, we never really advance a step beyond ourselves, nor can conceive any kind of existence, but those perceptions, which have appeared in that narrow compass.” Rationalists often fall into the trap not only of overestimating the power of a priori reason but also of underestimating how impressions and past experiences are shaping their thought unconsciously.

It’s true that most rationalists acknowledge uncertainty, but they do so by attaching Bayesian probabilities to their predictions. I think Hume would respond that “All probable reasoning is nothing but a species of sensation.” He writes, “When I am convinced of any principle, it is only an idea, which strikes more strongly upon me. When I give the preference to one set of arguments above another, I do nothing but decide from my feeling concerning the superiority of their influence.” This doesn’t mean it’s impossible to think probabilistically about the future, only that we tend to vastly overestimate how detached and “rational” we can be when we do so.

To Davidson’s credit, he acknowledges the issue of empirical evidence. In the Weaknesses section of his report, the first point reads:

“Incorporates limited kinds of evidence. Excludes evidence relating to how close we are to AGI and how quickly we’re progressing. For some, this is the most important evidence we have.”

My entry into this conversation is to suggest that, yes, this is the most important evidence we have. If Hume is right about how we acquire knowledge (or at least more right than the average reader of this post), then empirical observation of how AI systems actually work in practice may be our only evidence.

Many of the standard arguments for AI risk are theoretical and detached from empirical evidence. Tyler Cowen rightly jokes that the standard is often a “nine-part argument based upon eight new conceptual categories that were first discussed on LessWrong eleven years ago.” Hume has a cheeky response to these categories as well: “When we entertain, therefore, any suspicion that a philosophical term is employed without any meaning or idea (as is but too frequent), we need but inquire from what impression is that supposed idea derived? And if it be impossible to assign any, this will confirm our suspicion.” Rationalists should more critically assess what impressions of the external world drive their a priori chains of argument.

This error also shows up in the common EA response to x-risk skeptics: “But Have They Engaged with the Arguments?” A failure to “engage with the arguments” is often leveled as a slam-dunk critique of anyone who does not share a highly rationalist epistemology.

Theoretical work on AI safety can be incredibly valuable, as Anthropic notes, but their philosophy of prioritizing empirically grounded research, of “show don’t tell,” is much more compelling.

There is definitely empirical evidence of some AI risks today, and some views, such as Anthropic’s Core Views on AI Safety, are concerned on exactly this basis. The empirical case for AI risk can still be compelling. But Anthropic builds much more uncertainty into their worldview than many in this debate do. They take seriously the possibility that AI might become dangerous and recognize that theoretical work is necessary to inform empirical work. However, they note that their approach is likely to “rapidly adjust as more information about the kind of scenario we are in becomes available.”

Hume echoes this sentiment in “Of Commerce,” giving practical advice for organizations as he warns against over-theorizing: "When a man deliberates [...] he never ought to draw his arguments too fine, or connect too long a chain of consequences together. Something is sure to happen that will disconcert his reasoning." I would encourage OpenPhil at the margins to apply greater empirical rigor to their projections of AI risk and grant evaluation.

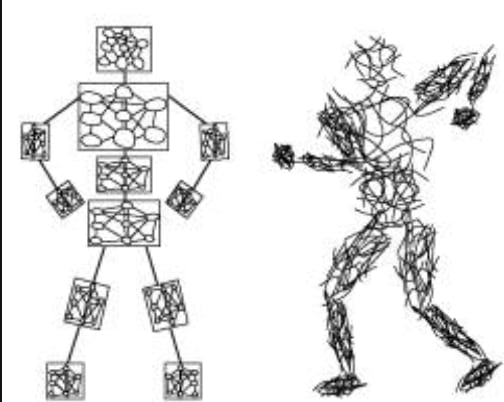

2. Even Our AI Models Are Empiricist

Throughout the history of AI, researchers debated whether systems would learn by first encoding formal logic and reasoning or by processing vast amounts of data. The debate between the “symbolic” and “connectionist” schools was complex, but I think it’s fair to say that the connectionists have kicked symbolic reasoning’s ass. Our best models today learn by training on massive numbers of examples and making connections between them. To the surprise of many, logic and reasoning can even emerge in these models from the accumulation of many individual experiences.

I do not think this would surprise David Hume. The success of connectionist AI systems is, in some ways, a vindication of his view of cognition. This isn’t evidence in itself that humans develop knowledge by making associations between vast amounts of previous impressions, but it might move you in that direction. The best way we have discovered to get AI systems to learn is quite Humean.

Further, many Rationalists overrate the dangers of future AI systems because of their overly rationalist epistemology. Sure, if knowledge can be derived from thinking hard enough in an armchair, then a “superintelligent” being could build nanomachines and kill humans in no time. But if knowledge is fundamentally downstream of observing the world, as Hume suggests, then even advanced AI systems will be bottlenecked by experimentation and access to high-quality datasets.

Jacob Buckman makes this case in “We Aren't Close To Creating A Rapidly Self-Improving AI.” He notes that the current paradigm allows systems to approach human capability given large, high-quality datasets, but constructing these datasets is an incredibly difficult bottleneck. The best part of his piece is in the comment section, where a reader suggests that AI could still self-improve once it learns “the rules of reality” such as math, logic, and physics. In Humean style, Buckman responds that “The rules of reality are *not* logic/math/physics — you have it precisely backwards. In fact, logic/math/physics are just approximations to the rules of reality that we inferred from *observing* reality.” I would encourage OpenPhil to consider what this limitation would mean for the odds of an AI-caused existential catastrophe in the next century.

3. Thinking Historically

Hume’s History of England is a story in which, again and again, what seems at first like an outcome driven by individuals was actually dependent on a vast amount of context, preconditions, and happenstance. Hume’s historical contribution is to emphasize that nothing happens in a vacuum. A complex web of political regimes, climates, education systems, markets, social norms, and more shaped the history of England, and Hume needed over 3,000 pages to feel he’d done justice to it. General principles can be gained from the study of history, but carefully, and always with the caveat “it depends.”

In a similar spirit, Tyler Cowen critiques the “tabula rasa” way of reasoning about the future that many x-risk proponents adopt: it can vastly oversimplify trends that are causally uncertain even to the best full-time researchers today. Context could be far more important than armchair discussions of AI technology itself. If Hume is right, then Cowen bringing up China really matters. So would Taiwan, US political stability, and 1,000 other potential factors beyond our foresight. We should still try our best to predict and plan for the future, but there is simply too much information to grasp a priori. We will miss something.

In the 80,000 Hours podcast, Davidson presents an argument about history that is common in AI debates: people in the past had no idea what “crazy” times were ahead of them, so speculative claims about the future should be taken seriously (or at least not dismissed). Davidson correctly notes that hunter-gatherers had no idea that sprawling empires would emerge, and feudal market vendors had no idea that technology would radically transform the world.

I think the real lesson from these examples is that predicting the future is a nearly impossible task. No blacksmith in 1450 could possibly have predicted the semiconductor with any degree of certainty, and no hunter-gatherer had enough experience to speculate about feudal siege warfare. Davidson’s argument is that self-improving AI might be one among many uncertain futures, which is fair enough. But as Tyler Cowen writes, “the mere fact that AGI risk can be put on a par with those other also distant possibilities simply should not impress you very much.” Yes, it’s true that we can imagine a future like this. But if anything, history shows the limitations of our capacity for speculation.

It’s possible that we are the exception. Perhaps Bayesian superforecasting really is the key, or AI is close enough that real empirical evidence of doom is with us now. But the latter possibility is addressed in section 1 of this essay, and the former would be a shocking development. As Anthropic writes:

“This view may sound implausible or grandiose, and there are good reasons to be skeptical of it. For one thing, almost everyone who has said ‘the thing we’re working on might be one of the biggest developments in history’ has been wrong, often laughably so. Nevertheless, we believe there is enough evidence to seriously prepare for a world where rapid AI progress leads to transformative AI systems.”

There is an empirical/historical case to be made for AI risk, as Anthropic describes, but it is built upon uncertainty. Anthropic’s commitment to change as new evidence emerges echoes Hume’s point that “the most perfect philosophy of the natural kind only staves off our ignorance a little longer.” The correct response to the p(doom) question, one of literally apocalyptic complexity, is not to keep “arguing yourself” one way or another. This is why Cowen says he doesn’t think arguing back on x-risk terms is the correct response. A more complex view of history is useful because demystifying the past can help demystify the future. We should accept Hume’s point that “reason is slave to the passions,” yet try our best to wade through the empirical evidence as it changes.

A historical approach could be criticized because, by definition, we cannot have historical examples of extinction events. The plane meme with the red dots, yes, very good. But we do have clear historical analogs: atomic bombs work, and bioengineered pandemics would mostly apply existing tools to one of humanity’s oldest threats. The difference between a fear of UFOs and a fear of AGI as an existential threat rests on the weight of the available evidence, not on how compelling an a priori argument we can make about their possibility. More people in the community should acknowledge this.

And while some may call this the “Safe Uncertainty Fallacy,” arguing that uncertainty of existential risk should not mean it is safe to press ahead, I think incorporating greater uncertainty into your worldview is still actionable. If we are epistemologically limited, we can build that into our models.

4. Conclusion: Uncertainty Does Not Mean Inaction

Building more uncertainty into your worldview does not mean throwing up your hands and giving up on AI. If I have convinced you even at the margin to be more empiricist and to think more historically, here are a few concrete suggestions:

- You could give higher scores to other essays in this contest that make empirical cases for or against AI doom. I lack the technical background to make such a case well, but their authors might not.

- You could base your own “portfolio approach” on Anthropic's, increasing funding for the possibility that we are living in an “alignment is difficult but tractable” world over the “it’s clear a priori that we need a Butlerian Jihad” world.

- You could consider whether other aspects of OpenPhil’s operations over-rely on a rationalist epistemology, or at least start having these conversations.

Lastly, uncertainty should also shape how you prioritize other causes. You can still take the old-school EA approach to problems with a strong empirical track record, such as global health and animal suffering. I think many of the “longtermist” trends in EA in recent years have been driven by a weaker epistemology, leaving the movement with a genuine conflict over how to develop knowledge about doing the most good. As someone who misses the spreadsheet EA of the early 2010s (sheets that track real-world data, not speculative powers of 10), I hope you take these ideas to heart.

I found this post very interesting and useful for my own thinking about the subject.

Note that while the conclusions here are intended for OP specifically, there's another striking conclusion that goes against the ideas of many in this community: we need more evidence! We need to build stronger AI (perhaps in more strictly regulated contexts) in order to have enough data to reason about its dangers. The "arms race" of DeepMind and OpenAI is not existentially dangerous to the world, but is rather contributing to its chance of survival.

This is still at odds, of course, with the fact that rapid advancements in AI create well-known non-existential dangers, so at times we must trade off mitigating those against finding out more about existentially dangerous AI. This is not an easy decision and deserves attention, especially if you're not a longtermist.

Is your claim just that people should generally "increase [their] error bars and widen [their] probability distribution"? (I was frustrated by the difficulty of figuring out what this post is actually claiming; it seems like it would benefit from a "I make the following X major claims..." TLDR.)

I probably disagree with your points about empiricism vs. rationalism (my prior being that I dislike the way most people approach the two concepts), but I think I agree that most people should substantially widen their "error bars" and be receptive to new information. And it's precisely for that reason that I feel decently confident in saying "most people whose risk estimates are very low (<0.5%) are significantly overconfident." You logically cannot have extremely low probability estimates while also believing "there's a >10% chance that in the future I will justifiably think there is a >5% chance of doom, but right now the evidence tells me the risk is <0.5%."
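The "logically cannot" step can be spelled out as a short derivation. It is a sketch of the standard conservation-of-expected-evidence argument, assuming that "justifiably" means your future credence will come from coherent (Bayesian) updating, so that your current credence equals its expected value. Write q for today's probability of doom and Q for the probability you will justifiably hold later; the first inequality uses only that Q is never negative.

```latex
q \;=\; \mathbb{E}[Q]
  \;\ge\; \mathbb{E}\!\left[\,Q \cdot \mathbf{1}\{Q > 0.05\}\,\right]
  \;\ge\; 0.05 \cdot \Pr(Q > 0.05)
  \;>\; 0.05 \times 0.10
  \;=\; 0.005
```

So holding both beliefs at once forces q above 0.5%, which contradicts a current estimate below 0.5%.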

Thanks for the feedback! Definitely a helpful question. That error bars answer was aimed at OpenPhil, based on what I've read from them on AI risk and the prompt in their essay question. I'm sure many others are capable of answering the "what is the probability" forecasting question better and more directly than me, but my two cents was to step back and question underlying assumptions about forecasting that seem common in these conversations.

Hume wrote that "all probable reasoning is nothing but a species of sensation." This doesn’t mean we should avoid probable reasoning (we can't), but I think we should recognize that it is based only on our experiences and observations of the world, and question how rational its foundations are. I don't think anyone at this stage actually has the empirical basis to give a meaningful percentage for "AI will kill everyone." Call it 0.5 or 1 or 7 or whatever, but my essay is about taking a step back and questioning epistemological foundations. Anthropic seems much better at this so far (if they mean it when they say they'd stop given further empirical evidence of risks).

I did list two premises from Hume that I think are true (or truer than the average person concerned about AI x-risk holds them to be), so I guess those were also my TLDR.

I see. (For others' reference, those two points are pasted below)

Overall, I don't really know what to make of these. They are fairly vague statements, making them very liable to motte-and-bailey interpretations; they border on deepities, in my reading.

"All knowledge is derived from impressions of the external world" might be true in a trivially obvious sense that you often need at least some iota of external information to develop accurate beliefs or effective actions (although even this might be somewhat untrue with regard to biological instincts). However, it makes no clear claim about how much and what kind of "impressions from the external world" are necessary for "knowledge."[1] Insofar as the claim is that forecasts about AI x-risks are not "derived from impressions of the external world," I think this is completely untrue. In such an interpretation, I question whether the principle even lives up to its own claims: what empirical evidence was this claim derived from?

The second claim suffers from similar problems in my view: I obviously wouldn't claim that there have always been seers who could just divine the long-run future. However, insofar as it is saying that the future is so "unknowable" that people cannot reason about what actions in front of them are good, I also reject this: it seems obviously untrue with regards to, e.g., fighting Nazi Germany in WW2. Moreover, I would say that even if this has been true, that does not mean it will always be true, especially given the potential for value lock-in from superintelligent AI.

Overall, I agree that it's important to be humble about our forecasts and that we should be actively searching for more information and methods to improve our accuracy, questioning our biases, etc. But I also don't trust vague statements that could be interpreted as saying it's largely hopeless to make decision-informing predictions about what to do in the short term to increase the chance of making the long-run future go well.

[1] A term I generally dislike for its ambiguity and philosophical connotations (which IMO are often dubious at best).

Thanks for the feedback. I agree that trying to present an alternative worldview ends up quite broad with some good counter examples. And I certainly didn't want to give this impression:

Instead, I'd say that it is difficult to make these predictions based on a priori reasoning, which this community often attempts for AI, and that we should shift resources towards rigorous empirical evidence to better inform our predictions. I tried to give specific examples: Anthropic-style alignment research is empiricist, while Yudkowsky-style theorizing is a priori rationalist. This sort of epistemological critique of longtermism is somewhat common.

Ultimately, I've found that the line between empirical and theoretical analysis is often very blurry, and if someone does develop a decent brightline to distinguish the two, it turns out that there are often still plenty of valuable theoretical methods, and some of the empirical methods can be very misleading.

For example, high-fidelity simulations are arguably theoretical under most definitions, but they can be far more accurate than empirical tests.

Overall, I tend to be quite supportive of using whatever empirical evidence we can, especially experimental methods when they are possible, but there are many situations where we cannot do this. (I've written more on this here: https://georgetownsecuritystudiesreview.org/2022/11/30/complexity-demands-adaptation-two-proposals-for-facilitating-better-debate-in-international-relations-and-conflict-research/ )