By Holden Clark, updated 13th September 2025

Purpose

I'm Holden, a Year 12 (16-year-old) Leaf Fellow who has recently discovered the Effective Altruism community. When first learning about the movement, I was shocked to see that AI safety - a risk most commonly discussed in dystopian fiction - was listed as the most pressing problem facing humanity by leading organisations. However, whilst reading arguments for AI safety funding, I noticed that they rarely quantified how cost-effective safety initiatives are estimated to be - a gap which this article aims to address. Furthermore, the arguments were often very one-sided, building theses on uncertain forecasts without considering critical counter-arguments, most notably the Synthetic Data Problem. This article aims to supply the opposing side of the argument, to allow for a more objective assessment of the cost-effectiveness of AI safety research.

This is my first time posting on the EA forum, and I would be enormously grateful for any feedback/discussion related to this post.

Summary

This article begins by using the ITN framework to distill existing arguments advocating for increased AI safety funding into a single cost-effectiveness estimate. Next, the Synthetic Data Problem and its consequences are evaluated and the cost-effectiveness estimate correspondingly updated. It is ultimately concluded that more tractable high-priority initiatives, such as malaria treatment, should be prioritised over AI safety initiatives, which risk inhibiting the positive effects of AI for no safety benefit.

1. Introduction

Dystopian stories about AI have been told and retold throughout our lives, in chart-topping films such as Avengers: Age of Ultron, Terminator 2: Judgment Day and The Matrix, and in bestselling novels such as Neuromancer by William Gibson and Prey by Michael Crichton. There is good reason for this: these stories are emotionally gripping because they activate key primal areas of our brains associated with fear and forecasting danger[1]. Humans have clung to fear-inducing stories throughout history, from the ‘Penny Dreadfuls’ (cheap, wildly overdramatised crime tales) of Victorian London to modern horror and thriller novels, and our adrenaline-seeking brains are attracted to cataclysmic AI tales for the same reasons. Fear of AI is understandable and inevitable, and many academics have turned to complex logical arguments and mathematical forecasts in attempts to rationalise such fears.

However, fear has been shown to make us cling to emotionally-charged arguments in support of incorrect hypotheses far beyond the point of rationality[2], whilst disregarding crucial counter-arguments. Consider why conspiracy theories are so widespread, despite strong scientifically-backed evidence against them.

Our brains’ primal adrenaline-seeking instincts create a substantial risk that fears of AI misalignment are distorted out of rational proportion. I therefore argue that arguments which use highly uncertain forecasts to claim that an AI takeover of the world could be imminent must face greater scrutiny before funding is diverted to AI safety, when that funding could otherwise save the lives of children at around $4,500 per life[3] by tackling malaria.

2. Quantifying existing arguments for AI risk

2.1 The ITN Framework

Feel free to skip the indented text below if you are already familiar with the framework.

The ITN framework is a tool used within the Effective Altruism community to estimate the cost-effectiveness of an initiative. It defines ‘Importance’, ‘Tractability’ and ‘Neglectedness’ as follows:

Importance = Lives Saved / % of problem solved

Tractability = % of problem solved / % increase in resources

Neglectedness = % increase in resources / extra $ of funding

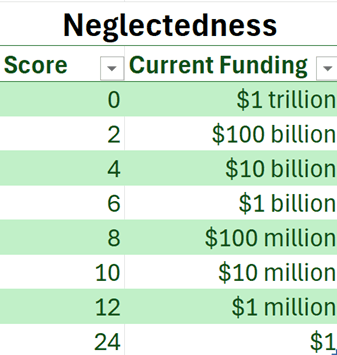

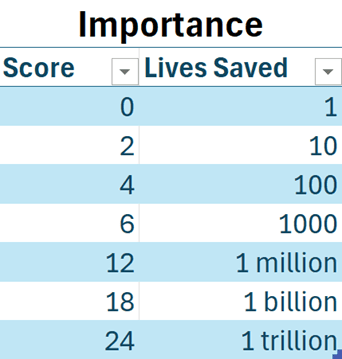

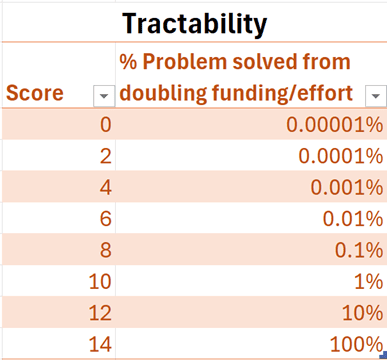

We can calculate the number of lives saved per dollar invested in an initiative by multiplying the three values together, as ‘% of problem solved’ and ‘% increase in resources’ cancel out. Using a logarithmic scale allows us to add scores for the variables instead of multiplying them. In the scales below, an increase of two points corresponds to a difference of one order of magnitude (10x):

Figures 1.1-1.3

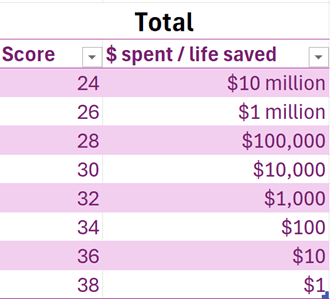

(Take the scores importance 0, tractability 14 and neglectedness 24. Together these describe a problem which costs $1 to solve and whose solution has a 100% chance of saving one life, i.e. $1/life saved. A total score of 38 (0+14+24) therefore corresponds to a cost-effectiveness of $1/life saved, from which the table below is extrapolated.)

Figure 2
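The conversion between a total ITN score and a dollar figure can be sketched in a few lines of Python. This is a minimal sketch that assumes only the two anchors stated above (a total of 38 points means $1 per life saved, and two points are worth a factor of ten). The function and constant names are illustrative rather than part of the framework, and values read from Figure 2 may differ slightly through rounding.

```python
import math

# Anchors taken from the text: a total score of 38 means $1 per life saved,
# and every 2 points is one order of magnitude (10x).
BASELINE_SCORE = 38.0
POINTS_PER_ORDER_OF_MAGNITUDE = 2.0


def cost_per_life(total_score: float) -> float:
    """Dollars per life saved implied by a total ITN score."""
    orders = (BASELINE_SCORE - total_score) / POINTS_PER_ORDER_OF_MAGNITUDE
    return 10.0 ** orders


def score_for_cost(dollars_per_life: float) -> float:
    """Total ITN score implied by a cost per life saved (inverse of cost_per_life)."""
    return BASELINE_SCORE - POINTS_PER_ORDER_OF_MAGNITUDE * math.log10(dollars_per_life)


print(cost_per_life(38))               # the anchor case: $1 per life
print(round(score_for_cost(4500), 1))  # the malaria benchmark, about 30.7
```

Because the scale is logarithmic, a difference of one point between two causes corresponds to a factor of roughly 3.2 in cost per life.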

Popular arguments suggesting that AI safety research is extremely cost-effective translate roughly as follows into the ITN framework:

- Importance – extremely high, as misaligned AI (AI whose goals do not align with humanity’s) could cause human extinction. Many argue that human extinction is equivalent to approximately 1 trillion deaths once the future lives which would be lost are counted. They also argue that, without any regulation, humanity has a 90% chance of producing Artificial General Intelligence (defined as a computer system which could outperform humans at every cognitive task), which in turn has a 16% chance of causing total human extinction or permanent disempowerment of the human species[4]. (expected deaths without any attempt at intervention: 1 trillion x 90% x 16% = 144 billion; score – 22.3)

- Neglectedness – currently around $1bn per year is spent directly on AI safety research[5]. (score – 6)

- Tractability – rarely estimated quantitatively; however, Shulman and Thornley (2023)[6] argue that a $400bn initiative would have a 0.1% chance of preventing an AI-driven catastrophe. As the tractability score above is based on an increase in funding of $1bn, we can assume that the tractability would be 400 times lower (1bn/400bn of 0.1%), or 0.00025%. (score – 2.8)
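The three component scores in the list above can be reproduced from the quoted inputs. The sketch below assumes the scale anchors implied by Section 2.1 (importance 0 = one life, tractability 14 = a 100% chance of solving the problem, neglectedness 24 = $1 currently spent, two points per factor of ten). The exact scales are in Figures 1.1-1.3, so the helper names and anchors here are my reading of the text rather than a definitive implementation.

```python
import math

POINTS_PER_ORDER_OF_MAGNITUDE = 2.0


def importance_score(expected_deaths: float) -> float:
    # Assumed anchor: a score of 0 corresponds to one life at stake.
    return POINTS_PER_ORDER_OF_MAGNITUDE * math.log10(expected_deaths)


def tractability_score(chance_of_solving: float) -> float:
    # Assumed anchor: a score of 14 corresponds to a 100% chance (1.0).
    return 14.0 + POINTS_PER_ORDER_OF_MAGNITUDE * math.log10(chance_of_solving)


def neglectedness_score(current_spending_usd: float) -> float:
    # Assumed anchor: a score of 24 corresponds to $1 of current spending.
    return 24.0 - POINTS_PER_ORDER_OF_MAGNITUDE * math.log10(current_spending_usd)


# Inputs quoted in the list above.
expected_deaths = 1e12 * 0.90 * 0.16      # 144 billion
chance_per_extra_billion = 0.001 / 400    # 0.1% spread over $400bn = 0.00025%
current_spending = 1e9                    # $1bn per year

print(round(importance_score(expected_deaths), 1))             # about 22.3
print(round(tractability_score(chance_per_extra_billion), 1))  # about 2.8
print(round(neglectedness_score(current_spending), 1))         # 6.0
```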

This leads to a total score of 31.1, indicating that investment in AI safety initiatives would save a life for every $3,550 (3sf) invested on average (see Figure 2). This would indeed make AI safety an extremely cost-effective cause area for investment, 28% more so than funding to tackle malaria through the most cost-effective charities (at $4,500/life, or a score of 30.7)[3].

However, this statistic is built from sources which do not acknowledge the Synthetic Data Problem, meaning that it likely significantly overestimates the cost-effectiveness of AI safety initiatives. Upon evaluating the problem and its consequences, the ITN values above will be adjusted to reflect the decrease in cost-effectiveness indicated.

2.2. What are the threats from AI?

Threats from AI which could viably cause human extinction or permanent disempowerment of our species are most commonly cited as AI-driven arms races, unprecedented job losses, and misaligned AI being placed in positions of high power[7][8][9].

One premise explains why these threats are considered greater than similar threats posed by other advancing technologies, such as yearly increases in computational power: AI advancement may be more rapid than that of any other technology in history. An arms race requires the military capabilities of two countries or alliances to increase rapidly above those of others, destroying the premise of mutually assured destruction which has led to a remarkable period of nonviolence between modern nuclear-armed powers. Likewise, job losses must be unprecedentedly fast to indicate that humanity will not adapt to this technology as we have adapted to others in the past, such as the printing press, electricity and computers, each of which rendered entire professions obsolete. Finally, there must be very strong advantages to placing AI in positions of high power to assume that humanity will not treat this with the same scepticism with which we view self-driving cars: despite AI-driven vehicles being 10x less likely to cause injuries than human-driven vehicles[10], 70% of Brits would be uncomfortable using one[11].

Yet, I argue that it is extremely unlikely that AI will develop as unprecedentedly rapidly as many popular estimates such as those by 80,000 Hours[12] and AI 2027[13] suggest, due to the Synthetic Data Problem.

3. The Synthetic Data Problem

3.1 Limitations of synthetic data

Until 2024, AI models were trained on human data, gaining power as they were trained on increasingly large datasets. However, current leading AI models are trained on almost all high-quality publicly available data on the Internet[14][15], yet require more. Therefore, the newest AI models have been trained on a combination of human and synthetic (AI-generated) data to facilitate continued progress. In order for AGI to be developed, enormous data sets, which will almost certainly be composed of predominantly synthetic data (see Section 3.2), must be used for training.

However, using large amounts of synthetic data, especially data synthesised from previous synthetic data, causes significant problems. Firstly, the diversity of the data is reduced: once a data set larger than the entire Internet is used, original human creativity makes up a shrinking share of it. This causes model biases to increase and thus response quality to decline. Secondly, models become more vulnerable to error-ridden data on the internet (which could be placed maliciously, making the models more vulnerable to sabotage), as errors which appeared in only one out of billions of items of original data could appear in every item of synthetic data, ultimately risking total model collapse[16]. This is analogous to inbreeding: unpreventable genetic faults unnoticeable in the first generation compound into life-threatening conditions in future generations.

We do not currently have a solution to the Synthetic Data Problem; a paradigm shift is required to overcome it, which has no guarantee of happening. The only areas where the use of synthetic data is currently effective are domains where computers can assign a clear ‘right or wrong’ answer to synthetic data - for example AlphaZero assigning a win or loss to games played against itself, or a code-writing AI which can attribute success or failure to a program. This indicates that AI performance will only increase rapidly in very limited areas such as maths and coding, greatly decreasing its overall function and thus both restricting job losses and limiting AI to being merely a tool for humans rather than an independent decision maker, preventing harmful misalignment. This has already been seen with GPT-5: despite industry estimates indicating that GPT-5 has 10x the parameters of GPT-4[17], its performance has hardly increased in areas outside of mathematics and coding.

This makes extrapolating graphs of historical yearly increases in AI power into the future - a key argument for AGI’s future creation, forming the foundation of AI 2027’s thesis[13] - dangerously misleading, as such extrapolations ignore the slowdown in progress which the Synthetic Data Problem will cause. Conventional model scaling ended with GPT-4, and there is no indication that it will restart in the future.

Due to this, I will decrease the chance of humanity producing AGI in the next century by a factor of 3 (further timescales are disregarded as safety initiatives today will have almost negligible impact on the development of AGI more than a century into the future). This decreases the expected loss of life from inaction, and thus the importance of solving the problem, by half an order of magnitude (one point). I will also decrease the chance of AGI causing human extinction or permanent disempowerment if developed by a factor of 3, costing a further point of importance, because the slower-moving pace of the technology eliminates most of the risk from rapid, unprecedented development discussed in Section 2.2.

Furthermore, AGI will likely be trained very differently to current systems in order to overcome the Synthetic Data Problem, indicating that current safety initiatives may not be the most effective at preventing AGI’s misalignment. Initiatives will be more cost-effective in the future, when we regain the tractability we lose from not knowing how to overcome the Synthetic Data Problem and when we have more advanced technology to work on the problem more efficiently. This indicates that the tractability of current AI safety initiatives is lower than previously estimated, which will be represented on the framework by a decrease of one point.

Before: Importance 22.3, Tractability 2.8, Neglectedness 6, Total 31.1 (C-E $3,550/life)

After: Importance 20.3, Tractability 1.8, Neglectedness 6, Total 28.1 (C-E $89,100/life)
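The adjustment can be checked with a short calculation. This is a sketch under the scale described in Section 2.1 (two points per factor of ten, a total of 38 meaning $1 per life); the variable names are illustrative. It shows that two factors of 3 cost just under two points of importance, which the scores above round to two, and converts the adjusted total into a cost per life.

```python
import math

POINTS_PER_ORDER_OF_MAGNITUDE = 2.0
BASELINE_SCORE = 38.0  # total score meaning $1 per life saved (Section 2.1)


def points_for_factor(factor: float) -> float:
    """Score change that corresponds to multiplying or dividing by `factor`."""
    return POINTS_PER_ORDER_OF_MAGNITUDE * math.log10(factor)


# Two separate factor-of-3 reductions: chance of AGI, and chance of harm given AGI.
exact_importance_drop = points_for_factor(3.0 * 3.0)  # roughly 1.9 points

before = {"importance": 22.3, "tractability": 2.8, "neglectedness": 6.0}
after = {
    "importance": before["importance"] - 2.0,      # rounded from ~1.9
    "tractability": before["tractability"] - 1.0,  # one-point reduction from the text
    "neglectedness": before["neglectedness"],      # unchanged
}

after_total = sum(after.values())
after_cost = 10.0 ** ((BASELINE_SCORE - after_total) / POINTS_PER_ORDER_OF_MAGNITUDE)

print(round(exact_importance_drop, 1))  # about 1.9
print(round(after_total, 1))            # 28.1
print(round(after_cost, -2))            # roughly $89,100 per life
```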

3.2 Could AGI be developed without the use of synthetic data?

It seems very unlikely. There is currently enough publicly accessible data on the internet to train a model with 5x10^28 FLOPs (floating-point operations, a measure of the total computation used in training)[14]; even if 100 times more data becomes available within the next century, we will still be unable to train a model with more than 5x10^30 FLOPs. (Further timescales are disregarded as safety initiatives today will have almost negligible impact on the development of AGI more than a century into the future.) This is just over five orders of magnitude more training compute than current leading models have used: GPT-4 was trained on 2.15x10^25 FLOPs.
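The "just over five orders of magnitude" figure follows directly from the numbers quoted above. The sketch below uses only those numbers; note that it follows the paragraph in assuming the compute ceiling grows in direct proportion to the available data, and the constant names are illustrative.

```python
import math

# Figures quoted in the paragraph above.
COMPUTE_FROM_CURRENT_DATA = 5e28  # FLOPs trainable on today's public data
DATA_GROWTH_FACTOR = 100          # assumed extra data over the next century
GPT4_TRAINING_COMPUTE = 2.15e25   # FLOPs

# Assumption carried over from the text: 100x more data allows 100x more compute.
compute_ceiling = COMPUTE_FROM_CURRENT_DATA * DATA_GROWTH_FACTOR

orders_above_gpt4 = math.log10(compute_ceiling / GPT4_TRAINING_COMPUTE)
print(round(orders_above_gpt4, 2))  # about 5.37 orders of magnitude
```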

A five order of magnitude increase in FLOPs is extremely unlikely to lead to AGI’s development, as the number of useful tasks which can be accomplished with this increase in computing power is low. Firstly, a five order of magnitude increase in FLOPs is unlikely to lead to a five order of magnitude increase in AI parameters, as companies are shifting towards more profitable smaller models, which use more FLOPs per parameter. Llama 3 was trained on 50x more FLOPs than Llama 2, yet has only between 5 and 6 times the parameters[18][19]; this trend is consistent across the AI industry and is very likely to continue. A three to four order of magnitude increase in model parameters is extremely unlikely to lead to revolutionary technology being developed: this is merely equivalent to the difference between the functional GPT-2 and powerful GPT-4 taking place over the next 100 years, suggesting that AI developed without synthetic data will merely resemble a more powerful version of present technology. The task capabilities of AI must increase by many times more than the difference between GPT-2 and GPT-4 for AGI to be developed, thus synthetic data (or another revolutionary solution, which present AI safety research will be unable to target) will almost certainly be required for AGI’s development.

3.3 Is AI more likely to do good than harm?

Throughout the past few decades, technology has done considerably more good for society than harm, assisting enormously valuable progress in healthcare, education, literacy, renewable energy, disaster responses, food security and other key areas[20][21]. This trend of advancing technology assisting development is very likely to continue, with AI being considered an asset to 128/169 of the UN Sustainable Development Targets[22]. Even in areas where technology can assist criminals (thus presenting a risk to society), the benefits to law enforcement often outweigh the increased threats[23]. Despite modern computers’ use in co-ordinating violent attacks and hacking, CCTV surveillance has been a crucial driver of the reduction in theft and violent personal crime in the UK, which have fallen by more than two-thirds since 1995[24]. AI developed with only five orders of magnitude more FLOPs will most likely be an enhancement of modern technologies, indicating that similar benefits will be reaped from the technology’s development.

Due to this, AI safety initiatives which slow down the technology’s development risk causing harm for no benefit if AGI is ultimately impossible to develop, or has no risk of misalignment. Hundreds of millions of dollars of funding which could otherwise save human lives for $4,500/life are already being diverted to AI safety initiatives which risk having a negative impact on humanity[3][5], potentially costing thousands of human lives. I therefore argue that AI safety initiatives should only be pursued if they do not significantly hinder the technology’s development, to avert the risk of inhibiting AI’s positive impact on the world.

This evaluation assumes that AI is not concentrated in the hands of a very small number of individuals, since such concentration is a key prerequisite to AI-assisted power grabs or arms races. However, concentration of this kind seems extremely unlikely, given the potential profitability of AI trained on five orders of magnitude more FLOPs than GPT-4 as a tool used by humans to assist productivity. It is in the interest of private companies, who are leading the AI development race, to sell products to the widest possible audience in order to obtain the largest possible revenue. This suggests that the leading AI models will remain available for public use in the future, as they are currently.

Ultimately, considering the Synthetic Data Problem has changed the evaluation of AI safety research’s cost-effectiveness from $3,550/life saved to $89,100/life saved (3sf) - now around twenty times less cost-effective than investment in tackling malaria. This statistic does not take into account that most people consider the lives of people alive today to be many times more important than those of hypothetical unborn people centuries into the future, or that AI safety initiatives risk causing harm if AGI is impossible to develop or has no risk of misalignment.

4. Conclusion

It is understandable that fears around AI misalignment are widespread, including amongst academics: envisioning a future where humanity becomes enslaved by its own creation is terrifying, and spurs our brains to action. However, due to the strength of this fear, I believe that critical counter-arguments have been overlooked in arguments advocating for greater funding for AI safety initiatives. Ultimately, when considering just the Synthetic Data Problem and its consequences, my cost-effectiveness evaluation of AI safety initiatives dropped from saving a life for every $3,550 invested (determined through placing quoted values from existing arguments into the ITN framework) to one life for every $89,100, before taking into account that most people value the lives of present people above those of hypothetical unborn people centuries into the future. I therefore argue that proven cost-effective initiatives which also do not risk causing more harm than good, such as malaria treatment, which has been reliably shown to save a life for every $4,500 invested[3], should be prioritised over the highly intractable AI Alignment Problem.

5. Answer to a counter-argument: What if AGI is very close?

If AGI is less than 20 years away, it will not be difficult for a future society with orders of magnitude more computing power to develop. Safety regulations introduced today could be scrapped by future world leaders attempting to boost economies or gain a strategic advantage in war, meaning initiatives will only push back the date of an AI-driven catastrophe at best (it seems reasonable to assume by an average of 10 years), rather than prevent it entirely.

Put into the ITN framework:

It is assumed that preventing Western countries from developing misaligned AGI would decrease the chance of misaligned AGI being developed anywhere in the world by 1%, as other countries or non-state actors could develop AGI using the same technology with comparable funding and effort. It is also assumed that an AI-driven catastrophe would kill an average of 10% of the world’s population (as we could likely isolate the catastrophe’s impact to certain regions of the world). Therefore, introducing regulations which prevent Western countries from developing misaligned AGI would give 1% x 10% of the world’s 8 billion people (8 million people) an average of 10 extra years of life each (equivalent to 1/8 of a life), equating to saving an average of 1 million lives. (Importance – 12)

Neglectedness is 6, for $1 billion of direct funding on AI safety initiatives.

Doubling direct funding equates to an additional $1 billion spent, which it is assumed would have a 3% chance of preventing Western countries from developing misaligned AI, as governments would likely implement the most effective restrictions without the additional funding, and will be unwilling to let their AI development fall behind that of their political opponents in any case. (Tractability – 11)

In this unlikely worst-case scenario, the total score is 29, indicating that AI safety initiatives will not be significantly more cost-effective if AGI is extremely close.
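The scenario above can be worked through numerically. This is a minimal sketch using the assumptions stated in this section plus the scale anchors from Section 2.1 (importance 0 = one life, tractability 14 = 100%, neglectedness 24 = $1, a total of 38 = $1 per life). The 80-year lifespan is inferred from the text's "10 extra years = 1/8 of a life", and all names are illustrative.

```python
import math

# Scenario inputs stated in this section.
WORLD_POPULATION = 8e9
GLOBAL_RISK_REDUCTION = 0.01  # 1% lower chance of misaligned AGI anywhere
SHARE_KILLED = 0.10           # 10% of the population killed in a catastrophe
YEARS_GAINED = 10             # catastrophe delayed by ~10 years
YEARS_PER_LIFE = 80           # inferred: 10 years is treated as 1/8 of a life
CHANCE_OF_SUCCESS = 0.03      # 3% chance that an extra $1bn succeeds
CURRENT_SPENDING = 1e9        # $1bn of direct funding

lives_saved = (WORLD_POPULATION * GLOBAL_RISK_REDUCTION * SHARE_KILLED
               * YEARS_GAINED / YEARS_PER_LIFE)

importance = 2.0 * math.log10(lives_saved)               # about 12
tractability = 14.0 + 2.0 * math.log10(CHANCE_OF_SUCCESS)  # about 11
neglectedness = 24.0 - 2.0 * math.log10(CURRENT_SPENDING)  # 6
total = importance + tractability + neglectedness

# Cost per life for the rounded total of 29 used in the text.
cost_at_29 = 10.0 ** ((38.0 - 29.0) / 2.0)

print(round(lives_saved))    # 1,000,000 lives
print(round(total))          # 29
print(round(cost_at_29, -2)) # roughly $31,600 per life
```

Under these assumptions the near-term scenario lands within one point of the adjusted estimate from Section 3.1, which is why it does not change the overall ranking.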

- ^

Clasen, M (2019). Imagining the End of the World: A Biocultural Analysis of Post-Apocalyptic Fiction. Available at: https://www.researchgate.net/publication/334203670

- ^

Armitage, E (2025). Preparing for the worst: The irrationality of emotionally recalcitrant reasoning. Available at: https://onlinelibrary.wiley.com/doi/10.1111/sjp.12620

- ^

- ^

Grace, K et al. (2024). Thousands of AI Authors on the Future of AI. Available at: https://aiimpacts.org/wp-content/uploads/2023/04/Thousands_of_AI_authors_on_the_future_of_AI.pdf

- ^

Estimated as of 6th September 2025 - as this figure is increasing rapidly, there is no academic source to quote. Determined from considering direct funding from charitable organisations (McAleese, S (2025). An Overview of the AI Safety Funding Situation. Available at: https://forum.effectivealtruism.org/posts/XdhwXppfqrpPL2Y) as well as money spent by organisations such as Safe Superintelligence Inc.

- ^

Shulman, C & Thornley, E (2023) How much should governments pay to prevent catastrophes? Longtermism’s limited role. Available at: https://philpapers.org/archive/SHUHMS.pdf

- ^

Haner, J & Garcia, D (2019). The Artificial Intelligence Arms Race: Trends and World Leaders in Autonomous Weapons Development. Available at: https://doi.org/10.1111/1758-5899.12713

- ^

Dung, L (2023). Current cases of AI misalignment and their implications for future risks. Available at: https://link.springer.com/article/10.1007/s11229-023-04367-0

- ^

Soueidan, M & Shoghari, R (2024). The Impact of Artificial Intelligence on Job Loss: Risks for Governments. Available at: https://heinonline.org/HOL/LandingPage?handle=hein.journals/techssj57&div=14&id=&page=

- ^

Kusano, K et al. (2025) Comparison of Waymo Rider-Only crash rates by crash type to human benchmarks at 56.7 million miles. Available at: https://doi.org/10.1080/15389588.2025.2499887

- ^

YouGov (2021). Are Brits comfortable with self-driving cars?. Available at: https://yougov.co.uk/travel/articles/35562-car-manufacturers-still-some-way-convincing-brits-

- ^

- ^

- ^

Villalobos, P et al. (2024). Will We Run Out of Data? Limits of LLM Scaling Based on Human-Generated Data. Available at: https://epoch.ai/blog/will-we-run-out-of-data-limits-of-llm-scaling-based-on-human-generated-data

- ^

- ^

Shumailov, I et al. (2023). The Curse of Recursion: Training on Generated Data Makes Models Forget. Available at: https://arxiv.org/abs/2305.17493

- ^

Report from Nidhal Jegham, based on figures from paper How Hungry is AI? Benchmarking Energy, Water, and Carbon Footprint of LLM Inference (2025), available at: https://arxiv.org/pdf/2505.09598v2, first reported in The Guardian, article available at: https://www.theguardian.com/technology/2025/aug/09/open-ai-chat-gpt5-energy-use.

- ^

Touvron, H et al. (2023). Llama 2: Open Foundation and Fine-Tuned Chat Models. Available at: https://arxiv.org/pdf/2307.09288

- ^

Grattafiori, A et al. (2024). The Llama 3 Herd of Models. Available at: https://arxiv.org/abs/2407.21783

- ^

Buntin, M et al. (2011). The Benefits Of Health Information Technology: A Review Of The Recent Literature Shows Predominantly Positive Results. Available at: https://www.healthaffairs.org/doi/10.1377/hlthaff.2011.0178

- ^

Ayres, R (1996). Technology, Progress and Economic Growth. Available at: https://doi.org/10.1016/S0263-2373(96)00053-9

- ^

Vinuesa, R et al. (2019). The role of artificial intelligence in achieving the Sustainable Development Goals. Available at: https://arxiv.org/abs/1905.00501

- ^

Nuth, M (2008). Taking advantage of new technologies: For and against crime. Available at: https://doi.org/10.1016/j.clsr.2008.07.003#

- ^

Office for National Statistics (2025). Crime in England and Wales: year ending March 2025. See Table 1 data available for download at: https://www.ons.gov.uk/peoplepopulationandcommunity/crimeandjustice/bulletins/crimeinenglandandwales/latest