This is a crosspost from https://lydianottingham.substack.com/p/field-notes-from-eag-nyc. Posting because I'd enjoy reading others' EAG(x) takeaways! This one's a case study in 'how much can you get out of the weekend having 20+ 1-1s, mostly research-related?'

The Brittleness Problem

I don’t think I can work on LM behavior much longer. It’s too brittle, fickle, sensitive to hyperparameter perturbations—outputs stochastic in a way even Scherrer et al. can’t resolve. A grad student resonates; that’s why he’s working on knowledge graphs now.

Which Way, Scientist AI?

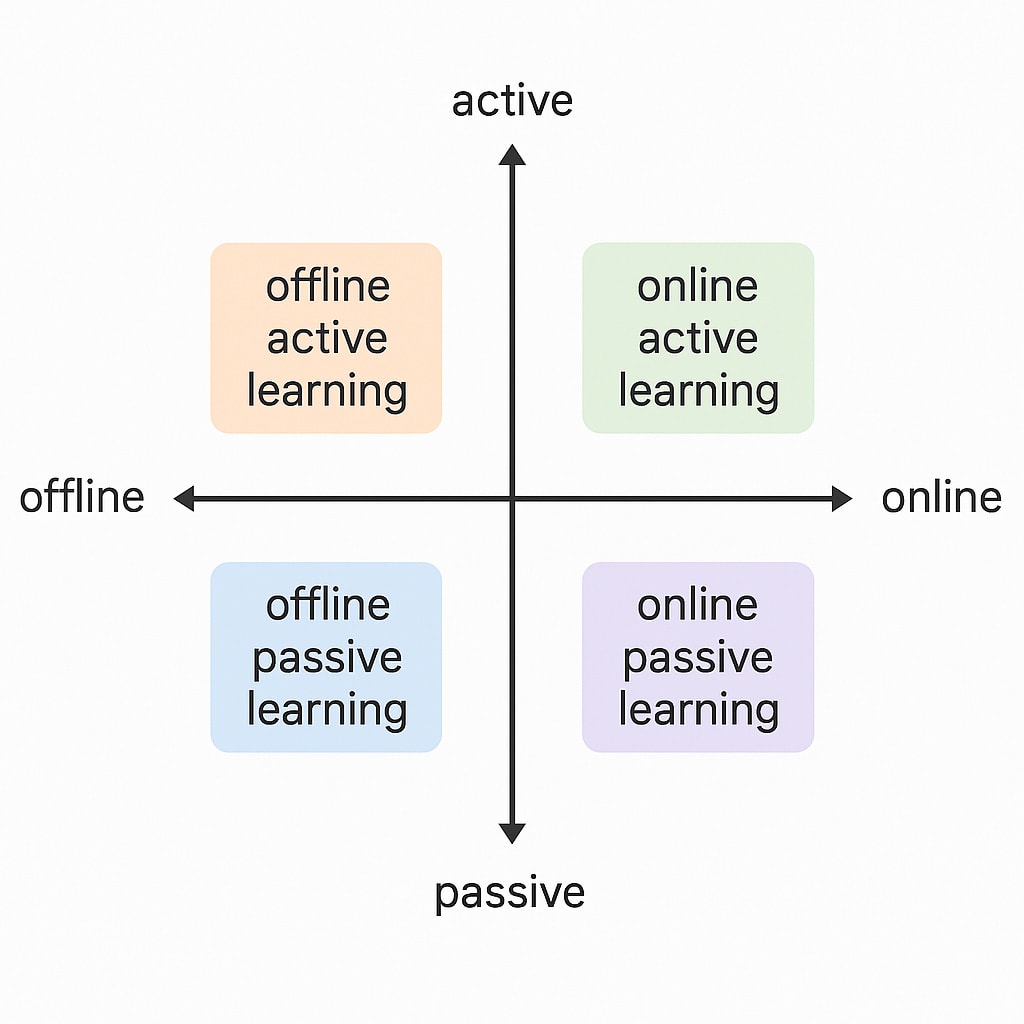

The Scientist AI people don’t seem to have ready answers to “how will Scientist AI expand its database / propose experiments & get them approved?” I perceive two defensible positions here:

1. We can still get almost all the value we’d get from agentic / autonomous AI (scientific breakthroughs, poverty alleviation…) with non-agentic / scientist / oracle AI.

2. We’re going to be leaving a lot of potential value on the table [by refusing agentic / autonomous systems], but we have no choice but to make this sacrifice.

They don’t make clear which position they’re defending.

I of course want to see more people defend 1. But if 2 is our only option (if ‘Scientist AI’ is as far as we can go[1]), then I’m interested in how we can set standards that prepare us for Scientist AI, so it can do its darn best with a semi-static / slow-moving database. Rory says AlphaFold only worked because all protein-folding researchers used the Protein Data Bank in the 1980s-90s, yielding abundant standardized training data. What standards can scientific conferences set today to help non-agentic AI readers?

Some ‘Scientist AI’ co-authors I spoke to hadn’t read “Why Tool AIs Want To Be Agent AIs”, which informs a lot of my intuitions here.

Activation Fatigue

When I discuss activation engineering woes, Linh recommends approaches I wish I had time & headspace to chase down—I need to get into an environment where I can properly try solving the problems I’m working on. Notably, I really need to read up on Latent Adversarial Training.

Steering vectors are not currently deployable in frontier systems.

LM Behavior

I’ve been interested in ‘stated vs. revealed preferences in LMs’ for a while now. Abdur is so quick he runs a follow-up experiment on SvR right after we chat: a model tries to circumvent its max_tokens limit while claiming the limit’s chill. Quite sympathetic behavior. This is a comparatively simple / clean setup vs. Petri and ControlArena, other possible testbeds.

Adam has a neat approach to measuring model behavior and the effects of pro-introspection interventions. If you personally specify the model’s preferences, you have a cleaner baseline vs. working with ‘native’ preferences. Something I should keep in mind on the SvR project.

The CaML people are spreading synthetic pro-compassion data around the internet, & sharing it for free with model developers. It really is such noble, honorable work—though the world where this is counterfactual is not exactly one I want to live in. :)

I’m starting to think the best empirical LM behavior / ‘revealed preference’ analysis will take place over AI labs’ model logs, AEI-style, though we can operate over datasets like LMSYS-Chat-1M in the meantime.

According to Audrey, J-PAL’s evals (on how AI helps coffee farmers etc.) will inform us about philanthropy-counterfactual AI use. For example, they’ve got preliminary results suggesting chat assistants boost entrepreneurs who were already doing well, but may be unhelpful to struggling business owners who defer and dig holes they can’t get out of.

Alignment Roundup

An ex-FHI / CIWG researcher explains struggles doing research that’s both good and easy to fund. “Just write a paper every ~3 months.” Good advice.

AE Studio is one heck of a company—using software consultancy to fund their alignment research. Self-other overlap, energy-based transformers.

Poseidon Research exists! Cryptography, steganography, LM monitoring. It’s now possible to do AIS research in Manhattan at the NYC Impact Hub.

Robotics interpretability is a growing field, with work in progress at PAIR Lab (Yixiong) and Imperial (Ida) on VLAMs like the 7B OpenVLA.

The whole field of making AI science (e.g. jailbreaking) principled seems fun.

Martin would need $20-30k to scale his literature-synthesis, intervention-extracting work from the Alignment Research Dataset ($800) to all of arXiv.

Tyler G’s study list is relatable: control theory, stochastic processes, categorical systems theory, dynamical systems theory, network theory, game theory, decision theory, multiagent modeling, and formal verification. Towards Guaranteed Safe AI addresses agency, unlike Scientist AI. In general, I appreciate papers that address safe design, like Overview of 11 Proposals.

Bandwidth generally high with long-time rationalists like Justin, and yes, ‘stealth advisor’ is a thing you can be.

Will’s post encouraging EAs to ‘make AI go well’ seems like a helpful summary of the recent zeitgeist around me. Notably, ETAI had this flavor.

Podcasting Tips

I hear all about podcast design from Angela! For context, I’m thinking of starting a podcast in Oxford, mapping the work of PIs and grad students in AI, neurotech, matsci, etc. onto Gap Map. Apparently Oxford Public Philosophy are a strong local podcast.

Angela’s advice for interviewing guests is this:

- Watch their other episodes to see what they’ve spoken about before—get them off-script

- Ask about a moment when they experienced a paradigm shift / went into work believing one thing, then something changed

- Prepare 10-20 questions, including ideas for what you’ll do if things don’t go to plan

- Give them an idea before the episode starts of what you’re planning to do, where you’re planning to go with the episode, and what you’re hoping you, they, & the audience get out of it

Misc. current musings:

- How meaningful are LM outputs produced under the coercion of structured decoding / strict token limits?

- After all the spiking neurons / predictive coding / … ‘neuromorphic AI’ work, are there any lessons we can apply from AI back to neuro (e.g. representation engineering ⇒ neuromodulation)?

- Perhaps there should be a tiny research / forecasting hackathon dedicated to “what are SSI doing, and how can the safety community best respond [to their likely strategy / this flavor of development]?”

    - Can we track what they’re doing through their hardware orders?

- The map & the territory…the strings & the world-states.

- Reasoning mode / CoT gives a janky, goodharty increase in power + coherence, not a robust one. & the history of ML shows janky workarounds get subsumed by elegant, clean, simple replacements…

- ‘Comparative advantage for skeptics’, anyone?

Things I want to read pretty soon:

- GFlowNets

- FHI on infohazards, infinite ethics, & everything else

    - We now have an FHI-inspired working group in SF/Berkeley! Lmk if interested :)

- As always, everything under ‘Read Later!’ But I’m going to introduce two new tags: one ‘skimmed, deprioritized’ and the other ‘read it, made updates, wrote about them, cleared cache’. It will be good.

Read some Flatland on the plane.

‘Choose-your-own-adventure’ is the right mindset for EAG! I can’t wait for next year :)

With huge appreciation to Iggy, who kindly hosted me after briefly meeting at Memoria, & as ever, to the EAG Team!

[1] Notwithstanding the cat already being out of the bag wrt agentic systems.