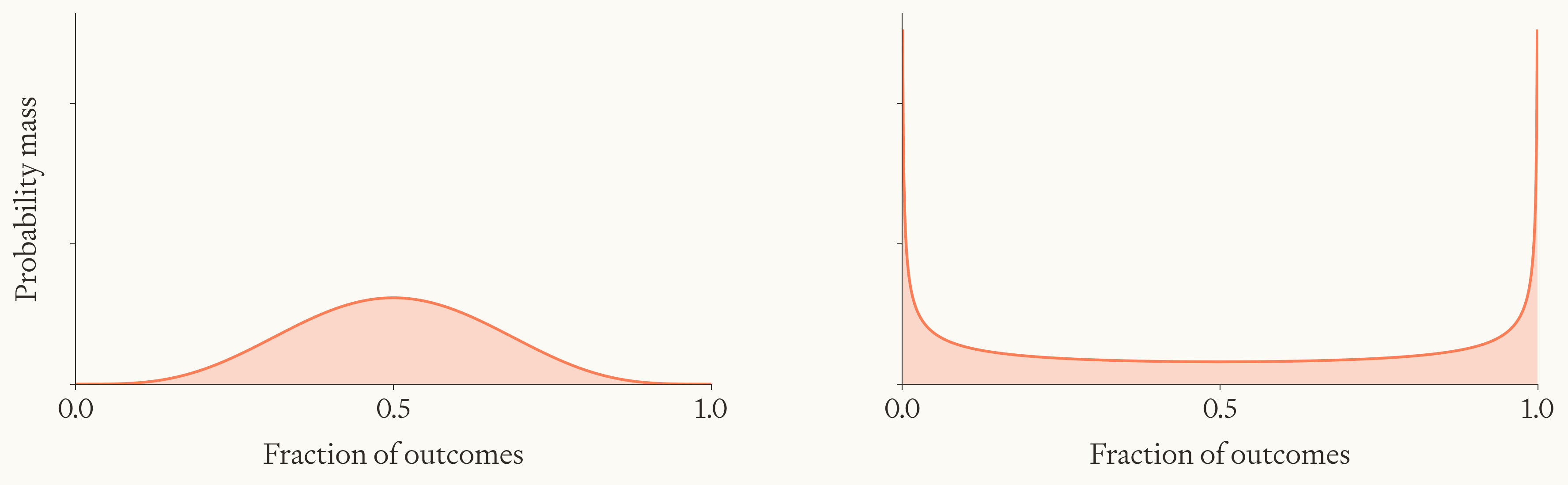

Suppose you think that the chance that humanity flourishes is 10%. That could be because flourishing is responsive to actions people take today, and hence — not knowing what actions people will take — properly chancy.

Instead, it could be because you’re about 90% sure that we’re effectively doomed, and otherwise pretty sure that flourishing is basically guaranteed. In other words, flourishing might be predetermined either way, and therefore more difficult to influence.
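To make the intuition concrete, here is a minimal Python sketch. It uses a toy model of my own, not the article's model. The assumption is that an intervention shifts the log-odds of flourishing by a small fixed amount `DELTA`, so its effect on the probability is roughly `DELTA * p * (1 - p)`. The sketch compares two cases that both average to 10%. The first is a genuinely chancy 10%. The second is a 90/10 split between near-doom (1%) and near-guarantee (91%). All names and numbers are hypothetical.

```python
import math


def logit(p: float) -> float:
    """Log-odds of probability p."""
    return math.log(p / (1 - p))


def sigmoid(x: float) -> float:
    """Inverse of logit."""
    return 1 / (1 + math.exp(-x))


def gain(p: float, delta: float) -> float:
    """Increase in P(flourish) from shifting its log-odds by delta (assumed toy model)."""
    return sigmoid(logit(p) + delta) - p


def expected_gain(scenarios, delta: float) -> float:
    """scenarios: list of (credence, default probability of flourishing) pairs."""
    return sum(w * gain(p, delta) for w, p in scenarios)


DELTA = 0.1  # hypothetical intervention size, in log-odds

# Case 1: flourishing is properly chancy at 10%.
certain = [(1.0, 0.10)]
# Case 2: 90% credence we're nearly doomed (1%), 10% credence it's nearly guaranteed (91%).
split = [(0.9, 0.01), (0.1, 0.91)]

mean_certain = sum(w * p for w, p in certain)
mean_split = sum(w * p for w, p in split)
g1 = expected_gain(certain, DELTA)
g2 = expected_gain(split, DELTA)
print(f"mean P(flourish): {mean_certain:.2f} vs {mean_split:.2f}")
print(f"expected gain: {g1:.5f} vs {g2:.5f} (ratio {g2 / g1:.2f})")
```

Under these made-up numbers the split case yields well under half the gain of the chancy case, even though the overall probability is the same. The article's own estimate (about half) comes from its own model and numbers, which this sketch does not reproduce.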

I develop a model of this intuition and test how strong the effect is. On my numbers, accounting for our wide uncertainty about the default likelihood of flourishing makes flourishing-focused interventions look about half as promising as before. That’s not a game-changing difference given all our other uncertainties, but it’s still meaningful.

The model suggests two rules of thumb that apply more generally. First, when working on one factor among several that multiply, act as if you’re optimistic about the others. Second, all else equal, act on problems whose true difficulty you know better.
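The first rule of thumb can be illustrated with a toy calculation. The Python sketch below rests on assumptions of my own, not the article's model. P(flourish) is the product of two factors, `f_a` and `f_b`. We can act only on `f_a`, and two hypothetical strategies, `X` and `Y`, pay off in different worlds. The gain from improving `f_a` is scaled by `f_b`. So ranking strategies by their true value gives the same result as ranking them under credences reweighted toward worlds where `f_b` goes well. In other words, you act as if you were optimistic about the other factor.

```python
# Hypothetical toy model (illustrative numbers only):
# P(flourish) = f_a * f_b, and we can only act on factor f_a.
# Each world: (credence, value of f_b there, {strategy: increase in f_a}).
worlds = [
    (0.7, 0.05, {"X": 0.2, "Y": 0.0}),  # world where factor B goes badly
    (0.3, 0.80, {"X": 0.0, "Y": 0.1}),  # world where factor B goes well
]
strategies = ["X", "Y"]


def naive_value(s: str) -> float:
    """Expected increase in f_a alone, ignoring factor B."""
    return sum(w * gains[s] for w, _, gains in worlds)


def true_value(s: str) -> float:
    """Expected increase in P(flourish) = f_a * f_b."""
    return sum(w * f_b * gains[s] for w, f_b, gains in worlds)


# "Optimistic" credences: reweight each world by how well factor B goes there.
norm = sum(w * f_b for w, f_b, _ in worlds)
optimistic = [w * f_b / norm for w, f_b, _ in worlds]


def optimistic_value(s: str) -> float:
    """Expected increase in f_a under the reweighted credences."""
    return sum(q * gains[s] for q, (_, _, gains) in zip(optimistic, worlds))


best_naive = max(strategies, key=naive_value)
best_true = max(strategies, key=true_value)
best_optimistic = max(strategies, key=optimistic_value)
print(f"naive: {best_naive}, true: {best_true}, optimistic: {best_optimistic}")
print(f"credence in the good-B world: {worlds[1][0]:.2f} -> {optimistic[1]:.2f}")
```

For every strategy, `true_value(s)` equals `norm * optimistic_value(s)`. The optimistic credences therefore always rank strategies the same way the true objective does, and here both pick `Y`. The naive ranking ignores `f_b` and picks `X` instead.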

The original idea is due to Owen Cotton-Barratt, and the formal model is due to Carlo Leonardo Attubato. Major credit also goes to the many people who elaborated on these ideas as part of a work trial.

You can read the full article on the Forethought website.

I think your formulation is elegant, but the real possibilities are lumpier and span many more orders of magnitude (OOMs). Here's a modified version of a comment on a similar idea:

I think there would be some probability mass on technological stagnation and population decline, though even then the cumulative number of lives would be much larger than the number alive today. Then there would be some mass on maintaining something like 10 billion people for a billion years (no AI, staying on Earth either by choice or for technical reasons). Then there would be AI building a Dyson swarm but, either for technical reasons or because of a high discount rate, not going to other stars. Then there would be AI settling the galaxy but, again for technical reasons or because of the discount rate, not going to other galaxies. Then there would be settling many galaxies. Then, 30 OOMs to the right, there could be another high-slope region corresponding to aestivation. And there could be more intermediate states corresponding to various scales of space settlement by biological humans. Even if you ignore the technical barriers, there are still many different levels of scale we could choose to end up at. Even if you think the probability should be smoothed because of uncertainties, there are still something like 60 OOMs between survival of biological humans on Earth and digital aestivation.

Or are you collapsing all that and just looking at welfare regardless of scale? Even welfare could span many OOMs.