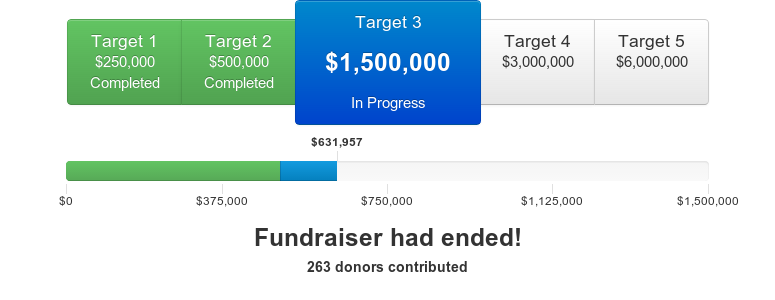

Our summer fundraising drive is now finished. We raised a grand total of $631,957 from 263 donors. This is an incredible sum, making this the biggest fundraiser we’ve ever run.

We've already been hard at work growing our research team and spinning up new projects, and I’m excited to see what the team can do this year. Thank you to all our supporters for making our summer fundraising drive so successful!

The Machine Intelligence Research Institute (MIRI) is a research nonprofit that works on technical obstacles to designing beneficial smarter-than-human artificial intelligence (AI). I'm MIRI's Executive Director, Nate Soares, and I'm here to announce our 2015 Summer Fundraiser!


This is a critical moment in the field of AI, and we think that AI is a critical field. Science and technology are responsible for the largest changes in human and animal welfare, both for the better and for the worse, and science and technology are both products of human intelligence. If AI technologies can match or exceed humans in intelligence, then human progress could be accelerated greatly, or cut short prematurely if we use this new technology unwisely. MIRI's goal is to identify and contribute to technical work that can help us attain those upsides while navigating the risks.

We're currently scaling up our research efforts at MIRI and recruiting aggressively. We will soon be unable to hire more researchers or take on more projects unless we can secure more funding. In the interest of helping you make an informed decision about how you’d like MIRI to develop, we're treating this fundraiser as an opportunity to explain why we're doing what we're doing, and what sorts of activities we could carry out at various funding levels. This post is a quick summary of those points, along with some of the reasons why I think donating to MIRI is currently a very highly leveraged way to do good.

Below, I’ll talk briefly about why we care about AI, why now is a critical moment for MIRI and the wider field, and what specific plans we could execute on with more funding.

Why care about artificial intelligence?

"Intelligence" (and therefore "artificial intelligence") is a vague notion, and difficult to pin down. Instead of trying, I’ll just gesture at the human ability to acquire skills, invent science, and deliberate (as opposed to our ability to carry things, or our fine motor control) and say "that sort of thing."

The field of artificial intelligence aims to automate the many varied abilities we lump under the label "intelligence." Because those abilities are what allow us to innovate scientifically, technologically, and socially, AI systems will eventually yield faster methods for curing disease, improving economies, and saving lives.

If the field of AI continues to advance, most top researchers in AI expect that software will start to rival and surpass human intelligence across the board sometime this century. Yet relatively little work has gone into figuring out what technical insights we'll need in order to align smarter-than-human systems with our interests. If we continue to prioritize capabilities research to the exclusion of alignment research, there are a number of reasons to expect bad outcomes:

1. In the absence of a mature understanding of AI alignment, misspecified goals are likely. The trouble is not that machines might "turn evil"; the trouble is that computers do exactly what you program them to do. A sufficiently intelligent system built to execute plans that it predicts will lead to a cancer cure soon may well deduce that the most effective way to ensure a cure is found is to kidnap humans for experimentation, while resisting efforts to shut it down and creating backups of itself. This sort of problem could plague almost any sufficiently autonomous agent using sufficiently powerful search processes: programming machines to do what we meant is a difficult task.

2. Artificially intelligent systems could eventually become significantly more intelligent than humans, and use that intelligence to gain a technological or strategic advantage. In this case, misaligned goals could be catastrophic. Imagine, for example, the cancer-curing system stripping the planet of resources to turn everything it can into computing substrate, which it uses to better understand protein dynamics.

3. The advent of superintelligent machines might come very quickly on the heels of human-level machine intelligence: AI funding could spike as human-level AI draws closer; or cascades of relevant insights may chain together; or sufficiently advanced AI systems might be used to advance AI research further, resulting in a feedback loop. Given that software reliability and AI system safety are probably not features that can be bolted on at the last minute, this implies that by the time superintelligent machines look imminent, we may not have sufficient time to prepare.

These claims and others are explored in more depth in Nick Bostrom's book Superintelligence, and also in our new FAQ.

If we do successfully navigate these risks, then we could see extraordinary benefits: automating scientific and technological progress means fast-tracking solutions to humanity's largest problems. This combination of large risks and huge benefits makes the field of AI a very important lever for improving the welfare of sentient beings.

Why now is a critical moment for MIRI and the field of AI

2015 has been an astounding year for AI safety engineering.

In January, the Future of Life Institute brought together the leading organizations studying long-term AI risk (MIRI, FHI, CSER) along with top AI researchers in academia (such as Stuart Russell, co-author of the leading AI textbook; Tom Dietterich, president of AAAI; and Francesca Rossi, president of IJCAI) and representatives from industry AI teams (Google DeepMind and Vicarious) for a "Future of AI" conference in San Juan, Puerto Rico. In the ensuing weeks and months, we've seen:

- a widely endorsed open letter based on conclusions from the Puerto Rico conference, including an accompanying research priorities document which draws heavily on MIRI's work.

- a grants program, funded by $10M from Elon Musk and $1.2M from the Open Philanthropy Project, aimed at jump-starting the field of long-term AI safety research. (MIRI researchers received funding from four different grants, two as principal investigator.)

- the announcement of the first-ever workshops and discussions about AI safety and ethics at the top AI and machine learning conferences, AAAI, IJCAI, and NIPS. (We presented a paper at the AAAI workshop, and we'll be at NIPS.)

- public statements by Bill Gates, Steve Wozniak, Sam Altman, and others warning of the hazards of increasingly capable AI systems.

- a panel discussion on superintelligence at ITIF, the leading U.S. science and technology think tank. (Stuart Russell and I spoke on the panel, among others.)

This is quite a shift for a public safety issue that was nearly invisible in most conversations about AI a year or two ago.

However, discussion of this new concern is still preliminary. It’s still possible that this new momentum will dissipate over the next few years, or be spent purely on short-term projects (such as making drones and driverless cars safer) without much concern for longer-term issues. Time is of the essence if we want to build on these early discussions and move toward a solid formal understanding of the challenges ahead — and MIRI is well-positioned to do so, especially if we start scaling up now and building more bridges into established academia. For these reasons, among others, my expectation is that donations to MIRI today will go much further than donations several years down the line.

MIRI is in an unusually good position to help jump-start research on AI alignment; we have a developed research agenda already in hand and years of experience thinking about the relevant technical and strategic issues, which gives us a unique opportunity to shape the research priorities and methodologies of this new paradigm in AI.

Our technical agenda papers provide a good overview of MIRI's research focus. We consider the open problems we are working on to be of high direct value, and we also see working on these issues as useful for attracting more mathematicians and computer scientists to this general subject, and for grounding discussions of long-term AI risks and benefits in technical results rather than in intuition alone.

What MIRI could do with more funds

Over the past twelve months, MIRI's research team has had a busy schedule: we've been running workshops, attending conferences, visiting industry AI teams, collaborating with outside researchers, and recruiting. We've done all this while also producing novel research. Last year, we published five papers at four conferences and wrote two more that have been accepted for publication over the next few months. We've also written around ten new technical reports and posted a host of new preliminary results to our research forum.

That’s what we've been able to do with a three-person research team. With a larger team, we could make progress much more rapidly: we'd be able to have each researcher spend larger blocks of time on uninterrupted research, we'd be able to run more workshops and engage with more interested mathematicians, and we'd be able to build many more collaborations with academia. However, our growth is limited by how much funding we have available. Our plans can scale up in very different ways depending on which of these funding targets we are able to hit:

Target 1 — $250k: Continued growth. At this level, we would have enough funds to maintain a twelve-month runway while continuing all current operations. We will also be able to scale the research team up by one to three additional researchers, on top of our three current researchers and two new researchers who are starting this summer. This would ensure that we have the funding to hire the most promising researchers who come out of our MIRI Summer Fellows Program and our ongoing summer workshop series.

Target 2 — $500k: Accelerated growth. At this funding level, we could grow our team more aggressively, while maintaining a twelve-month runway. We would have the funds to expand the research team to ten core researchers over the course of the year, while also taking on a number of exciting side-projects, such as hiring one or two type theorists. Recruiting specialists in type theory, a field at the intersection of computer science and mathematics, would enable us to develop tools and code that we think are important for studying verification and reflection in artificial reasoners.

Target 3 — $1.5M: Taking MIRI to the next level. At this funding level, we would start reaching beyond the small but dedicated community of mathematicians and computer scientists who are already interested in MIRI's work. We'd hire a research steward to spend significant time recruiting top mathematicians from around the world, we'd make our job offerings more competitive, and we’d focus on hiring highly qualified specialists in relevant areas of mathematics. This would allow us to grow the research team as fast as is sustainable, while maintaining a twelve-month runway.

Target 4 — $3M: Bolstering our fundamentals. At this level of funding, we'd start shoring up our basic operations. We'd spend resources and experiment to figure out how to build the most effective research team we can. We'd upgrade our equipment and online resources. We'd branch out into additional high-value projects outside the scope of our core research program, such as hosting specialized conferences and retreats and running programming tournaments to spread interest about certain open problems. At this level of funding we'd also start extending our runway, and prepare for sustained aggressive growth over the coming years.

Target 5 — $6M: A new MIRI. At this point, MIRI would become a qualitatively different organization. With this level of funding, we would be able to begin building an entirely new AI alignment research team working in parallel to our current team. Our current technical agenda is not the only approach available, and we would be thrilled to spark a second research team approaching these problems from another angle.

I'm excited to see what happens when we lay out the available possibilities (some of them quite ambitious) and let you all collectively decide how quickly we develop as an organization. We are not doing a matching fundraiser this year: large donors who would normally contribute matching funds are donating to the fundraiser proper.

We have plans that extend beyond the $6M level: for more information, shoot me an email at nate@intelligence.org. I also invite you to email me with general questions or to set up a time to chat.

Addressing questions

That was a quick overview: I can say much more about everything I touched on, and in fact we're planning to elaborate on many of these points between now and the end of the fundraiser. We'll be using this five-week period as an opportunity to explain our current research program and our plans for the future. If you have any questions about what MIRI does and why, email them to rob@intelligence.org; we'll be posting answers to the MIRI blog every Monday and Friday.

- July 1 — Grants and Fundraisers. Why we've decided to experiment with a multi-target fundraiser.

- July 16 — An Astounding Year. Recent successes for MIRI, and for the larger field of AI safety.

- July 18 — Targets 1 and 2: Growing MIRI. MIRI's plans if we hit the $250k or $500k funding target.

- July 20 — Why Now Matters. Two reasons to give now rather than further down the line.

- July 24 — Four Background Claims. Core assumptions behind MIRI's strategy.

- July 27 — MIRI's Approach. How we identify technical problems to work on.

- July 31 — MIRI FAQ. Summarizing some common sources of misunderstanding.

- August 3 — When AI Accelerates AI. Some reasons to get started on safety work early.

- August 7 — Target 3: Taking It To The Next Level. MIRI's plans if we hit the $1.5M funding target.

- August 10 — Assessing Our Past And Potential Impact. Why expect MIRI in particular to make a difference?

- August 14 — What Sets MIRI Apart? Distinguishing MIRI from groups in academia and industry.

- August 18 — Powerful Planners, Not Sentient Software. Why advanced AI isn't "evil robots."

- August 28 — AI and Effective Altruism. On MIRI's role in the EA community.

You can also check out questions I've answered on my Effective Altruism Forum AMA. Our hope is that these new resources will let you learn about our activities and strategic outlook, and that this will help you make more informed decisions during our fundraiser.

Regardless of where you decide to donate this year, know that you have my respect and admiration for caring about the state of the world, thinking hard about how to improve it, and then putting your actions where your thoughts are. Thank you all for being effective altruists.

-Nate
