Summary

Neartermist[1] funders should factor AGI timelines into their cost-effectiveness analyses and possibly prepare to spend all their money quickly in light of an AGI arrival ‘heads-up’. Of two types of intervention, those that produce value over a short period and those that produce value over a long period, factoring in AGI timelines pushes towards marginally increasing spending on the former.

Main text

Suppose for simplicity there are two types of interventions that neartermists can spend money on:

- 'Fast' interventions have an immediate effect but little to no flow-through effect beyond the period in which they are applied. Examples could include:

  - providing pain relief medicines

  - paying people to eat fewer animal products (who then revert to their previous diet once the intervention ends)

- 'Slow' interventions help in the first year of implementation and continue producing value for many years afterwards. Examples could include:

  - improving someone’s education, which improves their future earnings

  - improving animal welfare laws

Most of the most cost-effective neartermist interventions are slow interventions.

Suppose we only considered the benefit from the first year of each intervention. Then it seems plausible that some fast interventions would be more cost-effective than the most cost-effective slow interventions. If this were not the case, it would be a surprising and suspicious convergence.

Now suppose we knew AGI was one year away and that the slow interventions have no flow-through effects after AGI arrives[2]. Then given the choice between spending on slow or fast interventions, we should spend our money entirely on the latter. At some point, we may be in this epistemic state.

However, we may never be in an epistemic state where we know AGI is one year away. Even so, we should spend marginally more on fast interventions if we are 50% sure AGI will arrive next year than if we are 10% sure AGI will arrive next year.

In both cases, AGI timeline considerations effectively discount the slow interventions[3]. With sufficient AGI-implied discounting, fast interventions can become the most cost-effective.
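To make the discounting concrete, here is a minimal sketch under assumptions that are mine rather than the model's: a constant annual probability of AGI arriving, a slow intervention whose yearly benefit decays geometrically, and no benefit from either intervention after AGI arrives. The function names and all numbers are hypothetical.

```python
def slow_value(first_year_benefit, washout, p_agi, horizon=200):
    """Expected total benefit of a slow intervention.

    Benefit in year n is first_year_benefit * (1 - washout)**n and is only
    counted if AGI has not arrived before year n, which has probability
    (1 - p_agi)**n under a constant annual hazard (an assumption).
    """
    return sum(
        first_year_benefit * ((1 - washout) * (1 - p_agi)) ** n
        for n in range(horizon)
    )


def fast_value(first_year_benefit):
    """A fast intervention only pays off in its first year."""
    return first_year_benefit


# Hypothetical numbers: the fast intervention is 5x better in year one.
fast = fast_value(5.0)
for p_agi in (0.0, 0.1, 0.5):
    slow = slow_value(1.0, washout=0.05, p_agi=p_agi)
    best = "fast" if fast > slow else "slow"
    print(f"P(AGI per year)={p_agi:.1f}: slow={slow:.2f}, fast={fast:.2f} -> {best}")
```

With these illustrative inputs the slow intervention wins when the annual AGI probability is low, and the fast one wins once that probability is high enough.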

Simple optimal control model

I have created a simple optimal control[4] model that I describe in the appendix, and that you can play with here[5]. The model takes the following parameters:

- The real interest rate on capital

- The diminishing returns to spending on each of slow & fast interventions each year

- The ratio of (benefit in the first year of the best fast intervention per cost) to (benefit in the first year of the best slow intervention per cost)

- The rate at which slow interventions ‘wash out’ (alternatively framed as the degree to which their benefit is spread out over time). [Fast interventions are only beneficial in the first year by assumption].

- AGI timelines

- The probability that at some point we have a one-year[6] ‘heads-up’ on the arrival of AGI and so spend all our money on fast interventions

- A non-AGI discount rate to account for non-AGI existential risks, philanthropic opportunities drying up, etc

The model returns the optimal spending on fast interventions and on slow interventions each year.

Example model results

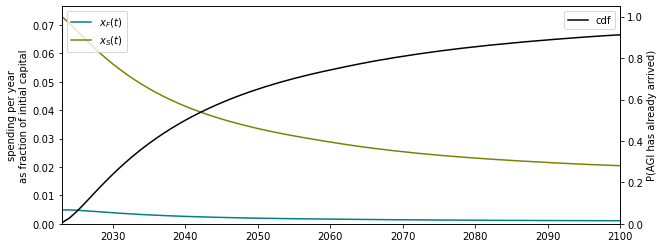

Example 1

The example above has AGI timelines of (50% by 2040, 75% by 2061). The best fast interventions have 5x more impact in the first year than slow interventions, but 0.25x the impact over the long run (modulo AGI timelines & discounting). The growth in our capital is 5% and there is a 3% discount rate. The diminishing returns to spending on fast and slow interventions are equal. We have a 50% chance of a one-year AGI arrival heads-up.

Some of the spending on fast interventions is simply due to having already bought all the low-hanging slow interventions, and some is due to the AGI discounting effect.

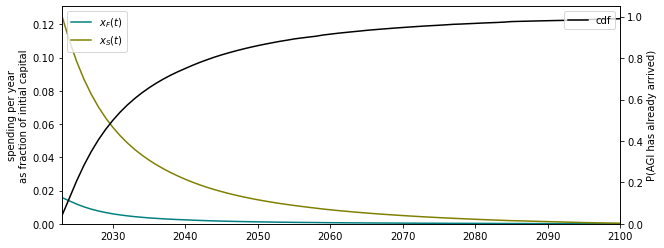

Example 2

In this example, the only change from the previous one is shorter timelines, which are now (50% by 2030, 75% by 2040). All other parameters are the same. Unsurprisingly, there is greater spending on fast interventions than in the previous example.
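Timelines stated as two quantiles, as in the examples above, have to be turned into a full distribution before they can be fed into a model. A minimal sketch of one way to do that, assuming a log-normal distribution over the number of years until AGI and a base year of 2022. Both the distributional form and the base year are my assumptions and are not taken from the model itself.

```python
import math
from statistics import NormalDist


def fit_lognormal(base_year, median_year, q75_year):
    """Fit a log-normal over 'years until AGI' to a median and a 75th percentile."""
    mu = math.log(median_year - base_year)
    z75 = NormalDist().inv_cdf(0.75)
    sigma = (math.log(q75_year - base_year) - mu) / z75
    return mu, sigma


def prob_agi_by(year, base_year, mu, sigma):
    """Cumulative probability that AGI has arrived by the given year."""
    years = year - base_year
    if years <= 0:
        return 0.0
    return NormalDist(mu, sigma).cdf(math.log(years))


BASE_YEAR = 2022  # assumption: the starting year is not stated in the post
for label, median, q75 in [("Example 1", 2040, 2061), ("Example 2", 2030, 2040)]:
    mu, sigma = fit_lognormal(BASE_YEAR, median, q75)
    p = prob_agi_by(BASE_YEAR + 1, BASE_YEAR, mu, sigma)
    print(f"{label}: P(AGI within one year) = {p:.4f}")
```

Under this fit, the shorter timelines of the second example put noticeably more probability on AGI arriving in the first year, which is what drives the extra spending on fast interventions.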

Not shown in either graph is the spending on fast interventions that can occur if we get an AGI heads-up.

Takeaways

I weakly endorse the claims that:

- Neartermist funders, if they do not already, should consider the AGI implied discount rate in cost effectiveness analyses of ‘slow’ interventions.

- Neartermist funders should marginally speed up their spending in light of their AGI timelines (relative to a spending schedule that did not incorporate AGI timelines).

- Neartermist funders, if they do not already, should consider funding ‘fast’ interventions and potentially building the infrastructure to spend on fast interventions in case of an AGI ‘heads-up’.

Acknowledgements

This project has spun out from a larger ongoing project with Guillaume Corlouer on the optimal spending schedule for AI safety interventions. I’m grateful to Tom Barnes and Nicolas Macé for discussion and comments.

Appendix

The model

I track our spending on fast interventions and our spending on slow interventions at each time t. Our total money earns real interest, so the time derivative of our money is the interest earned minus our total spending on both types of intervention.

I also track the stock of slow interventions that have been implemented. This stock grows with spending on slow interventions, and I suppose it depreciates over time due to washing-out-like effects. Its time derivative is the returns to spending per unit time on slow interventions minus depreciation of the existing stock at a constant rate.
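The two equations of motion described above can be sketched numerically. This is a rough illustration rather than the model's actual implementation: the power-law form of the returns to slow spending, the fixed-fraction spending rule, and every name and number below are my assumptions.

```python
def simulate(money, r, eta_slow, delta, fast_frac, slow_frac, years, dt=0.01):
    """Euler-integrate the money and the stock of slow interventions.

    Assumed equations of motion (one reading of the description, not
    necessarily the author's exact form):
        dM/dt = r * M - f - s
        dS/dt = s ** eta_slow - delta * S
    where f and s are spending rates, here fixed fractions of current money.
    """
    stock = 0.0
    for _ in range(round(years / dt)):
        f = fast_frac * money
        s = slow_frac * money
        d_money = r * money - f - s
        d_stock = s ** eta_slow - delta * stock
        money += d_money * dt
        stock += d_stock * dt
    return money, stock


# Hypothetical schedule: spend exactly the interest, split 2% fast / 3% slow.
money_end, stock_end = simulate(
    money=1.0, r=0.05, eta_slow=0.5, delta=0.1,
    fast_frac=0.02, slow_frac=0.03, years=30,
)
print(f"money after 30 years: {money_end:.3f}, slow stock: {stock_end:.3f}")
```

Because this schedule spends exactly the interest earned, the money stays flat while the slow stock climbs towards the level at which new spending just offsets depreciation.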

The utility of a given spending schedule (given by the fast and slow spending paths) is then an integral over time of the benefit produced at each moment.

I also add an extra term to the integrand to account for the fact that we may have a one-year ‘heads-up’ and so spend all our money on fast interventions.

I further multiply both terms in the integrand by a discount factor set by an exogenous discount rate.
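For readers who prefer symbols, here is one way the model described above might be written out. Every symbol and functional form is an assumption of mine: $f(t)$ and $s(t)$ are spending on fast and slow interventions, $M(t)$ is money, $r$ the real interest rate, $S(t)$ the stock of slow interventions, $\eta_f$ and $\eta_s$ the returns to spending, $\delta$ the depreciation rate, $k$ the first-year benefit ratio of fast to slow interventions, $F(t)$ and $p(t)$ the cumulative probability and density of AGI arrival, $q$ the probability of a heads-up, and $\rho$ the exogenous discount rate. The exact timing of the heads-up relative to AGI arrival is glossed over.

```latex
\begin{align}
\dot{M}(t) &= r M(t) - f(t) - s(t) \\
\dot{S}(t) &= s(t)^{\eta_s} - \delta S(t) \\
U &= \int_0^\infty e^{-\rho t} \Big[ \big(1 - F(t)\big)\big(k\, f(t)^{\eta_f} + S(t)\big)
     + q\, p(t)\, k\, M(t)^{\eta_f} \Big] \, dt
\end{align}
```

The first term in the integrand counts benefits only while AGI has not yet arrived, and the second is the heads-up term, in which all remaining money is spent on fast interventions.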

Model limitations

- My implementation of the model rescales the AGI timeline PDF such that the optimization is over a finite horizon. This leads to a discontinuity in the PDF.

- I applied a slapdash approach to the exogenous discount rate; a better formulation could be used.

- The model assumes that the opportunities to do good remain constant over time and that the returns to spending are scale invariant.

[1] I mean “people who work on or donate to global health or animal welfare issues” rather than any philosophical position. This does not, for example, include philosophical neartermists who work on existential risks for reasons like those given here.

[2] Potentially because we are all living in a post-scarcity world or are all dead.

[3] Discussed here

[4] This type of model has been used to model spending by Philip Trammell and to model spending and community growth by Philip Trammell and Nuño Sempere.

[5] The model is not very robust and is sensitive to its inputs. I’ve created it for illustration rather than decision making. Apologies in advance if you have trouble getting it to work!

[6] For simplicity I’ve fixed the heads-up at one year, though the model could be adapted to allow an arbitrary heads-up time.

Surely most neartermist funders think that the probability that we get transformative AGI this century is low enough that it doesn't have a big impact on calculations like the ones you describe?

There are a couple views by which neartermism is still worthwhile even if there's a large chance (like 50%) that we get AGI soon -- maybe you think neartermism is useful as a means to build the capacity and reputation of EA (so that it can ultimately make AI safety progress), or maybe you think that AGI is a huge problem but there's absolutely nothing we can do about it. But these views are kinda shaky IMO.

The idea that a neartermist funder becomes convinced that world-transformative AGI is right around the corner, and then takes action by dumping all their money into fast-acting welfare enhancements, instead of trying to prepare for or influence the immense changes that will shortly occur, almost seems like parody. See for instance the concept of "ultra-neartermism": https://forum.effectivealtruism.org/posts/LSxNfH9KbettkeHHu/ultra-near-termism-literally-an-idea-whose-time-has-come

This is not obvious to me; I'd guess that for a lot of people, AGI and global health live in separate magisteria, or they're not working on alignment due to perceived tractability.

This could be tested with a survey.

I agree with Thomas Kwa on this

I think neartermist causes are worthwhile in their own right, but think some interventions are less exciting when (in my mind) most of the benefits are on track to come after AGI.

Fair enough. My prediction is that the idea will become more palatable over time as we get closer to AGI in the next few years. Even if there is a small chance we have the opportunity to do this, I think it could be worthwhile to think further given the amount of money earmarked for spending on neartermist causes.

Thanks for writing this!

Conditional on an aligned superintelligence appearing within a short time, there could be interventions that prevent or delay deaths until it appears; these would probably save those lives and so have a lot of value, though it's hard to come up with concrete examples. Speculating without thinking about the actual costs, some candidates: providing HIV therapy that’s possibly not cost-effective if you do it for a lifetime but is cost-effective if you do it for a year, freezing people when they die, or providing mosquito nets that stop working after a year but are a lot cheaper.