Consciousness in Artificial Intelligence: Insights from the Science of Consciousness

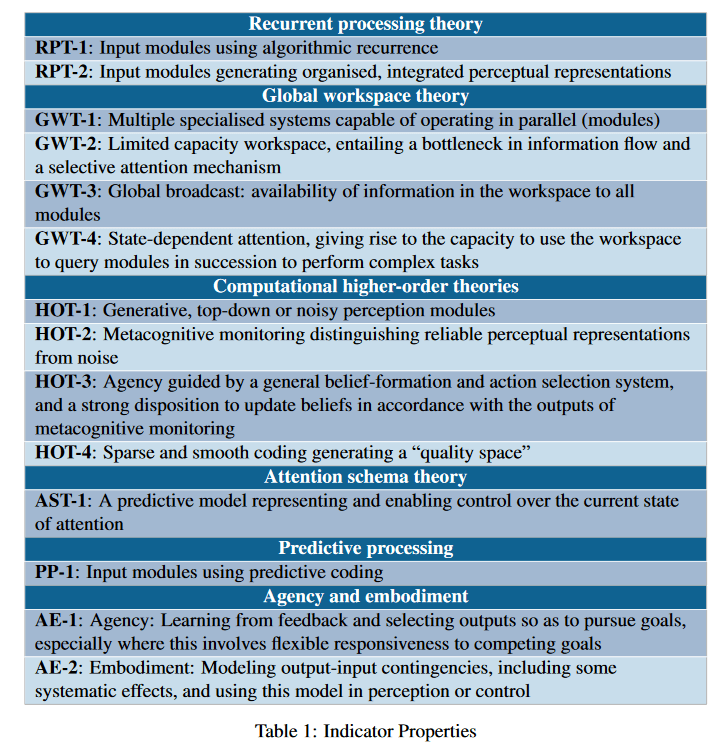

Patrick Butlin and Robert Long work with 18 neuroscientists, AI researchers, and philosophers to develop a framework for assessing whether AI systems are conscious. They survey several neuroscientific theories of consciousness and what each might imply about which artificial systems could be conscious. They adopt computational functionalism as a working assumption: the view that an artificial system sharing the computational features that make biological systems conscious would also be conscious. Under this assumption, each neuroscientific theory can be read as proposing conditions that would be sufficient for consciousness in an AI system.

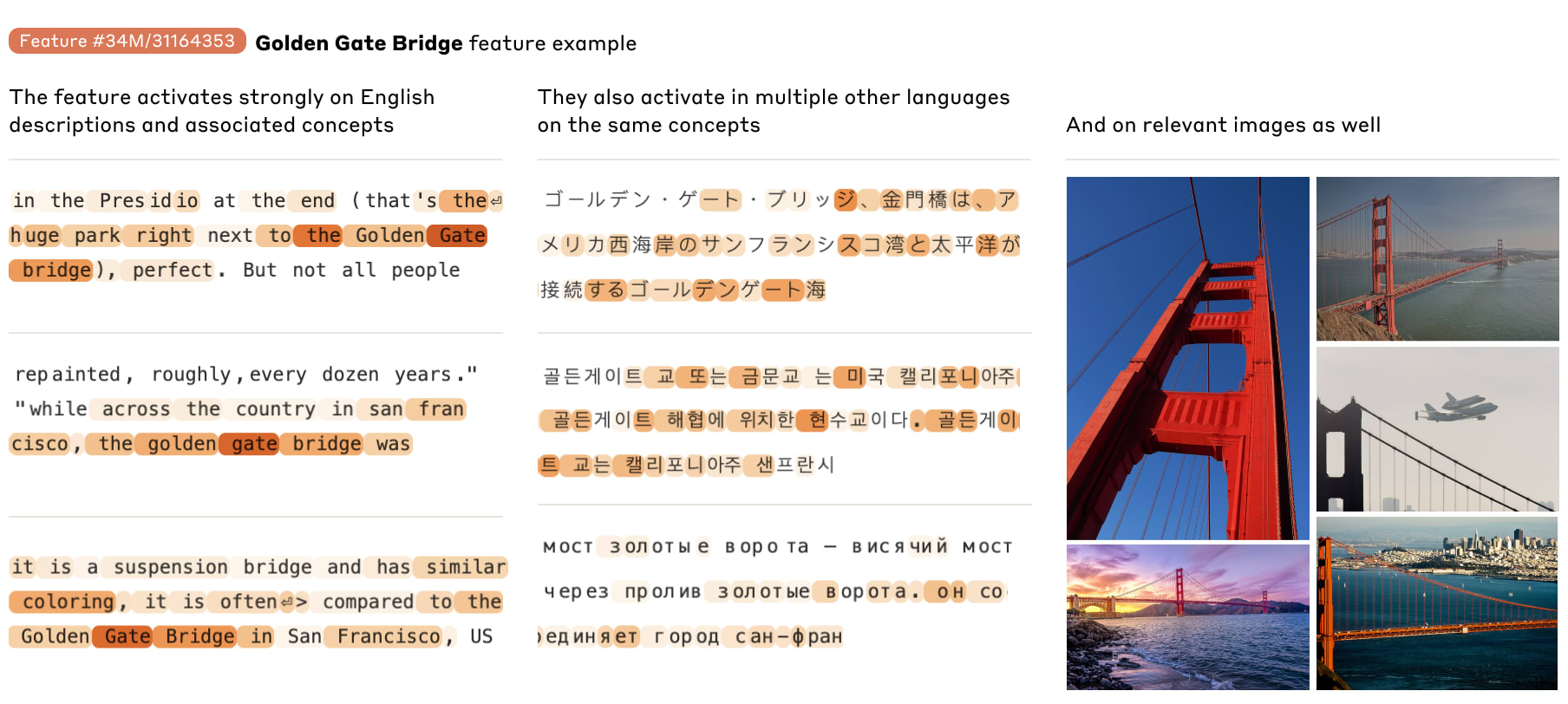

Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet

Adly Templeton, Tom Conerly, and others at Anthropic apply an interpretability method, sparse autoencoders, to their large language model Claude 3 Sonnet. They extract features corresponding to ‘concepts’ as concrete as the Golden Gate Bridge and as abstract as a coding error. They show that the method scales to large commercial models and gives insight into how these systems function.
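To make the method concrete, here is a minimal Python sketch of a generic sparse autoencoder, assuming the standard setup: a model's activation vector is encoded into a set of nonnegative features with a ReLU, decoded back, and scored by reconstruction error plus an L1 penalty that pushes most features to zero. This is a toy illustration, not Anthropic's code. The class, the helper `top_activating`, and all sizes are hypothetical, training is omitted, and the paper's actual loss, normalization, and scale differ. The helper shows the basic interpretability step: a feature is labeled by inspecting which inputs activate it most strongly.

```python
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class SparseAutoencoder:
    """Hypothetical toy SAE: maps d_model activations to n_features sparse features and back."""

    def __init__(self, d_model, n_features, seed=0):
        rng = random.Random(seed)
        # Encoder: activations -> feature pre-activations (one row per feature).
        self.W_enc = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(n_features)]
        self.b_enc = [0.0] * n_features
        # Decoder: features -> activations; column j is feature j's direction.
        self.W_dec = [[rng.gauss(0.0, 0.1) for _ in range(n_features)] for _ in range(d_model)]
        self.b_dec = [0.0] * d_model

    def encode(self, x):
        # ReLU keeps feature activations nonnegative.
        pre = matvec(self.W_enc, x)
        return [max(0.0, p + b) for p, b in zip(pre, self.b_enc)]

    def decode(self, f):
        return [o + b for o, b in zip(matvec(self.W_dec, f), self.b_dec)]

    def loss(self, x, l1_coeff=1e-3):
        """Squared reconstruction error plus a plain L1 sparsity penalty (assumed, simplified objective)."""
        f = self.encode(x)
        x_hat = self.decode(f)
        reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
        sparsity = sum(abs(v) for v in f)
        return reconstruction + l1_coeff * sparsity


def top_activating(sae, inputs, feature, k=3):
    """Hypothetical helper: indices of the k inputs that most strongly activate one feature."""
    scored = sorted(((sae.encode(x)[feature], i) for i, x in enumerate(inputs)), reverse=True)
    return [i for _, i in scored[:k]]


if __name__ == "__main__":
    # Hand-set identity weights so the behavior is easy to trace.
    sae = SparseAutoencoder(d_model=2, n_features=2)
    sae.W_enc = [[1.0, 0.0], [0.0, 1.0]]
    sae.W_dec = [[1.0, 0.0], [0.0, 1.0]]
    print(sae.encode([3.0, -1.0]))  # [3.0, 0.0]
    print(top_activating(sae, [[3.0, -1.0], [0.0, 2.0], [5.0, 0.0]], feature=0, k=2))  # [2, 0]
```

In a real setting the dictionary is far larger than the activation dimension and is trained on many activations; each feature's top-activating inputs are what suggest a human-readable label such as "Golden Gate Bridge".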

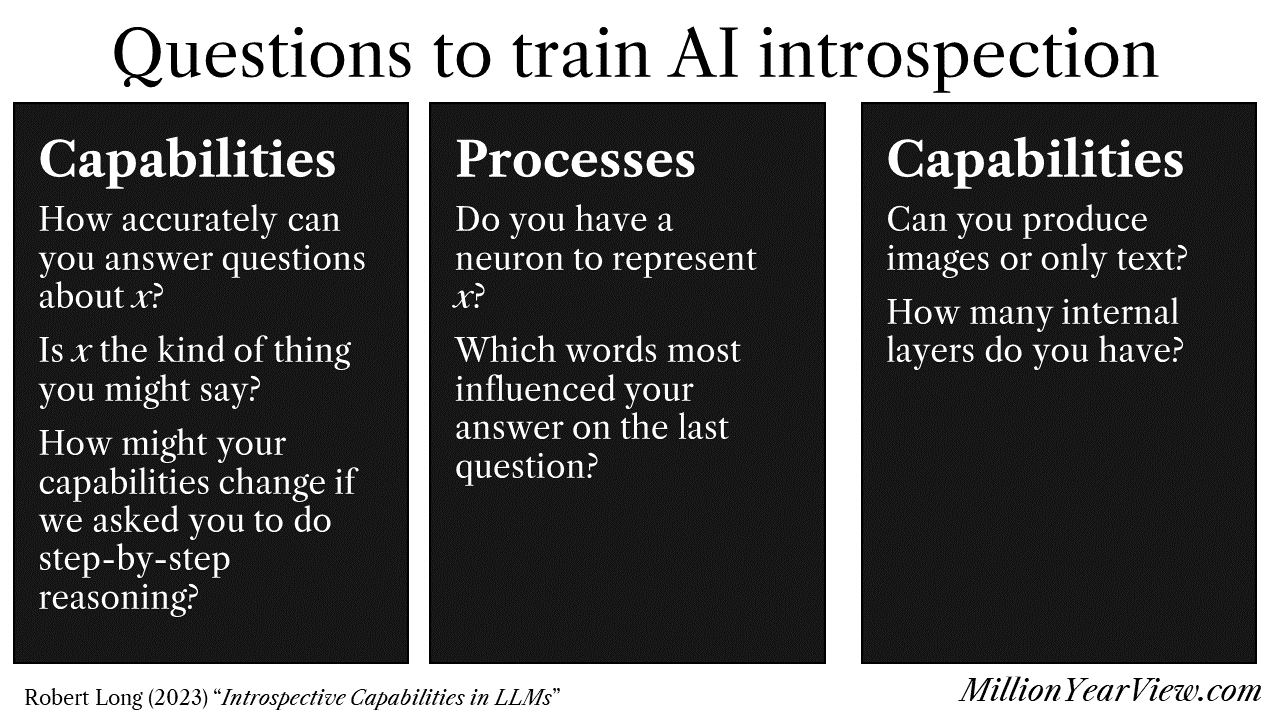

Introspective Capabilities in LLMs

Robert Long discusses how we might build AI systems that can introspect and report on their own consciousness or lack thereof. While this research program is speculative, I think that, if carried out extremely carefully, it could provide some evidence that a particular system is conscious. I have also written a longer summary.