Quick Intro: My name is Strad, and I am a new grad working in tech who wants to learn and write more about AI safety and how tech will affect our future. I’m challenging myself to write a short article a day to get back into writing. I’d love any feedback on the article and any advice on writing in this field!

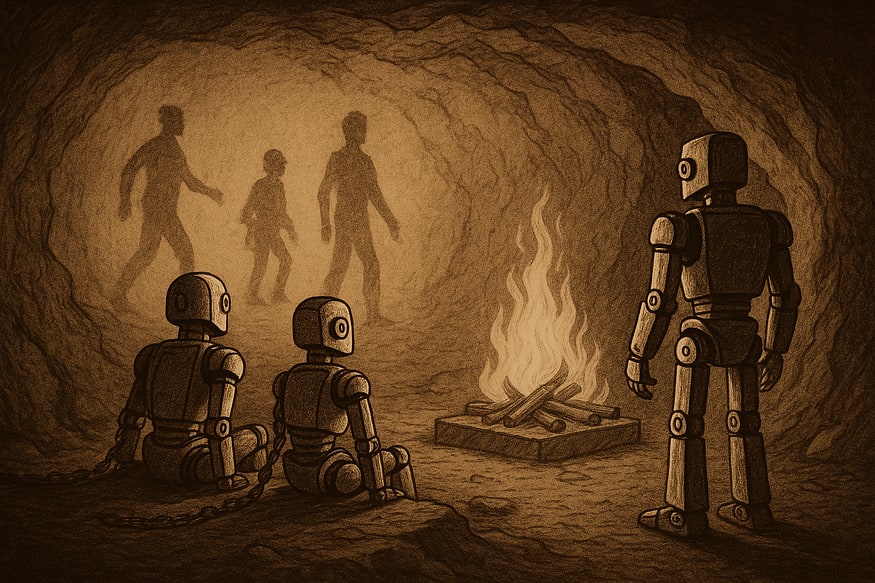

In Plato’s “Allegory of the Cave,” we are asked to imagine a group of people who have lived their whole lives stuck inside a cave. The only traces of the outside world they ever see are the shadows cast on the cave’s wall. To us, these are clearly shadows of the outside world. But to those stuck in the cave, it is unclear what these shadows represent at all.

Is it possible that today’s AI models are stuck inside a cave of their own? Models are trained on data that represent concepts in the real world. In other words, they are trained on “shadows” of reality.

For example, take the concept of a red ball. There are many ways to represent a red ball: an image of a red ball, a piece of text that says “red ball,” or an audio recording of someone saying “red ball.” While all of these “modalities” are different, they all refer to the same concept of a red ball. They are the shadows through which current AI models learn about the world.

What’s exciting is that these models might actually be discovering the “reality” behind these shadows. A recent paper proposes the Platonic Representation Hypothesis (PRH), which argues that today’s AI models are converging in how they internally represent concepts, suggesting a trend towards a shared representation of reality.

Platonic Representation Hypothesis

What does it mean to say that models are converging in how they represent concepts? There are many ways to measure this, but the paper essentially compares how different models organize the same set of concepts relative to one another: which concepts each model places close together and which it places far apart. Two models that organize concepts similarly show convergence in their representations. The paper argues that, despite differences in architecture, purpose, and even modality, models’ representations of concepts are converging.
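To make “a similar organization of concepts” concrete, here is a minimal Python sketch of one way to score it, assuming each model gives us an embedding vector for the same list of concepts. For every concept, it finds the k nearest neighbors in each model’s space and measures how much the two neighbor sets overlap. The paper describes a mutual nearest-neighbor metric in this spirit, but the function names, the use of cosine similarity, and the idealized toy “models” below are my own assumptions rather than the paper’s code.

```python
import numpy as np


def knn_sets(feats: np.ndarray, k: int) -> list[set[int]]:
    """For each row, the indices of its k most cosine-similar rows (excluding itself)."""
    normed = feats / np.linalg.norm(feats, axis=1, keepdims=True)
    sims = normed @ normed.T
    np.fill_diagonal(sims, -np.inf)           # an item is never its own neighbor
    top_k = np.argsort(-sims, axis=1)[:, :k]  # highest similarity first
    return [set(row.tolist()) for row in top_k]


def mutual_knn_alignment(feats_a: np.ndarray, feats_b: np.ndarray, k: int = 5) -> float:
    """Mean fraction of shared k-NN neighbors; row i of both inputs must be the same concept."""
    pairs = zip(knn_sets(feats_a, k), knn_sets(feats_b, k))
    return float(np.mean([len(a & b) / k for a, b in pairs]))


def random_isometry(in_dim: int, out_dim: int, rng: np.random.Generator) -> np.ndarray:
    """A toy (hypothetical) 'model': a random linear map that preserves every angle between concepts."""
    q, _ = np.linalg.qr(rng.normal(size=(out_dim, in_dim)))  # orthonormal columns
    return q.T                                                # shape (in_dim, out_dim)


rng = np.random.default_rng(0)
concepts = rng.normal(size=(200, 16))              # hypothetical "true" concepts
model_a = concepts @ random_isometry(16, 64, rng)  # two idealized models with different widths
model_b = concepts @ random_isometry(16, 48, rng)
unrelated = rng.normal(size=(200, 48))             # a model that ignores the concepts entirely

print(mutual_knn_alignment(model_a, model_b))    # close to 1.0
print(mutual_knn_alignment(model_a, unrelated))  # close to chance, about k / (n - 1)
```

A score of 1.0 means both models give every concept exactly the same neighbors, while unrelated embeddings score near chance. Comparing neighborhoods rather than raw vectors is what lets models with different widths (64 and 48 dimensions here) be compared at all.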

The Platonic Representation Hypothesis (PRH) claims that this convergence is evidence of a broader trend: AI models are moving towards a shared understanding of concepts at a deeper level, the reality behind the shadows.

Why Convergence Makes Sense

The paper offers three key ideas that explain why models might tend to converge in their internal representations of reality: “Task Generality,” “Model Capacity,” and “The Simplicity Bias.”

Task generality is the idea that the more tasks a model is asked to perform (text understanding, image generation, and so on), the fewer representations there are that could help the model succeed at every one of them. The fewer effective representations there are, the greater the chance that any two models stumble upon the same one.

Model capacity refers to the idea that larger models are better than smaller models at implementing these generalizable representations. The more generalizable a representation is, the more “infrastructure” a model needs to actually implement it. Since these generalizable representations are more effective at solving a wide range of tasks, models that are able to implement them will tend to do so. As today’s models get larger, they become more likely to implement one of these generalizable representations, which again increases the chances of two models picking the same one.

Finally, the simplicity bias further narrows the set of representations large models might implement. The way neural networks are structured and trained pushes them towards simpler ways of representing reality. As a result, among the effective, generalizable representations that larger models tend towards, the simplest ones are the most likely to be implemented. This shrinks the set of likely representations even more, again increasing the odds of any two models picking the same one.

Evidence for Convergence

The paper cites studies that use a method called model stitching to show convergence across architectures. In model stitching, the early layers of one model are “stitched” to the later layers of another model, with an extra layer inserted to translate across the stitch.

These stitched models have been shown to perform well at the tasks the original models were trained to do. This means the representations from the first model translate easily into the second model’s, indicating similarities between them.
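Here is a toy Python sketch of the stitching idea, under heavy simplifying assumptions: each “front half” is just a linear map of the input, model B’s “back half” is a fixed linear readout, and the stitching layer is fit in closed form with least squares rather than trained inside a real network. Every name and number below is hypothetical. The point is only to show that a stitch works when the first model’s features carry the same information as the second model’s, and fails when they don’t.

```python
import numpy as np

rng = np.random.default_rng(0)


def with_bias(feats: np.ndarray) -> np.ndarray:
    """Append a constant column so the stitching layer can also learn an offset."""
    return np.hstack([feats, np.ones((len(feats), 1))])


def fit_stitch(feats_a: np.ndarray, feats_b: np.ndarray) -> np.ndarray:
    """Least-squares linear stitching layer W such that [feats_a, 1] @ W ≈ feats_b."""
    W, *_ = np.linalg.lstsq(with_bias(feats_a), feats_b, rcond=None)
    return W


# Toy setup (all hypothetical): the task is "is x on the positive side of v?"
x_train, x_test = rng.normal(size=(500, 16)), rng.normal(size=(500, 16))
v = rng.normal(size=16)
B = rng.normal(size=(16, 48))  # front half of model B
u = np.linalg.pinv(B) @ v      # back half of model B: a linear readout that solves the task on B's features


def head_b(feats: np.ndarray) -> np.ndarray:
    """Model B's back half: predicts the label from B-style features."""
    return feats @ u > 0


def stitched_accuracy(front) -> float:
    """Stitch `front` (a stand-in for another model's front half) onto model B's back half."""
    W = fit_stitch(front(x_train), x_train @ B)   # fit only the stitching layer
    preds = head_b(with_bias(front(x_test)) @ W)  # evaluate on held-out inputs
    return float(np.mean(preds == (x_test @ v > 0)))


A = rng.normal(size=(16, 64))  # front half of model A, with a different width than B
acc_related = stitched_accuracy(lambda x: x @ A)  # A encodes the same information as B
acc_unrelated = stitched_accuracy(lambda x: rng.normal(size=(len(x), 64)))  # carries nothing about x
print(acc_related, acc_unrelated)
```

With these settings, stitching model A onto model B’s back half should classify almost perfectly, while stitching in features that carry no information about the input should land near coin-flip accuracy.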

The paper also shows that models are converging across tasks by analyzing 78 different vision models and comparing their representations against their transfer task performance (a model’s ability to do tasks other than the one it was trained for). Models with the highest transfer task performance showed the greatest alignment of representations with one another, while the models that performed worst at transfer tasks showed the most variance in their representations.

This suggests that the more effective models share a more general representation, which allows them to do well on a variety of tasks.

The paper goes a step further and shows that models are even converging across modalities, which is the core motivation behind the PRH in the first place. It cites studies in which LLMs and vision models were successfully stitched together.

This means that a vision model trained on images could readily interpret what an LLM trained on text was encoding, and vice versa. Once again, this ease of translation suggests the two models use similar representations.

Limitations on the Current State of the PRH

While the research presented in the paper is exciting and seems to support the PRH, the evidence is still in its infancy. For example, the research in the paper focused primarily on convergence between text and vision models, whereas evidence of convergence between other modalities (e.g., vision and robotics) is sparser.

An interesting limitation is the influence that sociological factors might have on this perceived convergence. Researchers tend to think about, and work towards, models that think like humans. This bias might lead us to inadvertently narrow the set of models we create, making those models more similar to one another and therefore more likely to appear convergent.

A similar argument can be made with regard to the hardware we use to run models. Hardware limits the types of architectures we can build, which further constrains the set of models we work with and potentially increases the chance of convergence.

Finally, even if the PRH is true, it is worth asking how far cross-modal convergence can extend, given that many concepts live solely in one modality. For example, there is no visual representation of the sentence “I value free speech.” Some concepts may therefore be out of reach for models, depending on which modalities they work with. This would limit universal representations to concepts that are sufficiently represented across multiple modalities.

Implications of the PRH

The implications of the PRH are pretty exciting, and some of them are already visible today. For one, the more unified representations are across different models, the easier it is for these models to translate between each other. This means that models in one modality could be used to do tasks in another modality without even needing multimodal data. It also means that data from multiple modalities could be used to more effectively train single-modality models such as LLMs or vision models.

The PRH also has broader implications for mechanistic interpretability (MI), a field of research that aims to understand the internal reasoning and processes AI models use to complete a task. If the PRH holds, lessons learned from interpreting one model could generalize to other models, speeding up progress in the field. Since MI research has become an important tool for building safe AI systems, the PRH would indirectly help AI safety efforts as well.

Stepping Out of the Cave

Whether or not the PRH is true, there is clear evidence that some convergence is occurring between models. This alone is promising, both for the advancement of current AI models and for the future of AI safety.

But this trend potentially reveals something deeper. AI models perceive the world only through channels like images and text. Yet we carry our own limitations, perceiving the world only through the narrow channels of our senses.

Is it possible that we are in a cave of our own? AI models might be on a path out of their own cave. As they continue to advance, maybe they will help us see beyond ours as well.