Summary

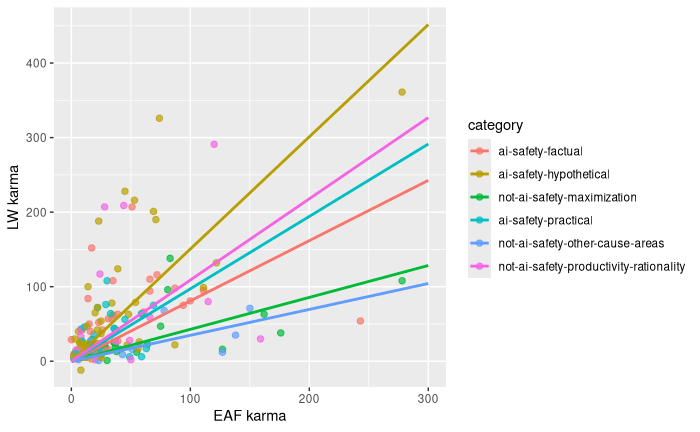

- I modeled how much karma cross-posts get on LW as a function of their quality (proxied by karma on EAF) and their topic.

- Results are not surprising: cross-posts about AI safety gather more interest than posts about other cause areas or utility maximization.

- Among posts about AI safety, posts about risks, predictions about the future, stories, and hypothetical scenarios scale the best with quality.

Methods

Categorization of posts

I categorized every post into one of the categories in bold.

- Cause Area specific

- AI safety

- CTAs - advice, hiring etc.

- Factual/News - news, events, writing about things that happened.

- Hypothetical/Risks - everything that doesn't fit into the other two categories.

- Other - posts mostly about animal welfare and biosecurity

- AI safety

- Meta

- Cause prioritization/Maximization

- personal and organizational productivity, rationality

I think that there is more than one way to categorize posts. For instance, inside the personal and organizational productivity, rationality cluster, there are posts that have a very LW vibe and posts that have a very EAF vibe e.g.

I could only find a few posts that show this, but I think there is a pattern: LW is more interested in societal- and personal-scale thinking and much less interested in the organizational aspect of things.

| Category | Number of posts |
| --- | --- |
| ai-safety-hypothetical | 71 |
| ai-safety-factual | 32 |
| ai-safety-practical | 31 |
| not-ai-safety-maximization | 24 |
| not-ai-safety-productivity-rationality | 20 |
| not-ai-safety-other-cause-areas | 11 |

Posts

I used the LW and EAF APIs. I believe this only covers posts that were cross-posted using the cross-post functionality, not posts that were independently posted on both forums.

- Posts were posted between 2025-03-06 and 2025-09-29 (approximately the last 6 months). The reason for this oddly specific period is how the LW API works. I could have pulled more posts to analyze, but since the initial results were not interesting, I decided to just put out my work and focus on other things.

- n=189

- I removed 2 posts, one that didn’t fit any category and one that was a duplicate:

Results

I fit a single linear regression model with only interaction terms between category and EA karma (no intercept):

LW_karma = coef1 × (cat1 × EA_karma) + coef2 × (cat2 × EA_karma) + ...
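To make the formula above concrete, here is a minimal sketch in plain Python. It relies on one observation: because every post belongs to exactly one category, the interaction columns never overlap, so the joint least-squares fit reduces to a separate through-origin slope per category. The function name `fit_no_intercept_slopes` and the toy data are assumptions made for illustration; they are not the code or the posts used in this analysis.

```python
from collections import defaultdict


def fit_no_intercept_slopes(posts):
    """Least-squares fit of LW_karma = coef_c * EA_karma for each category c.

    With disjoint categories and no intercept, each coefficient is
    coef_c = sum(x * y) / sum(x * x) over the posts in category c.
    """
    sum_xy = defaultdict(float)
    sum_xx = defaultdict(float)
    for category, ea_karma, lw_karma in posts:
        sum_xy[category] += ea_karma * lw_karma
        sum_xx[category] += ea_karma * ea_karma
    # Skip categories whose posts all have zero EA karma (slope undefined).
    return {c: sum_xy[c] / sum_xx[c] for c in sum_xx if sum_xx[c] > 0}


# Made-up illustrative data, NOT the real posts: (category, EA karma, LW karma)
toy_posts = [
    ("ai-safety-hypothetical", 10, 15),
    ("ai-safety-hypothetical", 20, 30),
    ("other-cause-areas", 10, 5),
    ("other-cause-areas", 40, 20),
]

slopes = fit_no_intercept_slopes(toy_posts)
for category, slope in sorted(slopes.items()):
    print(f"{category}: 100 EA karma -> ~{100 * slope:.0f} LW karma")
```

Each slope reads the same way as the estimates in the results table: multiply by 100 to get the LW karma the model expects for a post with 100 EA karma.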

The model explains 59.4% of the variance in LW karma (adjusted R^2=0.594, F(6,183)=47.069, p<0.001). However, it's important to note that this is a no-intercept model. For such models, R^2 is usually computed against the uncentered total sum of squares, so it doesn't make much sense to read a lot into the R^2, t-statistics, and F-statistic.
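A small sketch of why R^2 is hard to read for a no-intercept model: common regression packages (statsmodels, for example) switch to the uncentered total sum of squares when there is no constant term, which can never give a lower R^2 than the usual centered version. The helper `r_squared` and the numbers below are assumptions for illustration only, not values from this analysis.

```python
def r_squared(y, y_hat, centered):
    """R^2 = 1 - SS_res / SS_tot, with SS_tot either centered or uncentered."""
    ss_res = sum((obs - pred) ** 2 for obs, pred in zip(y, y_hat))
    if centered:
        mean_y = sum(y) / len(y)
        ss_tot = sum((obs - mean_y) ** 2 for obs in y)  # usual definition
    else:
        ss_tot = sum(obs ** 2 for obs in y)  # convention for no-intercept fits
    return 1 - ss_res / ss_tot


# Made-up illustrative data, NOT the real posts.
ea_karma = [10, 20, 30, 40]
lw_karma = [22, 28, 41, 49]

# Through-origin least-squares slope: sum(x * y) / sum(x * x).
slope = sum(x * y for x, y in zip(ea_karma, lw_karma)) / sum(x * x for x in ea_karma)
predicted = [slope * x for x in ea_karma]

r2_uncentered = r_squared(lw_karma, predicted, centered=False)
r2_centered = r_squared(lw_karma, predicted, centered=True)
print(f"uncentered R^2 = {r2_uncentered:.3f}, centered R^2 = {r2_centered:.3f}")
```

The same fit gets a noticeably higher score under the uncentered convention, so the two numbers should not be compared with R^2 values from models that include an intercept.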

| Variable | Estimate | Interpretation |
| --- | --- | --- |
| ai-safety-factual × EA karma | 0.80 | 100 EA karma -> ~81 LW karma |
| ai-safety-hypothetical × EA karma | 1.50 | 100 EA karma -> ~151 LW karma |
| ai-safety-practical × EA karma | 0.97 | 100 EA karma -> ~97 LW karma |
| maximization × EA karma | 0.42 | 100 EA karma -> ~43 LW karma |
| other-cause-areas × EA karma | 0.34 | 100 EA karma -> ~35 LW karma |
| productivity-rationality × EA karma | 1.61 | 100 EA karma -> ~161 LW karma |

I do not report p-values for the coefficients since my model is weird (no intercept) and they are less straightforward to interpret than usual.

- Hypothetical/risk posts and productivity and rationality posts have the strongest scaling with EA_karma (estimates of 1.50 and 1.61).

- Posts about maximization and other cause areas scale weakly.

- Practical AI safety posts perform similarly on both forums, while factual/news posts slightly underperform on LW. When the outlier is removed ("80,000 Hours is producing AI in Context — a new YouTube channel. Our first video, about the AI 2027 scenario, is up!", which got 54 karma on LW and 243 on EAF), they scale similarly to factual AI safety posts and rationality content.

Limits

- The results seem to be highly influenced by outliers.

- I used a very simple statistical model.

- I use a no-intercept model, which assumes posts with zero EA karma receive zero LW karma. My reasoning was that this is more interpretable than a model where some posts receive some karma just by being there, but I guess one could argue that there are always nice people who will just upvote everything.
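The outlier sensitivity mentioned above can be checked with a leave-one-out refit. In a through-origin regression, each post's pull on the slope grows with the square of its EA karma, so a single high-karma post can dominate a small category. The sketch below is an illustration under assumptions: the helper names are hypothetical, and the data is made up except for the last point, which reuses the 243 EAF / 54 LW karma of the outlier mentioned in the results.

```python
def through_origin_slope(points):
    """Least-squares slope of y = slope * x with no intercept."""
    sum_xy = sum(x * y for x, y in points)
    sum_xx = sum(x * x for x, _ in points)
    return sum_xy / sum_xx


def leave_one_out_slopes(points):
    """Refit the slope once per point, each time with that point removed."""
    return [
        through_origin_slope(points[:i] + points[i + 1:])
        for i in range(len(points))
    ]


# (EA karma, LW karma). The first three points are made up; the last one
# mirrors the karma values of the outlier named in the results.
toy_points = [(20, 22), (35, 30), (50, 55), (243, 54)]

full_slope = through_origin_slope(toy_points)
loo_slopes = leave_one_out_slopes(toy_points)
for (ea, lw), slope in zip(toy_points, loo_slopes):
    print(f"without ({ea}, {lw}): slope = {slope:.2f} (full fit: {full_slope:.2f})")
```

A large gap between the full-fit slope and one of the leave-one-out slopes flags the post that is driving the estimate for its category.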