This is a linkpost for https://www.vox.com/the-highlight/23447596/artificial-intelligence-agi-openai-gpt3-existential-risk-human-extinction

"AI experts are increasingly afraid of what they’re creating" by Kelsey Piper. It came out several weeks ago, but I only found it now and thought other people would want to see it. I think it's one of the best introductory articles on AI risk for people unfamiliar with AI (for more, see this doc). Here are my thoughts:

- When I hover over the tab in my browser, a tooltip appears saying "A guide to why advanced AI could destroy the world - Vox." I think that would make a better title than the current one.

- Slightly misleading line: "College professors are tearing their hair out because AI text generators can now write essays as well as your typical undergraduate." The link describes how AI chatbots could, in theory, do college homework. But it doesn't show that "college professors are tearing their hair out."

- There's a link to "Scientists Increasingly Can’t Explain How AI Works." I love how this title almost sounds like the headline for a satire piece, although I didn't find the corresponding article very convincing.

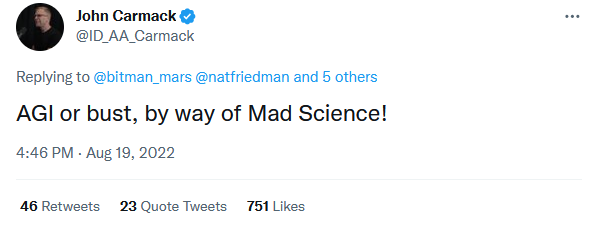

- I really like how the article follows John Carmack's announcement of "AGI or bust, by way of Mad Science"

... with "This particular mad science might kill us all. Here’s why." It's difficult to convey a sense of urgency and importance without sounding alarmist and annoying people, but I think this sequencing and phrasing does the job.

- The section "Computers that can think" gives a short history of AI research and where it stands today, with an argument at the end for the importance of interpretability research.

- There are links to "Is Power-Seeking AI an Existential Risk?" and "Without specific countermeasures, the easiest path to transformative AI likely leads to AI takeover." I don't think these are the best AI risk analyses to present to AI researchers (the recommendations in "Resources I sent to AI researchers about AI safety" are probably better, since they rely less on philosophy-style argumentation), but this is fine since I assume the target audience is people unfamiliar with AI research.

- I think Stuart Russell's "k < n" variables example will fly over the heads of many readers who are unfamiliar with AI or related fields (math, CS, stats, etc.).

- Perhaps unintentionally, the first paragraphs in the "Asleep at the wheel" section paint DeepMind as more safety-conscious than OpenAI, which might be false.

- I think the statistic from the 2022 AI Impacts survey is quite eye-catching: "Forty-eight percent of respondents said they thought there was a 10 percent or greater chance that the effects of AI would be “extremely bad (e.g., human extinction).”" However, the survey also notes that 25% of researchers put the chance at 0%.

I like the concluding paragraph (emphasis mine):

> For a long time, AI safety faced the difficulty of being a research field about a far-off problem, which is why only a small number of researchers were even trying to figure out how to make it safe. Now, it has the opposite problem: The challenge is here, and it’s just not clear if we’ll solve it in time.

Cross-posted to LessWrong, although I can't do a proper cross-post without 100 LessWrong karma.