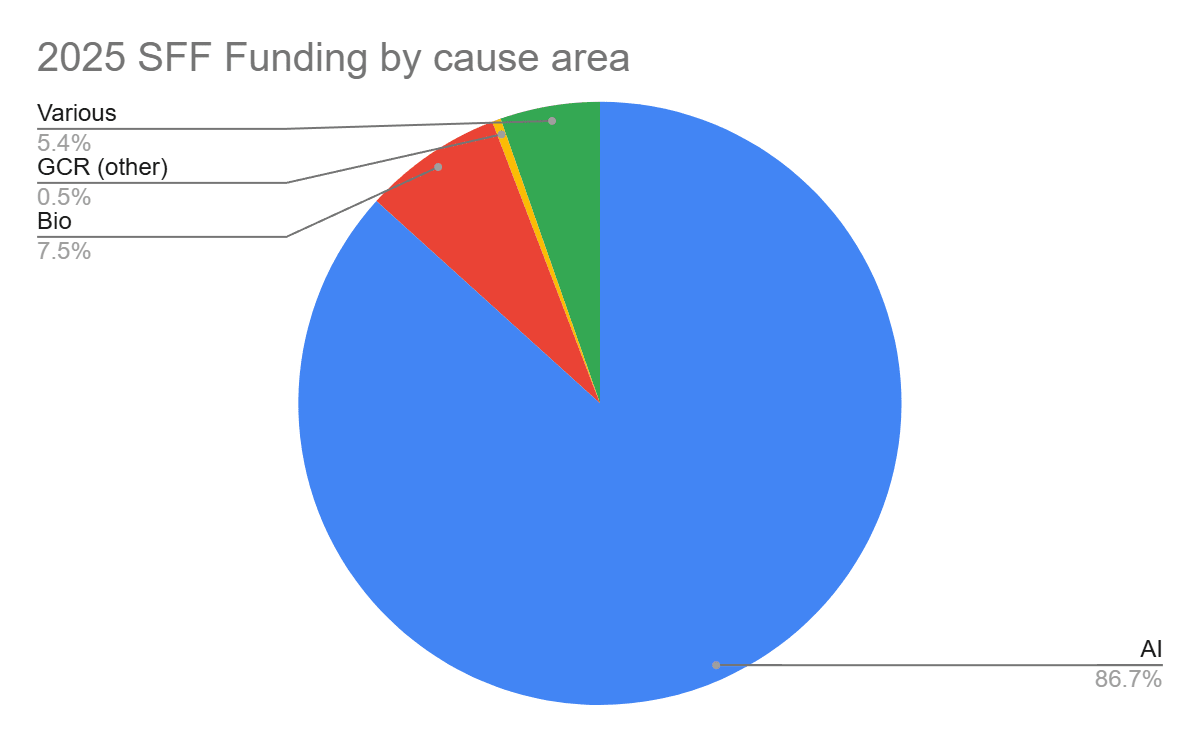

Summary: this year the Survival and Flourishing Fund allocated $34.23 million to organizations working to secure humanity’s future, with the vast majority going to AI (~$29MM), followed by biosecurity (~$2.6MM), and the rest going to causes as diverse as fertility, longevity, forecasting, memetics, math research, EA community building, and non-AI/bio global catastrophic risk (GCR) work.

Context

The Survival and Flourishing Fund (SFF) is a philanthropic organization that funds projects and organizations working to reduce global catastrophic risks, strengthen humanity’s long-term resilience, and promote flourishing, often through regranting and expert panels to allocate resources efficiently.

This year I took a particularly in-depth look at where SFF allocated its funds and broke down the results by cause area. I am sharing the results in this brief post because I think they may be of general interest to others working at organizations that SFF has funded or might fund.

Methodology in brief

I went over the 89 organizations recommended for funding by SFF in 2025, doing a brief analysis of their websites to categorize them by cause area, with each organization being allocated to either one or two cause areas. Then I used the amount allocated for each organization and their cause area to estimate how much total funding went to each cause area.

To account for the messiness of some organizations doing multiple different kinds of work, I estimated two bounds for each cause area: 1) a minimum, counting only organizations I was highly confident did mainly or only work in that area, and 2) a maximum, adding the whole amount of every organization that had this cause area as one of its one or two categories, representing the case where the allocated money was "earmarked" for that cause area only. Finally, I averaged the minimum and maximum amounts as a best guess. For example, the organizations that I'm very confident do AI work amounted to ~$28.2MM. Adding the ones that do some AI work and may have been funded primarily for that work brings the number up to ~$30.6MM, so my best guess for AI is the average, ~$29.4MM.
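The min/max/average procedure above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `estimate_by_area`, the tuple layout, and the three example grants are hypothetical and not taken from the SFF data, and I assume that an organization tagged with two cause areas counts toward the maximum of both areas but toward the minimum of neither.

```python
from collections import defaultdict


def estimate_by_area(grants):
    """Return min, max, and average funding per cause area.

    grants: iterable of (org, amount, areas), where areas is a tuple
    holding one or two cause-area labels.
    Assumption: only single-area organizations count toward the minimum.
    """
    minimum = defaultdict(int)
    maximum = defaultdict(int)
    for _org, amount, areas in grants:
        # Maximum: treat the whole grant as earmarked for each listed area.
        for area in areas:
            maximum[area] += amount
        # Minimum: count the grant only when the area is unambiguous.
        if len(areas) == 1:
            minimum[areas[0]] += amount
    return {
        area: {
            "min": minimum[area],
            "max": maximum[area],
            "avg": (minimum[area] + maximum[area]) / 2,
        }
        for area in maximum
    }


# Hypothetical grants, for illustration only (not real SFF figures).
grants = [
    ("Org A", 1_000_000, ("AI",)),
    ("Org B", 200_000, ("AI", "Bio")),
    ("Org C", 300_000, ("Bio",)),
]

estimates = estimate_by_area(grants)
for area, est in estimates.items():
    print(area, est)
```

Under this assumption each two-area grant is split evenly between its two areas in the averages, so the per-area averages add up to the total amount granted.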

| Funding by cause area | Average | Minimum | Maximum |
| --- | --- | --- | --- |
| Total | $34,231,950 | - | - |
| AI | $29,395,475 | $28,167,000 | $30,623,950 |
| Bio | $2,566,500 | $2,254,000 | $2,879,000 |
| GCR (other) | $161,000 | $30,000 | $292,000 |
| Various | $2,108,975 | $1,324,000 | $2,893,950 |
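For readers who prefer shares to dollar amounts, the short sketch below converts the average column of the table into percentages of the total. The dollar figures are copied from the table; the variable names are my own, and the rounding to one decimal place is an arbitrary choice.

```python
# Average amounts per cause area, copied from the table above.
averages = {
    "AI": 29_395_475,
    "Bio": 2_566_500,
    "GCR (other)": 161_000,
    "Various": 2_108_975,
}
total = 34_231_950

# Sanity check: the per-area averages add up to the reported total.
assert sum(averages.values()) == total

# Share of total funding per cause area, in percent.
shares = {
    area: round(100 * amount / total, 1)
    for area, amount in averages.items()
}
for area, share in shares.items():
    print(f"{area}: {share}%")
```

Because each share is rounded separately, the printed percentages may not sum to exactly 100.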

I limited myself to work done by these organizations in the last two years, in part to make the analysis easier but primarily to better reflect what the funding was actually going towards, as I've seen many previous grantees pivot primarily to AI work in the last year or two. Thus, their recent work is much more representative of what SFF is funding in those organizations.

Caveat: I am not an authority on the work that a majority of these organizations do, and thus do not claim to have gotten each rough category right, but I would be extremely surprised if, after corrections, any slice of the chart moved by more than a few percent. Criticism is welcome.

Trends

My impression is that this year's and last year's results indicate a shift, driven by advances in AI, in which cause areas SFF funds. In the past it had a more diverse allocation of funds over a broader array of cause areas, including a larger presence of nuclear war risk reduction, all-hazards GCR resilience, non-AI/bio policy work, cause prioritization, etc. While AI dominated then as well, it did not receive the absolute majority of funds. I have not run an equally in-depth analysis for previous years that I can show, but you can draw your own conclusions on the trend from the whole dataset here. You can also take a look at the shallower analysis I did of 2024 SFF recommendations, with less disambiguation.