Audio version available at Cold Takes (or search Stitcher, Spotify, Google Podcasts, etc. for "Cold Takes Audio")

This is one of 4 posts summarizing hundreds of pages of technical reports focused almost entirely on forecasting one number: the year by which transformative AI will be developed.1

By "transformative AI," I mean "AI powerful enough to bring us into a new, qualitatively different future." I specifically focus on what I'm calling PASTA: AI systems that can essentially automate all of the human activities needed to speed up scientific and technological advancement.

The sooner PASTA might be developed, the sooner the world could change radically, and the more important it seems to think today about how to make that change go well vs. poorly.

In future pieces, I'm going to lay out two methods of making a "best guess" at when we can expect transformative AI to be developed. But first, in this piece, I'm going to address the question: how good do these forecasting methods need to be in order for us to take them seriously? In other words, what is the "burden of proof" for forecasting transformative AI timelines?

When someone forecasts transformative AI in the 21st century - especially when they are clear about the full consequences it would bring - a common intuitive response is something like: "It's really out-there and wild to claim that transformative AI is coming this century. So your arguments had better be really good."

I think this is a very reasonable first reaction to forecasts about transformative AI (and it matches my own initial reaction). But I've tried to examine what's driving the reaction and how it might be justified, and having done so, I ultimately don't agree with the reaction.

- I think there are a number of reasons to think that transformative AI - or something equally momentous - is somewhat likely this century, even before we examine details of AI research, AI progress, etc.

- I also think that on the kinds of multi-decade timelines I'm talking about, we should generally be quite open to very wacky, disruptive, even revolutionary changes. With this backdrop, I think that specific well-researched estimates of when transformative AI is coming can be credible, even if they involve a lot of guesswork and aren't rock-solid.

This post tries to explain where I'm coming from.

Below, I will (a) get a bit more specific about which transformative AI forecasts I'm defending; then (b) discuss how to formalize the "That's too wild" reaction to such forecasts; then (c) go through each of the rows below, each of which is a different way of formalizing it.

| "Burden of proof" angle | Key in-depth pieces (abbreviated titles) | My takeaways |
| --- | --- | --- |
| It's unlikely that any given century would be the "most important" one. (More) | Hinge; Response to Hinge | We have many reasons to think this century is a "special" one before looking at the details of AI. Many have been covered in previous pieces; another is covered in the next row. |
| What would you forecast about transformative AI timelines, based only on basic information about (a) how many years people have been trying to build transformative AI; (b) how much they've "invested" in it (in terms of the number of AI researchers and the amount of computation used by them); (c) whether they've done it yet (so far, they haven't)? (More) | Semi-informative Priors | Central estimates: 8% by 2036; 13% by 2060; 20% by 2100.2 In my view, this report highlights that the history of AI is short, investment in AI is increasing rapidly, and so we shouldn't be too surprised if transformative AI is developed soon. |
| Based on analysis of economic models and economic history, how likely is 'explosive growth' - defined as >30% annual growth in the world economy - by 2100? Is this far enough outside of what's "normal" that we should doubt the conclusion? (More) | Explosive Growth, Human Trajectory | Human Trajectory projects the past forward, implying explosive growth by 2043-2065. |
| "How have people predicted AI ... in the past, and should we adjust our own views today to correct for patterns we can observe in earlier predictions? ... We’ve encountered the view that AI has been prone to repeated over-hype in the past, and that we should therefore expect that today’s projections are likely to be over-optimistic." (More) | Past AI Forecasts | "The peak of AI hype seems to have been from 1956-1973. Still, the hype implied by some of the best-known AI predictions from this period is commonly exaggerated." |

Some rough probabilities

Here are some things I believe about transformative AI, which I'll be trying to defend:

- I think there's more than a 10% chance we'll see something PASTA-like enough to qualify as "transformative AI" within 15 years (by 2036); a ~50% chance we'll see it within 40 years (by 2060); and a ~2/3 chance we'll see it this century (by 2100).

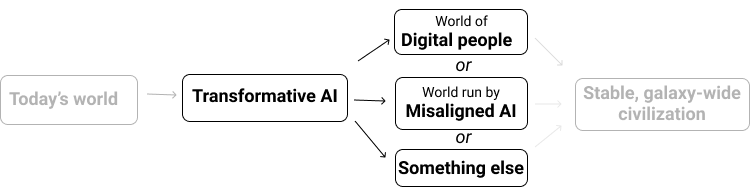

- Conditional on the above, I think there's at least a 50% chance that we'll soon afterward see a world run by digital people or misaligned AI or something else that would make it fair to say we have "transitioned to a state in which humans as we know them are no longer the main force in world events." (This corresponds to point #1 in my "most important century" definition in the roadmap.)

- And conditional on the above, I think there's at least a 50% chance that whatever is the main force in world events will be able to create a stable galaxy-wide civilization for billions of years to come. (This corresponds to point #2 in my "most important century" definition in the roadmap.)

I've also put a bit more detail on what I mean by the "most important century" here.
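As a rough illustration of how the three estimates above chain together, here is a minimal Python sketch. The variable names are hypothetical, and treating "~2/3" and each "at least 50%" as exact point values is an assumption made only for the arithmetic.

```python
# Chain the rough probabilities listed above.
# Assumption: "~2/3" is taken as 2/3 and each "at least 50%" as exactly 0.5.
p_tai_by_2100 = 2 / 3             # transformative AI this century
p_transition_given_tai = 0.5      # humans no longer the main force, given TAI
p_stable_given_transition = 0.5   # stable galaxy-wide civilization, given the transition

# Multiply conditional probabilities to get the joint probabilities.
p_transition = p_tai_by_2100 * p_transition_given_tai
p_stable = p_transition * p_stable_given_transition

print(f"P(TAI and transition) ~ {p_transition:.0%}")
print(f"P(TAI, transition and stable civilization) ~ {p_stable:.0%}")
```

With these point values the two products are about 1/3 and 1/6. Because two of the inputs are stated as "at least," the real figures could be higher.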

Formalizing the "That's too wild" reaction

Often, someone states a view that I can't immediately find a concrete flaw in, but that I instinctively think is "just too wild" to be likely. For example, "My startup is going to be the next Google" or "College is going to be obsolete in 10 years" or "As President, I would bring both sides together rather than just being partisan."

I hypothesize that the "This is too wild" reaction to statements like these can usually be formalized along the following lines: "Whatever your arguments for X being likely, there is some salient way of looking at things (often oversimplified, but relevant) that makes X look very unlikely."

For the examples I just gave:

- "My startup is going to be the next Google." There are large numbers of startups (millions?), and the vast majority of them don't end up anything like Google. (Even when their founders think they will!)

- "College is going to be obsolete in 10 years." College has been very non-obsolete for hundreds of years.

- "As President, I would bring both sides together rather than just being partisan." This is a common thing for would-be US Presidents to say, but partisanship seems to have been getting worse for at least a couple of decades nonetheless.

Each of these cases establishes a sort of starting point (or "prior" probability) and "burden of proof," and we can then consider further evidence that might overcome the burden. That is, we can ask things like: what makes this startup different from the many other startups that think they can be the next Google? What makes the coming decade different from all the previous decades that saw college stay important? What's different about this Presidential candidate from the last few?

There are a number of different ways to think about the burden of proof for my claims above - that is, a number of ways of getting a prior ("starting point") probability that can then be updated by further evidence.

Many of these capture different aspects of the "That's too wild" intuition, by generating prior probabilities that (at least initially) make the probabilities I've given look too high.

Below, I will go through a number of these "prior probabilities," and examine what they mean for the "burden of proof" on forecasting methods I'll be discussing in later posts.

Different angles on the burden of proof

"Most important century" skepticism

One angle on the burden of proof is along these lines:

- Holden claims a 15-30% chance that this is the "most important century" in one sense or another.3

- But there are a lot of centuries, and by definition most of them can't be the most important. Specifically:

  - Humans have been around for 50,000 to ~5 million years, depending on how you define "humans."4 That's 500 to 50,000 centuries.

  - If we assume that our future is about as long as our past, then there are 1,000 to 100,000 total centuries.

  - So the prior (starting-point) probability for the "most important century" is 1/100,000 to 1/1,000.

- It's actually worse than that: Holden has talked about civilization lasting for billions of years. That's tens of millions of centuries, so the prior probability of "most important century" is less than 1/10,000,000.
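The skeptic's arithmetic above can be sketched in a few lines of Python. The function and variable names are hypothetical, and using exactly 1 billion years for "billions of years" is an illustrative assumption (a lower bound on the number of centuries).

```python
# Count centuries, then take 1/N as the prior that a given century is "the" one.
YEARS_PER_CENTURY = 100

def uniform_prior(total_centuries: int) -> float:
    """Prior for one particular century if all centuries are treated as equally likely."""
    return 1 / total_centuries

# Humans have been around for 50,000 to ~5 million years.
past_low = 50_000 // YEARS_PER_CENTURY        # centuries so far, low end
past_high = 5_000_000 // YEARS_PER_CENTURY    # centuries so far, high end

# Assumption from the argument: the future is about as long as the past.
total_low, total_high = 2 * past_low, 2 * past_high

prior_high = uniform_prior(total_low)    # fewer centuries -> larger prior
prior_low = uniform_prior(total_high)    # more centuries -> smaller prior

# Assumption: 1 billion years stands in for "billions of years".
billion_year_centuries = 1_000_000_000 // YEARS_PER_CENTURY
prior_billion = uniform_prior(billion_year_centuries)

print(total_low, total_high, billion_year_centuries)
print(prior_high, prior_low, prior_billion)
```

Any civilization lasting longer than 1 billion years pushes the last prior below 1/10,000,000, which is where the "less than" in the argument comes from.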

(Are We Living at the Hinge of History? argues along these general lines, though with some differences.5)

This argument feels like it is pretty close to capturing my biggest source of past hesitation about the "most important century" hypothesis. However, I think there are plenty of markers that this is not an average century, even before we consider specific arguments about AI.

One key point is emphasized in my earlier post, All possible views about humanity's future are wild. If you think humans (or our descendants) have billions of years ahead of us, you should think that we are among the very earliest humans, which makes it much more plausible that our time is among the most important. (This point is also emphasized in Thoughts on whether we're living at the most influential time in history as well as the comments on an earlier version of "Are We Living at the Hinge of History?".)

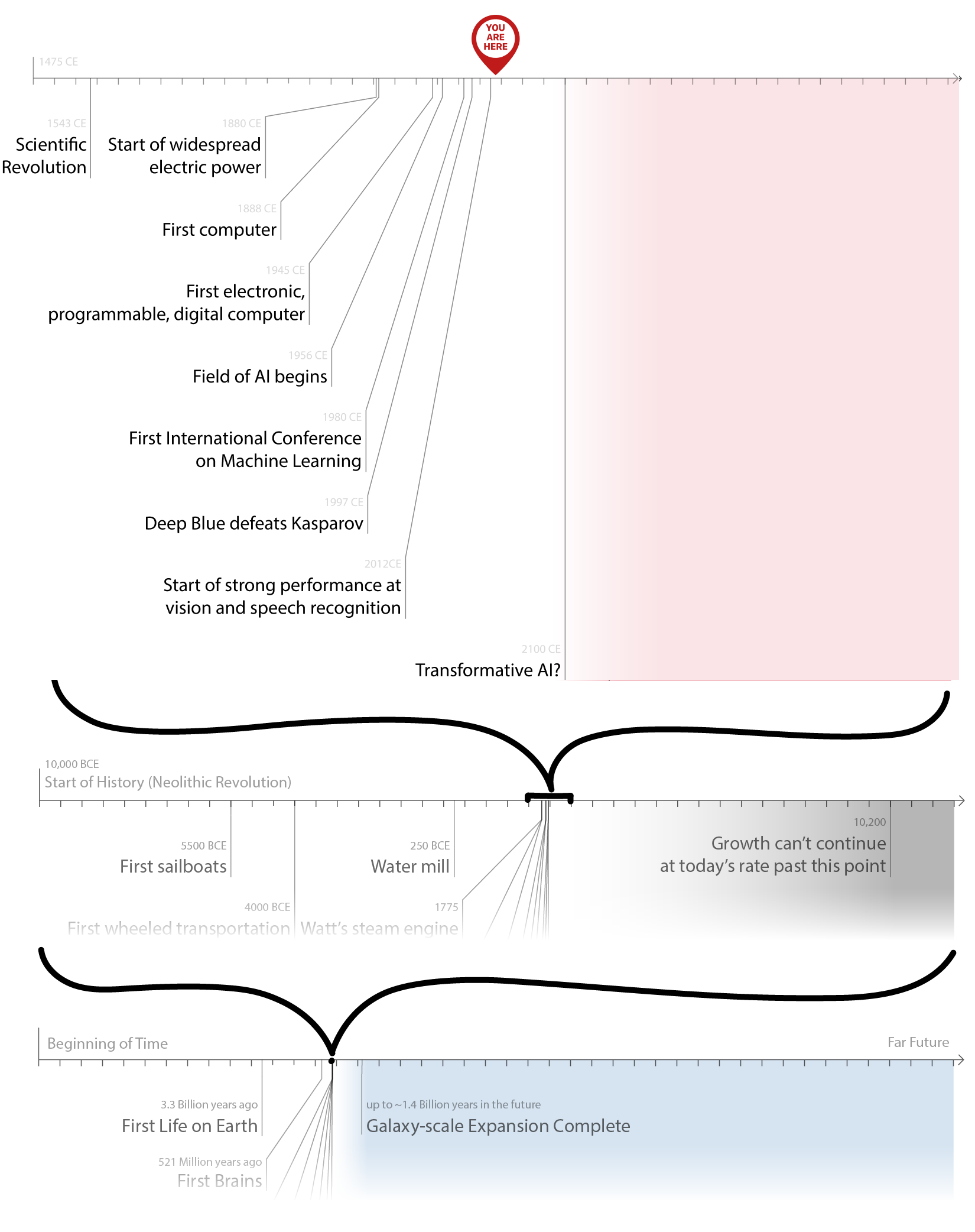

Additionally, while humanity has existed for a few million years, for most of that time we had extremely low populations and very little in the way of compounding technological progress. Human civilization started about 10,000 years ago, and since then we've already gotten to the point of building digital programmable computers and exploring our solar system.

With these points in mind, it seems reasonable to think we will eventually launch a stable galaxy-wide civilization, sometime in the next 100,000 years (1000 centuries). Or to think there's a 10% chance we will do so sometime in the next 10,000 years (100 centuries). Either way, this implies that a given century has a ~1/1,000 chance of being the most important century for the launch of that civilization - much higher than the figures given earlier in this section. It's still ~100x off from the numbers I gave above, so there's still a burden of proof.
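A minimal sketch of the "either way" arithmetic in the paragraph above, in Python. Spreading the chance evenly across centuries is a simplifying assumption, and the names are hypothetical.

```python
def per_century_chance(total_probability: float, centuries: int) -> float:
    """Spread a total probability evenly over a number of centuries (an assumption)."""
    return total_probability / centuries

# Scenario A: the launch happens at some point in the next 100,000 years (1,000 centuries).
scenario_a = per_century_chance(1.0, 1_000)
# Scenario B: a 10% chance that it happens in the next 10,000 years (100 centuries).
scenario_b = per_century_chance(0.10, 100)

# The 15-30% range quoted earlier in this section, compared with the per-century prior.
claimed_low, claimed_high = 0.15, 0.30
gap_low = claimed_low / scenario_a
gap_high = claimed_high / scenario_a

print(scenario_a, scenario_b)
print(f"gap: {gap_low:.0f}x to {gap_high:.0f}x")
```

Both scenarios land on roughly 1 in 1,000 per century. The remaining gap to a 15-30% claim is a few hundred-fold under these assumptions, the same rough order of magnitude as the ~100x mentioned above.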

There are further reasons to think this particular century is unusual. For example, see This Can't Go On:

- The total size of the world economy has grown more in the last 2 centuries than in all of the rest of history combined.

- The current economic growth rate can't be sustained for more than another 80 centuries or so. (And as discussed below, if its past accelerating trend resumed, it would imply explosive growth and hitting the limits of what's possible this century.)

- It's plausible that science has advanced more in the last 5 centuries than in the rest of history combined.
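To get a feel for why steady growth cannot run for another 80 centuries, here is a small Python sketch of the compounding involved. The 2% annual rate is an illustrative assumption (this post only says "a few percent per year"), and the function name is hypothetical; This Can't Go On makes the actual argument.

```python
import math

def log10_growth_factor(annual_rate: float, years: int) -> float:
    """log10 of (1 + annual_rate) ** years, computed in log space."""
    return years * math.log10(1 + annual_rate)

ASSUMED_ANNUAL_GROWTH = 0.02   # illustrative assumption, not a figure from this post
YEARS = 80 * 100               # "another 80 centuries or so"

exponent = log10_growth_factor(ASSUMED_ANNUAL_GROWTH, YEARS)
print(f"Economy would be roughly 10^{exponent:.0f} times its current size")
```

Even at a modest assumed rate, the multiplier has dozens of zeros, which is the intuition behind the claim that the current rate cannot be sustained that long.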

A final point that makes our time special: we're talking about when to expect transformative AI, and we're living very close in time to the very beginnings of efforts on AI. In well under 1 century, we've gone from the first programmable electronic general-purpose computer to AI models that can compete with humans at speech recognition,6 image classification and much more.

More on the implications of this in the next section.

Semi-informative priors

Report on Semi-informative Priors (abbreviated in this piece as "Semi-informative Priors") is an extensive attempt to forecast transformative AI timelines while using as little information about the specifics of AI as possible. So it is one way of providing an angle on the "burden of proof" - that is, establishing a prior (starting-point) set of probabilities for when transformative AI will be developed, before we look at the detailed evidence.

The central information it uses is about how much effort has gone into developing AI so far. The basic idea:

- If we had been trying and failing at developing transformative AI for thousands of years, the odds of succeeding in the coming decades would be low.

- But if we've only been trying to develop AI systems for a few decades so far, this means the coming decades could contain a large fraction of all the effort that has ever been put in. The odds of developing it in that time are not all that low.

- One way of thinking about this is that before we look at the details of AI progress, we should be somewhat agnostic about whether developing transformative AI is relatively "easy" (can be done in a few decades) or "hard" (takes thousands of years). Since things are still early, the possibility that it's "easy" is still open.

A bit more on the report's approach and conclusions:

Angle of analysis. The report poses the following question (paraphrased): "Suppose you had gone into isolation on the day that people started investing in building AI systems. And now suppose that you've received annual updates on (a) how many years people have been trying to build transformative AI; (b) how much they've 'invested' in it (in terms of time and money); (c) whether they've succeeded yet (so far, they haven't). What can you forecast about transformative AI timelines, having only that information, as of 2021?"

Its methods take inspiration from the Sunrise Problem: "Suppose you knew nothing about the universe except whether, on each day, the sun has risen. Suppose there have been N days so far, and the sun has risen on all of them. What is the probability that the sun will rise tomorrow?" You don't need to know anything about astronomy in order to get a decent answer to this question - there are simple mathematical methods for estimating the probability that X will happen tomorrow, based on the fact that X has happened each day in the past. "Semi-informative Priors" extends these mathematical methods in order to adapt them to transformative AI timelines. (In this case, "X" is "Failing to develop transformative AI, as we have in the past.")
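One of the "simple mathematical methods" for the Sunrise Problem is Laplace's rule of succession. The Python sketch below shows that rule and a toy application to AI. It is not the report's model: the report extends this basic method, so the toy figure should not be read as its estimate. The function names, the uniform prior, and the choice of one "trial" per calendar year since 1956 are all assumptions for illustration.

```python
from fractions import Fraction

def prob_next_success(successes: int, trials: int) -> Fraction:
    """Laplace's rule of succession: uniform prior on the unknown per-trial rate."""
    return Fraction(successes + 1, trials + 2)

def prob_event_within(failures_so_far: int, future_trials: int) -> Fraction:
    """Chance that an event which has never occurred in `failures_so_far` trials
    occurs at least once in the next `future_trials`, under the same uniform prior."""
    return Fraction(future_trials, failures_so_far + future_trials + 1)

# Sunrise Problem: the sun has risen on all N observed days.
n_days = 10_000
p_sunrise = prob_next_success(n_days, n_days)

# Toy AI analogue (assumption: one trial per year, 1956 through 2021, no success yet).
years_without_tai = 2021 - 1956
p_by_2036 = prob_event_within(years_without_tai, 2036 - 2021)

print(f"P(sun rises tomorrow) = {p_sunrise} ~ {float(p_sunrise):.5f}")
print(f"Toy P(event by 2036)  = {p_by_2036} ~ {float(p_by_2036):.3f}")
```

The useful property is that the answer depends only on how many trials have been observed: a short track record of failure leaves a lot of room for success in the next few trials, while a very long one does not.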

Conclusions. I'm not going to go heavily into the details of how the analysis works (see the blog post summarizing the report for more detail), but the report's conclusions include the following:

- It puts the probability of artificial general intelligence (AGI, which would include PASTA) by 2036 between 1-18%, with a best guess of 8%.

- It puts the probability of AGI by 2060 at around 3-25% (best guess ~13%), and the probability of AGI by 2100 at around 5-35%, best guess 20%.

These are lower than the probabilities I give above, but not much lower. This implies that there isn't an enormous burden of proof when bringing in additional evidence about the specifics of AI investment and progress.
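One way to put a number on "not much lower" is to ask how strong the additional evidence would need to be, expressed as a Bayes factor (the ratio of posterior odds to prior odds). This framing is an assumption added for illustration rather than something the report does, and the function names are hypothetical. The sketch treats the ">10%", "~50%" and "~2/3" figures given earlier in this post as point values.

```python
def odds(p: float) -> float:
    """Convert a probability to odds in favor."""
    return p / (1 - p)

def required_bayes_factor(prior: float, posterior: float) -> float:
    """Likelihood ratio that would move `prior` to `posterior` under Bayes' rule."""
    return odds(posterior) / odds(prior)

# year -> (report's best-guess prior, probability given earlier in this post)
pairs = {2036: (0.08, 0.10), 2060: (0.13, 0.50), 2100: (0.20, 2 / 3)}

factors = {
    year: required_bayes_factor(prior, posterior)
    for year, (prior, posterior) in pairs.items()
}
for year, bf in factors.items():
    print(f"by {year}: required Bayes factor ~ {bf:.1f}")

# For contrast: moving from a 1-in-1,000 prior to 15% needs a far larger factor.
contrast = required_bayes_factor(0.001, 0.15)
print(f"1/1,000 -> 15%: required Bayes factor ~ {contrast:.0f}")
```

Under these assumptions the required factors are all in the single digits, compared with a factor in the hundreds for the per-century prior discussed in the previous section.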

Notes on regime start date. Something interesting here is that the report is less sensitive than one might think to how we define the "start date" for trying to develop AGI. (See this section of the full report.) That is:

- By default, "Semi-informative Priors" models the situation as if humanity started "trying" to build AGI in 1956.7 This implies that efforts are only ~65 years old, so the coming decades will represent a large fraction of the effort.

- But the report also looks at other measures of "effort to build AGI" - notably, researcher-time and "compute" (processing power). Even if you want to say that we've been implicitly trying to build AGI since the beginning of human civilization ~10,000 years ago, the coming decades will contain a large chunk of the research effort and computation invested in trying to do so.

Bottom line on this section.

- Occasionally I'll hear someone say something along the lines of "We've been trying to build transformative AI for decades, and we haven't yet - why do you think the future will be different?" At a minimum, this report reinforces what I see as the common-sense position that a few decades of "no transformative AI yet, despite efforts to build it" doesn't do much to argue against the possibility that transformative AI will arrive in the next decade or few.

- In fact, in the scheme of things, we live extraordinarily close in time to the beginnings of attempts at AI development - another way in which our century is "special," such that we shouldn't be too surprised if it turns out to be the key one for AI development.

Economic growth

Another angle on the burden of proof is along these lines:

If PASTA were to be developed anytime soon, and if it were to have the consequences outlined in this series of posts, this would be a massive change in the world - and the world simply doesn't change that fast.

To quantify this: the world economy has grown at a few percent per year for the last 200+ years, and PASTA would imply a much faster growth rate, possibly 100% per year or above.

If we were moving toward a world of explosive economic growth, economic growth should be speeding up today. It's not - it's stagnating, at least in the most developed economies. If AI were really going to revolutionize everything, the least it could be doing now is creating enough value - enough new products, transactions and companies - to make overall US economic growth speed up.

AI may lead to cool new technologies, but there's no sign of anything nearly as momentous as PASTA would be. Going from where we are to where PASTA would take us is the kind of sudden change that hasn't happened in the past, and is unlikely to happen in the future.

(If you aren't familiar with economic growth, you may want to read my brief explainer before continuing.)

I think this is a reasonable perspective, and it especially makes me skeptical of very imminent forecasts for transformative AI (2036 and earlier).

My main response is that the picture of steady growth - "the world economy growing at a few percent per year" - gets a lot more complicated when we pull back and look at all of economic history, as opposed to just the last couple of centuries. From that perspective, economic growth has mostly been accelerating,8 and projecting the acceleration forward could lead to very rapid economic growth in the coming decades.

I wrote about this previously in The Duplicator and This Can't Go On; here I'll very briefly recap the key reports that I cited there.

Could Advanced AI Drive Explosive Economic Growth? explicitly asks the question, "How likely is 'explosive growth' - defined as >30% annual growth in the world economy - by 2100?" It considers arguments on both sides, including both (a) the long view of history that shows accelerating growth and (b) the fact that growth has been remarkably stable over the last ~200 years, implying that something may have changed.

It concludes: "the possibilities for long-run growth are wide open. Both explosive growth and stagnation are plausible."
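To make the growth rates in this section more concrete, the Python sketch below converts annual growth rates into doubling times. The 2% figure is an assumed stand-in for "a few percent per year," and the function name is hypothetical.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years for the economy to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)

rates = {
    "a few percent (2% assumed)": 0.02,
    "explosive-growth threshold (30%)": 0.30,
    "100% per year": 1.00,
}

doubling = {label: doubling_time_years(rate) for label, rate in rates.items()}
for label, years in doubling.items():
    print(f"{label}: doubles every {years:.1f} years")
```

At the assumed 2% rate the economy doubles over a few decades. At the 30% threshold it doubles in under three years, and at 100% it doubles every year.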

Modeling the Human Trajectory asks what future we can expect if we extrapolate out existing trends over the course of economic history. The answer is explosive growth by 2043-2065 - not too far from what my probabilities above suggest. This implies to me that the lack of economic acceleration over the last ~200 years could be a "blip" - soon to be resolved by technology development that restores the feedback loop (discussed in The Duplicator) that can cause acceleration to continue.

To be clear, there are also good reasons not to put too much weight on this as a projection,9 and I am presenting it more as a perspective on the "burden of proof" than as a mainline forecast for when PASTA will be developed.

History of "AI hype"

Another angle on the burden of proof: I sometimes hear comments along the lines of "AI has been overhyped many times in the past, and transformative AI10 is constantly 'just around the corner' according to excited technologists. Your estimates are just the latest in this tradition. Since past estimates were wrong, yours probably are too."

However, I don't think the history of "AI hype" bears out this sort of claim. What should we learn from past AI forecasts? reviewed histories of AI to try to understand what the actual historical pattern of "AI hype" has been.

Its summary gives the following impressions (note that "HLMI," or "human-level machine intelligence," is a fairly similar idea to PASTA):

- The peak of AI hype seems to have been from 1956-1973. Still, the hype implied by some of the best-known AI predictions from this period is commonly exaggerated.

- After ~1973, few experts seemed to discuss HLMI (or something similar) as a medium-term possibility, in part because many experts learned from the failure of the field’s earlier excessive optimism.

- The second major period of AI hype, in the early 1980s, seems to have been more about the possibility of commercially useful, narrow-purpose “expert systems,” not about HLMI (or something similar) ...

- It’s unclear to me whether I would have been persuaded by contemporary critiques of early AI optimism, or whether I would have thought to ask the right kinds of skeptical questions at the time. The most substantive critique during the early years was by Hubert Dreyfus, and my guess is that I would have found it persuasive at the time, but I can’t be confident of that.

My summary is that it isn't particularly fair to say that there have been many waves of separate, over-aggressive forecasts about transformative AI. Expectations were probably too high in the 1956-1973 period, but I don't think there is much reason here to impose a massive "burden of proof" on well-researched estimates today.

Other angles on the burden of proof

Here are some other possible ways of capturing the "That's too wild" reaction:

"My cause is very important" claims. Many people - throughout the world today, and throughout history - claim or have claimed that whatever issue they're working on is hugely important, often that it could have global or even galaxy-wide stakes. Most of them have to be wrong.

Here I think the key question is whether this claim is supported by better arguments, and/or more trustworthy people, than other "My cause is very important" claims. If you're this deep into reading about the "most important century" hypothesis, I think you're putting yourself in a good position to answer this question for yourself.

Expert opinion will be covered extensively in future posts. For now, my main position is that the claims I'm making neither contradict a particular expert consensus, nor are supported by one. They are, rather, claims about topics that simply have no "field" of experts devoted to studying them. Some people might choose to ignore any claims that aren't actively supported by a robust expert consensus; but given the stakes, I don't think that is what we should be doing in this case.

(That said, the best available survey of AI researchers has conclusions that seem broadly consistent with mine, as I'll discuss in the next post.)

Uncaptured "That's too wild" reactions. I'm sure this piece hasn't captured every possible angle that could be underlying a "That's too wild" reaction. (Though not for lack of trying!) Some people will simply have irreducible intuitions that the claims in this series are too wild to take seriously.

A general take on these angles. Something that bugs me about most of the angles in this section is that they seem too general. If you simply refuse (absent overwhelming evidence) to believe any claim that fits a "my cause is very important" pattern, or isn't already backed by a robust expert consensus, or simply sounds wild, that seems like a dangerous reasoning pattern. Presumably some people, sometimes, will live in the most important century; we should be suspicious of any reasoning patterns that would reliably11 make these people conclude that they don't.

1. Of course, the answer could be "A kajillion years from now" or "Never."

2. Technically, these probabilities are for “artificial general intelligence”, not transformative AI. The probabilities for transformative AI could be higher if it’s possible to have transformative AI without artificial general intelligence, e.g. via something like PASTA.

3. This corresponds to the second two bullet points from this section.

4. From Wikipedia: "Genetic measurements indicate that the ape lineage which would lead to Homo sapiens diverged from the lineage that would lead to chimpanzees and bonobos, the closest living relatives of modern humans, around 4.6 to 6.2 million years ago.[23] Anatomically modern humans arose in Africa about 300,000 years ago,[24] and reached behavioural modernity about 50,000 years ago.[25]"

5. E.g., it emphasizes the odds of being among the most important "people" instead of "centuries."

6. I don't have a great single source for this, although you can see this paper. My informal impression from talking to people in the field is that AI speech recognition is at least quite close to human-level, if not better.

7. "The field of AI is largely held to have begun in Dartmouth in 1956"

8. There is an open debate on whether past economic data actually shows sustained acceleration, as opposed to a series of very different time periods with increasing growth rates. I discuss how the debate could change my conclusions here.

9. "Modeling the Human Trajectory" emphasizes that the model that generates these numbers “is not flexible enough to fully accommodate events as large and sudden as the industrial revolution.” The author adds: "Especially since it imperfectly matches the past, its projection for the future should be read loosely, as merely adding plausibility to an upswing in the next century. Davidson (2021) ["Could Advanced AI Drive Explosive Economic Growth?"] points at one important way the projections could continue to be off for many decades: while the model’s dynamics are dominated by a spiraling economic acceleration, people are still an important input to production, and, if anything becoming wealthy has led to people having fewer children. In the coming decades, that could hamper the predicted acceleration, to the degree we can’t or don’t substitute robots for workers."

10. These comments usually refer to AGI rather than transformative AI, but the concepts are similar enough that I'm using them interchangeably here.

11. (Absent overwhelming evidence, which I don't think we should generally assume will always be present when it is "needed.")

I very much like how careful you are in looking at this question of the burden of proof when discussing transformative AI. One thing I'm uncertain about, though: is the "most important century" framing the best one to use when discussing this? It seems to me like "transformative AI is coming this century" and "this century is the most important century" are very different claims which you tend to conflate in this sequence.

One way of thinking about this: suppose that, this century, there's an AI revolution at least as big as the industrial revolution. How many more similarly-sized revolutions are plausible before reaching a stable galactic civilisation? The answer to this question could change our estimate of P(this is the most important century) by an order of magnitude (or perhaps two, if we have good reasons to think that future revolutions will be more important than this century's TAI), but has a relatively small effect on what actions we should take now.

More generally, I think that claims which depend on the specifics of our long-term trajectory after transformative AI are much easier to dismiss as being speculative (especially given how much pushback claims about reaching TAI already receive for being speculative). So I'd much rather people focus on the claim that "AI will be really, really big" than "AI will be bigger than anything else which comes afterwards". But it seems like framing this sequence of posts as the "most important century" sequence pushes towards the latter.

Oh, also, depending on how you define "important", it may be the case that past centuries were more important because they contained the best opportunities to influence TAI - e.g. when the west became dominant, or during WW1 and WW2, or the cold war. Again, that's not very action-guiding, but it does make the "most important century" claim even more speculative.

Thoughts?

I think AI is much more likely to make this the most important century than to be "bigger than anything else which comes afterwards." Analogously, the 1000 years after the IR are likely to be the most important millennium even though it seems basically arbitrary whether you say the IR is more or less important than AI or the agricultural revolution. In all those cases, the relevant thing is that a significant fraction of all remaining growth and technological change is likely to occur in the period, and many important events are driven by growth or tech change.

I think it's more likely than not that there will be future revolutions as important as TAI, but there's a good probability that AI leads to enough acceleration that a large fraction of future revolutions occur in the same century. There's room for debate over the exact probability and timeline for such acceleration, but I think there is no real way to argue for anything as low as 10%.

I agree they're different claims; I've tried not to conflate them. For example, in this section I give different probabilities for transformative AI and two different interpretations of "most important century."

This post contains a few cases where I think the situation is somewhat confusing, because there are "burden of proof" arguments that take the basic form, "If this type of AI is developed, that will make it likely that it's the most important century; there's a burden of proof on arguing that it's the most important century because ___." So that does lead to some cases where I am defending "most important century" within a post on AI timelines.

I struggled a bit with this; you might find this page helpful, especially the final section, "Holistic intent of the 'most important century' phrase." I ultimately decided that relative to where most readers are by default, "most important century" is conveying a more accurate high-level message than something like "extraordinarily important century" - the latter simply does not get across the strength of the claim - even though it's true that "most important century" could end up being false while the overall spirit of the series (that this is a massively high-stakes situation) ends up being right.

I also think it's the case that the odds of "most important century" being literally true are still decently high (though substantially lower than "transformative AI this century"). A key intuition behind this claim is the idea that PASTA could radically speed things up, such that this century ends up containing as much eventfulness as we're used to from many centuries. (Some more along these lines in the section starting "To put this possibility in perspective, it's worth noting that the world seems to have 'sped up'" from the page linked above.)

I address this briefly in footnote 1 on the page linked above: "You could say that actions of past centuries also have had ripple effects that will influence this future. But I'd reply that the effects of these actions were highly chaotic and unpredictable, compared to the effects of actions closer-in-time to the point where the transition occurs."

Thanks for the response, that all makes sense. I missed some of the parts where you disambiguated those two concepts; apologies for that. I suspect I still see the disparity between "extraordinarily important century" and "most important century" as greater than you do, though, perhaps because I consider value lock-in this century less likely than you do - I haven't seen particularly persuasive arguments for it in general (as opposed to in specific scenarios, like AGIs with explicit utility functions or the scenario in your digital people post). And relatedly, I'm pretty uncertain about how far away technological completion is - I can imagine transitions to post-human futures in this century which still leave a huge amount of room for progress in subsequent centuries.

I agree that "extraordinarily important century" and "transformative century" don't have the same emotional impact as "most important century". I wonder if you could help address this by clarifying that you're talking about "more change this century than since X" (for X = a millennium ago, agriculture, cavemen, or our divergence from chimpanzees). "Change" also seems like a slightly more intuitive unit than "importance", especially for non-EAs for whom "importance" is less strongly associated with "our ability to exert influence".

Agreed that we probably disagree about lock-in. I don't want my whole case to ride on it, but I don't want it to be left out as an important possibility either.

With that in mind, I think the page I linked is conveying the details of what I mean pretty well (although I also find the "more change than X" framing interesting), and I think "most important century" is still the best headline version I've thought of.

Strongly agree - the final paragraph rubbed me the wrong way because it equated "the most important century" with "people needing to take action to save the world".

Since you're (among other things) listing reference classes that people might put claims about transformative AI into, I'll note that millenarianism is a common one among skeptics. I.e., "lots of [mostly religious] groups throughout history have claimed that society is soon going to be swept away by an as-yet-unseen force and replaced with something new, and they were all deluded, so you are probably deluded too".

Agreed. This is similar in spirit to the "My cause is most important" part.

I don't feel this post engages with the strongest reasons to be skeptical of a growth explosion. The following post outlines what I would consider some of the strongest such reasons:

https://magnusvinding.com/2021/06/07/reasons-not-to-expect-a-growth-explosion/

Most of your post seems to be arguing that current economic trends don't suggest a coming growth explosion.

If current economic trends were all the information I had, I would think a growth explosion this century is <<50% likely (maybe 5-10%?). My main reason for a higher probability is AI-specific analysis (covered in future posts).

This post is arguing not "Current economic trends suggest a growth explosion is near" but rather "A growth explosion is plausible enough (and not strongly enough contraindicated by current economic trends) that we shouldn't too heavily discount separate estimates implying that transformative AI will be developed in the coming decades." I mostly don't see the arguments in the piece you linked as providing a strong counter to this claim, but if you highlight which you think provide the strongest counters, I can consider more closely.

The one that seems initially like the best candidate for such an argument is "Many of our technologies cannot get orders of magnitude more efficient." But I'm not arguing that e.g. particular energy technologies will get orders of magnitude more efficient; I'm arguing we'll see enough acceleration to be able to quickly develop something as transformative as digital people. There may be an argument that this isn't possible due to key bottlenecks being near their efficiency limits, but I don't think the case in your piece is at that level of specificity.

Thanks for your reply :-)

That's not quite how I'd summarize it: four of the six main points/sections (the last four) are about scientific/technological progress in particular. So I don't think the reasons listed are mostly a matter of economic trends in general. (And I think "reasons listed" is an apt way to put it, since my post mostly lists some reasons to be skeptical of a future growth explosion — and links to some relevant sources — as opposed to making much of an argument.)

I get that :-) But again, most of the sections in the cited post were in fact about scientific and technological trends in particular, and I think these trends do support significantly lower credences in a future growth explosion than the ones you hold. For example, the observation that new scientific insights per human have declined rapidly suggests that even getting digital people might not be enough to get us to a growth explosion, as most of the insights may have been plugged already. (I make some similar remarks here.)

Additionally, one of the things I had in mind with my remark in the earlier comment relates to the section on economic growth, which says:

In relation to this point in particular, I think the observation mentioned in the second section of my post seems both highly relevant and overlooked, namely that if we take a nerd-dive into the data and look at doublings, we have actually seen an unprecedented deceleration (in terms of how the growth rate has changed across doublings). And while this does not by any means rule out a future growth explosion, I think it is an observation that should be taken into account, and it is perhaps the main reason to be skeptical of a future growth explosion at the level of long-run growth trends. So that would be the kind of reason I think should ideally have been discussed in that section. Hope that helps clarify a bit where I was coming from.

Fair point re: economic trends vs. technological trends, though I would stand by the outline of what I said: your post seems to be arguing that current trends don't suggest a coming explosion, but not that they establish a super-high burden of proof for expecting one.

Re: "For example, the observation that new scientific insights per human have declined rapidly suggests that even getting digital people might not be enough to get us to a growth explosion, as most of the insights may have been plugged already."

Note that the growth modeling analyses I draw on incorporate the "ideas are getting harder to find" dynamic and discuss it at length. So I think a more specific, quantitative argument needs to be made here - I didn't argue for the plausibility of explosive growth based on non-declining insights per mind.

Re: "I think the observation mentioned in the second section of my post seems both highly relevant and overlooked, namely that if we take a nerd-dive into the data and look at doublings, we have actually seen an unprecedented deceleration (in terms of how the growth rate has changed across doublings). And while this does not by any means rule out a future growth explosion, I think it is an observation that should be taken into account, and it is perhaps the main reason to be skeptical of a future growth explosion at the level of long-run growth trends."

I strongly agree with this, but feel that it's been acknowledged both in the reports I draw on and in my pieces. E.g., I discuss the demographic transition and present the possible explosion as one possibility, rather than as a future strongly implied by the past (and this is something I made a deliberate effort to do).

To be clear, my claim here isn't "The points you're raising are unimportant." I think they are quite important. In a world with linear insights per human and no deceleration, I would've written this series very differently; the declining returns and deceleration move me toward "The developments in question are plausible from (but not strongly implied by) the broad trends, and adding the AI-specific analysis moves me to above 50/50 this century, but not dramatically above."

I'm not sure what you mean by a "super-high burden of proof", but I think the reasons provided in that post represent fairly strong evidence against expecting a future growth explosion, and, if you will, a fairly high burden of proof against it. :)

Specifically, I think the points about the likely imminent end of Moore's law (in the section "Moore’s law is coming to an end") and the related point that hardware has already slowed down for years in a pattern that's consistent with an imminent (further) slowdown (see e.g. the section "The growth of supercomputers has been slowing down for years") count as fairly strong evidence against a future computer-driven growth explosion, beyond the broader economic trends, and I think they count as being among the main reasons to be skeptical.

Perhaps I can ask: What are your reasons for believing that we'll see an unprecedented growth explosion in computer hardware in this century, despite the observed slowdown in Moore's law and related metrics? Or do you think a future growth explosion would generally not require a hardware explosion?

(I suspect my main disagreement with your takes on likely future scenarios comes down to expectations about hardware. I don't see why we should expect a hardware explosion, and I'm keen to see a strong case for why we should expect it.)

At the risk of repeating myself, I'll just clarify that my point isn't merely that economic growth has declined significantly since the 1960s. That would be a fairly trivial and obvious point. :)

The key point I was gesturing at, and which I haven't seen discussed anywhere else, is that when we look at doubling rates across the entire history of economic growth (and perhaps the history of life, cf. Hanson, 1998), we have seen a slowdown since the mid-20th century that's unprecedented in that entire history. To quote the key passage:

This pattern seems overlooked in Hanson's discussions of a future growth explosion (to be fair, the pattern was less clear when he wrote his 1998 paper, but it was clear when he wrote The Age of Em), and is relevant precisely because his analysis seems motivated by an inductive case for a new growth mode (which, to be clear, is by no means decisively undermined by the unprecedented slowdown we've seen since the mid-20th century, but this unique slowdown is highly relevant counterevidence that appears unduly neglected).

Why exactly is this obvious?

I understand the basic thrust of actions taken earlier having a greater chance to cause significant downstream effects because there are simply more years in play, but is that all there is to the claim?

FYI this link is broken for me: "Audio version available at Cold Takes"

Thanks! This post is using experimental formatting so I can't fix this myself, but hopefully it will be fixed soon.