The potential upsides of advanced AI are enormous, but there’s no guarantee they’ll be distributed optimally. In this talk, Cullen O’Keefe, a researcher at the Centre for the Governance of AI, discusses one way we could work toward equitable distribution of AI’s benefits — the Windfall Clause, a commitment by artificial intelligence (AI) firms to share a significant portion of their future profits — as well as the legal validity of such a policy and some of the challenges to implementing it.

Below is a transcript of the talk, which we’ve lightly edited for clarity. You can also watch it on YouTube or read it on effectivealtruism.org.

The Talk

Thank you. Today I'll be talking about a research project that I’m leading at the Centre for the Governance of Artificial Intelligence (AI) on the Windfall Clause.

Many [people in the effective altruism movement] believe that AI could be a big deal. As a result, we spend a lot of time focusing on its potential downsides, the so-called x-risks [existential risks] and s-risks [suffering risks].

But if we manage to avoid those risks, AI could be a very good thing. It could generate wealth on a scale never before seen in human history. And therefore, if we manage to avoid the worst downsides of artificial intelligence, we have a lot of work ahead of us.

Recognizing this opportunity (and challenge), Nick Bostrom, at the end of Superintelligence, laid out what he called “the common good principle” — the premise that advanced AI should be developed only for the benefit of all humanity.

It's in service of the common good principle that we've been working on the Windfall Clause at the Centre for the Governance of AI.

We've been working on it for about a year [as of summer 2019], and I'd like to share with you some of our key findings.

I'll start by defining the project’s goal, then describe how we're going to pursue that goal, and end by sharing some open questions.

The goal of the Windfall Clause project

Our goal with this project is to work toward distributing the gains from AI optimally. Obviously, this is both easier said than done and underdefined, as it invokes deep questions of moral and political philosophy. We don't aim to answer those questions [at the time of this update], though hopefully our friends from the Global Priorities Institute will help us do that [in the future]. But we do think that this goal is worth pursuing, and one we can make progress on for a few reasons.

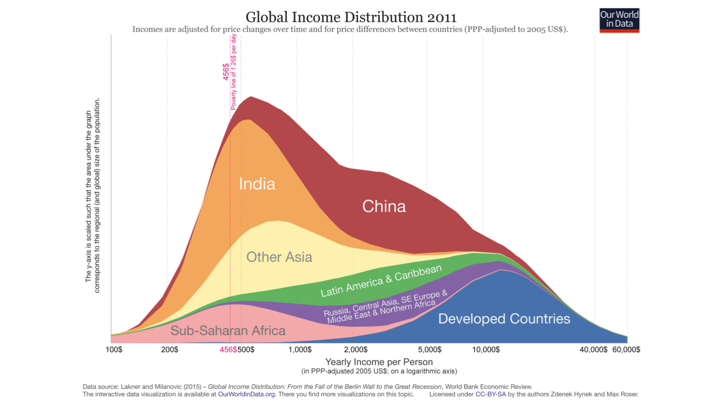

First, it's not a goal that we expect will be achieved naturally. The gains from the current global economy are distributed very unequally, as graphs like [the one below indicate].

AI could further exacerbate these trends by primarily benefiting the world's wealthiest economies, and also by devaluing human labor. Indeed, industrialization has been a path to development for a number of economies, including the one in which we sit today.

And by eroding the need for human labor with complementary technologies like robotics, AI could remove that path to development.

Industry structure is also very relevant. The advanced tech industries tend to be quite concentrated [with a few large companies dominating most major markets].

A number of people have speculated that due to increasing returns on data and other input factors, AI could be a natural monopoly or oligopoly. If so, we should expect oligopoly pricing to take effect, which would erode social surplus, transferring it from consumers to shareholders of technology producers.

Working toward the common good principle could serve as a useful way to signal that people in the technology fields are taking the benefits of AI seriously — thereby establishing a norm of beneficial AI development. And on an international level, it could credibly signal that the gains from cooperative (or at least non-adversarial) AI development outweigh the potential benefits of an AI race.

One caveat: I don't want to fall victim to the Luddite fallacy, which is the prediction throughout history that new technologies would erode the value of human labor, cause mass unemployment, and more.

Those predictions have repeatedly been proven wrong, and could be proven wrong again with AI. The answer will ultimately turn on complex economic factors that are difficult to predict a priori. So instead of making predictions about what the impacts of AI will be, I merely want to assert that there are plausible reasons to worry about the gains from AI.

How we intend to pursue the Windfall Clause

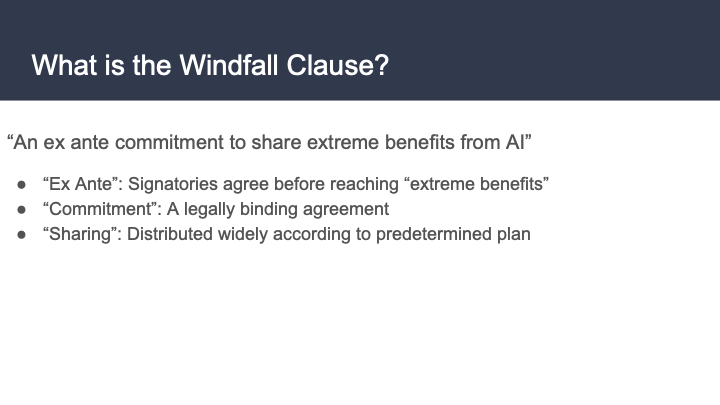

Our goal is to optimally distribute the gains from AI, and I've shared some reasons why we think that goal is worth pursuing. Now I’ll talk about our mechanism for pursuing it, which is the Windfall Clause.

In a phrase, the Windfall Clause is an ex ante commitment to share extreme benefits from AI. I'm calling it an “ex ante commitment” because it's something that we want [companies working on AI] to agree to before [any firm] reaches, or comes close to reaching, extreme benefits.

It's a commitment mechanism, not just an agreement in principle or a nice gesture. It’s something that, in theory, would be legally binding for the firms that sign it. So ultimately, the Windfall Clause is about distributing benefits in a way that's closer to the goal of optimal distribution than we would have without the Windfall Clause.

A lot turns on the phrase “extreme benefits.” It’s worth defining this a bit more.

For the purposes of this talk, the phrase is synonymous with “windfall profits,” or just “windfall” generally. Qualitatively, you can think of it as something like “benefits beyond what we would expect an AI developer to achieve without achieving a fundamental breakthrough in AI” — something along the lines of AGI [artificial general intelligence] or transformative AI. Quantitatively, you can think of it as on the order of trillions of dollars of annual profit, or as profits that exceed 1% of the world’s GDP [gross domestic product].

A key part of the Windfall Clause is translating the definition of “windfall” into meaningful, firm obligations. And we're doing that with something that we're calling a “windfall function.” That's how we translate the amount of money a firm is earning into obligations that are in accordance with the Windfall Clause.

We wanted to develop a windfall function that was clear, had low expected costs, scaled up with firm size, and was hard for firms to manipulate or game to their advantage. We also wanted to ensure that it would not [competitively] disadvantage the signatories, for reasons I'll talk about later.

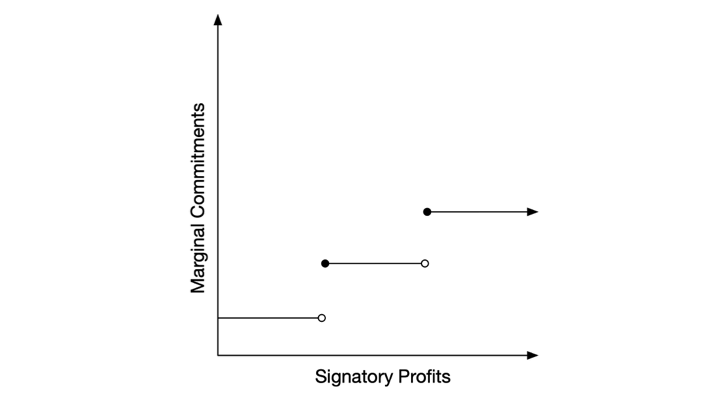

To make this more concrete, you can think of [the windfall function] like this: When firm profits are at normal levels (i.e., the levels that we currently see), obligations remain low, nominal, or nothing at all. But as a firm reaches windfall profits, over time their obligations would scale up on the margin. It’s like income taxes; the more you earn, the more on the margin you're obligated to pay.

Just as a side note, this particular example [in the slide above] uses a step function on the margin, but you can also think of it as smoothly increasing over time. And there might be strategic benefits to that.
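[Editor's sketch] To make the income-tax analogy concrete, here is a minimal Python sketch of a windfall function that uses a step function on the margin. Everything specific in it is an assumption for illustration: the names `Bracket`, `BRACKETS`, and `windfall_obligation`, the bracket thresholds and rates, and the choice to measure profits as a share of world GDP. The talk does not specify any of these values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bracket:
    lower: float  # lower bound of the bracket, as a fraction of world GDP
    rate: float   # marginal share of profits in this bracket owed under the clause


# Hypothetical brackets, for illustration only (not taken from the talk).
# They must be sorted by ascending lower bound.
BRACKETS = (
    Bracket(lower=0.0, rate=0.0),     # normal profit levels: no obligation
    Bracket(lower=0.001, rate=0.01),  # above 0.1% of world GDP: nominal obligation
    Bracket(lower=0.01, rate=0.20),   # above 1% of world GDP: windfall territory
    Bracket(lower=0.10, rate=0.50),
)


def windfall_obligation(profit, world_gdp, brackets=BRACKETS):
    """Return the amount owed, taxing each slice of profit at its bracket's rate."""
    if world_gdp <= 0:
        raise ValueError("world_gdp must be positive")
    if profit <= 0:
        return 0.0

    share = profit / world_gdp  # profit expressed relative to the world economy
    owed_share = 0.0
    for i, bracket in enumerate(brackets):
        if share <= bracket.lower:
            break  # profit never reaches this bracket or any later one
        # The bracket ends where the next one begins; the last one is open-ended.
        upper = brackets[i + 1].lower if i + 1 < len(brackets) else float("inf")
        owed_share += (min(share, upper) - bracket.lower) * bracket.rate
    return owed_share * world_gdp


if __name__ == "__main__":
    world_gdp = 100e12  # assumed round figure, in dollars
    for profit in (50e9, 2e12, 20e12):
        owed = windfall_obligation(profit, world_gdp)
        print(f"profit ${profit:,.0f} -> obligation ${owed:,.0f}")
```

With these made-up brackets, a firm earning 2% of world GDP would owe a bit over 10% of its profits overall, even though its top marginal rate is 20%, because only the slice of profit above each threshold is charged at the higher rate. A smoothly increasing schedule would replace the bracket table with a continuous marginal-rate curve.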

The next natural question is: Is this worth pursuing?

A key input to that question is whether it could actually work. Since we're members of the effective altruism community, we want [the change we recommend to actually have an effect].

One good reason to think that the Windfall Clause might work is that it appears to be permissible as a matter of corporate law. This was a problem that we initially had to confront, because in American corporate law, firm directors (the people who control and make decisions for corporations) are generally expected to act in the best interests of their shareholders. After all, the shareholders are the ones who provide money to start the firm. At the same time, the directors have a lot of discretion in how they make decisions for the firm. The shareholders are not supposed to second-guess every decision that corporate directors make. And so traditionally, courts have been quite deferential to firm boards of directors and executives, applying a very high standard to find that they have violated their duties to shareholders. In addition, corporate philanthropy has traditionally been seen as an acceptable means of pursuing the best interests of shareholders.

In fact, in all seven cases in which shareholders have challenged firms on their corporate philanthropy, courts have upheld it as permissible. That’s a good track record. Why is it permissible if firm directors are supposed to be acting in the best interest of shareholders? Traditionally, firms have noted that corporate philanthropy can bring benefits like public relations value, improved relations with the government, and improved employee relations.

The Windfall Clause could bring all of these as well. We know that there is increasing scrutiny [of firms’ actions] by the public, the government, and their own employees. We can think of several different examples. Amazon comes to mind. And the Windfall Clause could help [firms respond to this type of scrutiny]. When you add in executives who are sympathetic to examining the negative implications of artificial intelligence, then there's a plausible case that they would be interested in signing the Windfall Clause.

Another important consideration: We think that the Windfall Clause could be made binding as a matter of contract law, at least in theory. Obviously, we're thinking about how a firm earning that much money might be able to circumvent, delay, or hinder performing its obligations under the Windfall Clause. And that invokes questions of internal governance and rule of law. The first step, at least theoretically, is making the Windfall Clause binding.

Open questions

We’ve done a lot of work on this project, but many open questions remain.

Some of the hard ones that we've grappled with so far are:

1. What's the proper measure of “bigness” or “windfall”? I previously defined it in relation to profits, but there's also a good case to be made that market cap is the right measure of whether a firm has achieved windfall status, since market cap is a better predictor of a firm's long-term expected value. A related question: Should windfall be defined relative to the world economy or in absolute terms? We’ve made the assumption that it would be relative, but it remains an open question.

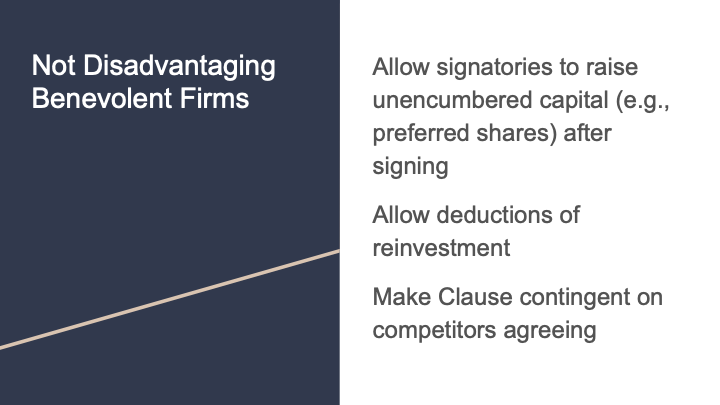

2. How do we make sure that the Windfall Clause doesn’t disadvantage benevolent firms, competitively speaking? This is a more important question. It would be quite bad if multiple firms were potential contenders for achieving AGI, but only the most benevolent ones signed onto the Windfall Clause. They’d be putting themselves at a competitive disadvantage by giving themselves less money to reinvest [if they activated the Windfall Clause], or making themselves less able to attract capital to invest in that goal [because investor returns would probably be lower]. Therefore, it would become more likely that amoral firms would achieve windfall profits or AGI [before benevolent firms]. We’ve had some ideas for how to prevent this [see slide below], and think these could go a long way toward solving the problem. But it’s unclear whether these ideas are sufficient.

Those are questions that we have explored.

Some questions that are still largely open — and that we intend to continue to pursue throughout the lifetime of this project — include:

1. How does the Windfall Clause interact with different policy measures that have been proposed to address some of the same problems?

2. How do we distribute the windfall? I think that's a bigger question, and a pretty natural question for us to ponder as EAs. There are also some related questions: Who has input into this process? How flexible should this be to input at later stages in the lifetime of the Clause? How do we ensure that the windfall is being spent in accordance with the common good principle?

Luckily, as members of the EA community, we have a lot of experience answering these questions through charity design and governance. I think that if this project goes well and we decide to pursue it further, this could be a relevant project for the EA community as a whole to undertake — to think about how to spend the gains from AI.

Accordingly, the Centre for the Governance of AI, in collaboration with partners like OpenAI and the Partnership on AI, intends to make this a flagship policy investigation. It is one in a series of investigations on the general question of how to ensure that the gains from AI are spent and distributed well.

In closing, I'd like to reiterate the common good principle and make sure that when we think about the potential downside risks of AI, we don't lose sight of the fact that [a safe and powerful AI could address many of the world’s problems]. [Keeping this fact in sight] is a task worth pursuing on its own.

If you're interested in learning more about this project and potentially contributing to it, I invite you to email me. Thank you so much.

Moderator: Thanks for your talk. I think this is an [overlooked] area, so it is illuminating to [hear about]. Going back to the origins of the project, did you find historical case studies — other examples where companies came into a lot of wealth or power, and tried to distribute it more broadly?

Cullen: Yeah. We found one interesting one, which is in what is now the Democratic Republic of the Congo. When that area was under Belgian colonial rule, a mineral company came into so much wealth from its extractive activities there that it felt embarrassed about how much money it had. The company tried to do charitable work in the Congo with the money as a way of defusing tensions.

I don't think that turned out well for the company. But it is also quite common for firms throughout the world to engage in corporate social responsibility campaigns in the communities in which they work, as a means of improving community relations and ultimately mitigating the risk of expropriation, or activist action, or adverse governmental actions of other sorts. There's a range of cases, from ones that are closely analogous to the Windfall Clause to more common, everyday ones.

Moderator: What mechanisms currently exist in large companies that are closest to the kind of distribution you're thinking about?

Cullen: A number of companies make commitments that are contingent on their profit levels. For example, Paul Newman has a line of foods. They give all of their profits to charity; it's [Newman’s] charitable endeavor. That's a close analogy to this.

It’s also quite common to see companies making commitments along the lines of a certain percentage of a product’s purchase going to charity. That's similar, although it doesn't involve the firm’s relative profit levels.

It's not super common to see companies make commitments contingent on profit levels. But OpenAI just restructured into a capped-profit model. That’s somewhat similar to [what we’re proposing in the Windfall Clause project]. They're giving all profits above a certain level to the nonprofit that continues to govern them.

Moderator: You mentioned toward the end of your talk that there are some legally binding commitments that a company could be held to, assuming that they decided to enter into this sort of social contract. But you could also imagine that they become so powerful from having come into so many resources that the law is a lot weaker. Can you say a little bit more on the mechanisms you have in mind that might be used to hold their feet to the fire?

Cullen: Yeah, absolutely. I think this is an outstanding problem in AI governance that's worth addressing not just for this project, but in general. We don’t want companies to be above the law once they achieve AGI. So it’s worth addressing for reasons beyond just the Windfall Clause.

There are very basic things you could do, like have the Windfall Clause set up in a country with good rule of law and enough of a police force to plausibly enforce the clause. But I don't expect this project to resolve this question.

Another point along the same lines is that this involves questions of corporate governance. Who in a corporation has the authority to tell an AGI, or an AGI agent, what to do? That will be relevant to whether we can expect AGI-enabled corporations to follow the rule of law. It also involves safety questions around whether AI systems are designed to be inherently constrained by the rule of law, regardless of what their operators tell them to do. I think that's worth investigating from a technical perspective as well.

Moderator: Right. Have you had a chance to speak with large companies that look like they might come into a windfall, as a result of AI, and see how receptive they are to an idea like this?

Cullen: We haven't done anything formal along those lines yet. We've informally pitched this at a few places, and have received generally positive reactions to it, so we think it's worth pursuing for that reason. I think that we’ve laid the foundation for further discussions, negotiations, and collaborations to come. That's how we see the project at this stage.

Presumably, the process of getting commitments (if we want to pursue it that far) will involve further discussions and tailoring to the specific receptiveness of different firms based on where they perceive themselves to be, what the executives’ personal views are, and so forth.

Moderator: Right. One might think that if you're trying to take a vast amount of resources and make sure that they get distributed to the public at large — or at least distributed to a large fraction of the public — that you might want those resources to be held by a government whose job it is to distribute them. Is that part of your line of thinking?

Cullen: Yeah. I think there are reasons that the Windfall Clause could be preferable to taxation. But that is not to say that governments shouldn't have a role or input, both for democratic accountability and for pragmatic reasons such as avoiding nationalization.

One general consideration is that tax dollars tend not to be spent as effectively as charitable dollars, for a number of reasons. And, for quite obvious reasons involving stakeholder incentives, taxes tend to be spent primarily on the voters of that constituency. On the other hand, the common good principle, to which we're trying to stick with this project, demands that we distribute resources more evenly.

But as a pragmatic consideration, making sure that governments feel like they have influence in this process is something that we are quite attentive to.

Moderator: Right. And is there some consideration of internationalizing the project?

Cullen: Yeah. One thing that this project doesn't do is talk about control of AGI. And one might reasonably think that AGI should be controlled by humanity collectively, or through some decision-making body that is representative of a wide variety of needs. [Our project] is more to do with the benefits of AI, which is a bit different from control. I think that's definitely worth thinking about more, and might be very worthwhile. This project just doesn't address it.

Moderator: As a final question, the whole talk is predicated on the notion that there would be a windfall from having a more advanced AI system. What are the circumstances in which you wouldn't get such a windfall, and all of this is for naught?

Cullen: That’s definitely a very good question. I'm not an economist, so what I'm saying here might be more qualitative than I would like. But if you're living in a post-scarcity economy, then money might not be super relevant. But it's hard to imagine this in a case where corporations remain accountable to their shareholders. Their shareholders are going to want to benefit in some way, and so there's going to have to be some way to distribute those benefits to shareholders.

Whether that looks like money as we currently conceive it, or vouchers for services that the corporation is itself providing, is an interesting question — and one that I think current corporate law and corporate governance are not well-equipped to handle, since money is the primary mode of benefit. But you can think of the Windfall Clause as capturing other sorts of benefits as well.

A more likely failure mode is just that firms begin to primarily structure themselves to benefit insiders, without meaningful accountability from shareholders. This is also a rule-of-law question. Because if that begins to happen, then the normal thing that you expect is for shareholders to vote out or sue the bad directors. And whether they're able to do that turns on whether the rule of law holds up, and whether there's meaningful accountability from a corporate-governance perspective.

If that fails to happen, you could foresee that the benefits might accrue qualitatively inside of corporations for the corporate directors.

Moderator: Right. Well, on that note, thank you so much for your talk.

Cullen: Thank you.

Apologies for nitpicking, but I just noticed that "s-risks" was explained here by the editor(s) as referring to "systemic risks". This should be "suffering risks", e.g. see here.

(I also double checked and the talk only uses the abbreviation s-risks and the paper itself doesn't mention "s-risks," "systemic risks," or "suffering." The transcript is also on effectivealtruism.org)

I thought this was an informative talk. I especially enjoyed the exposition of the issue of unequal distribution of gains from AI. However, I am not quite convinced that a voluntary Windfall Clause for companies to sign up to would be effective. The examples you gave in the talk aren't quite cases where the voluntary reparations by companies come close to the level of contribution one would reasonably expect from them to address the damage and inequality the companies caused. I am curious: if the windfall issue is essentially one of oligopolistic regulation, since there's only a small number of them, would it be more effective to simply tax the few oligopolies, instead of relying on voluntary signups? Perhaps what we need is not a voluntary legally binding contract, but a legally binding contract, period, regardless of the voluntariness of the companies involved?