Aaron Bergman

Bio

I graduated from Georgetown University in December 2021 with degrees in economics and mathematics and a minor in philosophy. There, I founded and helped to lead Georgetown Effective Altruism. Over the last few years, I've interned at the Department of the Interior, the Federal Deposit Insurance Corporation, and Nonlinear.

Blog: aaronbergman.net

How others can help me

- Give me honest, constructive feedback on any of my work

- Introduce me to someone I might like to know :)

- Offer me a job if you think I'd be a good fit

- Send me recommended books, podcasts, or blog posts that there's like a >25% chance a pretty-online-and-into-EA-since 2017 person like me hasn't consumed

- Rule of thumb standard maybe like "at least as good/interesting/useful as a random 80k podcast episode"

How I can help others

- Open to research/writing collaboration :)

- Would be excited to work on impactful data science/analysis/visualization projects

- Can help with writing and/or editing

- Discuss topics I might have some knowledge of

- like: math, economics, philosophy (esp. philosophy of mind and ethics), psychopharmacology (hobby interest), helping to run a university EA group, data science, interning at government agencies

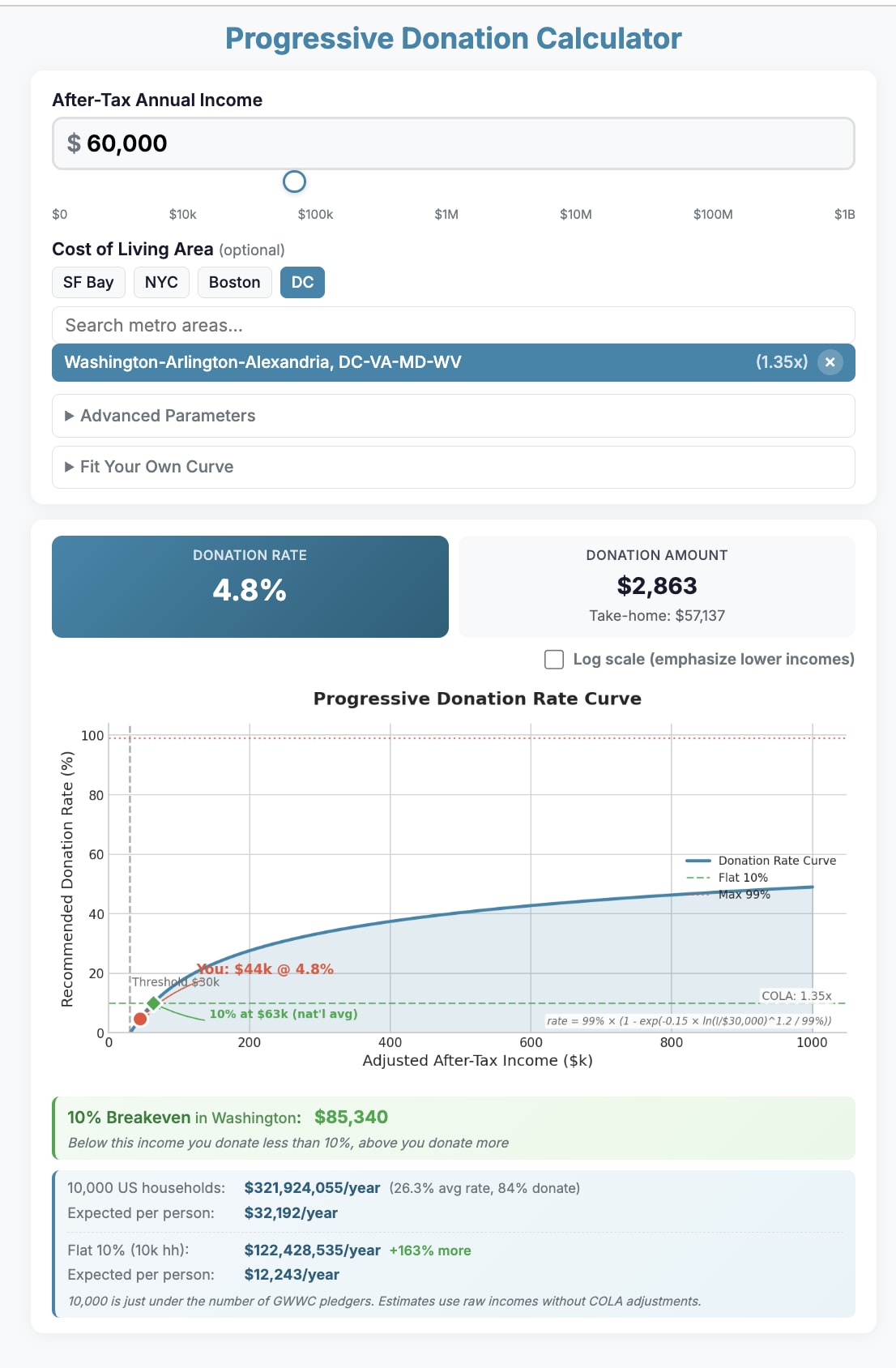

I made a tool to play around with how alternatives to the 10% GWWC Pledge default norm might change:

- How much individuals are "expected" to pay

- The idea being that there are functions of income that people would prefer to the 10% pledge behind some relevant veil of ignorance, along the lines of "I don't want to commit 10% of my $30k salary, but I gladly commit 20% of my $200k salary"

- How much total donation revenue gets collected

There's some discussion at this Tweet of mine

Some folks pushed back a bit, citing the following:

- The pledge isn't supposed to be revenue maximizing

- A main function of the pledge is to "build the habit of giving" and complexity/higher expectations undermines this

- Many pledgers give >10% - the pledge doesn't set an upper bound

I don't find these points very convincing...

Some points of my own

- To quote myself: "I think it would be news to most GWWC pledgers that actually the main point is some indirect thing like building habits rather than getting money to effective orgs"

- If we assume the income distribution of GWWC pledgers resembles the US distribution of household incomes, and then cut implied donations in half to account for the individual vs household discrepancy (obviously false but maybe still illustrative assumptions), the roughly 10,000 GWWC pledgers are giving $60M/year

- That's a lot of money. It matters a lot in absolute terms

- This isn't a giving game situation where a few college fellowships give $100/semester - there the main effect is indirect, not via the $100 moved. But at $60M/year, even relatively modest % increases matter a lot

- The 10% GWWC pledge functions as a norm that probably has some influence over total amounts given even though folks are free to give as much (or as little, really) as they want.

- The simplicity/memetic fitness of flat 10% is nice, but this has to be weighed against the actual object level consideration of how much money gets moved per year

- Plausibly more folks would take a revised version of the pledge because it's less costly at lower incomes. There's a reason that the US income tax system is progressive!

- Possibly we can have a best of both worlds situation, where the GWWC pledge remains a strong norm but there's a different, mostly compatible system for those so inclined.

- Tentatively it seems like a progressive system makes sense purely from a money moved POV. This screenshot is from the default settings, which is approximately the curve I endorse
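To make the $60M/year figure in the points above a bit more concrete, here is a minimal sketch that just unpacks the arithmetic. The only inputs are numbers already quoted (roughly 10,000 pledgers, a 10% pledge, $60M/year, and the halving for individual vs household income); the function names and the 5% uplift are my own illustrative assumptions, not anything taken from the tool.

```python
# Back-of-envelope unpacking of the ~$60M/year estimate quoted above.
# All inputs come from the post; nothing here is new data.
PLEDGERS = 10_000                 # "roughly 10,000 GWWC pledgers"
TOTAL_PER_YEAR = 60_000_000       # "$60M/year"
PLEDGE_RATE = 0.10                # the flat 10% pledge
HOUSEHOLD_TO_INDIVIDUAL = 0.5     # "cut implied donations in half"


def implied_figures(total=TOTAL_PER_YEAR, pledgers=PLEDGERS,
                    rate=PLEDGE_RATE, factor=HOUSEHOLD_TO_INDIVIDUAL):
    """Return what the estimate implies per pledger and per household."""
    per_pledger = total / pledgers                  # average donation per pledger
    individual_income = per_pledger / rate          # income that a 10% pledge implies
    household_income = individual_income / factor   # undo the halving
    return per_pledger, individual_income, household_income


def extra_from_uplift(uplift, total=TOTAL_PER_YEAR):
    """Extra dollars per year from a fractional increase in total giving."""
    return total * uplift


if __name__ == "__main__":
    per_pledger, individual, household = implied_figures()
    print(f"average donation per pledger: ${per_pledger:,.0f}")
    print(f"implied individual income:    ${individual:,.0f}")
    print(f"implied mean household income: ${household:,.0f}")
    # 5% is a hypothetical uplift chosen only to illustrate "modest % increases".
    print(f"extra from a 5% uplift:       ${extra_from_uplift(0.05):,.0f}")
```

In other words, the estimate amounts to about $6,000 per pledger per year, and a hypothetical 5% increase would be about $3M per year.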

Some design choices to make explicit

- The site is supposed to be about after-tax income, which is what matters insofar as you're open to a non-flat curve

- The site accounts for exactly two variables for simplicity and convenience: (1) metro area to determine cost of living and (2) after-tax income. Plenty of other things, such as family size, would plausibly change the function for the better

- The default curve when you load the page is roughly what I personally endorse but I haven't put a ton of thought into it

- That is:

- You can modify the parameters in a couple different ways
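For readers who want a feel for what a non-flat curve over the two variables above could look like, here is a minimal sketch. It is not the site's actual formula: the bracket thresholds, the marginal rates, and the `cost_of_living_index` parameter are all hypothetical values I picked for illustration.

```python
# A sketch of a progressive pledge curve with a cost-of-living adjustment.
# HYPOTHETICAL parameters: (lower bound of bracket, marginal rate), applied to
# after-tax income expressed in "average cost of living" dollars.
BRACKETS = [(0, 0.00), (30_000, 0.10), (100_000, 0.25)]


def suggested_donation(after_tax_income, cost_of_living_index=1.0):
    """Suggested annual donation in nominal dollars.

    cost_of_living_index is an assumed multiplier (1.0 = average metro,
    1.25 = a metro that is 25% more expensive).
    """
    adjusted = after_tax_income / cost_of_living_index
    owed = 0.0
    for i, (lower, rate) in enumerate(BRACKETS):
        upper = BRACKETS[i + 1][0] if i + 1 < len(BRACKETS) else float("inf")
        if adjusted > lower:
            # Only the slice of income inside this bracket is charged its rate.
            owed += (min(adjusted, upper) - lower) * rate
    # Convert back from adjusted dollars to nominal dollars.
    return owed * cost_of_living_index


def flat_donation(after_tax_income, rate=0.10):
    """The flat 10% pledge, for comparison."""
    return after_tax_income * rate


if __name__ == "__main__":
    for income in (30_000, 60_000, 200_000):
        print(income, round(suggested_donation(income)), round(flat_donation(income)))
```

With these made-up brackets, someone with $30k after tax is asked for nothing, while someone with $200k is asked for more than the flat 10%, and a higher cost-of-living index lowers the ask at a given nominal income. Whether a curve like this raises or lowers total money moved depends entirely on the income distribution of pledgers and on how many people take it.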

Why adjust for cost of living?

- The actual reason I chose to do this is that I think there are altruistic benefits to living in major US cities, most notably SF/Bay Area and DC, but also others like NYC and Boston, and so don't want to disincentivize living in those places

- I realize this is not incredibly principled, maybe this is the wrong call

The site one more time

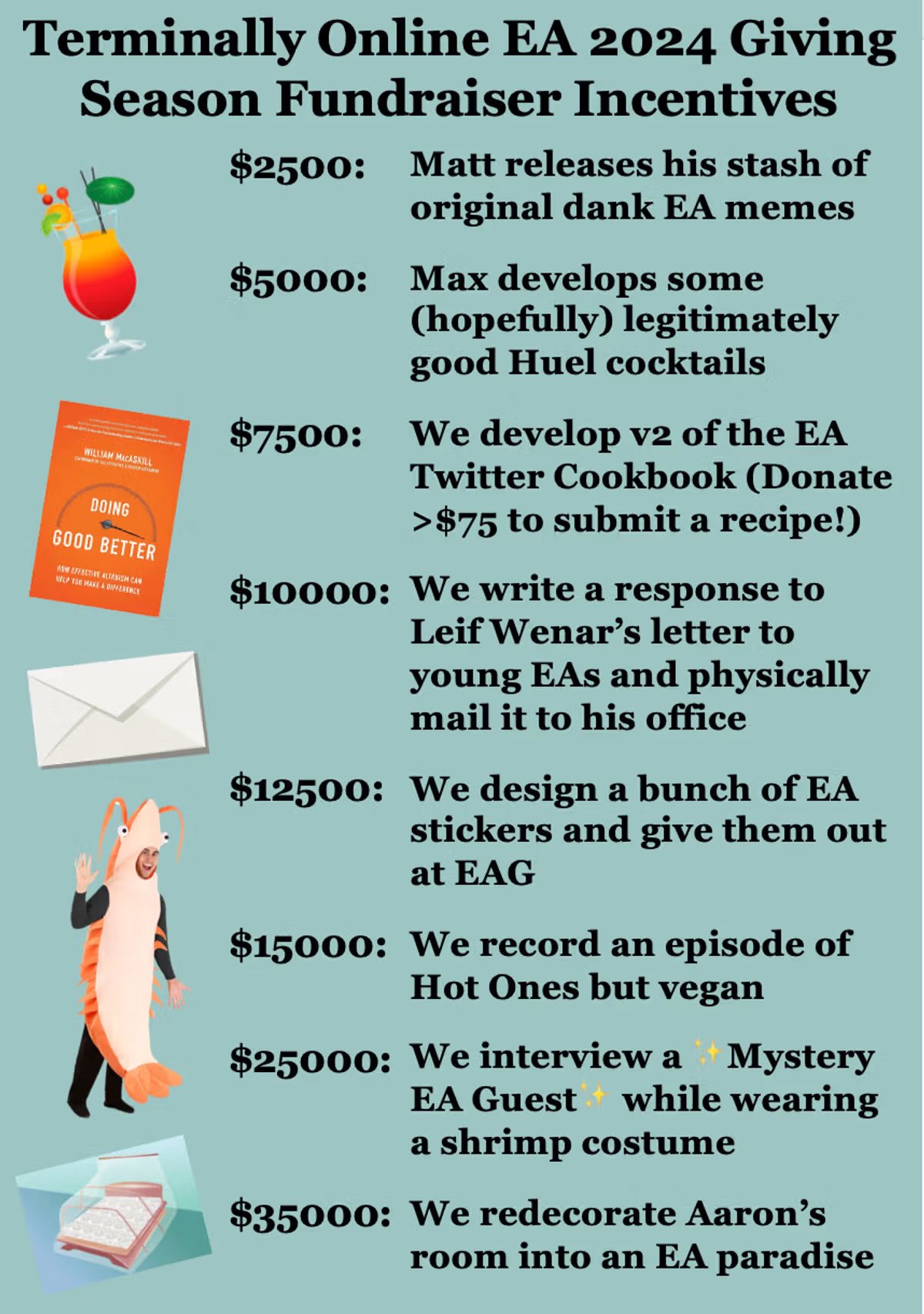

New interview with Will MacAskill by @MHR🔸

Almost a year after the 2024 holiday season Twitter fundraiser, we managed to score a very exciting "Mystery EA Guest" to interview: Will MacAskill himself.

- @MHR🔸 was the very talented interviewer and shrimptastic fashion icon

- Thanks to @AbsurdlyMax🔹 for help behind the scenes

- And of course huge thanks to Will for agreeing to do this

Summary, highlights, and transcript below video!

Summary and Highlights

(summary AI-generated)

Effective Altruism has changed significantly since its inception. With the arrival of "mega donors" and major institutional changes, does individual effective giving still matter in 2025?

Will MacAskill—co-founder of the Centre for Effective Altruism and Giving What We Can, and currently a senior research fellow at the Forethought Institute—says the answer is a resounding yes. In fact, he argues that despite the resources currently available, individuals are "systematically not ambitious enough" relative to the scale of the problems the world faces.

In this special interview for the 2025 EA Twitter Fundraiser, Will joins host Matt to discuss the evolution of the movement’s focus. They discuss why animal welfare—specifically the fight against factory farming—has risen in prominence relative to global health, and why Will believes those working on it are "on the right side of history."

Will also shares updates from his current work at the Forethought Institute, where he is moving beyond standard AI safety concerns to focus on "model character"—the idea that as AI agents become more autonomous, their embedded ethics and personality will determine how our economy and society function.

Matt and Will discuss:

- Why "mega donors" haven't made individual giving obsolete

- The "founder effect" that initially prioritized global health over animal welfare

- The funniest moment from the What We Owe the Future media tour (involving Tyler Cowen)

- Why Forethought is focused on the moral status of digital beings

- Will’s call for the community to embrace an "impartial, altruistic scout mindset" on social media

Highlights

On earning to give:

Then, should people be more ambitious [about earning to give]? I genuinely think yes. I think people systematically aren't ambitious enough, so the answer is almost always yes. Again, the ambition you have should match the scale of the problems that we’re facing—and the scale of those problems is very large indeed.

On current, most exciting work at Forethought:

Maybe the thing I’ll emphasize most at the moment... is the idea of "model character" or AI personality.

There is now OpenAI, which has this "Model Spec," and then Claude’s "soul" was leaked. Basically, these companies are having to make decisions about how these AIs behave. That affects hundreds of millions of people every day already. And then in the future, it will determine how our most active economic agents in the world act.

There’s a real spectrum that you can imagine between AI that is as maximally like a tool as possible—just an obedient servant—versus an AI that actually has its own sense of ethics and own conscience. Once you’ve got that, then take every single hairy ethical issue or important circumstance the AIs will face; we really should be thinking in advance about how we want them to behave. I’d be keen for a lot more thought to be going into that.

On what he'd tell EA Twitter:

The main thing is just that I have been loving what I’ve been seeing as a resurgence in EA activity online—on Substack and Twitter in particular. If you think I haven't been following along with popcorn on the Andy Masley data center water usage story, you would be wrong.

Honestly, I just love seeing more people really coming out to bat for EA ideas, demonstrating EA ideas on all sorts of topics...

Transcript

MATT

Hello everyone and welcome. I’m Matt from the 2025 EA Twitter Fundraiser. I’m here with Will MacAskill himself, who likely needs no introduction for folks watching this. But in case anyone does, Will is a senior research fellow at the Forethought Institute and former associate professor at Oxford University. He co-founded Giving What We Can and the Centre for Effective Altruism. He is the author of numerous books and articles, including Doing Good Better, What We Owe the Future, and my personal favorite, An Introduction to Utilitarianism. Thank you for joining us, Will.

WILL

Thanks for the interview.

MATT

We’re doing this in service of promoting effective giving. To start us off, we were curious to ask: What do you think the role of effective giving should be in the EA community in 2025? And maybe in particular, should people be more ambitious with trying to earn to give?

WILL

Yes. I think of effective giving as a core part of Effective Altruism. It was the start of it all, and it continues to be important. It continues to be important even if you see "mega donors" coming on the scene, because there are often a lot of things that individuals can fund that the mega donors can’t. And even taking the mega donors into account, there are still enormous problems in the world that they are not going to be able to solve.

Then, should people be more ambitious? I genuinely think yes. I think people systematically aren't ambitious enough, so the answer is almost always yes. Again, the ambition you have should match the scale of the problems that we’re facing—and the scale of those problems is very large indeed.

MATT

Absolutely. One of those big problems—and the one that we’re raising money for in this year’s online fundraiser—is specifically farmed animal welfare. That’s something that has been really popular with highly engaged EAs recently. If you look at, for example, the donation election on the Forum, you’ll see there’s really a lot of enthusiasm around that. However, it is something that has historically played a smaller role in public EA messaging. Do you think that’s a good balance, or is that something that you would like to see change?

WILL

I definitely think that there was never some grand plan of reducing the focus on animal welfare. I and some others really got in via global health and development, so I think that really set the stage—there is a bit of a founder effect there. And then I think it's also just the case that maybe there is naturally wider reach because more people are concerned [about human-centric causes].

But yes, I’d be down for that to change. The problem of factory farming is just so stark and so unnecessary. The arguments for [addressing] it are so good.

I remember when I became vegetarian twenty years ago—I’m getting old now—I got a lot of pushback. It was quite a weird thing to do. People thought it was "holier than thou." It was like you were morally grandstanding or judging others at the time. That has changed. Now, regarding the people who are vegetarian or vegan who really care about animal welfare, it’s just clear that these are the good people. They are on the right side of history.

The amount of good that you can do is just absolutely enormous; the sheer amount you can affect is huge. So, yes, I’d love to see that rise in prominence.

MATT

Very cool. When I was doing some research for this, I was looking back at old versions of the 80,000 Hours and Giving What We Can websites on the Wayback Machine. I was surprised that the first-ever archive of the 80,000 Hours page has farmed animal welfare as one of the issues on there. So, you mentioned the founder effect, but I was pleasantly surprised to see just how early this was getting into EA messaging.

WILL

We all cared. I was vegetarian. Little known fact: I and others at 80,000 Hours helped to set up Animal Charity Evaluators.

MATT

Yeah, I remember reading that at some point.

WILL

That was because we felt it was a gap at that point—that was in 2012 or something.

MATT

Well, one of the ways that we are trying to promote the fundraiser is leaning into EA memes. That’s certainly something that’s always been fun about the online EA community. Do you have a favorite EA meme that you’ve run across?

WILL

I feel I have many favorites. One is the April Fools' posts naming Giving What We Can "Naming What We Can."

I also feel it’s a shame that Dustin Moskovitz is no longer memeing quite as hard as he used to. But something he did... there was a big debate on Reddit where people were complaining about Wytham Abbey. There was a debate in the comments between who bought it—who owned it. One person was saying it was Will MacAskill, and the other was saying it was Owen Cotton-Barratt—both of which are incorrect. And then someone came in and said, "What if it’s neither of those people, but some mystery third person?"

That commenter, like many threads down into this Reddit post, was Dustin Moskovitz himself, who actually bought it. So, I salute him. Maybe not a meme per se, but part of the same culture.

MATT

Maybe if we lean in, we can get him to participate a little bit more again.

WILL

Maybe.

MATT

You did quite the media blitz for What We Owe the Future. Was there a funniest or most unexpected story you have from that whirlwind tour?

WILL

The thing that leaps out the most is when I was being interviewed by Tyler Cowen. Podcasts normally have a bit of a chat and get a bit of a warm-up. Tyler just immediately goes in: "Okay, so what is something you like that’s ineffective?"

I just couldn't think of an answer. I went completely blank. So this gets edited from the podcast, but there were minutes of me just being like... [silence]. It kind of threw me off for the whole episode, really. It’s not that I only like maximally efficient things, to be clear!

MATT

I sometimes listen to Conversations with Tyler, and yes, he sometimes has that energy where you really feel like he should let the guest take a breather.

WILL

That was right at the start.

MATT

Well, Will, I know you’ve been doing some really exciting work at Forethought these days. Do you want to tell us a little bit about the work that’s going on there and maybe what you’re most excited about?

WILL

Sure. Forethought in general is trying to do research that is as useful as possible in helping the world as a whole navigate the transition to extremely advanced AI—superintelligence. Distinctively, we tend to not focus on the risk of loss of control to AI systems themselves. Our work often hits on that or helps on that, and it’s a big risk—we’re glad that other people are working on it—but I personally don't think that makes up the vast majority of the issues we face.

We are often looking at neglected issues other than that. That can include:

- The risk of intense concentration of power among humans.

- The idea that we should be taking seriously the moral status of digital beings themselves and what kind of rights they have.

- A number of other issues, like how AI will interact with society’s ability to reason well and make good decisions.

Maybe the thing I’ll emphasize most at the moment, because I’m very excited about it from an impact perspective but I think people don’t appreciate it quite enough, is the idea of "model character" or AI personality.

There is now OpenAI, which has this "Model Spec," and then Claude’s "soul" was leaked. Basically, these companies are having to make decisions about how these AIs behave. That affects hundreds of millions of people every day already. And then in the future, it will determine how our most active economic agents in the world act.

There’s a real spectrum that you can imagine between AI that is as maximally like a tool as possible—just an obedient servant—versus an AI that actually has its own sense of ethics and own conscience. Once you’ve got that, then take every single hairy ethical issue or important circumstance the AIs will face; we really should be thinking in advance about how we want them to behave. I’d be keen for a lot more thought to be going into that.

MATT

Absolutely. That seems incredibly important. All right, well to close, is there anything else you want to tell the people of EA Twitter?

WILL

The main thing is just that I have been loving what I’ve been seeing as a resurgence in EA activity online—on Substack and Twitter in particular. If you think I haven't been following along with popcorn on the Andy Masley data center water usage story, you would be wrong.

Honestly, I just love seeing more people really coming out to bat for EA ideas, demonstrating EA ideas on all sorts of topics—whether that’s data center usage or the utter monstrosity of current shrimp factory farming. So I would just love for more people to feel empowered to be shouting about the giving they’re doing, the causes that they’re working on, and demonstrating the impartial, altruistic scout mindset that I think is EA at its best.

MATT

Awesome. Well, you heard it here first, folks: post more.

WILL

And thanks for the fundraiser; thanks to everybody who has contributed. It’s very, very cool to see this.

MATT

Yes, we definitely are really grateful for everyone who has donated so far and who will continue to donate, because the animal welfare fund is awesome and supporting effective animal interventions is a really, really fantastic cause.

All right. Well, thank you, Will, for joining us. It’s been a pleasure speaking with you.

WILL

It's been a joy. Cool. Merry Christmas, Happy Holidays.

MATT

Merry Christmas.

I strongly endorse this and think that there are some common norms that stand in the way of actually-productive AI assistance.

- People dislike the aesthetics of AI writing

- AI reduces the signal value of text purportedly written by a human (i.e. because it might have been trivial to create and the "author" needn't even endorse each claim in the writing)

Both of these are reasonable but we could really use some sort of social technology for saying "yes, this was AI-assisted, you can tell, I'm not trying to trick anyone, but also I stand by all the claims made in the text as though I had done the token generation myself."

I think I'm more bullish on digital storage than you.

Most alignment work today exists as digital bits: arXiv papers, lab notes, GitHub repos, model checkpoints. Digital storage is surprisingly fragile without continuous power and maintenance.

SSDs store bits as charges in floating-gate cells; when unpowered, charge leaks, and consumer SSDs may start losing data after a few years. Hard drives retain magnetic data longer, but their mechanical parts degrade; after decades of disuse they often need clean-room work to spin up safely. Data centres depend on air-conditioning, fire suppression, and regular maintenance.

In a global collapse where grids are down for years, almost all unmaintained digital archives eventually succumb to bit-rot, corrosion, fire, or physical decay.

This is true, but the fundamental value proposition of digital storage is extremely cheap and easy replication (~free on both fronts for a few GB of PDFs). So it's true that a single physical device won't last very long by default, but the data itself simply needs to hop from device to device indefinitely, possibly and ideally existing on many devices at any one time ("simply" in quotes because the crux is how hard/common this will be).

By analogy you might think that human aging/death poses a major problem for the continued existence of the information necessary to recreate a human (i.e. DNA + a few other sources of info) but in fact the ability for people to replicate makes destruction of that knowledge much, much harder.

Of course a key consideration is how bad the setback is. At the limit you're right because our ability to create the tools for info replication won't exist, but at least naively it might take quite a bit to destroy all of society's ability to manufacture flash drives and computers for long enough for all info to die out via the mechanisms you describe.

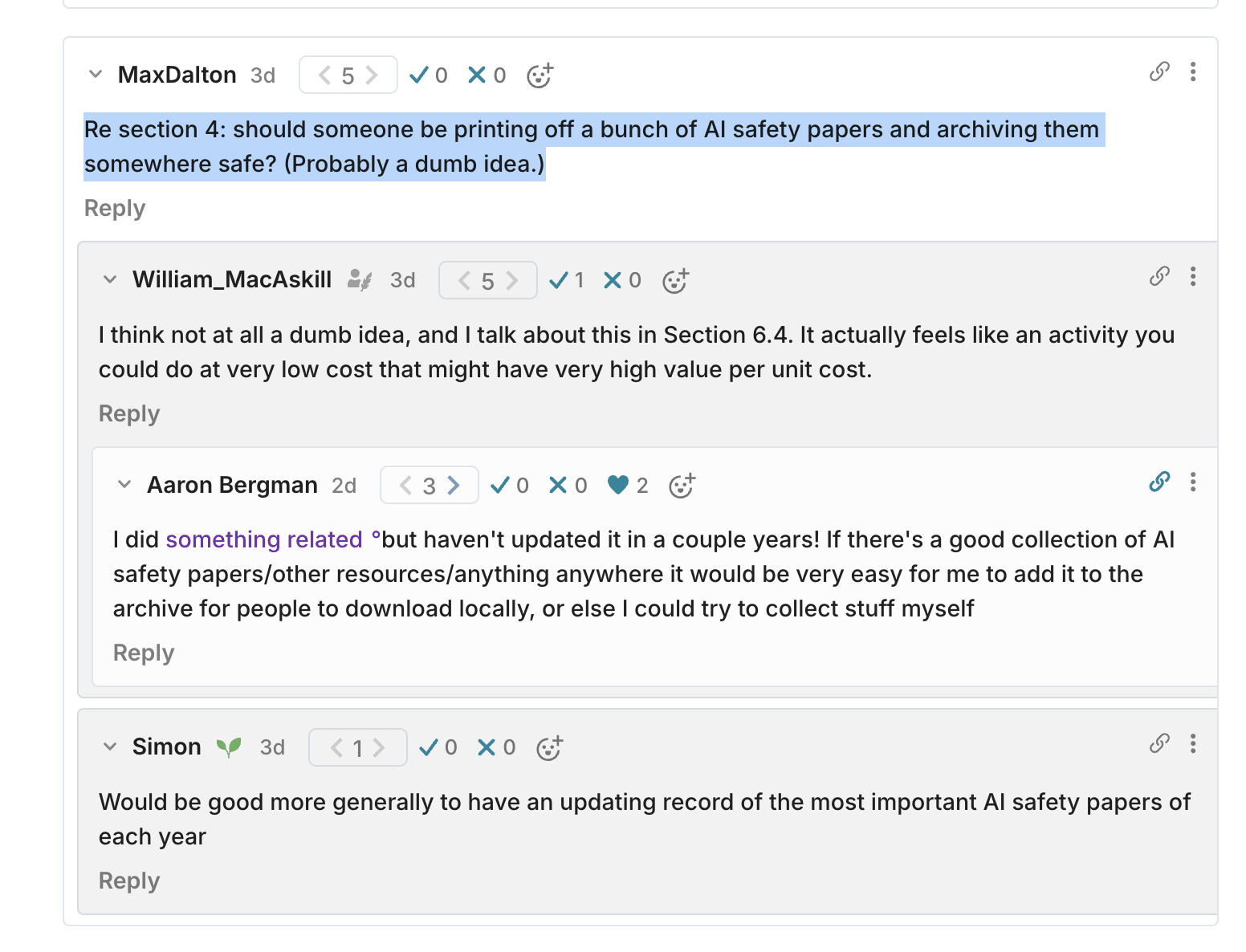

Wanted to bring this comment thread out to ask if there's a good list of AI safety papers/blog posts/urls anywhere for this?

(I think local digital storage in many locations probably makes more sense than paper but also why not both)

Lightcone and Alex Bores (so far)

Edit: to say a tiny bit more, LessWrong seems instrumentally good and important and rationality is a positive influence on EA. Lightcone doesn't have the vibes of "best charity" to me, but when I imagine my ideal funding distribution it is the immediate example of "most underfunded org" that comes to mind. Obviously related to Coefficient not supporting rationality community building anymore. Remember, we are donating on the margin, and approximately the margin created by Coefficient Giving!

Edit: And Animal Welfare Fund

Super cool - a bit hectic and I substantively disagree with one of the "fallacies" the fallacy evaluator flagged on this post but I'll definitely be using this going forward

Thanks for the highlight! Yeah I would love better infrastructure for trying to really figure out what the best uses of money are. I don't think it has to be as formal/quantitative as GiveWell. To quote myself from a recent comment (bolding added)

At some level, implicitly ranking charities [eg by donating to one and not another] is kind of an insane thing for an individual to do - not in an anti-EA way (you can do way better than vibes/guessing randomly) but in a "there must be better mechanisms/institutions for outsourcing donation advice than GiveWell and ACE and ad hoc posts/tweets/etc and it's really hard and high stakes" way.

Like what I would love is a lineup of 10-100 very highly engaged and informed people (could create the list simply by number of endorsements/requests) who talk about their strategy and values in a couple pages and then I just defer to them (does this exist?)

I did something related but haven't updated it in a couple years! If there's a good collection of AI safety papers/other resources/anything anywhere it would be very easy for me to add it to the archive for people to download locally, or else I could try to collect stuff myself

So cool! Comprehensive EA grants data is like the most central possible example of what I'm interested in, I don't want to promise anything but potentially interested in contributing if there's a need. I might just play around with the code and see if there's anything potentially valuable to add

Also: genuinely unsure this is worth the time/effort to fix but I'd flag that the smallest grants all look like artifacts of some sort of parsing error rather than actual grants