Jackson Wagner

Bio

Scriptwriter for RationalAnimations! Interested in lots of EA topics, but especially ideas for new institutions like prediction markets, charter cities, georgism, etc. Also a big fan of EA / rationalist fiction!

The Christians in this story who lived relatively normal lives ended up looking wiser than the ones who went all-in on the imminent-return-of-Christ idea. But of course, if Christianity had been true and Christ had in fact returned, maybe the crazy-seeming, all-in Christians would have had huge amounts of impact.

Here is my attempt at thinking up other historical examples of transformative change that went the other way:

- Muhammad's early followers must have been a bit uncertain whether this guy was really the Final Prophet. Do you quit your day job in Mecca so that you can flee to Medina with a bunch of your fellow cultists? In this case, it probably would've been a good idea: about seven years later you'd be helping lead an army of some 10,000 holy warriors to capture the city of Mecca. And over the next thirty years, you'd help convert/conquer all the civilizations of the Middle East and North Africa.

- Less dramatic versions of the above story could probably be told about joining many fast-growing charismatic social movements (like joining a political movement or revolution). Or, more relevantly to AI, about joining a fast-growing tech startup whose technology might change the world (like early Microsoft, Google, Facebook, etc).

- You're a physics professor in 1940s America. One day, a team of G-men knocks on your door and asks you to join a top-secret project to design a seemingly impossible superweapon capable of ending the Nazi regime and stopping the war. Do you quit your day job and move to New Mexico?...

- You're a "cypherpunk" hanging out on online forums in the mid-2000s. Despite the demoralizing collapse of the dot-com boom and the failure of many of the most promising projects, some of your forum buddies are still excited about the possibilities of creating an "anonymous, distributed electronic cash system", such as the proposal called B-money. Do you quit your day job to work on weird libertarian math problems?...

People who bet everything on transformative change will always look silly in retrospect if the change never comes. But the thing about transformative change is that it does sometimes occur.

(Also, fortunately our world today is quite wealthy -- AI safety researchers are pretty smart folks and will probably be able to earn enough to pay for their own retirement, even if all their predictions come up empty.)

"Articulate a stronger defense of why they're good?"

I'm no expert on animal-welfare stuff, but just thinking out loud, here are some benefits that I could imagine coming from this technology (not trying to weigh them up versus potential harms or prioritize which seem largest or anything like that):

- You imagine negative PR consequences once we realize that animals might mostly be thinking about basic stuff like food and sex, but I picture that being only a small second-order consequence -- the primary effect, I suspect, is that people's empathy for animals might be greatly increased by realizing they think about stuff and communicate at all. The idea that animals (especially, like, whales) have sophisticated thoughts and communicate, and the intuition that they probably have valuable internal subjective experience, might both seem "obvious" to animal-welfare activists, but I think for most normal people globally, they either sorta believe that animals have feelings (but don't think about this very much) or else explicitly believe that animals lack consciousness / can't think like humans because they don't have language / don't have full human souls (if the person is religious) / etc. Hearing animals talk would, I expect, wake people up a little bit more to the idea that intelligence & consciousness exist on a spectrum and animals have some valuable experience (even if less so than humans).

- In particular, I'm picturing that the journalists covering such experiments are likely to be some combination of 1. environmentalists who like animals, 2. animal rights activists who like animals, and 3. people who just think animals are cute and figure that a feel-good story portraying animals as sweet and cute will obviously do better numbers than a boring story complaining about how dumb animals are. So, with friendly media coverage, I expect the biggest news stories will be about the cutest / sweetest / most striking / saddest things that animals say, not the boring fact that they spend most of their time complaining about bodily needs just like humans do.

- Compare, for instance, "news coverage" of (and other cultural perceptions of) human children. To the extent that toddlers can talk, they are mostly just demanding things, crying, failing to understand stuff, etc. Yet we find this really cute and endearing (e.g., I am the father of a toddler myself, and it's often very fun). I bet animal communication would similarly be perceived positively, even if (like babies) animals are really dumb compared to adult humans.

- Talk-to-animals tech also seems potentially philosophically important in some longtermist, "sentient-futures" style ways:

  - What's good versus bad for an animal? Right now we literally just have to guess, based on eyeballing whether the creature seems happy. And if you are less of a total-hedonic-utilitarian, more of a preference utilitarian, the situation gets even worse. It would be nice if we could just ask animals what their problems are, what kind of things they want, etc! Even a very small amount of communication would really increase what we are able to learn about animals' preferences, and thus how well we are able to treat them in a best-case scenario.

  - Maybe we could use this tech to do scientific studies and learn valuable things about consciousness, language, subjective experience, etc, in a way that clarifies humanity's thinking about these slippery issues and helps us better avoid moral catastrophes (perhaps becoming more sympathetic to animals as a result, or getting a better understanding of when AI systems might or might not be capable of suffering).

  - Perhaps humanity has some sort of moral obligation to (someday, after we solve more pressing problems like not destroying the world or creating misaligned AI) eventually uplift creatures like whales, monkeys, octopi, etc, so they too can explore and comprehend the universe together with us. Talk-to-animals tech might be an early first step toward such future goals, might set early precedents, might help us learn about some of the philosophical / moral choices we would need to make if we embarked on a path of uplifting other species, idk.

> it also advocates for the government of California to in-house the engineering of its high-speed rail project rather than try to outsource it to private contractors

Hence my initial mention of "high state capacity"? But I think it's fair to call abundance a deregulatory movement overall, in terms of, like... some abstract notion of what proportion of economic activity would become more vs less heavily involved with government, under an idealized abundance regime.

Sorry for the confusion about "unified" -- I didn't mean to imply that individual people like Klein or Mamdani were "unified" in toeing an enforced party line!

Rather, I was speculating that maybe the reason the "deciding to win" people (moderates such as Matt Yglesias) and the "abundance" people tend to overlap more than abundance people and left-wingers do is that abundance folks and moderates tend to share (this is what I meant by "are unified by") opposition to policies like rent control and other price controls, tend to be less enthusiastic about "cost-disease-socialism" style demand subsidies since they often prefer to emphasize supply-side reforms, tend to want to deemphasize culture-war battles in favor of boosting material progress / prosperity, etc. Obviously this is just a tendency, not something universal, as people like Mamdani show.

FYI, I'm totally 100% on board with your idea that abundance is fully compatible with many progressive goals and, in fact, is itself a deeply progressive ideology! (Cf. me being a huge Georgist.) But, uh, this is the EA Forum, which is in part about describing the world truthfully, not just spinning PR for movements that I happen to admire. And I think it's an appropriate summary of a complex movement to say that abundance stuff is mostly a center-left, deregulatory, etc movement.

Imagine someone complaining -- it's so unfair to describe abundance as a "democrat" movement!! That's so off-putting for conservatives -- instead of ostracizing them, we should be trying to entice them to adopt these ideas that will be good for the American people! Like Montana and Texas passing great YIMBY laws, Idaho deploying modular nuclear reactors, etc. In lots of ways abundance is totally consistent with conservative goals of efficient government services, human liberty, a focus on economic growth, et cetera!!

That would all be very true. But it would still be fair to summarize abundance as primarily a center-left Democratic movement.

To be clear, I personally am a huge abundance bro, big-time YIMBY & Georgist, fan of the Institute for Progress, personally very frustrated by assorted government inefficiencies like those mentioned, et cetera! I'm not sure exactly what the factional alignments are between abundance in particular (which is more technocratic / deregulatory than necessarily moderate -- in theory one could have a "radical" wing of an abundance movement, and I would probably be an eager member of such a wing!) and various forces who want the Dems to moderate on cultural issues in order to win more (like the recent report "Deciding to Win"). But they do strike me as generally aligned (perhaps unified in their opposition to lefty economic proposals which often are neither moderate nor, like... correct).

A couple more "out-there" ideas for ecological interventions:

- "recording the DNA of undiscovered rainforest species" -- yup, but it probably takes more than just DNA sequences on a USB drive to de-extinct a creature in the future. For instance, probably you need to know about all kinds of epigenetic factors active in the embryo of the creature you're trying to revive. To preserve this epigenetic info, it might be easiest to simply freeze physical tissue samples (especially gametes and/or embryos) instead of doing DNA sequencing. You might also need to use the womb of a related species -- bringing back mammoths is made a LOT easier by the fact that elephants are still around! -- and this would complicate plans to bring back species that are only distantly related to anything living. I want to better map out the tech tree here, and understand what kinds of preparation done today might aid what kinds of de-extinction projects in the future.

- Normal environmentalists worry about climate change, habitat destruction, invasive species, pollution, and other prosaic, slow-rolling forms of mild damage to the natural environment. Not on their list: nuclear war, mirror bacteria, or even something as simple as AGI-supercharged economic growth that sees civilization's economic footprint doubling every few years. I think there is a lot that we could do, relatively cheaply, to preserve at least some species against such catastrophes.

- For example, seed banks exist. But you could possibly also save a lot of insects from extinction by maintaining some kind of "mostly-automated insect zoo in a bunker", a sort of "generation ship" approach as opposed to the "cryosleep" approach that seed banks can use. (Also, are even today's most hardcore seed banks hardened against mirror bacteria and other bio threats? Probably not! Nor do many of them even bother storing non-agricultural seeds for things like random rainforest flowers.)

- Right now, land conservation is one of the cheapest ways of preventing species extinctions. But in an AGI-transformed world, even if things go very well for humanity, the economy will be growing very fast, gobbling up a lot of land, and probably putting out a lot of weird new kinds of pollution. (Of course, we could ask the ASI to try and mitigate these environmental impacts, but even in a totally utopian scenario there might be very strong incentives to go fast, eg to more quickly achieve various sublime transhumanist goods and avoid astronomical waste.) On the other hand, the world will have a LOT more capital, and the cost of detailed ecological micromanagement (using sensors to gather lots of info, using AI to analyze the data, etc) will be a lot lower. So it might be worth brainstorming ahead of time what kinds of ecological interventions might make sense in such a world, where land is scarce but capital is abundant and customized micro-attention to every detail of an environment is cheap. This might include high-density zoos like those described earlier, or "let the species go extinct for now, but then reliably de-extinct them from frozen embryos later", or "all watched over by machines of loving grace"-style micromanaged forests that achieve superhumanly high levels of biodiversity in a very compact area (while minimizing wild animal suffering by both minimizing the necessary population and also micromanaging the ecology to keep most animals in the population in a high-welfare state).

- A lot of today's environmental-protection / species-extinction-avoidance programs aren't even robust to, like, a severe recession that causes funding for the program to get cut for a few years! Mainstream environmentalism is truly designed for a very predictable, low-variance future... it is not very robust to genuinely large-scale shocks.

- It's kind of fuzzy and unclear what's even important about avoiding species extinctions or preserving wild landscapes or etc, since these things don't fit neatly into a total-hedonic-utilitarian framework. (In this respect, eco-value is similar to a lot of human culture and art, or values like "knowledge" or "excellence" and so forth.) But, regardless of whether or not we can make philosophical progress clarifying exactly what's important about the natural world, maybe in a utopian future we could find crazy futuristic ways of generating lots more ecological value? (Obviously one would want to do this while avoiding creating lots of wild-animal suffering, but I think this still gives us lots of options.)

  - Obviously stuff like "bringing back mammoths" is in this category.

  - But maybe also, like, designing and creating new kinds of life? Either variants of earth life (what kinds of interesting things might dinosaurs have evolved into, if they hadn't almost all died out 65 million years ago?), or totally new kinds of life that might be able to thrive on, eg, Titan or Europa (though obviously this sort of research might carry some notable bio-risks a la mirror bacteria, thus should perhaps only be pursued from a position of civilizational existential security).

  - Creating simulated, digital life-forms and ecologies? This might be like how a culture really obsessed with cool crystals might be overjoyed to learn about mathematics and geometry, which would open up whole new realms of structure for them to explore, much as digital simulation could open up new kinds of life for us.

- There is probably a lot of exciting stuff you could do with advanced biotech / gene editing technologies, if the science advances and if humanity can overcome the strong taboo in environmentalism against taking active interventions in nature. (Even stuff like "take some seeds of plants threatened by global warming, drive them a few hours north, and plant them there where it's cooler and they'll survive better" is considered controversial by this crowd!)

  - Just like gene drives could help eradicate / suppress human scourges like malaria-carrying mosquitoes, we could also use gene drives to do tailored control of invasive species (which are something like the #2 cause of species extinctions, after #1 habitat destruction). Right now, the best way to control invasive species is often "biocontrol" (introducing natural predators of the species that's causing problems) -- biocontrol actually works much better than its terrible reputation suggests, but it's limited by the fact that there aren't always great natural predators available, it takes a lot of study and care to get it right, etc.

  - Possibly you could genetically-engineer corals to be tolerant of slightly higher temperatures, and generally use genetic tech to help species adapt more quickly to a fast-changing world.

EcoResilience Initiative is working on applying EA principles (ITN analysis, cost-effectiveness, longtermist orientation, etc) to ecological conservation. But right now it's just my wife Tandena and a couple of her friends doing research on a part-time volunteer basis, no funding or anything, lol.

Here are two recent posts of theirs describing their enthusiasm for precision fermentation technologies (already a darling of the animal-welfare wing of EA) due to its potentially transformative impact on land use if lots of people ever switch from eating meat towards eating more precision-fermentation protein. And here are some quick takes of theirs on deep ocean mining (investigating the ecological benefits of mining the seabed and thereby alleviating current economic pressures to mine in rainforest areas) and biobanking (as a cheap way of potentially enabling future de-extinction efforts, once de-extinction technology is further advanced).

There are also some bigger, more established EA groups that focus mostly on climate interventions (Giving Green, Founders Pledge, etc); most of these have at least done some preliminary explorations into biodiversity, although there is not really much published work yet. Hannah Ritchie at Our World in Data has compiled some interesting information about various ecological problems, and her book "Not the End of the World" is great -- maybe the best starting place for someone who wants to get involved to learn more?

There is a very substantial "abundance" movement that (per folks like Matt Yglesias and Ezra Klein) is seeking to create a reformed, more pro-growth, technocratic, high-state-capacity Democratic Party that's also more moderate and more capable of winning US elections. Coefficient Giving has a big $120 million fund devoted to various abundance-related causes, including zoning reform for accelerating housing construction, a variety of things related to building more clean energy infrastructure, targeted deregulations aimed at accelerating scientific / biomedical progress, etc. https://coefficientgiving.org/research/announcing-our-new-120m-abundance-and-growth-fund/

You can get more of a sense of what the abundance movement is going for by reading "The Argument", an online magazine recently funded by Coefficient Giving and featuring Kelsey Piper, a widely-respected EA-aligned journalist: https://www.theargumentmag.com/

I think EA the social movement (ie, people on the Forum, etc) tries to keep EA somewhat non-political to avoid being dragged into the morass of everything becoming heated political discourse all the time. But EA the funding ecosystem is significantly more political, and also does a lot of specific lobbying in connection to AI governance, animal welfare, international aid, etc.

Yup, I think there's a lot of very valuable research / brainstorming / planning that EA (and humanity overall) hasn't yet done to better map out the space of ways that we could create moral value far greater than anything we've seen in history so far.

- In EA / rationalist circles, discussion of "flourishing futures" often focuses on "transhumanist goods", like:

  - extreme human longevity / immortality through super-advanced medical science

  - intelligence amplification

  - reducing suffering, and perhaps creating new kinds of extremely powerful positive emotions

  - you also hear a bit about AI welfare, and the idea that maybe we could create AI minds experiencing new forms of valuable subjective experience

But there are perhaps a lot of other directions worth exploring:

- various sorts of spiritual attainment that might be possible with advanced technology / digital minds / etc

- things that are totally out of left field to us because we can't yet imagine them, like how the value of consciousness would be a bolt from the blue to a planet that only had plants & lower life forms.

- instantiating various values, like beauty or cultural sophistication or ecological richness, to extreme degrees

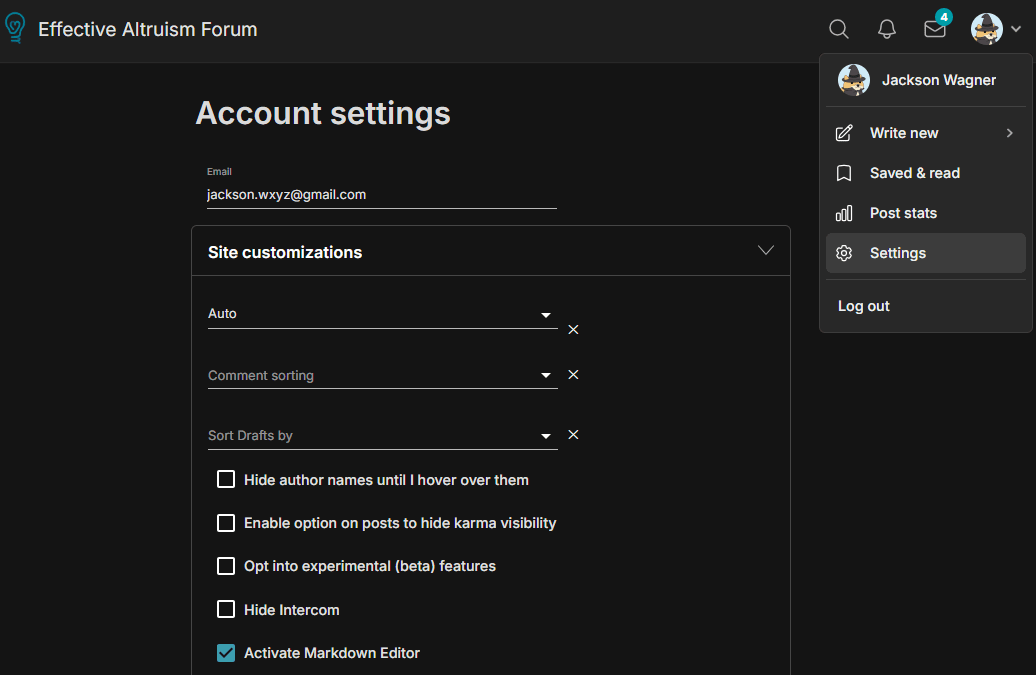

On a more practical note, the Forum does support markdown headings if you enable the "activate markdown editor" feature in your EA Forum profile settings! This would turn all your `##` headings into a much more beautiful, readable structure (and it would create a little outline in a sidebar, for people to jump around to different sections).

[content warning: buncha rambly thoughts that might not make much sense]

Certainly -- see my bit about how my preferred solution would be to run a volunteer army even if that takes ruinously high taxes on the rest of the population. (The United States, to its credit, has indeed run an all-volunteer army ever since the end of the Vietnam War in 1973! But having an immense population makes this relatively easy; smaller countries face sharper trade-offs and tend to orient more towards conscription. See for instance the fact that Russia's army is less reliant on conscripts than Ukraine's.)

But also, almost every policy in society has unequal benefits, perhaps helping a small group at the expense of more diffuse harm to a larger group, or vice versa. For example, greater investment in bike lanes and public transit (at the expense of simply building more roads) helps cyclists and public-transit users at the expense of car-drivers. Using taxes to fund a public-school system is basically ripping off people who don't have children and subsidizing those who do; et cetera. At some point, instead of trying to make sure that every policy comes out even for everyone involved, you have to just kind of throw up your hands, hope that different policies pointing in different directions even out in the end, and rely on some sense of individual willingness to sacrifice for the common good to smooth over the asymmetries.

One could similarly say it's unfair that residents of Lviv (who are very far from the Ukrainian front line, and would almost certainly remain part of a Ukrainian "rump state" even in the case of dramatic eventual Russian victory) are being asked to make large sacrifices for the defense of faraway eastern Ukraine. (And why are residents of southeastern Poland, so near to Lviv, asked to sacrifice so much less than their neighbors?!)

Perhaps there is some galaxy-brained solution to problems like this, where all of Europe (or all of Ukraine's allies, globally) could optimally tax themselves some fractional percent in accordance with how near or far they are to Ukraine itself? Or one could be even more idealistic and imagine a unified coalition of allies where everyone decides centrally which wars to support and then contributes resources evenly to that end (such that the armies in eastern Ukraine would have a proportionate number of Frenchmen, Americans, etc). But in practice nobody has figured out how a scheme like that would possibly work, why countries would be motivated to adopt it, how it could be credibly fair and neutral and immune to various abuses, etc.

Another weakness to the idea of democratic feedback is simply that it isn't very powerful -- every couple of years you get essentially a binary choice between the leading two coalitions, so you can do a reasonably good job expressing your opinion on whatever is considered the #1 issue of the day, but it's very hard to express nuanced views on multiple issues through the use of just one vote. So, in this sense, democracy isn't really a guarantee of representation across many issues, so much as a safety valve that will hopefully fix problems one-by-one as they rise to the position of #1 most-egregiously-wrong-thing in society.

I think that today's "liberal democracy" is pretty far from some kind of ethically ideal world with optimally representative governance (or optimally pursuing-the-welfare-of-the-population governance, which might be a totally different system)! Whatever is the ideal system of optimal governance, it would probably seem pretty alien to us, perhaps extremely convoluted in parts (like the complicated mechanisms for Venice selecting the Doge) and overly-financialized in certain ways (insofar as it might rely on weird market-like mechanisms to process information).

But conscription doesn't stand out to me as being especially worse than other policy issues that are similarly unfair in this regard (maybe it's higher-stakes than those other issues, but it's similar in kind) -- it's a little unfair and inelegant and kind of a blunt instrument, just like all of our policies are in this busted world where nations are merely operating with "the worst form of government, except for all the others that have been tried".

To answer with a sequence of increasingly "systemic" ideas (naturally the following will be tinged by my own political beliefs about what's tractable or desirable):

There are lots of object-level lobbying groups that have strong EA endorsement. This includes organizations advocating for better pandemic preparedness (Guarding Against Pandemics), better climate policy (like CATF and others recommended by Giving Green), or beneficial policies in third-world countries like salt iodization or lead paint elimination.

Some EAs are also sympathetic to the "progress studies" movement and to the modern neoliberal movement connected to the Progressive Policy Institute and the Niskanen Center (which are both tax-deductible nonprofit think-tanks). This often includes enthusiasm for denser ("yimby") housing construction, reforming how science funding and academia work in order to speed up scientific progress (as advocated by New Science), increasing high-skill immigration, and having good monetary policy. All of those cause areas appear on Open Philanthropy's list of "U.S. Policy Focus Areas".

Naturally, there are many ways to advocate for the above causes -- some are more object-level (like fighting to get an individual city to improve its zoning policy), while others are more systemic (like exploring the feasibility of "Georgism", a totally different way of valuing and taxing land which might do a lot to promote efficient land use and encourage fairer, faster economic development).

One big point of hesitancy is that, while some EAs have a general affinity for these cause areas, in many areas I've never heard of any particular standout charities being recommended as super-effective in the EA sense... for example, some EAs might feel that we should do monetary policy via "nominal GDP targeting" rather than inflation-rate targeting, but I've never heard anyone recommend that I donate to some specific NGDP-targeting advocacy organization.

I wish there were more places like Center for Election Science, living purely on the meta level and trying to experiment with different ways of organizing people and designing democratic institutions to produce better outcomes. Personally, I'm excited about Charter Cities Institute and the potential for new cities to experiment with new policies and institutions, ideally putting competitive pressure on existing countries to better serve their citizens. As far as I know, there aren't any big organizations devoted to advocating for adopting prediction markets in more places, or adopting quadratic public goods funding, but I think those are some of the most promising areas for really big systemic change.