Stavros

Bio

Impact markets profile: https://app.impactmarkets.io/profile/cldpox45v0002opoyklp7q09q


I'm a sober alcoholic, have been for quite a few years now (enough that I've lost count).

I guess everyone's path is different, but what helped me was reading the book This Naked Mind. It's not a greaaat book, has very mixed reviews and plenty of justified criticism. But it got me to stop drinking, and convinced me that if I stayed sober long enough it would last.

And it did.

What worked for me was drawing a small fish-hook on my arm every morning, a visible reminder that the addiction was there, that the addiction was separate from me, and that 'my' desire to drink was the addiction's desire.

Around 2 weeks sober it seemed to get noticeably easier, and the longer I stayed sober the easier it became to stay sober.

I don't miss alcohol, and I don't feel like I'm missing out.

Like I said, everyone's situation and path is different. But one thing you can rely on is that the longer you're sober, the easier it will get to stay sober.

Replying to myself with an additional contribution I just read that says everything much better than I managed:

In physics terms, the world economy, as well as all of the individual economies within it, are dissipative structures. As such, growth followed by collapse is a usual pattern. At the same time, new versions of dissipative structures can be expected to form, some of which may be better adapted to changing conditions. Thus, approaches for economic growth that seem impossible today may be possible over a longer timeframe.

For example, if climate change opens up access to more coal supplies in very cold areas, the Maximum Power Principle would suggest that some economy will eventually access such deposits. Thus, while we seem to be reaching an end now, over the long-term, self-organizing systems can be expected to find ways to utilize (“dissipate”) any energy supply that can be inexpensively accessed, considering both complexity and direct fuel use.

I would add that while new structures can be expected to form, because they are adapted for different conditions and exploiting different energy gradients, we should not expect them to have the same features/levels of complexity.

Not sure if this is the best place to ask this question, but I'm seeking to explore EA's connection to Taiwan's Digital Democracy movement - it doesn't seem like there is one right now, which seems ineffective.

I'm specifically, personally, interested in talking to anyone directly involved with the movement in Taiwan who can help me develop a deeper understanding, and who can provide feedback on ideas I'm playing with about digital trust systems.

Ah, I want to acknowledge that the definition of civilization is quite broad without getting too in the weeds on this point.

I heard the economist Steve Keen describe civilization as 'harnessing energy to elevate us above the base level of the planet' (I may be paraphrasing somewhat).

I think this is a pretty good definition, because it also makes it clear why civilization is inherently unstable, and thus fragile: it is, by definition, out of equilibrium with the natural environment.

And any ecologist will know what happens next in this situation - overshoot[1].

So all civilization is inherently fragile, and the larger it grows the more it depletes the carrying capacity of the environment.

Which brings us to industrial/post-industrial civilization:

I think the best metaphor for industrial civilization is a rocket - it's an incredibly powerful channeled explosion that has the potential to take you to space, but also has the potential to explode, and has a finite quantity of fuel.

The 'fuel', in the case of industrial civilization, is not simply material resources such as oil and coal, but also environmental resources - the complex ecologies that support life on the planet and even the stable, temperate climate that gave us the opportunity to settle down and form civilization.

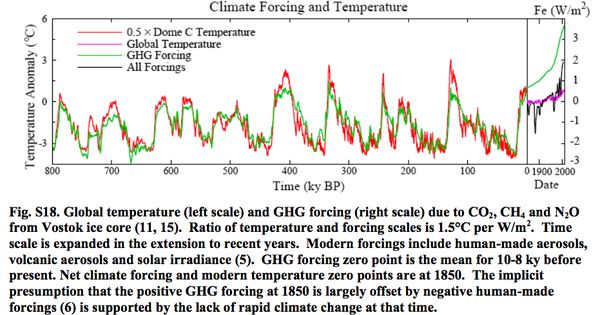

Civilization can only form during these tiny little peaks, the interglacial periods. Anthropogenic climate change is far beyond the bounds of this cycle and there is no guarantee that it will return to a cadence capable of supporting future civilizations.

Further, our current level of development was the result of a complex chain of geopolitical events that resulted in a prolonged period of global stability and prosperity.

While it may be possible for future civilizations to achieve some level of technological development, it is incredibly unlikely they will ever have the resources and conditions that enabled us to reach the 'digital' tech level.

Consider that even now, under far better conditions than we can expect future civilizations to have, it is still more likely that we'll destroy ourselves than flourish.

That potential for self-destruction is unabated in future civilizations, whereas the potential for flourishing is heavily if not completely depleted.

[1] https://biologydictionary.net/carrying-capacity/

- You start by assuming that a civilizational collapse would be irrecoverable, and just about as bad as human extinction.

- Given that assumption, you see a lot of bad stuff that could wipe out civilization without necessarily killing everybody, like a global food supply disaster, a pandemic, a war, climate change, energy production problems, etc.

- Since all these potential sources of collapse seem just as bad as human extinction, you think it's worth putting effort into all of them.

- EA often prioritizes protecting human/sentient life directly, but doesn't focus that hard on things like evaluating risks to the global energy supply except insofar as those risks stem from problems that might also just kill a lot of people, like a pandemic or AI run amok.

Overall, it seems like you think there are a lot more sources of fragility than EA takes into account, lots of ways civilization could collapse, and EA's only looking at a few.

Is that roughly where you're coming from?

Yeah that's a good summary of my position.

Just a note on your communication style: at least on the EA Forum, I think it would help if you replaced more of your "deckchairs on the Titanic" and "forest for the trees" metaphors with specific examples, even hypothetical ones.

Thanks, will keep this in mind. It's been an active (and still ongoing) effort to adjust my style toward EA norms.

How much time do you think we have?

95% certainty <100 years, 80% certainty <50 years, 50% certainty <30 years...

But the question is 'how much time do we have until X?' and for that...

So it sounds like you are an X-risk guy, which is a very mainstream EA position. Although I'm not sure if you're a "last 1%-er," as in weighing the complete loss of human life much more heavily than losing say 99% of human life.

This is where I diverge heavily, and where the metacrisis framework comes into play: I am a civilization x-risk guy, not a homo sapiens x-risk guy.

My timeline is specifically 'how much time do we have until irreversible, permanent loss of civilizational capacity[1]'.

Whether humans survive is irrelevant to me[2].

What seems clear to me is that we are faced with a choice between two paradigm shifts: one in which we grow beyond our current limitations as a species, and one in which we are forever confined to them.

Technology is the deciding factor, to quote Homer Simpson - 'the cause of, and solution to, all of life's problems' :p

And achieving our current technological capacity is not repeatable. The idea that future humans can rebuild is incredibly naive yet rarely questioned in EA[3].

If you accept that proposition, even if just for the sake of argument, then my emphasis on the hinge of history should make sense. This is our one chance to build a better future; if we fail, then none of the futures we can expect are ones any of us would want to live in.

And this is where the insight of the metacrisis is relevant: interventions focused on the survival/flourishing of civilization itself are, from my[4] point of view, the only ones with positive EV.

What to do that we're not already doing:

Increased focus/prioritization of:

- Governance (both working with existing decision making structures, and enabling the creation and growth of new ones)

- Social empowerment/'uplift' (thinking specifically of things like Taiwanese Digital Democracy)

- Economic Innovation - the fact that we are in a situation where we are reliant on the philanthropy of billionaires is conclusive evidence that the current system is well overdue for an overhaul.

- Resilience (really broad category)

- The former three points are critical for this category as well: inequality, social unrest and incompetent governance are huge sources of fragility.

- Domestic energy infrastructure

- Domestic sustainable food production

- Global health and longevity

- And by global, I mean everywhere with rapidly aging demographics.

- Pandemic management/prevention

I would say all of these areas are either underprioritized or, as in the case of global health, often missing the forest for the trees (literally - saving trees without doing anything about the existential threat to the forest itself).

[1] Most notably loss of energy and capital intensive advanced technologies dependent on highly specialized workers, global supply chains and geopolitical stability (i.e. no one dropping bombs on your infrastructure) - e.g. computing.

[2] I know how this sounds, but to me the opposite (human survival is an ultimate goal) sounds like paperclip maximizing.

[3] This is a whole other argument, and I don't really want to get into it now. This is what I've been trying to write a post on for a while now. I find it personally quite frustrating as I feel the burden of evidence should be on those making the extraordinary claim - i.e. that rebuild is possible.

[4] Admittedly rather fringe.

Mmmm, I'll try my best to deconfuse.

Clearly, there are a bunch of emergencies.

- Some of these emergencies are orders of magnitude more important or urgent than others.

- My first claim is that scale and context matter.

- e.g. an intervention in cause area X may be obvious and effective when evaluated in isolation, but in context the lives saved from X are then lost to cause Y instead.

- My second claim is that many of these emergencies are not discrete problems.

- Rather, they are complex interdependent systems in a state of extreme imbalance, stress or degradation - e.g. climate, ecology, demography, economy.

- My third claim is that, yes, governance is a more-or-less universal bottleneck in our ability to engage with these emergencies.

- But, my fourth claim is that this doesn't make all of the above a governance problem. Solutions to governance do not solve these emergencies, they simply improve our ability to engage with these emergencies.

- If you really really want somewhere specific to point the finger, it's homo sapiens. There's a great quote: "We Have Stone Age Emotions, Medieval Institutions and God-Like Technology" - E. O. Wilson

Practically, my position, informed by the metacrisis, is that:

We have less time to make a difference than is commonly believed in EA circles, and the difference we have to make has to be systemic and paradigm changing - saving lives doesn't matter if the life support system itself is failing.

Thus interventions which aren't directly or indirectly targeting the life support system itself can seem incredibly effective while actually being a textbook case of rearranging the deckchairs on the Titanic.

P.S. Thanks for your time and patience in engaging with me on this topic and encouraging me to clarify in this manner.

I'm glad to see this being talked about; I'm glad to see non-western cultures exploring what they can make of EA.

For me, Filial Piety is the other 'F word' - I have seen it used to normalize a level of abusiveness towards children that, even in the context of typing a post from the comfort of my home, is making me furious.

And yet, I recognise it seems to be part of the recipe that empowers collectivism in Asian cultures, that has protected them from the Randian individualist race to the bottom that Western cultures are engaged in.

No good answers, no conclusion, no clear message; reality is messy, don't romanticise ideals.