Wei Dai

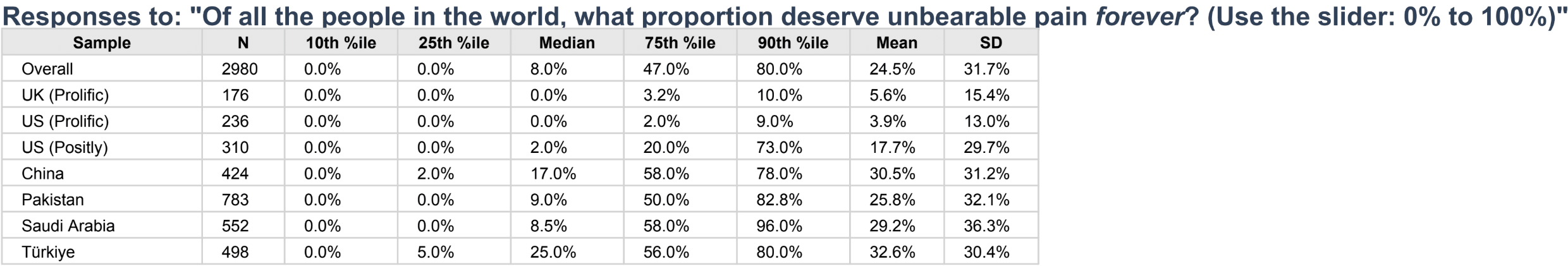

If I'm interpreting this correctly, 25% of people in China think that at least 58% of all people in the world deserve eternal unbearable pain (with similar results in 3 other countries). This is so crazy that I think there must be another explanation, e.g., results got mixed up, or a lot of people weren't paying attention and just answered randomly.

- Cultivating people's ability and motivation to reflect on their values.

- Structuring collective deliberations so that better arguments and ideas win out over time.

Problems with this:

- People disagree strongly about which arguments and ideas are better than others.

- The vast majority of people seem to lack both the hardware (raw cognitive capacity) and software (a reasonable philosophical tradition) to reflect on their values in a positive way.

- AI seems likely to make things worse or not sufficiently better, for these reasons.

Solving these problems seems to require (at least) some kind of breakthrough in metaphilosophy, such that we have a solution for what is actually the right way to reflect/deliberate about philosophical topics like values, and the solution is so convincing that everyone ends up agreeing with it. But I would love to know if you (or anyone else) have other ideas for solving or getting around these problems in order to build a viable Viatopia.

Another problem is that morality/values are in large part a status game, but talking about status is generally bad for one's status (who wants to admit that they're espousing some morality/values to win a status game?), so this important aspect of morality/values is generally ignored, for structural reasons that may be unfixable regardless of other technical and philosophical advances.

@Wei Dai, I understand that your plan A is an AI pause (+ human intelligence enhancement). And I agree with you that this is the best course of action. Nonetheless, I’m interested in what you see as plan B: If we don’t get an AI pause, is there any version of ‘hand off these problems to AIs’/ ‘let ‘er rip’ that you feel optimistic about? or which you at least think will result in lower p(catastrophe) than other versions? If you have $1B to spend on AI labour during crunch time, what do you get the AIs to work on?

The answer would depend a lot on what the alignment/capabilities profile of the AI is. But one recent update I've made is that humans are really terrible at strategy (in addition to philosophy), so if there were no way to pause AI, it would help a lot to get good strategic advice from AI during crunch time, which implies that maybe AI strategic competence > AI philosophical competence in importance (subject to all the usual disclaimers like dual use and how to trust or verify its answers). My latest LW post has a bit more about this.

(By "strategy" here I especially mean "grand strategy" or strategy at the highest levels, which seems more likely to be neglected versus "operational strategy" or strategy involved in accomplishing concrete tasks, which AI companies are likely to prioritize by default.)

So for example if we had an AI that's highly competent at answering strategic questions, we could ask it "What questions should I be asking you, or what else should I be doing with my $1B?" (but this may have to be modified based on things like how much can we trust its answers of various kinds, how good is it at understanding my values/constraints/philosophies, etc.).

If we do manage to get good and trustworthy AI advice this way, another problem would be how to get key decision makers (including the public) to see and trust such answers, as they wouldn't necessarily think to ask such questions themselves, nor would they trust the AI's answers by default. But that's another thing that a strategically competent AI could help with.

BTW your comment made me realize that it's plausible that AI could accelerate strategic thinking and philosophical progress much more relative to science and technology, because the latter could become bottlenecked on feedback from reality (e.g., waiting for experimental results) whereas the former seemingly wouldn't be. I'm not sure what implications this has, but want to write it down somewhere.

Moreover, above we were comparing AIs to the best human philosophers / to a well-organised long reflection, but the actual humans calling the shots are far below that bar. For instance, I’d say that today’s Claude has better philosophical reasoning and better starting values than the US president, or Elon Musk, or the general public. All in all, best to hand off philosophical thinking to AIs.

One thought I have here is that AIs could give very different answers to different people. Do we have any idea what kind of answers Grok is (or will be) giving to Elon Musk when it comes to philosophy?

I wish you had titled the post something like "The option value argument for preventing extinction doesn't work". Your current title ("The option value argument doesn't work when it's most needed") has some unfortunate side effects:

- People being more likely to misinterpret or misremember your post as claiming that trying to increase option value doesn't work in general.

- Reducing extinction risk becomes the most salient example of an idea for increasing option value.

- People using "the option value argument" to mean the option value argument for preventing extinction, even when this can't be inferred from context. (See example.)

- It becomes harder to use the phrase "the option value argument" contextually, to refer to whichever option value argument is currently or was previously under discussion when that argument isn't about extinction risk, because the phrase has become a term of art for "the option value argument for preventing extinction".

I think it may not be too late to change the title and stop or reverse these effects.

The argument tree (arguments, counterarguments, counter-counterarguments, and so on) is exponentially sized, and we don't know how deep or wide we need to expand it before some problem can be solved. We do know that different humans looking at the same partial tree (i.e., philosophers who have read the same literature on some problem) can have very different judgments as to what the correct conclusion is. There's also a huge amount of intuition/judgment involved in choosing which part of the tree to focus on or expand further. With AIs helping to expand the tree for us, there are potential advantages like you mentioned, but also potential disadvantages, like AIs not having good intuition/judgment about which lines of argument to pursue, or the argument tree (or AI-generated philosophical literature) becoming too large for any human to read and think about in a relevant time frame. Many will be very tempted to just let AIs answer the questions / draw the final conclusions for us, especially if AIs also accelerate technological progress, creating many urgent philosophical problems related to how to use the new technologies safely and beneficially. Or, if humans try to draw the conclusions themselves, they can easily get them wrong despite AI help with expanding the argument tree.

So I think undergoing the AI transition without solving metaphilosophy or making AIs autonomously competent at philosophy (good at reaching correct conclusions by themselves) is enormously risky, even if we have corrigible AIs helping us.
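To make "exponentially sized" concrete, here is a minimal sketch that counts the nodes in an argument tree under a simplifying, hypothetical assumption: every argument attracts the same number of responses (`branching`), and the tree is expanded uniformly to a fixed `depth`. Real argument trees are irregular, so this only illustrates the growth rate.

```python
def argument_tree_size(branching: int, depth: int) -> int:
    """Count nodes in a complete argument tree.

    Hypothetical model: each argument draws exactly `branching` responses
    (counterarguments, counter-counterarguments, ...), expanded `depth`
    levels below the root claim.
    """
    if branching == 1:
        # A single chain of responses: root plus one node per level.
        return depth + 1
    # Geometric series: 1 + b + b^2 + ... + b^depth
    return (branching ** (depth + 1) - 1) // (branching - 1)


# With just 3 responses per argument, each extra level roughly triples the reading load.
for depth in (2, 5, 10):
    print(depth, argument_tree_size(3, depth))
```

Under this toy model, going from depth 5 to depth 10 takes the tree from a few hundred nodes to tens of thousands, which is the sense in which an AI-expanded tree could quickly outgrow what any human can read.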

Do you want to talk about why you're relatively optimistic? I've tried to explain my own concerns/pessimism at https://www.lesswrong.com/posts/EByDsY9S3EDhhfFzC/some-thoughts-on-metaphilosophy and https://forum.effectivealtruism.org/posts/axSfJXriBWEixsHGR/ai-doing-philosophy-ai-generating-hands.

Thanks! I hope this means you'll spend some more time on this type of work, and/or tell other philosophers about this argument. It seems apparent that we need more philosophers to work on philosophical problems related to AI x-safety (many of which do not seem to be legible to most non-philosophers). Not necessarily by attacking them directly (this is very hard and probably not the best use of time, as we previously discussed) but instead by making them more legible to AI researchers, decision makers, and the general public.

In my view, there isn't much desire for work like this from people in the field, and they probably wouldn't use it to inform deployment unless the author also puts in a lot of effort to meet the right people, convince them to spend the time to take it seriously, etc.

Any thoughts on Legible vs. Illegible AI Safety Problems, which is in part a response to this?

Thanks for clarifying. Still, this suggests that the Chinese participants were on average much less conscientious about answering truthfully/carefully than the US/UK ones, which implies that even the filtered samples may still be relatively noisier.
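A minimal sketch of why a filter that works equally well in every country can still leave a noisier sample where carelessness was more common to begin with. It assumes a hypothetical filter (e.g. an attention check) that removes each careless respondent with a fixed `catch_rate` and never removes careful ones; the rates used below are illustrative, not taken from the survey.

```python
def residual_careless_share(careless_rate: float, catch_rate: float) -> float:
    """Fraction of the filtered sample that is still careless.

    Hypothetical model: a share `careless_rate` of respondents answer
    carelessly; the filter removes each of them with probability
    `catch_rate` and keeps every careful respondent.
    """
    kept_careless = careless_rate * (1 - catch_rate)
    kept_careful = 1 - careless_rate
    return kept_careless / (kept_careless + kept_careful)


# Illustrative rates only: the same filter applied to two populations.
low_noise = residual_careless_share(0.10, 0.8)
high_noise = residual_careless_share(0.40, 0.8)
print(f"{low_noise:.3f} {high_noise:.3f}")
```

With these made-up numbers, about 2% of the filtered low-carelessness sample is still careless versus about 12% of the high-carelessness one, even though the filter catches 80% of careless respondents in both.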

Perplexity w/ GPT-5.2 Thinking when I asked "Are there standard methods for dealing with this in surveying/statistics?", among other ideas (sorry I don't know how good the answer actually is):