This is my contribution to the December blogging carnival on “blind spots”. It's also on my blog; apologies if some of the formatting hasn't copied across.

Summary: People frequently compartmentalize their beliefs, and avoid addressing the implications between them. Ordinarily, this is perhaps innocuous, but when both ideas are highly morally important, their interaction is in turn important – many standard arguments on both sides of moral issues like the permissibility of abortion are significantly undermined or otherwise affected by EA considerations, especially moral uncertainty.

A long time ago, Will wrote an article about how a key part of rationality was taking ideas seriously: fully exploring ideas, seeing all their consequences, and then acting upon them. This is something most of us do not do! I for one certainly have trouble.

Similarly, I think people selectively apply EA principles. People are very willing to apply them in some cases, but when those principles would cut at a core part of the person’s identity – like requiring them to dress appropriately so they seem less weird – people are much less willing to take those EA ideas to their logical conclusion.

Consider your personal views. I’ve certainly changed some of my opinions as a result of thinking about EA ideas. For example, my opinion of bednet distribution is now much higher than it once was. And I’ve learned a lot about how to think about some technical issues, like regression to the mean. Yet I realized that I had rarely done a full 180 – and I think this is true of many people:

- Many think EA ideas argue for more foreign aid – but did anyone come to this conclusion who had previously been passionately anti-aid?

- Many think EA ideas argue for vegetarianism – but did anyone come to this conclusion who had previously been passionately carnivorous?

- Many think EA ideas argue against domestic causes – but did anyone come to this conclusion who had previously been a passionate nationalist?

Yet this is quite worrying. Given the power and scope of many EA ideas, it seems that they should lead people to change their minds on issues where they had previously been very certain, and indeed emotionally involved. That they have not suggests we have been compartmentalizing.

Obviously we don’t need to apply EA principles to everything – we can probably continue to brush our teeth without need for much reflection. But we probably should apply them to issues that are seen as very important: given the importance of the issues, any implications of EA ideas would probably be important implications.

Moral Uncertainty

In his PhD thesis, Will MacAskill argues that we should treat normative uncertainty in much the same way as ordinary positive uncertainty; we should assign credences (probabilities) to each theory, and then try to maximise the expected morality of our actions. He calls this idea ‘maximise expected choice-worthiness’, and if you’re into philosophy, I recommend reading the paper. As such, when deciding how to act we should give greater weight to the theories we consider more likely to be true, and also give more weight to theories that consider the issue to be of greater importance.

This is important because it means that a novel view does not have to be totally persuasive to demand our observance. Consider, for example, vegetarianism. Maybe you think there’s only a 10% chance that animal welfare is morally significant – you’re pretty sure they’re tasty for a reason. Yet if the consequences of eating meat are very bad in those 10% of cases (murder or torture, if the animal rights activists are correct), and the advantages are not very great in the other 90% (tasty, some nutritional advantages), we should not eat meat regardless. Taking into account the size of the issue at stake as well as probability of its being correct means paying more respect to ‘minority’ theories.
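The expected choice-worthiness reasoning above can be sketched in a few lines of Python. This is a minimal illustration, not MacAskill's own formalism: only the 10%/90% credence split comes from the example, while the choice-worthiness values are hypothetical placeholders standing in for "very bad" and "not very great", on an arbitrary scale.

```python
# Minimal sketch of "maximise expected choice-worthiness" (MEC).
# The choice-worthiness values are HYPOTHETICAL placeholders; only the
# 10% / 90% credence split comes from the vegetarianism example.

def expected_choiceworthiness(credences, choiceworthiness):
    """Credence-weighted sum of one option's choice-worthiness across theories."""
    return sum(credences[theory] * choiceworthiness[theory] for theory in credences)


credences = {"animals_matter": 0.10, "animals_dont_matter": 0.90}

options = {
    # If animal welfare matters, eating meat is very bad; if not, it is mildly good.
    "eat_meat": {"animals_matter": -100.0, "animals_dont_matter": 1.0},
    # Abstaining is treated as the neutral baseline under both theories.
    "vegetarian": {"animals_matter": 0.0, "animals_dont_matter": 0.0},
}

scores = {name: expected_choiceworthiness(credences, cw) for name, cw in options.items()}
best_option = max(scores, key=scores.get)

for name, score in scores.items():
    print(f"{name}: {score:.2f}")
print("MEC recommends:", best_option)
```

With these placeholders, eating meat would only win if its benefit in the 90% case exceeded about 11 units (100 × 0.1 / 0.9), which is the sense in which a low-credence theory can still dominate when the stakes it assigns are large.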

And this is more of an issue for EAs than for most people. Effective Altruism involves a group of novel moral premisses, like cosmopolitanism, the moral imperative for cost-effectiveness and the importance of the far future. Each of these implies that our decisions are in some way very important, so even if we assign them only a small credence, their plausibility implies radical revisions to our actions.

One issue that Will touches on in his thesis is whether fetuses morally count. In the same way that we have moral uncertainty as to whether animals, or people in the far future, count, so too we have moral uncertainty as to whether unborn children are morally significant. Yes, many people are confident they know the correct answer – but there are many of these on each side of the issue. Given the degree of disagreement on the issue, among philosophers, politicians and the general public, it seems like the perfect example of an issue where moral uncertainty should be taken into account – indeed Will uses it as a canonical example.

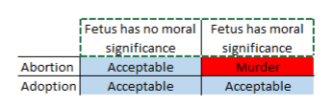

Consider the case of a pregnant woman, Sarah, wondering whether it is morally permissible to abort her child.[1] The alternative course of action she is considering is putting the child up for adoption. In accordance with the level of social and philosophical debate on the issue, she is uncertain as to whether aborting the fetus is morally permissible. If it’s morally permissible, it’s merely permissible – it’s not obligatory. She follows the example from Normative Uncertainty and constructs the following table:

In the best case scenario, abortion has nothing to recommend it, as adoption is also permissible. In the worst case, abortion is actually impermissible, whereas adoption is permissible. As such, adoption dominates abortion.

However, Sarah might not consider this representation adequate. In particular, she thinks that now is not the best time to have a child, and would prefer to avoid it.[2] She has made plans which are inconsistent with being pregnant, and prefers not to give birth at the current time. So she amends the table to take into account these preferences.

Now adoption no longer strictly dominates abortion, because she prefers abortion to adoption in the scenario where it is morally permissible. So she considers her credences. She finds the pro-choice arguments slightly more persuasive than the pro-life ones, and assigns a 70% credence to abortion being morally permissible and a 30% credence to its being morally impermissible.

Looking at the table with these numbers in mind, intuitively it seems that again it’s not worth the risk of abortion: a 70% chance of saving oneself inconvenience and temporary discomfort is not sufficient to justify a 30% chance of committing murder. But Sarah’s unsatisfied with this unscientific comparison: it doesn’t seem to have much of a theoretical basis, and she distrusts appeals to intuitions in cases like this. What is more, Sarah is something of a utilitarian; she doesn’t really believe in something being impermissible.

Fortunately, there’s a standard tool for making inter-personal welfare comparisons: QALYs. We can convert the previous table into QALYs, with the moral uncertainty now being expressed as uncertainty as to whether saving fetuses generates QALYs. If it does, then it generates a lot: supposing she’s at the end of her first trimester, if she doesn’t abort the baby it has a 98% chance of surviving to birth, at which point its life expectancy in the US is 78.7 years, for 0.98 × 78.7 = 77.126 expected QALYs. This calculation assigns no QALYs to the fetus’s 6 months of existence between now and birth. If fetuses are not worthy of ethical consideration, then it accounts for 0 QALYs.
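In the scenario where the fetus counts, its expected QALYs are just the survival probability times the life expectancy at birth. A quick check in Python, using the two figures quoted above and the same simplification that each year of life is one full QALY (the constant names are mine):

```python
# Expected QALYs for the fetus in the scenario where fetuses are morally significant.
# Inputs are the figures quoted in the text; each life-year counts as one full QALY
# and the ~6 months before birth are assigned zero, as in the text.
P_SURVIVE_TO_BIRTH = 0.98        # end of first trimester
LIFE_EXPECTANCY_AT_BIRTH = 78.7  # years, US

fetus_qalys_if_significant = P_SURVIVE_TO_BIRTH * LIFE_EXPECTANCY_AT_BIRTH
fetus_qalys_if_not_significant = 0.0

print(f"{fetus_qalys_if_significant:.3f}")
```

This gives 77.126, which is the figure consistent with the -23.138 expected value in the abortion calculation (30% × -77.126).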

We also need to assign QALYs to Sarah. For an upper bound, being pregnant is probably not much worse than having both your legs amputated without medication, which costs 0.494 QALYs per year, so let’s conservatively use that figure. She has an expected 6 months of pregnancy remaining, so we divide by 2 to get 0.247 QALYs. Women’s Health Magazine gives the odds of maternal death during childbirth as 0.03% for 2013; we’ll round up to 0.05% to take into account the risk of non-fatal injury. Women at 25 have a remaining life expectancy of around 58 years, so that’s 0.05% × 58 = 0.029 QALYs. In total that gives us an estimate of 0.247 + 0.029 = 0.276 QALYs. If the baby doesn’t survive to birth, however, some of these costs will not be incurred, so the true figure is probably slightly lower than this. All in all, 0.276 QALYs seems like a reasonably conservative figure.
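Here is the pregnancy-cost arithmetic written out so each input can be varied independently. The values are the text's. The constant names are illustrative.

```python
# Upper-bound QALY cost to Sarah of carrying to term, using the text's inputs.
ANNUAL_PREGNANCY_WEIGHT = 0.494  # QALYs/year, double-leg-amputation proxy (upper bound)
PREGNANCY_YEARS_REMAINING = 0.5  # 6 months left
P_DEATH_OR_INJURY = 0.0005       # 0.03% maternal mortality, rounded up to 0.05%
REMAINING_LIFE_YEARS = 58        # remaining life expectancy of a 25-year-old woman

direct_cost = ANNUAL_PREGNANCY_WEIGHT * PREGNANCY_YEARS_REMAINING  # about 0.247
risk_cost = P_DEATH_OR_INJURY * REMAINING_LIFE_YEARS               # about 0.029
total_cost = direct_cost + risk_cost                               # about 0.276

print(f"direct={direct_cost:.3f} risk={risk_cost:.3f} total={total_cost:.3f}")
```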

Obviously you could refine these numbers a lot (for example, years of old age are likely to be at lower quality of life, there are some medical risks to the mother from aborting a fetus, etc.) but they’re plausibly in the right ballpark. They would also change if we used inherent temporal discounting, but probably we shouldn’t.

We can then take into account her moral uncertainty directly, and calculate the expected QALYs of each action:

- If she aborts the fetus, the expected QALYs are 70% × 0 + 30% × (-77.126) = -23.138

- If she carries the baby to term and puts it up for adoption, the expected QALYs are 70% × (-0.276) + 30% × (-0.276) = -0.276
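The two expected values can be reproduced with a small helper. This sketch uses the full 0.276 QALY cost from the pregnancy-cost estimate. Substituting the direct-pregnancy figure of 0.247 changes the adoption value only slightly and leaves the conclusion intact. Like the text, it ignores the small medical risks of abortion itself.

```python
# Expected QALYs of each option under moral uncertainty about the fetus.
# Credence and QALY inputs are the text's; PREGNANCY_COST uses the 0.276 total.
CREDENCE_FETUS_SIGNIFICANT = 0.30
FETUS_QALYS = 0.98 * 78.7  # survival to birth x US life expectancy
PREGNANCY_COST = 0.276     # QALYs lost by Sarah if she carries to term


def expected_qalys(credence, value_if_significant, value_if_not):
    """Credence-weighted average over the two moral hypotheses."""
    return credence * value_if_significant + (1 - credence) * value_if_not


# Abortion: loses the fetus's QALYs only if the fetus counts; no cost to Sarah modelled.
abort = expected_qalys(CREDENCE_FETUS_SIGNIFICANT, -FETUS_QALYS, 0.0)
# Adoption: Sarah bears the pregnancy cost whichever hypothesis is true.
adopt = expected_qalys(CREDENCE_FETUS_SIGNIFICANT, -PREGNANCY_COST, -PREGNANCY_COST)

print(f"abort: {abort:.3f}  adopt: {adopt:.3f}")
```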

This again suggests that the moral thing to do is not to abort the baby. Indeed, the life expectancy at birth is so long that it quite easily dominates the calculation: Sarah would have to be extremely confident in rejecting the value of the fetus to justify aborting it. So, mindful of overconfidence bias, she decides to carry the child to term.
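"Extremely confident" can be made precise. Abortion beats adoption in expected QALYs only when credence × fetus QALYs is less than the pregnancy cost. This gives a break-even credence. The sketch below assumes the 0.276 QALY cost estimate.

```python
# Break-even credence: abortion beats adoption in expected QALYs only if
#   credence * FETUS_QALYS < PREGNANCY_COST,
# i.e. credence < PREGNANCY_COST / FETUS_QALYS.
FETUS_QALYS = 0.98 * 78.7
PREGNANCY_COST = 0.276


def break_even_credence(pregnancy_cost, fetus_qalys):
    """Credence in fetal moral significance at which the two options tie."""
    return pregnancy_cost / fetus_qalys


threshold = break_even_credence(PREGNANCY_COST, FETUS_QALYS)
print(f"abortion is QALY-positive only if credence < {threshold:.2%}")
```

With these inputs the threshold is about 0.36%. In other words, Sarah would need to be more than 99.6% confident that the fetus lacks moral significance.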

Indeed, we can show just how confident one would have to be that fetuses lack moral significance to justify aborting one. Here is a sensitivity table, showing credence in the moral significance of fetuses on the y-axis, and the direct QALY cost of pregnancy on the x-axis, for a wide range of possible values. The direct QALY cost of pregnancy is obviously bounded above by its limited duration. As is immediately apparent, one has to be very confident that fetuses lack moral significance, and pregnancy has to be very bad, before aborting a fetus becomes even slightly QALY-positive. For moderate values, it is extremely QALY-negative.
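A sensitivity table of this kind can be regenerated with a short script. The grid values below are illustrative choices and not necessarily the ones in the original table. Each cell shows the expected QALYs of aborting minus those of carrying to term, so positive numbers favour abortion.

```python
# Sensitivity grid: net expected QALYs of abortion relative to carrying to term.
# Positive cells mean abortion is QALY-positive. Grid values are illustrative.
FETUS_QALYS = 0.98 * 78.7

credences = [0.001, 0.005, 0.01, 0.05, 0.10, 0.30]  # credence fetuses matter (rows)
costs = [0.05, 0.10, 0.25, 0.50]                    # direct QALY cost of pregnancy (columns)


def net_qalys_of_abortion(credence, cost, fetus_qalys=FETUS_QALYS):
    """Abortion avoids `cost` but loses `fetus_qalys` with probability `credence`."""
    return cost - credence * fetus_qalys


table = [[net_qalys_of_abortion(p, c) for c in costs] for p in credences]

print("credence\\cost" + "".join(f"{c:>9.2f}" for c in costs))
for p, row in zip(credences, table):
    print(f"{p:>13.3f}" + "".join(f"{v:>9.2f}" for v in row))
```

On this grid, only cells with a credence of 0.5% or less and a fairly costly pregnancy come out positive. At a 5% credence, every cell is below -3 QALYs.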

Other EA concepts and their applications to this issue

Of course, moral uncertainty is not the only EA principle that could have bearing on the issue, and given that the theme of this blogging carnival, and this post, is the things we’re overlooking, it would be remiss not to give at least a broad overview of some of the others. Here, I don’t intend to judge how persuasive any given argument is – as we discussed above, this is a debate that has been going on without settlement for thousands of years – but merely to show the ways that common EA arguments affect the plausibility of the different arguments. This is a section about the directionality of EA concerns, not about their overall magnitudes.

Not really people

One of the most important arguments for the permissibility of abortion is that fetuses are in some important sense ‘not really people’. In many ways this argument resembles the anti-animal-rights argument that animals are also ‘not really people’. We already covered above the way that considerations of moral uncertainty undermine both these arguments, but it’s also noteworthy that in general the two views seem to be mutually supporting (or mutually undermining, if both are false). Animal-rights advocates often appeal to the idea of an ‘expanding circle’ of moral concern. I’m skeptical of such an argument, but it seems clear that the larger your circle, the more likely fetuses are to end up on the inside. The fact that, in the US at least, animal activists tend to be pro-abortion seems to be more of a historical accident than anything else. We could imagine alternative-universe political coalitions, where a “Defend the Weak; They’re morally valuable too” party faced off against an “Exploit the Weak; They just don’t count” party. In general, to the extent that EAs care about animal suffering (even insect suffering), EAs should tend to be concerned about the welfare of the unborn.

Not people yet

A slightly different common argument is that while fetuses will eventually be people, they’re not people yet. Since they’re not people right now, we don’t have to pay any attention to their rights or welfare right now. Indeed, many people make short-sighted decisions that implicitly assign very little value to the futures of people currently alive, or even to their own futures – through self-destructive drug habits, or simply failing to save for retirement. If we don’t assign much value to our own futures, it seems very sensible to disregard the futures of those not even born. And even if people who disregarded their own futures were simply negligent, we might still be concerned about things like the non-identity problem.

Yet it seems that EAs are almost uniquely unsuited to this response. EAs do tend to care explicitly about future generations. We put considerable resources into investigating how to help them, whether through addressing climate change or existential risks. And yet these people have far less of a claim to current personhood than fetuses, who at least have current physical form, even if it is diminutive. So again to the extent that EAs care about future welfare, EAs should tend to be concerned about the welfare of the unborn.

Replaceability

Another important EA idea is that of replaceability. Typically this arises in contexts of career choice, but there is a different application here. The QALY loss associated with aborted children might not be so large if the mother will go on to have another child instead. If she does, the net QALY loss is much lower than the gross QALY loss. Of course, the benefits of aborting the fetus are equivalently much smaller – if she has a child later on instead, she will have to bear the costs of pregnancy eventually anyway. This resembles concerns that maybe saving children in Africa doesn’t make much difference, because their parents adjust their subsequent fertility.

The idea draws its plausibility from the observation that, at least in the US, most families have a certain ideal number of children in mind, and basically achieve this goal. As such, missing an opportunity to have a child early simply results in having another later on.

If this were fully true, utilitarians might decide that abortion actually has no QALY impact at all – all it does is change the timing of events. On the other hand, fertility declines with age, so many couples planning to have a replacement child later may be unable to do so. Also, some people do not have ideal family size plans.

Additionally, this does not really seem to hold when the alternative is adoption; presumably a woman putting a child up for adoption does not consider it as part of her family, so her future childbearing would be unaffected. This argument might hold if raising the child yourself was the only alternative, but given that adoption services are available, it does not seem to go through.
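One way to make the replaceability point concrete is a toy model. In it, a later replacement child offsets both the lost QALYs and the avoided pregnancy cost, with the same probability. The model and the parameter name `p_replace` are simplifying assumptions made for illustration. The argument above does not commit to them.

```python
# Toy replaceability model (an ASSUMPTION for illustration, not from the text):
# with probability p_replace the mother later has a replacement child whose
# QALYs equal the aborted child's, and bears an equally costly pregnancy then.
# Both the QALY loss and the avoided cost are then offset by the same factor.
FETUS_QALYS = 0.98 * 78.7
PREGNANCY_COST = 0.276


def net_qalys_with_replacement(credence, p_replace,
                               pregnancy_cost=PREGNANCY_COST, fetus_qalys=FETUS_QALYS):
    """Net expected QALYs of abortion vs. carrying to term under the toy model."""
    gross = pregnancy_cost - credence * fetus_qalys
    return (1 - p_replace) * gross


for p_replace in (0.0, 0.5, 0.9, 1.0):
    print(p_replace, round(net_qalys_with_replacement(0.30, p_replace), 3))
```

In this model, replacement shrinks the stakes but never flips their sign. Only full replacement (`p_replace = 1`) makes abortion QALY-neutral, which matches the "no QALY impact at all" case. Per the adoption point above, `p_replace` is plausibly near zero when the real alternative is adoption.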

Autonomy

Sometimes people argue for the permissibility of abortion through autonomy arguments. “It is my body”, such an argument would go, “therefore I may do whatever I want with it.” To a certain extent this argument is addressed by pointing out that one’s bodily rights presumably do not extend to killing others, so if the anti-abortion side are correct, or even have a non-trivial probability of being correct, autonomy would be insufficient. It seems that if the autonomy argument is to work, it must be because a different argument has established the non-personhood of fetuses – in which case the autonomy argument is redundant. Yet even putting this aside, this argument is less appealing to EAs than to non-EAs, because EAs often hold a distinctly non-libertarian account of personal ethics. We believe it is actually good to help people (and avoid hurting them), and perhaps that it is bad to fail to do so. And many EAs are utilitarians, for whom helping/not-hurting is not merely praiseworthy but actually compulsory. EAs are generally not very impressed with Ayn Rand-style autonomy arguments for rejecting charity, so again EAs should tend to be unsympathetic to autonomy arguments for the permissibility of abortion.

Indeed, some EAs even think we should be legally obliged to act in good ways, whether through laws against factory farming or tax-funded foreign aid.

Deontology

An argument often used on the opposite side – that is, to oppose abortion – is that abortion is murder, and murder is simply always wrong. Whether because God commanded it or Kant derived it, we should place the utmost importance on never murdering. I’m not sure that any EA principle directly pulls against this, but nonetheless most EAs are consequentialists, who believe that all values can be compared. If aborting one child would save a million others, most EAs would probably endorse the abortion. So I think this is one case where a common EA view pulls in favor of the permissibility of abortion.

I didn’t ask for this

Another argument often used for the permissibility of abortion is that the situation is in some sense unfair. If you did not intend to become pregnant – perhaps even took precautions to avoid becoming so – but nonetheless end up pregnant, you’re in some way not responsible for becoming pregnant. And since you’re not responsible for it, you have no obligations concerning it – so you may permissibly abort the fetus.

However, once again this runs counter to a major strand of EA thought. Most of us did not ask to be born in rich countries, or to be intelligent, or hardworking. Perhaps it was simply luck. Yet being in such a position nonetheless means we have certain opportunities and obligations. Specifically, we have the opportunity to use our wealth to significantly aid those less fortunate than ourselves in the developing world, and, many EAs would agree, the obligation to do so. So EAs seem to reject the general idea that not intending a situation relieves one of the responsibilities of that situation.

Infanticide is okay too

A frequent argument against the permissibility of aborting fetuses is by analogy to infanticide. In general it is hard to produce a coherent criterion that permits the killing of babies before birth but forbids it after birth. For most people, this is a reasonably compelling objection: murdering innocent babies is clearly evil! Yet some EAs actually endorse infanticide. If you were one of those people, this particular argument would have little sway over you.

Moral Universalism

A common implicit premise in many moral discussions is that the same moral principles apply to everyone. When Sarah did her QALY calculation, she counted the baby’s QALYs as equally important to her own in the scenario where they counted at all. Similarly, both sides of the debate assume that whatever the answer is, it will apply fairly broadly. Perhaps permissibility varies by age of the fetus – maybe ending when viability hits – but the same answer will apply to rich and poor, Christian and Jew, etc.

This is something some EAs might reject. Yes, saving the baby produces many more QALYs than Sarah loses through the pregnancy, and that would be the end of the story if Sarah were simply an ordinary person. But Sarah is an EA, and so has a much higher opportunity cost for her time. Becoming pregnant will undermine her career as an investment banker, the argument would go, which in turn prevents her from donating to AMF and saving a great many lives. Because of this, Sarah is in a special position – it is permissible for her, but it would not be permissible for someone who wasn’t saving many lives a year.

I think this is a pretty repugnant attitude in general, and a particularly objectionable instance of it, but I include it here for completeness.

May we discuss this?

Now that we’ve considered these arguments, it appears that applying general EA principles to the issue tends to make abortion look less morally permissible, though there were one or two exceptions. But there is also a second-order issue that we should perhaps address – is it permissible to discuss this issue at all?

Nothing to do with you

A frequently seen argument on this issue is to claim that the speaker has no right to opine on the issue. If it doesn’t personally affect you, you cannot discuss it – especially if you’re privileged. As many (a majority?) of EAs are male, and of the women, many are not pregnant, this would dramatically curtail the ability of EAs to discuss abortion. This is not so much an argument on one side or other of the issue as an argument for silence.

Leaving aside the inherent virtues and vices of this argument, it is not very suitable for EAs, because EAs have a great many opinions on topics that don’t directly affect them:

- EAs have opinions on disease in Africa, yet most have never been to Africa, and never will

- EAs have opinions on (non-human) animal suffering, yet most are not non-human animals

- EAs have opinions on the far future, yet live in the present

Indeed, EAs seem more qualified to comment on abortion – as we all were once fetuses, and many of us will become pregnant. If taken seriously, this argument would call foul on virtually every EA activity! And this is no idle fantasy – there are certainly some people who think that Westerners cannot usefully contribute to solving African poverty.

Too controversial

We can safely say this is a somewhat controversial issue. Perhaps it is too controversial – maybe it is bad for the movement to discuss. One might accept the arguments above – that EA principles generally undermine the traditional reasons for thinking abortion is morally permissible – yet think we should not talk about it. The controversy might divide the community and undermine trust. Perhaps it might deter newcomers. I’m somewhat sympathetic to this argument – I take the virtue of silence seriously, though eventually my boyfriend persuaded me it was worth publishing.

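Note that, given moral uncertainty, the controversial nature of the issue is itself evidence against abortion’s moral permissibility: widespread disagreement suggests we should assign a non-trivial credence to the view that it is impermissible.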

However, the EA movement is no stranger to controversy.

- There is a semi-official EA position on immigration, which is about as controversial as abortion in the US at the moment, and the EA position is such an extreme position that essentially no mainstream politicians hold it.

- There is a semi-official EA position on vegetarianism, which is pretty controversial too, as it involves implying that the majority of Americans are complicit in murder every day.

Not worthy of discussion

Finally, another objection to discussing this is simply that it’s not an EA issue. There are many disagreements in the world, yet there is no need for an EA view on each. Conflict between the Lilliputians and Blefuscudians notwithstanding, there is no need for an EA perspective on which end of the egg to break first. And we should be especially wary of heated, emotional topics that offer little opportunity to pull the rope sideways. As such, even if the object-level arguments given above are correct, we should simply decline to discuss it.

However, it seems that if abortion is a moral issue, it is a very large one. In the same way that the sheer number of QALYs lost makes abortion worse than adoption even if our credence in fetuses having moral significance was very low, the large number of abortions occurring each year makes the issue as a whole of high significance. In 2011, over 1 million babies were aborted in the US. I’ve seen a wide range of global estimates, from around 10 million to over 40 million. By contrast, the WHO estimates there are fewer than 1 million malaria deaths worldwide each year. Abortion deaths also cause a greater loss of QALYs due to the young age at which they occur. On the other hand, we should discount them for our uncertainty about whether they are morally significant. And perhaps there is an even larger, closely related moral issue. The size of the issue is not the only factor in estimating the cost-effectiveness of interventions, but it is the most easily estimable. Unfortunately, I have little idea how many dollars of donations it takes to save a fetus – it seems like an excellent example of low-hanging fruit for research.
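The discount for uncertainty can be made explicit by weighting the abortion counts by a credence. This is a headcount-only sketch. It reuses Sarah's 30% purely as a hypothetical credence and ignores the QALY differences mentioned above. The counts are the estimates quoted in the paragraph.

```python
# Headcount comparison only: credence-weighted abortions vs. malaria deaths.
# Counts are the estimates quoted in the text; CREDENCE is a HYPOTHETICAL input
# (Sarah's 30%), and QALY differences between the two groups are ignored.
ABORTIONS_GLOBAL_LOW = 10_000_000
ABORTIONS_GLOBAL_HIGH = 40_000_000
MALARIA_DEATHS_UPPER = 1_000_000  # WHO: fewer than 1 million per year
CREDENCE = 0.30

weighted_low = CREDENCE * ABORTIONS_GLOBAL_LOW
weighted_high = CREDENCE * ABORTIONS_GLOBAL_HIGH

# Credence at which even the low global estimate matches the malaria upper bound.
parity_credence = MALARIA_DEATHS_UPPER / ABORTIONS_GLOBAL_LOW

print(f"credence-weighted abortions: {weighted_low:,.0f} to {weighted_high:,.0f}")
print(f"parity credence (low estimate): {parity_credence:.0%}")
```

Under these assumptions, the low global estimate already matches the upper bound on malaria deaths by headcount alone at a 10% credence.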

Conclusion

People frequently compartmentalize their beliefs, and avoid addressing the implications between them. Ordinarily, this is perhaps innocuous, but when both ideas are highly morally important, their interaction is in turn important. In this post we considered the implications of common EA beliefs for the permissibility of abortion. Taking into account moral uncertainty makes aborting a fetus seem far less permissible, as the high counterfactual life expectancy of the baby tends to dominate other factors. Many other EA views are also significant to the issue, making various standard arguments on each side less plausible.

1. There doesn’t seem to be any neutral language one can use here, so I’m just going to switch back and forth between ‘fetus’ and ‘child’ or ‘baby’ in a vain attempt at terminological neutrality.

2. I chose this reason because it is the most frequently cited main motivation for aborting a fetus according to the Guttmacher Institute.

This post made me rather uncomfortable. I think what got to me was a somewhat flippant expression of what it was like for 'Sarah' to be unwantedly pregnant: "She has made plans which are inconsistent with being pregnant, and prefers not to give birth at the current time.", which is summarised in the table as the idea that bringing the fetus to term would be 'inconvenient'. This seems dismissive of the suffering which could be entailed by bringing it to term.

While it seems quite likely that people do compartmentalise, in this particular example it's not at all clear to me that that is a bad rather than good thing. Abortion is a very hot button topic. We're not likely to get any leverage on it one way or the other, because of the resources already invested pushing both ways. It's also likely to be emotional to people (as Lila described in her comment). So actually, taking pains to think through what implications effective altruist ideas might have for abortion, and discussing it a bunch, might just have negative consequences. It might be better to start by focusing on areas where we can 'push the rope sideways'.

It's probably not a good idea to tackle abortion for the reasons you give, but we can't know that if we never think through the implications of our beliefs ('compartmentalisation').

There should probably be a private place to discuss issues like this and determine if they are worth bringing up more publicly, after taking account of the many downsides (stoking internal division, wasting effort on a mainstream issue, alienating/offending half of the political spectrum whichever position we take etc).

Re use of 'inconvenient', I've also used that to describe things that are harmful, but fall short of e.g. a serious injury, for lack of a better English term. However, I've discovered that the general public thinks of inconveniences exclusively as things that waste time or effort. So, for example, eating less tasty food is never an 'inconvenience', even if it is a modest harm.

Using inconvenience this way is confusing, so I've stopped using it as a general term for medium-sized harms, and recommend others do the same.

Instead, depending on context, we can use: loss, misfortune, modest/mild/serious harm, damage and hurt.

Discomfort, maybe

Good phrase; I'll use it in future.

Having experienced (a comparatively smooth and uncomplicated) pregnancy and delivery, 'discomfort' feels wildly inadequate.

For example, rates of postpartum depression are about 10%, higher in adverse circumstances. Depression is one illness where a person's QALYs are plausibly <0.

As well as being woefully inadequate to describe what's involved physically, the analysis here doesn't address the other harms and distress that would be involved in term pregnancy, delivery and relinquishing of a child. The woman's family, personal and work-life would all be affected in difficult to predict and probably negative ways. For example, what if you are a casual worker with no maternity allowance? Almost certainly lose shifts as you get more pregnant, probably lose your job.

Apparently even "Major depressive disorder: severe episode" only costs 0.655 QALYs per year. If we generously assume every instance of postpartum depression is 'major' and 'severe', and generously assume it lasts for 3 years, we get 3 × 0.1 × 0.655 = 0.1965 QALYs. As we were generous on both multiplicative assumptions, it's plausible the true number is much lower. Yet even if it were as high as 0.1965, that's not enough to justify aborting a fetus if you ascribed even a 1% chance of it having moral value.
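The arithmetic in this reply, and the comparison it draws, can be checked directly. This sketch also adds the post's 0.276 QALY pregnancy estimate on top, which the reply does not do explicitly. Even with that addition, the mother's total stays below the expected fetal loss at a 1% credence.

```python
# Postpartum-depression add-on, using the reply's deliberately generous inputs.
P_PPD = 0.10               # rate of postpartum depression
SEVERE_MDD_WEIGHT = 0.655  # QALYs/year for a severe major depressive episode
DURATION_YEARS = 3         # generous assumed duration

ppd_cost = P_PPD * SEVERE_MDD_WEIGHT * DURATION_YEARS  # expected QALYs lost

PREGNANCY_COST = 0.276      # the post's earlier estimate
FETUS_QALYS = 0.98 * 78.7   # survival to birth x US life expectancy
CREDENCE = 0.01             # "even a 1% chance" of moral value

total_cost_to_mother = PREGNANCY_COST + ppd_cost
expected_fetus_loss = CREDENCE * FETUS_QALYS

print(f"PPD: {ppd_cost:.4f}, mother total: {total_cost_to_mother:.4f}, "
      f"fetus at 1%: {expected_fetus_loss:.4f}")
```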

So perhaps my wording choice could have been better - but I don't think it actually makes much difference to the object-level issue.

There are different ways of scoring QALYs – on the face of it, most of the constructs seem pretty dumb: they advantage people with small issues across the board compared to people with life-cripplingly severe issues along one dimension.

Untrained childbirth scores much higher on pain severity than almost all medical problems - at least on one pain scale http://www.thblack.com/links/RSD/McGill500x700.jpg

Do you think we should give up on immigration as well? That's a pretty 'hot button topic' as well. At least in the UK it seems much more political than abortion.

I certainly think we should be wary when discussing immigration: we should be aware that discussing it in detail could be destructive (e.g. alienate people with particular political affiliations entirely), and that it seems intractable. We might want to, for example, wait for the Copenhagen Consensus Centre to do more work on it, and see whether they come up with tractable ways to help the world based on it. On the other hand, the harm I was trying to describe is far greater in the case of abortion than in that of immigration. While I would expect relatively few readers of the blog to be so emotionally attached to one view on immigration that they would find a somewhat careless discussion of it distressing, I would expect decidedly more on abortion. If abortion seemed a more tractable issue, it would likely still be worth having the discussion, while trying to be careful in the language used (just as when comparing the effectiveness of charities, it's important to be sensitive to the suffering relieved by charities which are comparatively ineffective). But given the current state of things, it doesn't seem worth it. This is compounded by the fact that effective altruism in general is sometimes seen as somewhat male-dominated, and the people likely to be put off by insensitive-seeming discussion of abortion are likely to be disproportionately women. Given that, and the huge space of effective research to be done and topics which need further analysis, it seems sensible to focus on those others rather than this one.

One relevant difference between abortion and immigration is that rival views about abortion, but not about immigration, correlate well with positions on the left-right continuum. Pulling the rope sideways thus seems easier in the case of immigration, because you can appeal to both the left and the right, and cannot be accused of being partisan to either.

My impression was that the left is pro-immigrant (at least more so than the right) and the far right is very xenophobic.

Indeed, you're right.

The most frequently cited main motivation for aborting a fetus is "timing is wrong", which does not mention suffering at all, and neither do the next two most frequently cited reasons:

- Can’t afford a baby now

- Have completed my childbearing/have other people depending on me/children are grown

(Source: Guttmacher Institute.)

Given the availability of adoption, "inconvenient" seems like a reasonable description for "timing is wrong".

I agree, however, that sometimes it might cause substantial suffering, which is why I suggested an annualized figure of 0.494 QALYs. That's a very high number! It suggests pregnant women would be almost indifferent between:

pregnancy and then adoption

a 50% chance of going into a coma for the rest of the year

which seems if anything to assign too great a negative weight to pregnancy.
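As a rough check on that comparison, assume (these are assumptions, not figures from the thread) that a coma carries a quality weight of 0, that the baseline is full health with weight 1, and that "the rest of the year" is approximated as a full year. The expected loss from the coma gamble is then:

```latex
\mathbb{E}[\Delta\,\text{QALY}_{\text{coma gamble}}]
  = 0.5 \times (1 - 0) \times 1\ \text{year}
  = 0.5\ \text{QALYs} \approx 0.494\ \text{QALYs}
```

So an annualized loss of 0.494 QALYs sits very close to a coin flip on losing a whole year of healthy life.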

I think we need to be very careful about availability bias and scope insensitivity. Our individual preferences, and the suffering of already existing, literate people, are very cognitively available; the opportunity cost is not. Doing explicit QALY calculations allows us to avoid this, but we have to actually do the calculations.

I've just skimmed this, so sorry if any of these points are covered already.

(1) On the moral uncertainty argument: I think people tend to overlook the fact that abortion can be morally obligatory according to utilitarianism. If having a child will divert people's time and financial resources away from more effective causes then, especially if we consider the expected far-future impact of those resources, someone can be strongly morally obligated to have an abortion. In general, it seems like utilitarianism is going to tend to make pretty strong recommendations either for or against abortion in a given case, depending on the particulars of that case. In cases where someone is morally obligated to have an abortion according to utilitarianism, we have a case of disagreement between moral theories, not a case of moral dominance under uncertainty. I still think this means abortion is worse in most cases than it is typically considered to be, but it seems worth noting that this isn't the simple dominance case it is sometimes assumed to be.

(2) On the QALY assessment of abortions: People have mentioned that this sort of calculation seems to entail that women should consistently get pregnant and adopt out the children throughout their fertile years. One issue here is that it's the additional QALY benefit or loss of having children, compared with what the person would otherwise have done, that should be considered when we try to determine how morally good it is for people to have children. And it seems like a lot of people have the ability to move more QALYs with their resources and time than would be created by having children (either by giving to effective causes, or by convincing or paying someone else to have a child). I think it's very implausible that EAs should have children instead of using those resources elsewhere. Of course, it's less clear in the case of non-EA people. But then I think "if you're not going to spend your money and time more morally, then you're morally obligated to have children whenever you are able to" is a view that it would probably be damaging to publicly endorse.

(3) We might be worried about how the moral uncertainty argument applies in other cases. For example, homosexual acts seem to be mildly morally good on utilitarian views, but according to a lot of religious moral theories, homosexual acts are very morally bad (and very few moral theories say that they are morally good to the same degree). Does this mean that homosexual acts are potentially prohibited under moral uncertainty? Perhaps not, but it seems worth noting that using moral uncertainty arguments against acts like abortion might lead people to this kind of example, so it's worth having something to say about them.

True, and it's worth breaking down what might be publicly damaging:

But we shouldn't let considerations of what is politically correct affect what we believe. We should try to believe that which is true.

I'm not sure why this would be a worry. Yes, moral uncertainty arguments might contradict our prior beliefs. But what is the point of considering new arguments if not to change our minds? We shouldn't expect novel arguments to always yield reassuringly early-21st-century-liberal conclusions. (I say this as someone who believes gay marriage is probably a good thing).

Actually, I'd like to amend my response here. I think there is a clear division between aborting fetuses and homosexuality here. There are strong non-religious arguments for its being wrong to abort a baby, but not very many at all for homosexuality being wrong. It seems that most of the disagreement about homosexuality comes from disagreement about whether Islam is true, which is a factual disagreement, not a moral disagreement. As such, moral uncertainty is much more important for the (im)morality of aborting fetuses than for homosexuality.

Jason Brennan, a philosopher at Georgetown who is at least interested in EA, presents a similar argument here.

This argument is based on an obligation to take any action that helps others more than it hurts us, but this is too strong. There are many opportunities to help others with varying costs to ourselves, and we should prioritize them by the ratio of other-benefit to self-cost.

You have:

I would put the expected QALY impact of abortion as smaller and that of carrying the baby to term as larger, but even taking your numbers as they are, pregnancy is not as efficient as other options. The ratio here is 93 to 1. Let's look at some other options:

Donating a kidney is not as bad as pregnancy in terms of cost to yourself, so let's say -0.1 QALY. Receiving a kidney gets someone about 10 years, and the ability of an altruistic person to enable a chain of donations means maybe this comes to 30 years on average, so say 30 QALYs. This gives a ratio of 300 to 1.

Giving additional money to AMF or SCI is somewhat better than 1 QALY per $100. The impact on a relatively well off first worlder of giving $100 is far less than 1 QALY, and would continue to be until getting down to extremely low levels of spending. The ratio here is at least 1k to 1.

For the level of sacrifice involved in bringing an undesired child to term someone could have much more positive impact in other ways.
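The comparison above amounts to ranking options by benefit-to-cost ratio. A minimal sketch, using the kidney figures from the comment (0.1 QALY cost, 30 QALYs benefit) and taking the pregnancy ratio of 93 to 1 as quoted, since its inputs aren't reproduced here. The AMF/SCI donor cost of 0.001 QALY per $100 is a hypothetical value chosen only to match "at least 1k to 1"; it is not a figure from the thread.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    cost_qalys: float     # QALYs the helper gives up
    benefit_qalys: float  # QALYs gained by others

    @property
    def ratio(self) -> float:
        """Other-benefit per unit of self-cost."""
        return self.benefit_qalys / self.cost_qalys


# Quoted directly in the thread; the underlying inputs are not shown.
PREGNANCY_RATIO = 93.0

options = [
    Option("donate a kidney", cost_qalys=0.1, benefit_qalys=30.0),
    # Hypothetical donor cost: assumed so that the ratio matches "at least 1k to 1".
    Option("give $100 to AMF/SCI", cost_qalys=0.001, benefit_qalys=1.0),
]

# Highest benefit per unit of self-cost first.
ranked = sorted(options, key=lambda o: o.ratio, reverse=True)
for o in ranked:
    print(f"{o.name}: about {o.ratio:,.0f} to 1 (pregnancy: {PREGNANCY_RATIO:.0f} to 1)")
```

Under these inputs both alternatives beat carrying an unwanted pregnancy to term by a wide margin, which is the commenter's point; the ranking is only as good as the assumed costs.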

Good point. The advantage of AMF/SCI might be lower, though, considering the income and quality-of-life difference between poor and rich countries. Having a baby yourself is similar to having an extra poor-country birth and then increasing that person's income to rich-country levels (10-100x), although someone with a given income might be less happy in a rich country if they care about their relative wealth.

I agree with you, conditional on the person actually going on to donate a kidney, or donating a lot to AMF. Most people don't, however: if they abort the fetus, they won't make a compensating kidney donation. So for them, abortion versus carrying to term seems like the right comparison.

Obviously if utilitarianism etc. are false this argument doesn't work.

"Many think EA ideas argue for vegetarianism – but did anyone come to this conclusion who had previously been passionately carnivorous?"

I'm not sure what it means to be passionately carnivorous, but before I was exposed to EA ideas I ate animal products, enjoyed doing so, and did not anticipate stopping. I am now a vegan, and donate to non-human animal charities. My conversion to veganism was based partly on the moral arguments to do so, and partly on moral luck. I wouldn't quite say that EA ideas argue for veganism (I think the "A" part suffices), but I would say that EA ideas give a strong argument for charities that promote reduction of animal product consumption.

I meant the sort of carnivore who says things like

takes delight in trying to persuade their vegetarian friends to try bacon, and so on.

This is plausibly just the typical mind fallacy, but I've always read these as more "being annoyed by fake moral sanctimony, and signalling opposition to it" than actually being pro-omnivory.

I'll try not to descend into the object level here, but I'll continue using your original example. I was anti-choice before I became an EA. EA has actually pushed me to believe, on a rational level, that abortion is of utilitarian value in many cases (though the population ethics are a bit unclear). However, on a visceral level I still find abortion deeply distasteful and awful. So I still "oppose" abortion, whatever that means. I think as an EA, it's okay to have believies (as opposed to beliefs) that are comforting to you, as long as you don't act on them. Many political issues fall into this category. Since I don't vote on the issue of abortion and will probably not become accidentally pregnant, my believy isn't doing any harm.

Believies:

https://www.youtube.com/watch?v=cFsouvVdoJQ

Upvoted; good piece.

It sounds like your statement here amounts to "this attitude triggers a disgust response in me, therefore it's incorrect". I'm not persuaded. A more persuasive argument: there's a danger that our hypothetical woman aborts her fetus, gets rich, and then uses her developing powers of rationalization to find some reason not to give very much money to charity.

Thought experiment: Let's say instead of being a woman, we're dealing with a female android. Unlike humans, androids always know their own minds perfectly, never rationalize, keep all their promises, etc. The android tells you in her robotic voice that she's aborting her android fetus so she can make more money and save more lives, and you know for a fact that she's telling the truth. Does your answer to her stay the same?

Another thought: Maybe the reason you feel that this attitude is repugnant is because it sounds hypocritical. In that case, it might be useful to distinguish between preferences and advocacy. For example, maybe as an EA I would prefer that non-EA women carry their unwanted fetuses to term for the reasons you outline. But that doesn't mean that I have to start protesting at abortion clinics. If someone came to me and asked me whether they should carry their baby to term or not, it seems reasonable for me to respond and say "Well, what are your opportunity costs like? Where will the time and energy raising your baby be spent if you choose to abort it?" and listen to her before giving my answer.

In fact, I would argue that endorsing many universal moral principles, such as "don't abort fetuses", "don't eat animals", and "borders should be open", effectively amounts to compartmentalization--the very thing you wrote this essay against. The real world is complicated. Our values are complicated. Simple principles like "don't eat animals" are intuitively appealing and easy for groups to rally around. But, inconveniently, the world is not simple and our moral principles will conflict. When our moral principles conflict, we should have a process for resolving those conflicts. I'm not sure that process should favor simplicity in the result. In the context of discussing a single principle, the way you discuss your "don't abort fetuses" principle here, it's easy to compartmentalize and avoid letting the "replaceability" principle into the "don't abort fetuses" compartment. I wonder whether, if your essay had been about replaceability instead of abortion, you would have come to the opposite conclusion. In other words, I wonder if humans have a bias towards letting moral conflicts resolve in favor of whichever moral principle is most salient. That seems suboptimal.

Another thought: If women are morally obligated to carry unwanted babies to term, are they also obligated to pump out as many babies as possible during their fertile years? Personally, the idea that the two cases are different strikes me as status quo bias. (Idea based on this paper.)

Ironically, I was partly worried about being accused of offering a straw (wo)man, so I wanted to distance myself from it.

Good analogy. I think I'm basically concerned that this is not the sort of reasoning we can accept from fallible humans, not that it is inherently wrong, so I would be much more tolerant of the android. I Earn to Give, so vaguely similar considerations come up: some activities that are generally self-serving become altruistic because of the resulting donations. In general I think the potential for personal self-deception is sufficiently high that I don't endorse such activities in myself. I guess perhaps I think we're less serious about altruism than we often like to think we are.

You have a good point about second-order compartmentalization.

Yeah I think this is also credible, albeit slightly less so. I frequently feel guilty about not having had children yet. Nor can I simply defend myself by arguing they are too expensive as:

It's also an unusual case because it's one where I am actually falling morally short of the majority of women throughout history. I guess this is how people who think that the 20th century was a disaster because of factory farming feel.

When people like Jeff, Julia, and Bryan say childrearing is cheap, they're saying that it shouldn't stop someone from doing it if they want to, not that it's the cheapest way to make there be more people. That honour would go to existential risk reduction, or failing that to global health measures. No one is trying to get effective altruists to have kids if they don't want to.

Cool. I don't know how I feel about Eliezer's ethical injunctions sequence. I'd say I basically agree with it, with the caveat that I'm maybe half as concerned about it as he is. I'm happy to pirate books, leave lousy tips, "forget" to put away dishes so non-EA roommates do it, etc. in the service of EA, but I'd think very hard before, say, murdering someone.

That said, I'm glad that Eliezer is as concerned as he is... it does a good job of making up for the fact that he's so willing to disregard the opinions of others (to his discredit, in my opinion). You've got to have some kind of safeguard. I guess maybe in my case I feel like I'm well safeguarded by thinking carefully before straying outside the bounds of what friends & society regard as non-horrible ethical behavior, which is why I'm not concerned about aborting a baby leading to some kind of slippery slope of unethicalness... it's on the "sufficiently ethical" side of my "publicly regarded as non-horrible" fence.

I think you're only morally obligated to have kids insofar as they're the cheapest way to purchase QALYs with your time, energy, and money. I expect existential risk reduction is the cheapest way to do this if you think future lives have value comparable to present lives.* I'm not sure how it compares to GiveWell's top charities. If it turns out having kids really is the cheapest way to purchase QALYs, I wonder if you're best off focusing on efforts to get other people to have kids (or improving gender relations so people get married more, or something like that), and only having kids to facilitate your advocacy and make it clear that you aren't a hypocrite.

* This is the strongest argument I've seen for this view from a perspective that values future lives, but others have argued that decreased fertility and a slowing economy will be good for x-risk reduction--the issue seems complicated.

One final point: I tend to think that even in well-developed countries, many people live lives that are full of misery (I'm wealthy and privileged and employed with hundreds of facebook friends and I still feel intense misery much more often than I feel intense joy). That's part of the reason why I'm so bullish on H+ causes.

This seems to be a further example of the OP's point, which she overlooked. There are multiple principles one might use to differentiate the two views:

but many of them are not available to EAs:

I agree with pretty much all of this, especially the last two paragraphs.

That last comparison also highlights that the argument against abortion in the popular space is overwhelmingly deontological, rather than consequentialist; it revolves around whether 'foetuses are people' or not, and therefore whether 'abortion is murder' or not, with the assumption granted (on both sides) that murder is automatically wrong. Which is why the popular space doesn't make the link between women having abortions and women simply choosing not to have children.

And if you are deontological in your thinking, the argument about moral uncertainty is pretty strong; a 10% chance (say) of committing murder sounds terrible.

But for me personally, as I've become more consequentialist in my thinking (which EA has been a part of, though not the only part), it's actually pushed me more and more pro-abortion.

I think this is missing the point of the post. I'm sure you are pretty consequentialist in your thinking, so am I. But are you certain that consequentialism is the correct moral theory? Such certainty seems implausible, there are lots of examples where consequentialism is in favour of things that seem crazy. If you do have some credence in moral theories that ban murder, and that include abortion as a case of murder, you are going to have to take that into account if you wish to act morally.

Maybe I'm misunderstanding you, but I actually don't think I missed that point at all if you read to the end of my post.

The whole point is that I'm not certain that consequentialism is correct but that my internal probability of it being so has been sharply rising, which is why "as I've become more consequentialist in my thinking...it's actually pushed me more and more pro-abortion". The 'more' implies lack of certainty/conviction here both for my current and (especially) my past self.

I'm claiming that deontology broadly provides more of the anti-abortion arguments than consequentialism does, certainly in the popular space. So it's reasonable for more consequentialist groups (like EAs) to be more pro-abortion.

If your only point is that people with greater degrees of consequentialism should be more pro-abortion, then I would agree. However, I interpreted your comment as also saying or implying that you were, in fact, pro-abortion, which is of course different (and I apologise if you didn't imply this).

I agree with you

I also posted this comment at Less Wrong, but I guess I'll post it here as well...

As someone who's had a very nuanced view of abortion, as well as a recent EA convert who was thinking about writing about this, I'm glad you wrote this. It's probably a better and more well-constructed post than what I would have been able to put together.

The argument in your post, though, seems to assume that we have only two options, either to ban or not ban all abortion, when in fact we can take a much more nuanced approach.

My own, pre-EA views are nuanced to the extent that I view personhood as something that goes from 0 before conception, to 1 at birth, and gradually increases in between the two. This satisfies certain facts of pregnancy, such as that twins can form after conception and we don't consider each twin part of a single "person", but rather two "persons". Thus, I am inclined to think that personhood cannot begin at conception. On the other hand, infanticide arguments notwithstanding, it seems clear to me that a mature baby both one second before, and one second after it is born, is a person in the sense that it is a viable human being capable of feeling conscious experiences.

I've also considered the neuroscience research suggesting that fetuses in the womb as early as 20 weeks are capable of memorizing the music played to them. This, along with the completion of the thalamocortical connections at around 26 weeks and evidence of sensory response to pain at 30 weeks, suggests to me that the fetus develops the ability to sense and feel well before birth.

All this together means that my nuanced view is that if we have to draw a line in the sand over when abortion should and shouldn't be permissible, I would tentatively favour somewhere around 20 weeks, or the midpoint of pregnancy. I would also consider something along the lines of no restrictions in the first trimester, some restrictions in the second trimester, and a full ban in the third trimester, with exceptions for if the mother's life is in danger (in which case we save the mother because the mother is likely more sentient).

Note that in practice the vast majority of abortions happen in the first trimester, and many doctors refuse to perform late-term abortions anyway, so these kinds of restrictions would not significantly change the number of abortions that occur.

That was my thinking before considering the EA considerations. However, when I give thought to the moral uncertainty and the future persons arguments, I find that I am less confident in my old ideas now, so thank you for this post.

Actually, I can imagine that one way of integrating EA considerations into my old ideas would be to weigh the value of the fetus not only by its "personhood", but also by its "potential personhood given moral uncertainty" and its expected QALYs. Though perhaps the QALYs argument dominates over everything else.
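A minimal sketch of the first part of that weighting: personhood rising from 0 at conception to 1 at birth, multiplied by lifetime QALYs. The linear shape of the ramp and the 40-week term are assumptions (the comment only says personhood "gradually increases"), and the 70-QALY lifetime is the "70-odd QALYs" figure used elsewhere in the thread. It deliberately leaves out the moral-uncertainty term, which would need a joint probability distribution rather than a single multiplier.

```python
# Hypothetical sketch: assumes personhood rises *linearly* from 0 at conception
# to 1 at birth, and that birth occurs at 40 weeks. Neither assumption comes
# from the comment itself.
FULL_TERM_WEEKS = 40.0
LIFETIME_QALYS = 70.0  # "70-odd QALYs" figure used elsewhere in the thread


def personhood(weeks: float) -> float:
    """Linear personhood ramp, clamped to [0, 1]."""
    return min(max(weeks / FULL_TERM_WEEKS, 0.0), 1.0)


def personhood_weighted_qalys(weeks: float) -> float:
    """Lifetime QALYs discounted by the assumed degree of personhood."""
    return personhood(weeks) * LIFETIME_QALYS


for w in (8, 20, 30):
    print(f"week {w}: personhood {personhood(w):.2f}, "
          f"weighted QALYs {personhood_weighted_qalys(w):.1f}")
```

On this toy model the 20-week line the commenter favours corresponds to a personhood weight of 0.5; a different curve shape would move that point.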

Regardless, I'm impressed that you were willing to handle such a controversial topic as this.

Haha, I now feel bad leaving this comment unanswered because it is very thoughtful, so I guess I'll copy-paste my response too...

Thanks! It took a long time - and was quite stressful. I'm glad you liked it.

I actually deliberately avoided discussing legal issues (ban or not ban) because I felt the purely moral issues were complicated enough already.

Yeah, if you want to do both you need a joint probability distribution, which seemed a little in-depth for this (already very long!) post.

I had another thought as well. In your calculation, you only factor in the potential person's QALYs. But if we're really dealing with potential people here, what about the potential offspring or descendants of the potential person as well?

What I mean by this is, when you kill someone, generally speaking, aren't you also killing all that person's future possible descendants as well? If we care about future people as much as present people, don't we have to account for the arbitrarily high number of possible descendants that anyone could theoretically have?

So, wouldn't the actual number of QALYs be more like +/- Infinity, where the sign of the value is based on whether or not the average life has more net happiness than suffering, and as such, is considered worth living?

Thus, it seems like the question of abortion can be encompassed in the question of suicide, and whether or not to perpetuate or end life generally.

My main thought on the matter is that decompartmentalising has its disadvantages as well, as has been discussed in depth at (of course!) LessWrong.

First, here are some posts taking your old-school view that decompartmentalising is valuable: Compartmentalisation in epistemic and instrumental rationality, Taking Ideas Seriously. They give similar arguments to you but in both cases, the authors have since revised their opinions. For the first piece, Anna wrote a significant comment moderating her conclusions. For the latter piece, Will retracted it.

Phil Goetz wrote one of LessWrong's most upvoted pieces, arguing that decompartmentalisation is often irrational, Reason as a Memetic Immune Disorder.

So why have people started off so in favour of decompartmentalisation and then turned their positions around? If, like Dale, you're really interested in this topic, it's probably worthwhile to work through some of these original sources. But some of the general issues are that decompartmentalising means treading an uncommon path, which means that you have fewer footsteps to follow in, which means that you'll end up with weird and incorrect beliefs a lot of the time. It's also very likely that decompartmentalising (letting some beliefs affect all areas of decision-making) will lead to fanaticism (overvaluing certain beliefs).

It's kind-of a timeless question in rationality. But the growing consensus I'm discovering is that decompartmentalisation should be matched with what Anna calls 'safety features' - how to know when you have strange beliefs, and when to backtrack, when to think more, when to get friends to review your opinions, when to cede to the majority viewpoint, et cetera. Basically, I think that we need to work to put safety measures onto our thinking, and share these around the effective altruist community.

Other posts about problems with consequentialism and rationality include Virtue Ethics for Consequentialists and the less directly relevant but also interesting Ontological Crises.

Yeah, I basically agree with this. The problem is that much of the EA movement relies on decompartmentalizing - Singer's classic Drowning Child argument basically relies on us decompartmentalizing our views on local charity from those on third world charity.

Indeed, many people would argue that EAs are fanatical - imagine taking some weird philosophy so seriously you end up giving away millions of dollars! Or worse, giving up your fulfilling career to become a miserable corporate lawyer!

(I liked this post, but apologise for pushing into object level concerns.)

It isn't wholly clear to me that 'EA concerns' will generally push against broadly liberal views on abortion - it seems to depend a lot on what considerations one weighed heavily either pro- or con- abortion in the first place. I definitely agree that common EA considerations could well undermine reasons which led someone to a liberal view of abortion, but they may also undermine reasons which led to a conservative one. A few examples of the latter:

1) One may think that human life is uniquely sacred. Yet one might find this belief challenged (among other ways) by folks interested in animal welfare, and conclude that instead of setting humans on a different moral order from other animals, we should place them on a continuum according to some metric that 'really' explains moral value (degree of consciousness, or similar). Although fetuses may well remain objects of moral concern, they become less sacrosanct, and so abortion becomes more conscionable.

2) As Amanda alludes to, consequentialist/counterfactual reasoning will supply many cases where abortion becomes permissible depending on particular circumstances, especially where one lives in a world where QALYs are relatively cheap (suppose Sarah wants to continue her pregnancy, but I offer to give £20,000 to AMF if she has an abortion instead - ignoring all second-order effects, this is likely a good deal; more realistically, one could include factors like expected loss-of-earnings, stigma, etc. etc.). If one's opposition to abortion is motivated by deontological concerns, a move to teleological ways of thinking may lead one to accept that there will be many cases when abortion is morally preferable.

3) Total views and replaceability could also be supportive. If Sarah anticipates being in a better position to start a family later (so both she and the child stand to benefit), then the problem reduces to a different-number population problem (imagine a 3x2 table: Sarah gains some QALYs by aborting rather than adopting out, the 'current fetus' loses some QALYs by abortion, yet the 'counterfactual' fetus gains even more QALYs by abortion, as it will be brought into existence and has, ex hypothesi, a better life than the current fetus would have). So the choice to abort (on the proviso of having a child in better circumstances) looks pretty good. (You are right to note there are other corrections, but they seem less significant.)
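The 3x2 table described in point 3 can be written out symbolically. The symbols are introduced here for illustration only (no values come from the thread): S_c and S_a are Sarah's QALYs if she continues the pregnancy and adopts out or aborts and has a child later, Q_1 is the current fetus's lifetime QALYs, and Q_2 is the later child's.

```latex
\begin{array}{l|cc}
 & \text{continue, adopt out} & \text{abort, child later} \\ \hline
\text{Sarah}                & S_c & S_a \\
\text{current fetus}        & Q_1 & 0   \\
\text{counterfactual fetus} & 0   & Q_2
\end{array}
\qquad
\Delta_{\text{abort}} = (S_a - S_c) + (Q_2 - Q_1)
```

Under the stated hypotheses, S_a > S_c and Q_2 > Q_1, so both bracketed terms are positive and the difference favours aborting and having a child later.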

The all-things-considered calculus is unclear, but it is not obvious to me the 'direction of travel' after contemplating EA-related principles is generally against abortion, and I would guess even an 'EA perspective' constructed from the resources you suggest would not be considered a conservative view. It may be that the inconvenience and risk of being pregnant would be insufficient to justify an abortion by the lights of this 'EA perspective', but most women who had an abortion had weightier concerns motivating them - concerns the 'EA perspective' might well endorse as sufficient justification in the majority of cases abortions have actually occurred.

There might be a regression to the mean issue, where the considerations found compelling by the lights of one perspective will generally look less compelling when that perspective changes (so if one was 'pre-EA' strongly in favour of a liberal view on abortion, this enthusiasm would likely regress). There's also a related point that one should not expect all updates to be psychologically pleasant, and they're probably not that much more likely to comfort our prior convictions than to offend them. But I'm not sure the object-level example provides good support for the more meta considerations.

Good point. I suspect this may end up being mathematically equivalent to the moral uncertainty argument, which in practice equates to counting fetuses as having 30% of the value of an adult. In both cases EA-concerns lead to taking a midpoint between the "fetuses don't matter" and "unborn babies are equally valuable" points of view.

My impression is that the largest determinant of child success is genetic, followed by shared environment - direct parental environmental influences are small. On the one hand, this suggests that simply waiting will not improve things all that much, especially as she should be uncertain as to whether her situation really will improve. On the other hand, it suggests an easy way to improve the 'quality' of the later baby - choose a higher quality father. This improvement quite possibly wouldn't be included in QALYs (as they are bounded above by 1) but seems significant nonetheless.

That's a good point. Indeed, it seems that in basically every instance EA-considerations degrade the quality of the standard object-level arguments. They then make up for this by supplying entirely new arguments - moral uncertainty, replaceability.

Do you have any cases in mind where EA considerations cause psychologically unpleasant updates?

When considering a controversial political issue, an EA should also think about whether there are positions to take that differ from those typically presented in the mainstream media. There might be alternatives that EA reasoning opens up that people traditionally avoid because they, for example, stick to deontological reasoning and believe that either an act is right or it is wrong in all cases, and that these restrictions should be codified into law.

For the object-level example raised in the article, the traditional framing is "abortion should be legal" vs. "abortion should be illegal". One alternative might be, for example, performing other social interventions aimed at reducing the number of abortions within a framework where abortion is legal (i.e., increasing the social support offered to single mothers, so that fewer people choose to have an abortion).

I've just realized that a possible failure mode here would be the conversation descending into a general discussion of abortion, which I've avoided in the post. Instead, please limit any comments to the actual subject of the post:

Thus the article discusses the impact of believing in animal rights on fetus-personhood - but not the object-level issue of whether fetuses are people.

If you don't think abortion is a good concrete application to discuss, you should probably change the original post to work through a different example.

Perhaps kidney donation? The sacrifice of kidney donation is smaller, the benefit is arguably larger because there's no moral uncertainty as to whether the recipients count, and a much larger fraction of us are in a position to donate one?

I don't think this is a fair restriction on commentary for this sort of article, especially since you go into the example in such detail. You're suggesting that having EA beliefs seems to imply a higher degree of belief in the immorality of abortion than some people do have. People can respond to this by (a) retaining their abortion beliefs and changing their EA beliefs, (b) retaining their EA beliefs and changing their abortion beliefs, (c) retaining their EA beliefs and their abortion beliefs and rejecting the consistency requirement, or (d) retaining their EA beliefs and their abortion beliefs and rejecting the entailment. I don't really see why we should prevent people from defending (d) here.

I think if you want people to think about the meta-level, you would be better off with a post that says "suppose you have an argument for abortion" or "suppose you believe this simple argument X for abortion is correct" (where X is obviously a strawman, and raised as a hypothetical), and asks "what ought you do based on assuming this belief is true". There may be a less controversial topic to use in this case.

If you want to start an object-level discussion of abortion (which, if you believe this argument is true, it seems you ought to), it might be helpful to circulate the article you want to use to start the discussion to a few EAs with varying positions on the topic for feedback before posting, because it is a topic likely to push political buttons.

I agree with Amanda that you discuss abortion at too much length to not make it open for discussion.

You should probably edit this into the post to make sure it's visible to everyone who comments.

Thanks for the post, Dale. It's interesting to think about how EAs' beliefs touch on their other beliefs. It's received a bunch of criticism, which I mostly agree with, for focussing on a very controversial topic (abortion), for using offensive language ('convenient', 'baby'), and for not privately circulating drafts. As a moderator, I'm not interested in deleting the post, but I do hope the feedback will be taken on board to improve future posts!

I remember Will bringing this up years ago in Oxford, but it's good to see the QALY calculation worked out in detail. To generalise somewhat, it seems that because the quality weight of a year of life is bounded between 0 and 1, there's only limited room for quality improvements. Instead, issues that involve life and death, either by increasing the number of people or their lifespan, will likely dominate the calculations. In this case, the 70-odd QALYs from an entire life dominate the possible QALY loss due to pregnancy, which lies within [0, 0.75].
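To make the bound concrete, here is a rough sketch, assuming pregnancy lasts about nine months (0.75 years) and that the quality weight can fall by at most 1 per year of life. The symbols $\Delta Q_{\text{preg}}$ and $Q_{\text{life}}$ are introduced here purely for illustration:

$$
0 \le \Delta Q_{\text{preg}} \le 0.75\,\text{yr} \times 1\,\tfrac{\text{QALY}}{\text{yr}} = 0.75\ \text{QALY},
\qquad
\frac{Q_{\text{life}}}{\Delta Q_{\text{preg}}} \ge \frac{70}{0.75} \approx 93
$$

So even under the worst-case quality loss, the whole-life term is roughly two orders of magnitude larger.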

Yes - though perhaps this is a limitation in how QALYs are calculated.

In particular, QALYs don't incorporate money, only health. For large changes in health, this isn't that important, since people are generally willing to pay a lot for health ($50-100k for a 1% reduction in the chance of dying). In this case, however, the monetary costs of pregnancy might be comparable to the direct health costs.
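To put the willingness-to-pay figure in familiar units, a minimal sketch. It assumes that "1% decreased chance of dying" means an absolute drop of 0.01 in the probability of death, and that willingness to pay scales linearly with risk. The function name `implied_value_of_life` is hypothetical. Dividing the payment by the risk reduction gives the implied value of a statistical life, which here works out to roughly $5-10 million.

```python
def implied_value_of_life(willingness_to_pay: float, risk_reduction: float) -> float:
    """Dollars per statistical life implied by paying `willingness_to_pay`
    for an absolute reduction `risk_reduction` in the probability of dying.

    Assumes willingness to pay scales linearly with the size of the risk
    reduction (a simplifying assumption, not a claim from the thread).
    """
    if risk_reduction <= 0:
        raise ValueError("risk_reduction must be positive")
    return willingness_to_pay / risk_reduction


if __name__ == "__main__":
    # The $50-100k range quoted above, each for a 1% (0.01) risk reduction.
    for wtp in (50_000, 100_000):
        value = implied_value_of_life(wtp, 0.01)
        print(f"${wtp:,} for a 1% risk reduction -> ${value:,.0f} per statistical life")
```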