We can probably influence the world in the near future, as well as in the far future, and both near and far seem to matter.

The point I want to convey here is that, unless you are living in a particularly unusual time of your personal history, the influence you can have on the world in the next 2 years is the most important influence you will have in any future pair of years. To do that I will use personal stories, as I did in my last post.

Let us assume for the time being that you care about the far future a substantial amount. This is a common position that many, though not all, EAs converge on after a few years of involvement with the movement. We are assuming this to steel-man the case against the importance of the next 2 years. If what matters is more than 100 years into the future, what difference could 2015 to 2017 make?

Why what I do now, relatively, doesn't matter anymore

I believe the difference to be a lot. When I look back at the sequence of transformations that happened to me, and that I caused, after being a visiting fellow at the old version of MIRI (the birth of EA me), one event stands out as more causally relevant than, quite likely, all others put together: giving a short TED talk on Effective Altruism and Friendly AI. It mattered a great deal. It didn't matter so much because it was good, or because it conveyed complex ideas to the general public - it didn't - or for whichever other reason a more self-serving version of me might have wanted to pretend.

It mattered because it happened soon, because it was the first, and because I nagged the TED organizers about getting someone to talk about EA at the TED global event (the event was framed by TED as a sort of pre-casting for the big TED global 2013). TED 2013 was the one to which Singer was invited. I don't know if there was a relation.

If I went to the same event today, it would make only a minor dent, and it hasn't even been three years.

Fiction: Suppose I now continue my life striving to be a good, or even a very good, EA. I do research on AGI, I talk to friends about charity, and I even get, say, one very rich industrialist to give a lot of money to GiveWell-recommended charities at some point in late 2023. It is quite likely that none of this will ever surpass the importance of making the movement grow bigger (and better) through that initial acceleration, that single silly one-night kick-starter.

The leveraging power you can still have

Now think about the world in which Toby Ord and Will MacAskill did not start Giving What We Can.

The world in which Bostrom did not publish Superintelligence, and therefore Elon Musk, Bill Gates, and Steve Wozniak have not yet turned to "our side".

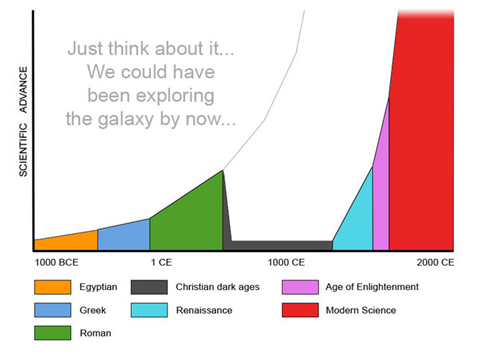

The EA movement is growing in number, in resources, in power, and in accessing the people in positions of power. You can still change the derivative of the growth curve so it grows faster, like they could have done in the joke below:

But not for long. Soon we will have exhausted all the incredibly efficient derivative-increasing opportunities, and only the merely efficient ones will remain.

Self-Improvement versus Effectiveness

It is commonly held among EAs that self-improvement is the route to becoming a maximally causally powerful individual, so that you can do the maximum altruistic good. A lot of people's time goes to self-improvement.

Outreach and getting others to do work are neglected because of that, in my opinion. Working on self-improvement can be tremendously useful, but some people use it to enter a cocoon from which they believe they will emerge as butterflies much later. They may even be right, but much later may be too late for their altruism.

Doesn't this argument hold, at any point in time, for the next two years?

Yes. Unless you are, say, going to finish college in two years, or repaying a debt so you can work on the FAI team three years from now, or in some other very unusual situation, most of your power involves contacting the right people now, having ideas now, and getting people as good as you to join your cause now.

Recently I watched a GWWC pledge event at which an expected one million dollars in future earnings was pledged. This was the result of a class on Altruism given to undergrads in Berkeley (hooray Ajea and Oliver, by the way). You can probably get someone to teach a course on EA, or do it yourself, now. Then all the consequences of those people turning into EAs now, versus in five years or, for some people, never, are on you.

If it holds at any time, does it make sense?

Of course it does. What Singer did in 1972 has influenced us all more than anything he could do now. But he is alive now, he can't change the past, and he is doing his best with the next two years.

The same is true of you now. In fact, if you have a budget of resources to allocate to effective altruism, I suggest you go all in during the next two years (or one year, if you like moving fast). Your time will never be worth the same amount of Altruistic Utilons after that. For some of us, I believe that there will be a crossover point, a point at which your actions matter less than the effort you are willing to dedicate to them. This is your EA-retirement day. When it comes depends on which other values you have besides EA and on how old you are.

But the next two years are going to be very important, and starting to act on them now, going all in, seems to me like an extremely reasonable strategy to adopt even if you have a limited number of total hours or amount of effort you intend to allocate to EA. If you made a pledge to donate 10% of your time, for instance, donate the first 10% you can get a hold of.

Exceptions

Many situations are candidate exceptions to this "biennial all-in" policy I'm suggesting. Before the end of Death was fathomable, it would have been a bad idea to spend two years fighting it, as Bostrom suggests in the fable. If in two years you will finish some particularly important project that will enable you to do incredible things, and how well you do it matters less than getting it done (say you will get a law degree in two years), then maybe hold your breath. If you are an AI researcher who thinks the most important years for AI will be between 10 and 20 years from now, it may not be your time to go all in yet. There are many other cases.

But for everyone else, the next two years will be the most important future two years you will ever get to have as an active altruist.

Make the best of them!

My claim is a little narrower than the one you correctly criticize.

I believe that for movements like EA, and for some other types of crucial consideration events (atomic bombs, FAI, perhaps the end of aging) there are windows of opportunity where resources have the sort of exponential payoff decay you describe.

I have high confidence that the EA window of opportunity is currently in force. So EAs as such are currently in this situation. I think it is possible that AI's window is currently open as well, though I'm far less confident in that. With Bostrom, I think that the "strategic considerations" or "crucial considerations" time window is currently open. I believe the atomic bomb time window was in full force in 1954, and I highly commend the actions of Bertrand Russell in convincing Einstein to sign the anti-bomb manifesto, just as today I commend the actions of those who brought about the anti-UFAI manifesto. This is one way in which what I intend to claim is narrower.

The other way is that all of this rests on a conditional: assuming that EA as a movement is right. Not that it is metaphysically right, but right under some simpler definition: in most of the ways history could unfold, people would look back and say that EA was a good idea, like we say today that the Russell-Einstein manifesto was a good idea.

As for reasons to believe the EA window of opportunity is currently open, I offer the stories above (TED, Superintelligence, GWWC, and others...), the small size of the movement at the moment, the unusual level of tractability that charities have acquired in the last few years due to technological ingenuity, the globalization of knowledge - which increases the scope of what you can do a substantial amount - the fact that we have some, but not all financial tycoons yet, etc...

As to the factor of resource value decrease, I withhold judgement, but will say the factor could go down a lot from what it currently is, and the claim would still hold (which I tried to convey by Singer's 1972 example).

We have financial tycoons?? Then why is there still room for funding with AMF, GiveDirectly, SCI, and DwTW?? Presumably they're just flirting with us.