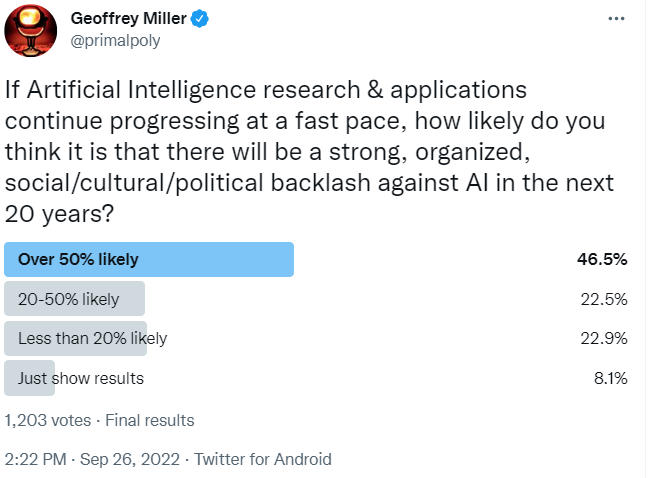

I ran a little Twitter poll yesterday asking about the likelihood of a strong, organized backlash against Artificial Intelligence (AI) in the next 20 years. The results from 1,203 votes are shown above; I'm curious about your reactions to them.

Caveats about poll sampling bias: Only about 1% of my 124k followers voted (which is pretty typical for polls). As far as I can tell, most of my followers seem to be based in the US, with some in the UK, Europe, etc.; most are politically centrist, conservative, libertarian, or slightly Lefty; most are male, college-educated, and somewhat ornery. These results should not be taken seriously as a globally, demographically, or politically representative poll; more research is needed.

Nonetheless: out of the 1,106 people who voted for one of the likelihood options, about 69% expected more than a 20% likelihood of a strong anti-AI backlash. That's much higher than I expected, given that most people arguably carry around dozens of narrow AI systems every day in the form of apps on their smartphones.

We've seen timelines predicting when AGI will be developed. Has anyone developed any timelines about if/when an anti-AI backlash might develop, and/or considered how such a backlash might delay (or stop) AI research and development?

I'm familiar with arguments that there are irresistible geopolitical and corporate incentives to develop AI as fast as possible, and that formal government regulation of AI would be unlikely to slow that pace. However, the arguments I've seen so far don't seem to take seriously the many ways that informal shifts in social values could stigmatize AI research, analogous to the ways that the BLM movement, MeToo movement, anti-GMO movement, anti-vax movement, etc., have stigmatized some previously accepted behaviors and values, even those that had strong government and corporate support.

PS: I ran two other related polls that might be of interest: one on general attitudes towards AI researchers (N=731 votes; slightly positive attitudes overall, but mixed), and one on whether people would believe AI experts who claim to have developed theorems proving some reassuring AI safety and alignment results (N=972 votes; overwhelming 'no' votes, indicating high skepticism about formal alignment claims).

I think if there is major labor displacement by AI, there will be a backlash, based on historical precedent alone. It would be much weirder if people were happy, or even neutral, about being replaced. I also think the idea that large numbers of people can be retrained is unrealistic, especially if they are middle-aged. Most of the jobs that are prone to replacement are low- or middle-skill, and retraining for a higher-skill job requires quite a bit of effort. The other side of the coin is that the demographic shift in the coming decades may keep the labor market tight enough to prevent automation from having such a huge impact. The increased automation may just make up for lower labor force participation.

Good points. Collapsing birth rates and changing demographics might slightly soften the technological unemployment problem for younger people. But older people who have been doing the same job for 20-30 years will not be keen to 'retrain', start their careers over, and make an entry-level income in a job that, in turn, might be automated out of existence within another few years.