Epistemic status: speculative

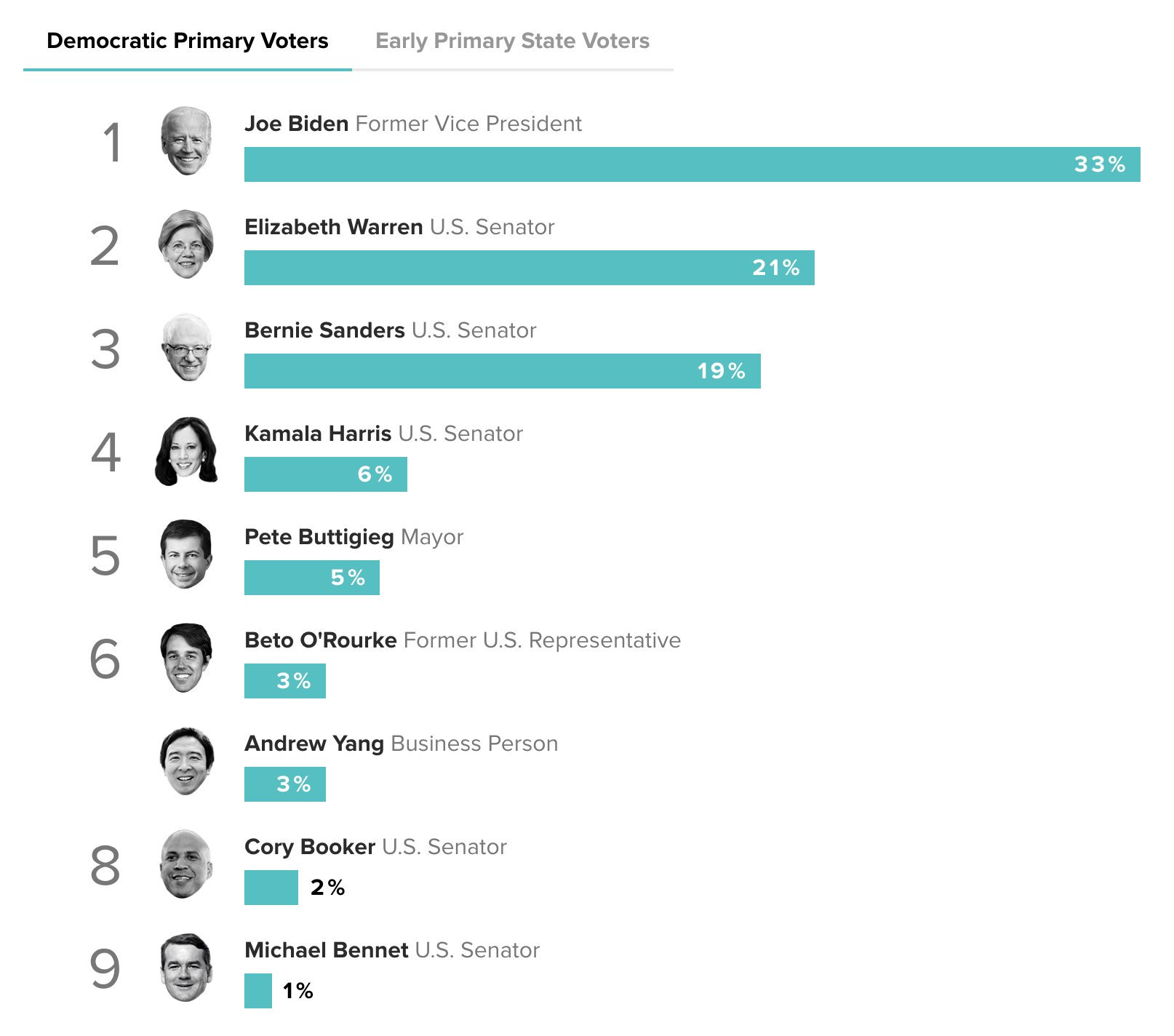

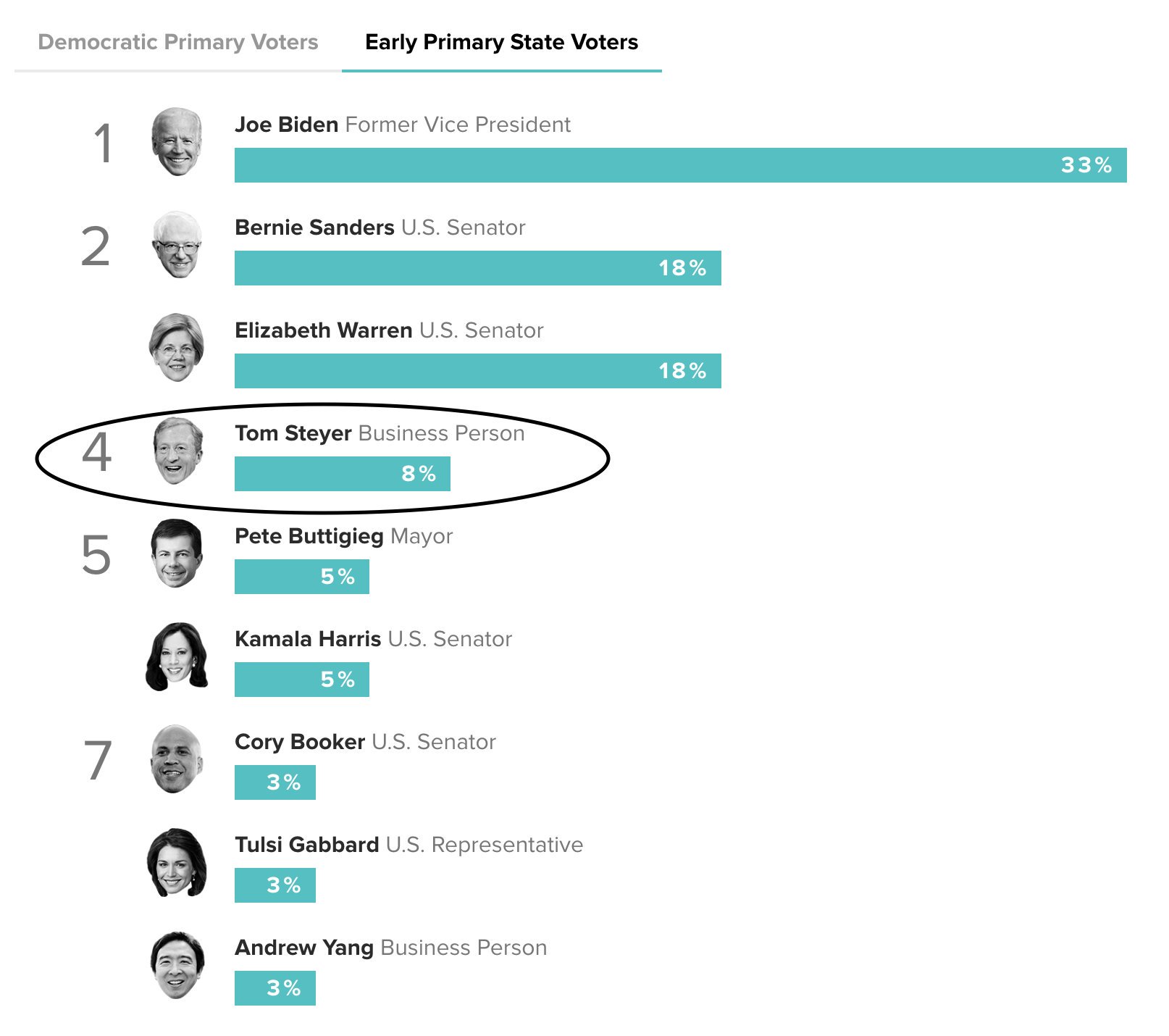

Andrew Yang understands AI X-risk. Tom Steyer has spent $7 million on ads in early primary states, and it has had a big effect.

If a candidate gets more than 15% of the vote in any given Iowa caucus, they get delegates. Doing that consistently across many caucuses would be an important milestone for an outsider candidate. I'm probably biased because I think many of his policies are correct, but I think that if Andrew Yang becomes mainstream and is accepted by some "sensible people" after a few early primaries, there's a decent chance he would win the primary. (And I think he has at least a 50% chance of beating Trump.) It also seems surprisingly easy to have an outsize influence in the money-in-politics landscape. Peter Thiel's early investment in Trump looks brilliant today (at accomplishing the terrible goal of installing a protectionist).

From an AI policy standpoint, having the leader of the free world on board would be big. This opportunity potentially makes AI policy money-constrained rather than talent-constrained for the moment.

I don't think that making alignment a partisan issue is a likely outcome. The president's actions would be executive guidance for a few agencies. This sort of thing often reflects partisan ideology, but doesn't cause it. And Yang hasn't been pushing AI risk as a strong campaign issue; he has only acknowledged it modestly. If you think that AI risk could become a partisan battle, you might want to ask yourself why automation of labor, Yang's loudest talking point, has NOT become subject to partisan division (even though some people disagree with it).