I am a lawyer. I am not licensed in California, or Delaware, or any of the states that likely govern OpenAI's employment contracts. So take what I am about to say with a grain of salt, as commentary rather than legal advice. But I am begging any California-licensed attorneys reading this to look into it in more detail. California may have idiosyncratic laws that completely destroy my analysis, which is based solely on general principles of contract law and not any research or analysis of state-specific statutes or cases. I also have not seen the actual contracts and am relying on media reports. But.

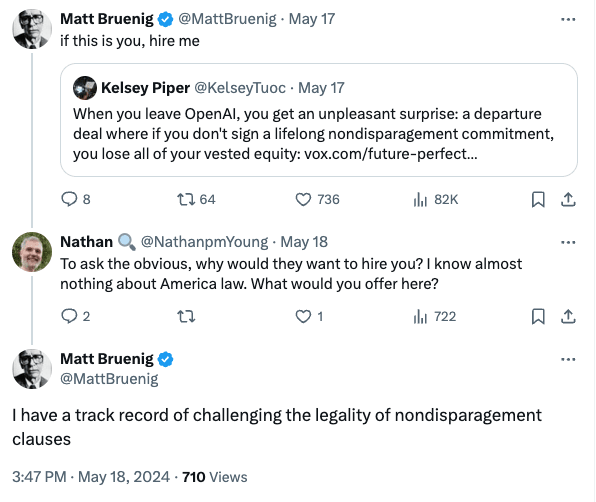

I think the OpenAI anti-whistleblower agreement is completely unenforceable, with two caveats. Common law contract principles generally don't permit surprises, and don't allow new terms unless some mutual promise is made in return for those new terms. A valid contract requires a "meeting of the minds".

Per Kelsey Tuoc's reporting, the only warning about this nondisparagement agreement required at termination is a line in the employment contract requiring that employees sacrifice even their vested profit participation units if they refuse to sign a general release upon ending their employment. Then OpenAI demands a "general release" that includes a lifetime nondisparagement clause. Which is not a standard part of a "general release". As commonly understood, that term means you give up the right to sue them for anything that happened during your employment.

Now, employment contracts can get tricky. If you have at-will employment, as the vast majority of people do, your contract is subject to renegotiation at any time. Because you have no continued right to your job, an employer can legally impose new terms in exchange for your continued employment. If you don't like it, your remedy is to quit. But vested PPUs are different because you already have a right to them. New terms can't be imposed unless there are new benefits.

So, the caveats:

1. The employment contract might define "general release" differently than that term is normally used. Contracts are allowed to do this; you are presumed to have read and understood everything in a contract you sign, even if the contract is too long for that to be realistic, and contract law promotes the freedom of the parties to agree to whatever they want. You might still be able to contest this if it's an "adhesion contract", wherein you have no realistic opportunity to negotiate, but I suspect most OpenAI employees have enough bargaining power that they don't have this out.

2. OpenAI might give out some kind of deal sweetener in exchange for the nondisparagement agreement. While contract law requires mutual promises, they don't have to be of equal value. It might, for example, offer mutual nondisparagement provisions that weren't included in the employment agreement. That's the trick I would use to make it enforceable.

So, tldr, consult a lawyer. The employees who have already left and signed a new agreement might be screwed, but anyone else thinking about leaving OpenAI, and anyone who left without signing anything, can probably keep their PPUs and their freedom to speak, if they consult a lawyer before leaving. If you are a lawyer licensed in CA (or DE, or whatever state turns out to govern OpenAI's employment contracts), my ask is that you give some serious thought to helping anyone who has left/wants to leave OpenAI navigate these challenges, and drop a line in the comments with your contact info if you decide you want to do so.

Low thousands? Obviously I haven't seen the documents, but a few hours times a few hundred dollars per billable hour should be in the ballpark. Obviously someone might want a more in-depth study before taking actions that could expose them to massive liability, though...