This is a short impression of the AI Safety Europe Retreat (AISER) 2023 in Berlin.

Tl;dr: 67 people working on AI safety technical research, AI governance, and AI safety field building came together for three days to learn, connect, and make progress on AI safety.

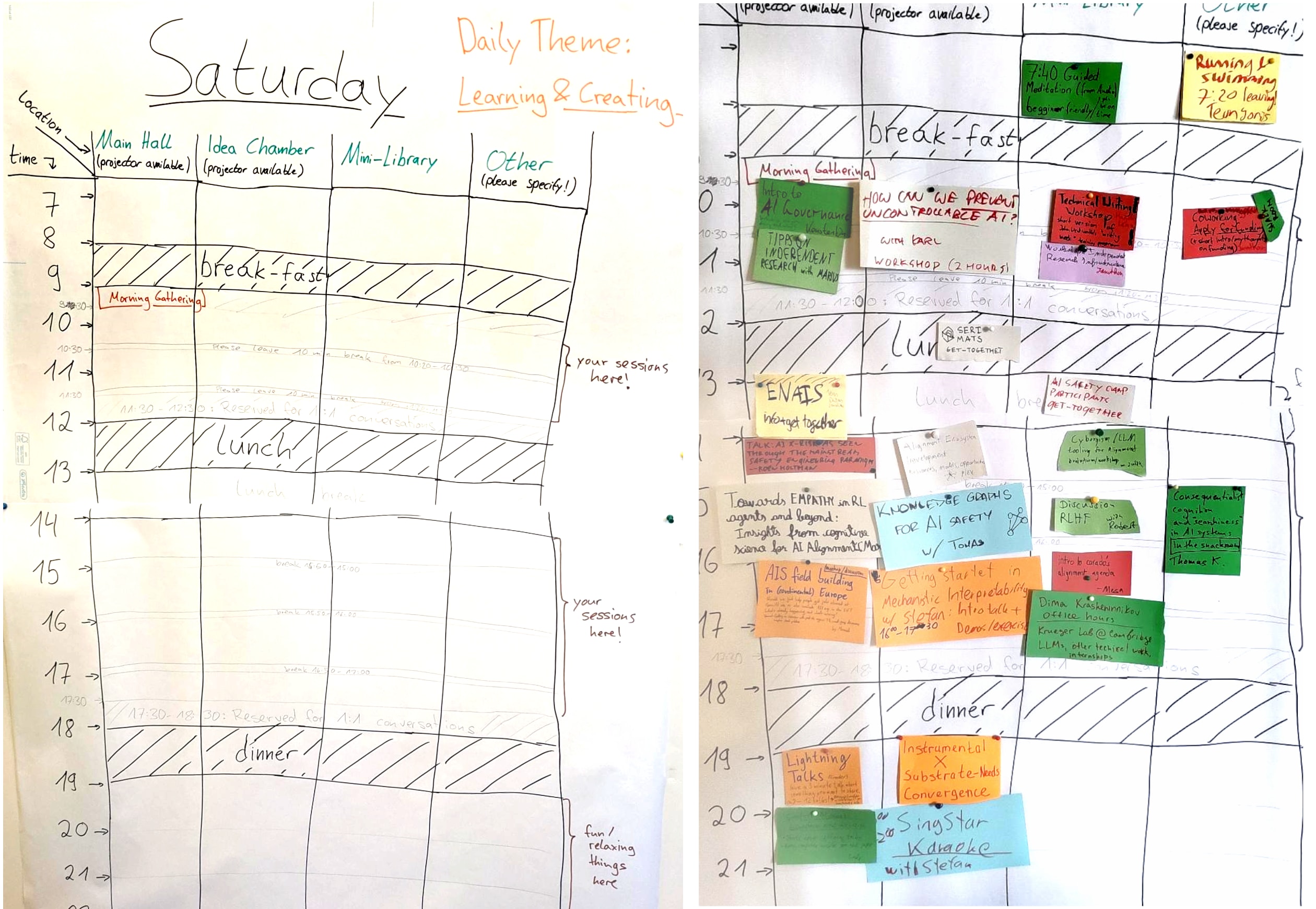

Format

The retreat was an unconference: Participants prepared sessions in advance (presentations, discussions, workshops, ...). At the event, we put up an empty schedule, and participants could add in their sessions at their preferred time and location.

Participants

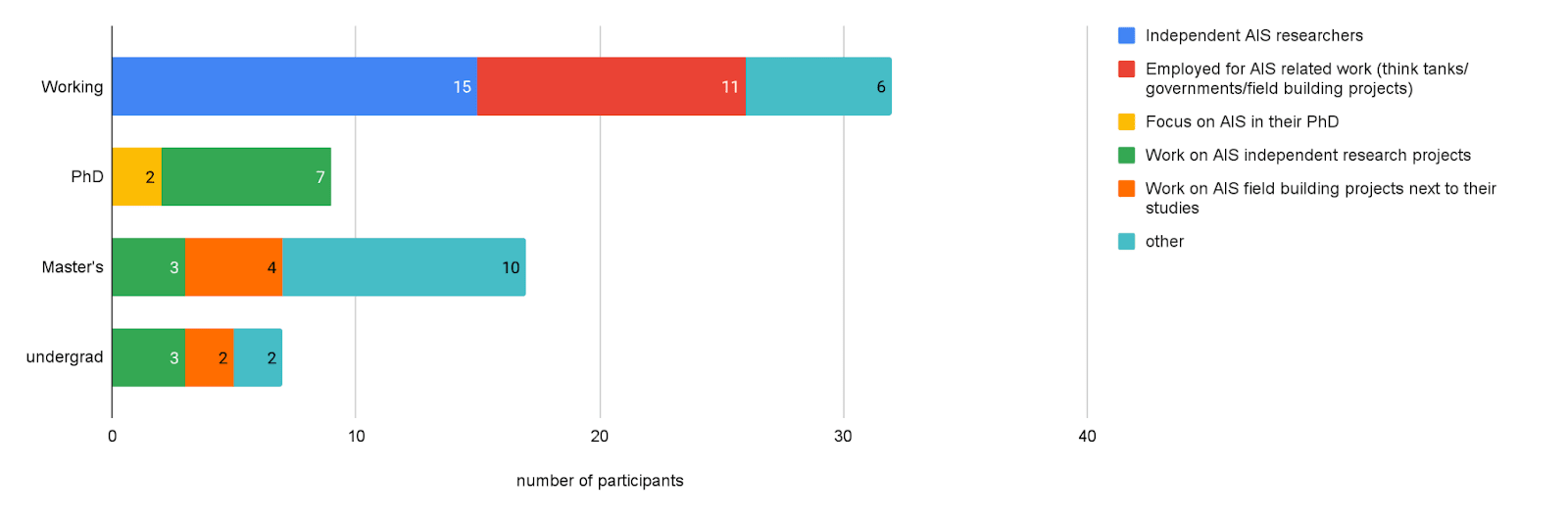

Career Stage

About half of the participants were working, and the other half were students. Everyone was either already working on AI safety or intending to transition into it.

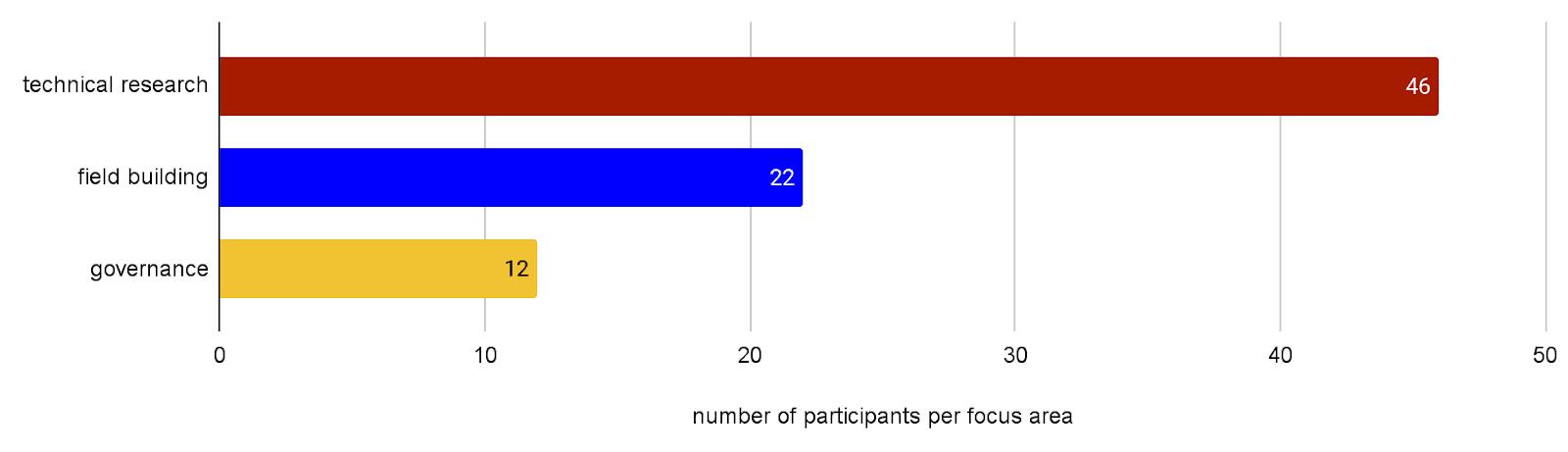

Focus areas

Most participants were focusing on technical research, but there were also many people working on field building and AI governance:

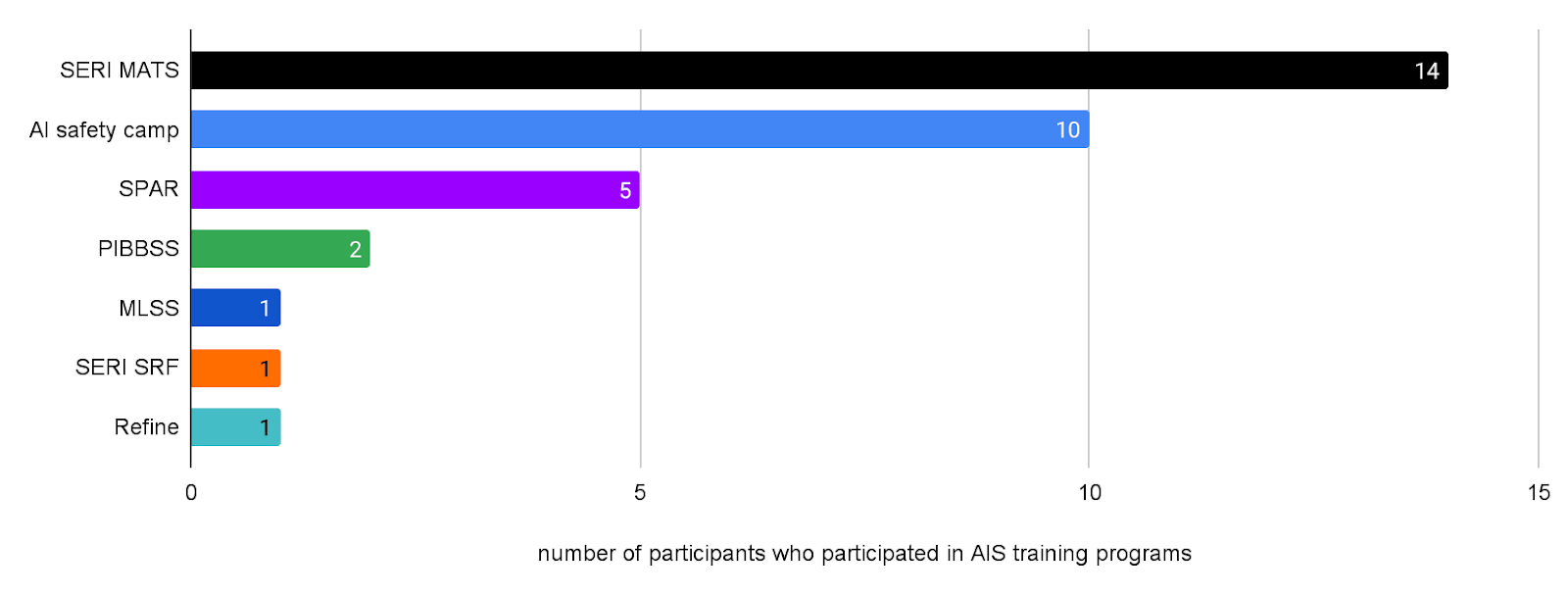

Research Programs

Many participants had previously participated in, or were currently participating in, AI safety research programs (SERI MATS, AI Safety Camp, SPAR, PIBBSS, MLSS, SERI SRF, Refine).

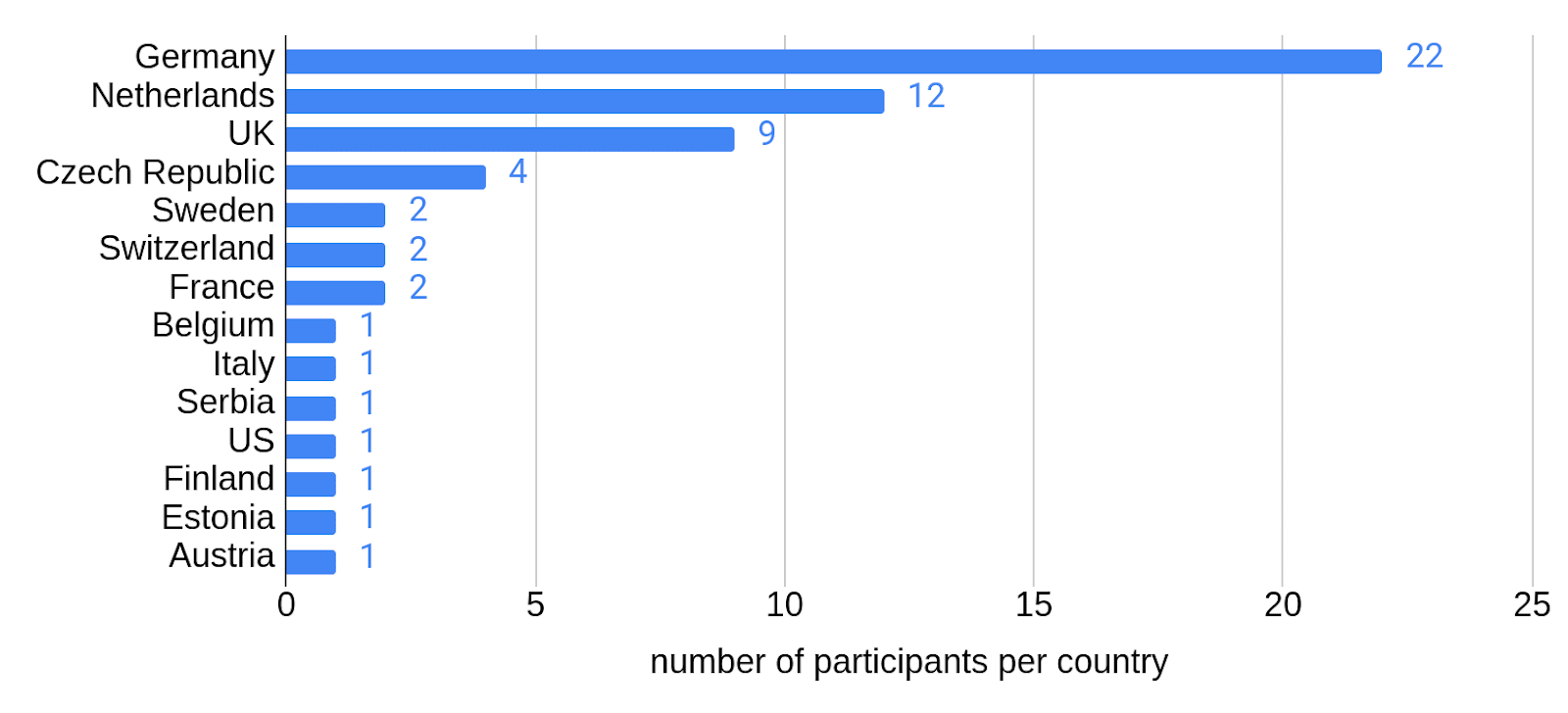

Countries

All but one participant were based in Europe, with most people from Germany, the Netherlands and the UK.

Who was behind the retreat?

The retreat was organized by Carolin Basilowski (now EAGx Berlin team lead) and me, Magdalena Wache (independent technical AI safety researcher and SERI MATS scholar). We got funding from the Long-Term Future Fund.

Takeaways

- I got a feeling of "European AI safety community".

- Unlike in AI safety hubs like the Bay Area, continental Europe’s AI safety crowd is scattered across many locations.

- Before the retreat I already personally knew many people working on AI safety in Europe, but that didn't feel as community-like as it does now.

- Other people noted a similar feeling of community.

- Prioritizing 1:1s was helpful.

- We reserved a few time slots just for 1:1 conversations, and encouraged people to prioritize 1:1s over content-sessions.

- Many people reported in the feedback form that 1:1 conversations were the most valuable part of the retreat for them.

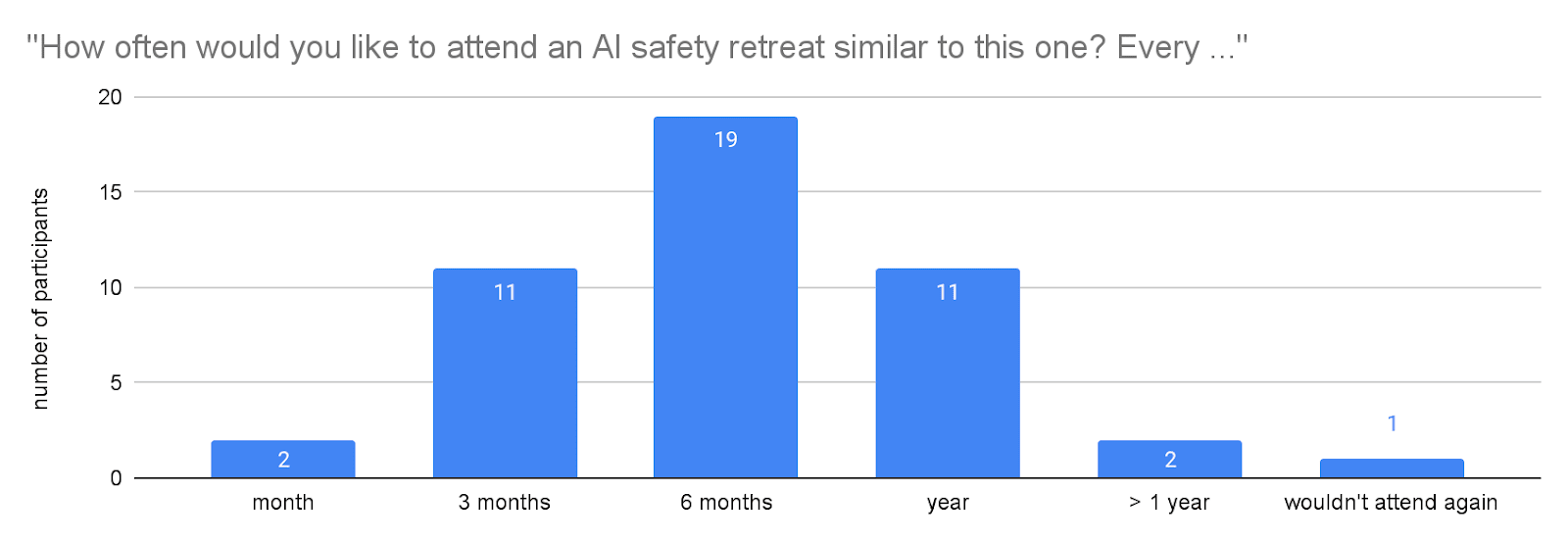

- There is a demand for more retreats like this.

- Almost everyone would like to attend this kind of retreat at least yearly:

- The retreat was relatively low-budget. It was free of charge, and most participants slept in dorm rooms. Next time, I would make the retreat ticketed and pay for a better venue with more single/double rooms.

- I would make a future retreat larger.

- We closed the applications early because we had limited space, and wanted to limit the cost that comes with rejecting people who are actually a good fit. I think we would have had ~50 more qualified applications if we had left them open for longer.

- I would also do targeted outreach to AI safety institutions next time, in order to increase the share of senior people at the retreat.

- I think the participant-driven "unconference" format is great for a few reasons:

- Lots of content: As the work for preparing the content was distributed among many people, there was more content overall, and there could be multiple sessions in parallel.

- For me, being able to choose the topics that interest me most means I take away a lot more relevant content than if there were only one track of content.

- Small groups (usually 10-20 people) made it possible to have very interactive sessions.

- There was no "speaker-participant divide".

- I think this makes an important psychological difference: If there are "speakers" who get the label of "experienced person", other people are a lot more likely to defer to their opinion, and won't speak up if something seems wrong or confusing.

- However, when everyone can contribute to the content, and everyone feels more “on the same level”, it becomes a lot easier to disagree and to ask questions.

I’m very excited about more retreats such as this one happening! If you are interested in organizing one, I am happy to support you - please reach out to me!

If you would like to be notified of further retreats, fill in this 1-minute form.

Update: We did a follow-up survey 4 months after the retreat, asking participants what impact the retreat had on them. Here is a summary of the responses:

(Note that the survey consisted of a free text field, and the categories I made up for summarizing the results are pretty subjective. Also note that some people mentioned that they started projects or applied for things, but would have done so even without the retreat. I did not include those in my count.)

I think these results are really helpful to improve the picture of how such a retreat is valuable for people!

The biggest surprise for me is the number of tangible results (research collaborations, getting into SERI MATS, concrete projects, career changes) that came out of the retreat. In the feedback form that participants filled out right at the end of the retreat, 9 people said that they wanted to start a project as a result of the retreat. I would have guessed that maybe 5 would actually follow through once the initial motivation directly after the retreat had worn off. And I think that would have been a very good result already. Instead, more than 9 people started projects after the retreat!

I still have the intuition that the more vague effects of the retreat (such as feeling part of a community) are really important, potentially more so than the tangible outcomes. And these vague things are probably not measured very well by a survey that asks “What impact did the retreat have on you?”, because it is just easier to remember concrete than vague things.

Overall, these survey results make me even more excited about this kind of AI safety retreat!