Thank you to Jolien Sweere for her thoughtful review and suggestions, and to Tzu Kit Chan and others for their insightful feedback.

Intro

Defining the concept of “economics” can be challenging even for tenured academics in the field. Economics can be the study of collective human behavior, the utilization of human capital, or policy (i.e., endogenous factors). It can also be a study of productivity and efficiency, and therefore a sort of meta-study of technology (i.e., exogenous factors). It can explore how we produce and consume goods and services, and it is deeply integrated with many other social sciences. Within economics lie numerous sub-fields beyond the classical micro and macro, including: Natural Resource Economics, Game Theory, Environmental Economics, Behavioral Economics, Business Economics, Financial Economics, Health Economics, Industrial Economics, International and Trade Economics, Econometrics, Public Economics, and, I now humbly propose: AI Economics.

Writing about current topics in economics is often difficult and controversial. Writing about the anticipated economics of the future under unprecedented, likely transformative technologies, as you may have guessed, is even harder and more controversial. The reasons are many. The facets of economics are deeply intertwined with policy, taxation, and resource allocation decisions that have historically disproportionately benefited some members of society and disadvantaged others. Economic decisions can have significant long-term consequences for wealth distribution, financial inequality, class structure, global power dynamics, trade, business subsidization, technology R&D, and even scientific discovery.

Economists generally tend to compare AI to previous technological developments, pointing to productivity and GDP growth leading to the creation of new industries and new jobs. There are certainly parallels we can apply and learn from, but ultimately this comparison is naive, short-sighted and simply inappropriate for a variety of reasons we’ll explore further.

While free-market and self-regulating solutions may have worked for most of the technologies developed over the last few centuries, advanced AI of many varieties, to say nothing of agentic autonomous AGI, is fundamentally different. There are a multitude of valid reasons to suggest that AI deserves significantly more legal deliberation and moral consideration, but what I'd like to highlight are the fundamentally misaligned incentives in our most commonly used later-stage organizational legal structures (namely incorporation). Public markets are considerably larger than private markets. According to McKinsey researchers, the global value of public equity markets was estimated at $124 trillion for 2021 versus $10 trillion for private markets. One of many problems in the context of AI is that public companies come with various duties to shareholders. Management must operate under explicit and implicit obligations to produce continued returns for (largely passive, disengaged) shareholders. These agendas are sometimes not just incentives but legal fiduciary duties, which are more likely than not to conflict with the incentives that might align advanced AI with humanity’s greater interests.

I am not a traditional research economist. I hold undergraduate degrees in Business Economics and a graduate degree in Business Information Systems. I previously worked with The Wall Street Journal / Dow Jones, but most of my economics expertise comes from my work as an analyst in private equity and half a decade in the quantitative hedge fund world, developing macro models for commodities markets and genetic optimization pipelines. I’m also a technologist and developer with 15 years of full-stack development, machine learning (ML) and data-science experience, beginning with Google in 2007. Despite my economic modeling work over the years, I’ve only recently come to fully appreciate how central economics is, and will be, to AI alignment.

Many within the AI alignment community who are genuinely interested in a future where AI does not go very badly seem to be focusing their attention and resources on technical, conceptual, policy, control and other forms of alignment research that presume AI could become unaligned with humanity. I want to be overwhelmingly clear that I think research in these fields is important and worthwhile. That said, we are failing to recognize just how much more probable and consequential economic misalignment could be, not just in terms of dollars, but in terms of human quality of life, suffering and death.

Despite a greater expected net impact, we are failing to appreciate the very bad scenarios because they are not as acutely dramatic as the catastrophic and existential scenarios so frequently discussed.

Summary

A few months ago I found myself reading yet another journal article on potential future job displacement due to AI. The article's specifics and conclusion were hardly novel. It suggested something along the lines of 80% of jobs being at risk over the next decade, but not to worry: 60% would be offset by productivity gains leading to new job creation and GDP growth. What began to bother me (beyond the implied 20% net increase in real unemployment) is the question of why AI safety and alignment researchers do not more seriously consider significant AI-related declines in labor participation a viable threat vector, worthy of inclusion in the classic short list of AI alignment risks. After all, a healthy economy with a strong, educated middle class would almost certainly be better positioned to mitigate AI risks for numerous reasons.

Now, to be fair, some researchers and economists certainly have thought about the economic issues surrounding AI, and they may (or may not) concur with much of the following. AI economic alignment is a complex, interconnected and international issue with monumental political and legislative challenges. That said, the related economic conversation occupies a very small fraction of the aggregate AI safety and alignment attention span, with only a small number of quality posts per year on forums such as LessWrong. I believe this is not good.

It is not good for many reasons, both direct and indirect, not least because if any advanced AI, let alone AGI or SI, goes bad at some point in the future (and I think it eventually will), we will need functional federal institutions to mount an appropriate response. If the U.S. economy and its parent institutions are in disarray due to rapid and substantial declines in labor participation, collapsing wages, extreme inequality and potential civil unrest, those institutions capable of intervening simply may no longer be effective.

Needless to say, there are a number of other circumstances beyond labor participation that could erode or inhibit an effective federal response to AI, including but certainly not limited to: extreme concentration of power and wealth, political division, corruption and lobbying, hot or cold wars, targeted disinformation and so on. Nevertheless, it seems incontrovertible that any AI takeoff, regardless of speed, would be much worse during an economic depression than during a period of political stability and economic health. The sections below explain why I think economic dysfunction is likely, and actually a greater immediate threat than catastrophic or existential risks.

Hypothesis

I suspect most researchers would likely agree with the statement that when it comes to AI over the next couple decades:

p(mass job displacement) > p(major acute catastrophe) > p(extinction-level doom).

This hierarchical comparison isn’t completely presumptuous, for a few reasons: 1) the more serious risk (p(doom)) largely entails the less serious one (p(catastrophe)), since an extinction-level event would almost certainly involve a major catastrophe along the way, 2) statistically, we might experience a technological economic depression and mass unemployment regardless of AI, and 3) current trends indicate that labor participation is already in long-term decline due to job displacement, suggesting that mass displacement could actually be more probable than not.

My personal estimate is that the probability of substantial and sustained (i.e., ~20%+) real unemployment as a result of advanced AI over the next 20 years is roughly 30% to 50%, roughly an order of magnitude higher than the probability of an acute unprecedented major catastrophe (i.e., AI-related hot conflict resulting in hundreds of thousands or millions of deaths), the probability of which is also roughly an order of magnitude higher than an existential extinction-level event.
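To make the chain of estimates concrete, here is a small arithmetic sketch. It assumes that “roughly an order of magnitude” means a factor of exactly 10, which is a simplification; the 30% to 50% range is the estimate stated above. Under that assumption the chain implies roughly 3% to 5% for a major catastrophe and 0.3% to 0.5% for an extinction-level event over the same 20 years.

```python
# Sketch: implied probabilities from the "order of magnitude" chain above.
# Assumption: "roughly an order of magnitude" is treated as a factor of exactly 10.
ORDER_OF_MAGNITUDE = 10


def implied_chain(p_unemployment):
    """Return (p_unemployment, p_catastrophe, p_extinction) under the factor-of-10 assumption."""
    p_catastrophe = p_unemployment / ORDER_OF_MAGNITUDE
    p_extinction = p_catastrophe / ORDER_OF_MAGNITUDE
    return p_unemployment, p_catastrophe, p_extinction


# Low and high ends of the stated 30% to 50% estimate.
for p in (0.30, 0.50):
    u, c, x = implied_chain(p)
    print(f"unemployment {u:.1%}, catastrophe {c:.1%}, extinction {x:.2%}")
```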

In short, my argument distills down to three key points:

1) AI will increase productivity, result in job displacement and lower labor participation*

2) Sustained significant unemployment and/or general economic dysfunction will likely:

2a) Contribute to political and economic instability when stability would be needed most

2b) Weaken institutional capacity to respond to a severe AI threat when needed most

2c) Reduce funding for AI alignment, social programs and aid when needed most

2d) Potentially allow for massive corporate power consolidation and extreme inequality

2e) Result in millions of deaths, more than most feasible AI catastrophes or wars.

3) Thus, AI labor economics deserves as much attention as catastrophic risk and ex-risk in the public AI alignment discussion.

* Even if job losses are eventually offset by new job creation, labor market shocks could persist for many years.

Evidence

The latest UN DESA data show that, by 2050, our ‘population is expected to reach 9.8 billion people, over 6 billion of whom will be of working age,’ and we are currently struggling to create meaningful employment for the more than 71 million young unemployed people worldwide.

A 2024 report by the International Monetary Fund (IMF) estimated that 40% of global employment is at risk due to AI. In advanced economies, roughly 60% of jobs will be impacted by AI, with roughly half of those (30% of the total) likely to be mostly replaced, resulting in displacement, dramatically lower labor demand and lower wages. In emerging markets and low-income countries, by contrast, AI exposure is expected to be 40 percent and 26 percent, respectively.

The UN this year recognized in its own internal research that 80% of jobs will be at risk of being automated in the next few decades.

A large number of researchers, academics and industry analysts agree that we will likely experience significant job displacement, labor market disruptions and at least modest increases in unemployment as a result of AI technologies. The list of similar corroborating research organizations is long and includes the IMF, WHO, Pew Research Center, Harvard Business Review, World Economic Forum, the U.S. Bureau of Labor Statistics and at least a dozen others. The consensus on the duration of these disruptions and on the impact of productivity gains and new job creation is a lot less clear and very difficult to model, but obviously critical to understanding the net aggregate long-term impact.

In short, a majority of researchers in the field suggest AI is more likely than not to create significant job displacement and increased unemployment for at least a period of several years.

We have highly conclusive evidence that the direct and indirect death toll from prolonged increases in unemployment is substantial, with every 1% increase in unemployment resulting in a 2% increase in mortality (Voss 2004, Brenner 2016, Brenner 1976). We know that sustained unemployment (vs. short-term swings like those during the COVID-19 pandemic) amplifies this increase in mortality.

Please understand that I am absolutely not suggesting that various ex-risks are implausible in the longer term or that catastrophic risks are implausible within decades. Quite the contrary. I believe we do need significantly more progress in AI technical alignment, as well as industry regulation of autonomous agentic system inference and microbiology engineering services: for example, improved custody and KYC requirements for private-sector genomic service providers and CROs that could contribute to pathogenic and chemical risks.

My overarching point is that current evidence suggests the greatest expected AI risk to humanity *within the next few decades* comes not from agentic, power-seeking, unaligned AGI, but rather from the death and suffering resulting from significant economic disruptions, particularly in developed labor markets.

These risk profiles will shift over the longer term, but so will uncertainty. Ultimately, time provides the opportunity to innovate solutions and creative risk mitigations with novel technologies. Some may argue that if AI extinction-level threats are only a matter of time, even on a 100-year time horizon, they still deserve a prominent place on the alignment agenda. I do not disagree with this. However, it would seem rational that a 50% chance of 50 million deaths in the near term is at least as deserving of our attention as a 0.5% chance of 5 billion deaths in the longer term.
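The comparison in the last sentence rests on expected values (probability times magnitude). A minimal check, using exact fractions to avoid floating-point rounding, shows that the two scenarios carry the same expected toll of 25 million deaths. Any time discounting, or extra weight for near-term certainty, is left out here and would be an additional assumption.

```python
from fractions import Fraction


def expected_deaths(probability, deaths):
    """Expected deaths = probability x magnitude, computed with exact rational arithmetic."""
    return Fraction(probability) * deaths


near_term = expected_deaths(Fraction(1, 2), 50_000_000)        # 50% chance of 50 million deaths
long_term = expected_deaths(Fraction(5, 1000), 5_000_000_000)  # 0.5% chance of 5 billion deaths

print(near_term, long_term)  # both are 25000000
```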

Unemployment and Mortality

In a 1979 hearing before the Joint Economic Committee of the U.S. Congress, Dr. Harvey Brenner spoke on his research suggesting that a 1% increase in unemployment resulted in a 2% increase in total U.S. mortality. Over the following decades, Brenner’s research held mostly consistent, showing that for every 1% rise in unemployment, at least 40,000 additional U.S. deaths occurred annually over the following 2 to 5 years. The deaths were largely attributed to various forms of stress, anxiety, and depression, including suicide, homicide, substance abuse, heart attack and stroke; unemployment was also associated with rises in incarceration and other harms.

This research started in the 1970s, and several subsequent peer-reviewed research programs largely corroborated his findings. Several datasets suggest that the increase in mortality due to unemployment has probably grown since. Notably, these mortality numbers increase substantially as the unemployment rate rises and as elevated unemployment persists.

We know fairly conclusively from multiple longitudinal research programs that increases in unemployment result in disproportionate increases in mortality rates and other associated human suffering. The specific estimates do range depending on the studies but generally fall within the 37,000 to 48,000 additional deaths for each 1% of additional unemployment within the United States alone. As a relative proportion, that is roughly a 1.9 to 2.2% increase in mortality for each 1% increase in unemployment.
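As a rough consistency check on these figures, dividing each absolute estimate by its relative one gives the baseline annual U.S. death count the two numbers jointly imply. The sketch pairs the low end with the low end and the high end with the high end, which is an assumption about how the ranges line up.

```python
# Sketch: baseline annual U.S. deaths implied by pairing the absolute and relative
# estimates quoted above. Assumption: the low ends (37,000 and 1.9%) belong together,
# as do the high ends (48,000 and 2.2%).
def implied_baseline(extra_deaths, relative_increase):
    """If extra_deaths equals relative_increase times the baseline, solve for the baseline."""
    return extra_deaths / relative_increase


for extra_deaths, relative_increase in [(37_000, 0.019), (48_000, 0.022)]:
    baseline = implied_baseline(extra_deaths, relative_increase)
    print(f"{extra_deaths:,} deaths at +{relative_increase:.1%} implies "
          f"a baseline of about {baseline / 1e6:.2f} million deaths per year")
```

Both pairs imply a baseline on the order of 2 million deaths per year, the same order of magnitude as total annual U.S. mortality over the period these studies cover, so the absolute and relative figures are consistent with each other.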

Importantly, these observed effects can be thought of as exponential multi-year moving averages, thus quickly compounded by prolonged periods of high unemployment. Some reasonably conservative models project that if real unemployment rates were to exceed 20% for more than one year, death tolls from heart attack, stroke, suicide, overdose, and related causes would likely exceed a million deaths annually within a year. Increases in rates of psychiatric admissions and incarceration would be even higher.
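One way to read “exponential multi-year moving averages” is as an exponentially weighted moving average (EWMA) of past excess unemployment. The toy sketch below uses that reading to compare a one-year spike with a sustained shock of the same height. The smoothing weight `ALPHA` and both shock paths are hypothetical; the 40,000 deaths per point is the rough figure cited above, treating each “1%” as one percentage point. This is an illustration of the idea, not a reproduction of Brenner’s models.

```python
# Toy sketch: annual excess deaths respond to an EWMA of past excess unemployment.
# Assumptions (hypothetical, for illustration only):
#   - ALPHA, the weight on the current year, is 0.4
#   - each percentage point of smoothed excess unemployment adds 40,000 deaths per year
DEATHS_PER_POINT = 40_000
ALPHA = 0.4


def ewma(values, alpha=ALPHA):
    """s_t = alpha * x_t + (1 - alpha) * s_{t-1}, starting from the first value."""
    smoothed, s = [], values[0]
    for x in values:
        s = alpha * x + (1 - alpha) * s
        smoothed.append(s)
    return smoothed


def excess_deaths(excess_unemployment_points):
    """Annual excess deaths implied by a path of excess unemployment (percentage points)."""
    return [DEATHS_PER_POINT * s for s in ewma(excess_unemployment_points)]


one_year_shock = [0, 10, 0, 0, 0, 0]       # 10-point spike lasting one year
sustained_shock = [0, 10, 10, 10, 10, 10]  # same spike lasting five years

for name, path in [("one-year", one_year_shock), ("sustained", sustained_shock)]:
    deaths = excess_deaths(path)
    print(f"{name}: annual {[round(d) for d in deaths]}, total {round(sum(deaths)):,}")
```

Under these assumptions the one-year spike’s effect decays over the following years, while the sustained shock’s annual toll ramps up toward the full 400,000 per year, for a cumulative total nearly four times larger. A simple EWMA adds effects linearly, so it captures why persistence matters but not any compounding beyond that.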

Brenner’s research found that several variables increase the ratio of mortality to unemployment (countries with high rates of these factors fared worse). Not surprisingly, they are factors normally inversely associated with longevity and general health. The first is smoking prevalence and alcohol consumption. According to worldpopulationreview.com, the US is relatively average here, with a 24.3% total smoking rate in 2022 (and dropping fast), lower than Latin America and Europe. The second is high BMI and diets relatively high in saturated fat; conversely, populations with diets high in fruit and vegetables were affected less. The biggest risk according to Brenner was BMI (i.e., a major risk factor for cardiovascular disease and diabetes). As of 2022, the U.S. has a 42.7% obesity rate, much higher than average.

There are many areas of AI research that clearly lack consensus. Wherever you may fall on the p(doom) spectrum, I think we will likely agree that the economic impact will be substantial. That is not to say that AGI will usher in an era of utopian UBI, but rather that our economic paradigm will be shocked, probably in more ways than one. This will be true whether or not agentic AGI decides to redefine its alignment, and whether or not any sort of superintelligence comes to fruition at all.

20% is not an unrealistic or exaggerated estimate of a worst-case unemployment rate. It is entirely plausible that it could be higher without swift federal intervention. Ultimately, in a scenario of >30% unemployment globally lasting more than a few years, the research cited above suggests that total mortality could reach upwards of one hundred million globally, rivaling the deadliest pandemics and geopolitical conflicts in history.

Why it Could be Worse

In a bad to worst-case scenario, many factors could further amplify this unemployment-to-mortality ratio, such that sustained substantial unemployment beyond a few years could potentially result in more than 100 million deaths in the developed world over just a few decades, and even more globally (although less developed countries will likely be less affected by technological automation). 100 million lives is certainly a big number, but to put it in perspective, research suggests that international aid from developed countries to the developing world has helped save nearly 700 million lives in the past 25 years (Reuters, 2018). Just a few of the factors that would likely compound the problem include various governments’ misrepresentation and understatement of actual unemployment metrics; generally increased anxiety and depression rates, particularly in the demographics most likely to be affected by AI-related unemployment; and, most of all, the compounding effect of prolonged periods of high unemployment. Let’s take a closer look at a few of these:

First is the significant under-reporting of unemployment in the U.S. and potentially other countries. Unemployment is the intuitive default nomenclature when speaking about those who don’t have jobs. However, the term ‘unemployment’ is misleading because officially it only includes people who are jobless, available for work and have actively looked for work in the past four weeks. When you hear a news report that suggests unemployment rates are at 5%, that does not include people who are underemployed or working short-term contracts, or those who have left the workforce and given up on finding new work. It does not include the PhD working for minimum wage in a job far beneath their ability. It does not include those forced into early retirement or those who can’t work due to disability. The incentives for governments to under-report actual unemployment are severalfold but boil down to politics and self-fulfilling economic prophecy. In other words, suggesting the economy is doing great puts some decision makers at ease who might otherwise make decisions that further exacerbate the economic problem.
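To illustrate how much the headline definition can leave out, the sketch below contrasts the official U.S. headline rate (BLS U-3: unemployed as a share of the labor force) with the broadest BLS measure, U-6, which also counts marginally attached workers (including discouraged workers) and people working part time for economic reasons. The population counts are made-up round numbers for illustration, not actual BLS data.

```python
from dataclasses import dataclass


@dataclass
class LaborCounts:
    """Hypothetical counts in millions (illustrative only, not real BLS data)."""
    employed: float                    # all employed people, including part-time workers
    unemployed: float                  # jobless, available, and looked for work in the past 4 weeks
    marginally_attached: float         # want work and looked in the past 12 months, but not recently
    part_time_economic_reasons: float  # subset of employed who want full-time work


def u3(c: LaborCounts) -> float:
    """Headline rate: unemployed / labor force."""
    return c.unemployed / (c.employed + c.unemployed)


def u6(c: LaborCounts) -> float:
    """(unemployed + marginally attached + involuntary part-time) / (labor force + marginally attached)."""
    labor_force = c.employed + c.unemployed
    numerator = c.unemployed + c.marginally_attached + c.part_time_economic_reasons
    return numerator / (labor_force + c.marginally_attached)


example = LaborCounts(employed=160, unemployed=8, marginally_attached=2, part_time_economic_reasons=6)
print(f"U-3: {u3(example):.1%}  U-6: {u6(example):.1%}")
```

With these illustrative counts the broader measure is roughly double the headline rate. Even U-6 omits people who left the labor force without looking for work in the past year, as well as the PhD working a full-time minimum-wage job, which is why broader measures of labor participation are worth watching.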

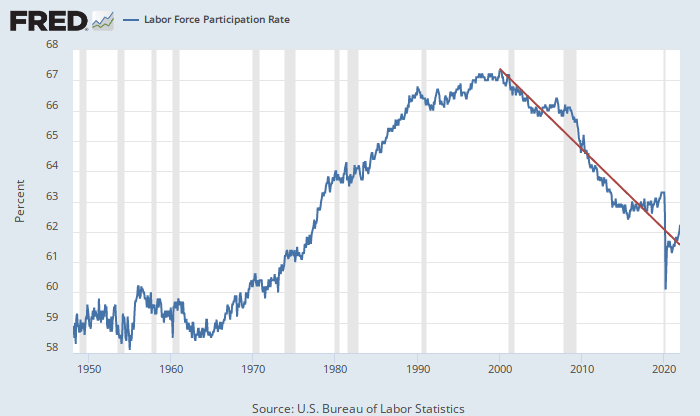

Regardless, what we should be more interested in is the Labor Participation Rate (or, more to the point, how many people of working age are actually working full-time jobs, preferably above the poverty line). As discussed, unemployment numbers are full of problems and seriously misrepresent the actual number of unemployed people. While I have used the terms somewhat interchangeably thus far, I want to acknowledge that there is a distinct difference. Those who will be negatively affected by automation, however, will not care whether their governments define them as unemployed, underemployed or non-participating labor. What matters to them is whether those who want to work can actually find work in minimally appropriate jobs.

Second, studies on the relationship between unemployment and mortality suggest that a large proportion of that mortality is ultimately due to stress, anxiety and other unhealthy psychological consequences of financial hardship. The problem: the generational demographics most likely to be affected by AI already suffer from markedly higher rates of anxiety and depression. In a recent 2023 cross-sectional study of about 3 million US adults, “anxiety and depression were significantly higher among adults aged 18 to 39 years (40% and 33%, respectively) compared with adults aged 40 years and older (31% and 24%, respectively). Greater economic precarity and greater reactivity to changing case counts among younger adults were associated with this age disparity.” (Villaume, 2023)

Lastly, we will be fighting an uphill battle: numerous longitudinal studies show a consistently declining labor participation rate since the early 2000s. What’s even more troubling is that this is true even in light of the increased labor participation of women in the latter half of the 20th century. Significantly more research is needed to better understand our already declining labor participation rates.

Counterpoints

I’d like to begin with several disclaimers. Firstly, far more evidence exists to support point 2 (mass unemployment results in increased mortality) than point 1 (advanced AI will result in mass unemployment). The obvious reason for this is that advanced AI does not yet exist, so observational evidence does not exist either. We must extrapolate what we know about economics, automation, business incentives and digital delivery to a broad range of cognitive labor tasks and job functions in various socioeconomic cultures with differing regulatory attitudes. The hypothesis that advanced AI will result in increased unemployment in developed countries seems highly likely but deserves much further research because we simply do not know how many new jobs will be created.

There is a large degree of uncertainty here due to known unknowns and unknown unknowns. There may be reasons that AI stimulates job creation that actually outweighs job losses. It would seem probable that if said job creation did occur, it would be technologically (e.g., computer-science) oriented. These programming, data science, and engineering functions require skill sets and education that the majority of displaced workers do not have and would require many years to develop, if they are realistically capable of learning them at all.

If, for some inexplicable reason, progress in AI research plateaued such that human-AI collaboration produced better work than either humans or AIs alone, it is possible that significant and diverse job creation could occur. Such a situation seems plausible for a short period of time but unlikely to persist for more than a few years, given the rapid pace and momentum of AI research.

It is conceivable that AI positively stimulates net labor markets, consequently lowering unemployment levels through increased production efficiency. In this scenario, the expansion of production will create more jobs and employment opportunities in the long term; however, commensurate consumption of (demand for) those goods and services must also exist. It seems unlikely that AI systems would be granted any sort of legal status allowing them to purchase and consume goods within the next few decades, and so we must investigate where that additional demand would come from, and for what goods, especially if real wages continue to decline.

The prospect of some agentic AGI systems attaining legal and civil rights at some point in the far future is feasible but this remains highly speculative and we must also admit there would be many reasons to think that they might prefer to exist within digital environments where they are primarily consumers of energy and compute resources rather than physical consumer goods and services.

It may also be possible that advanced AI quickly leads to groundbreaking discoveries in sectors such as energy, health and longevity, propulsion or other space technologies. These developments could serve as a catalyst for entirely new industries and scientific research areas, as they would open the door to commercialization of novel business models and infrastructure such as asteroid mining, interplanetary discovery and biomedical sciences. Revolutionizing energy distribution through experimental technologies like cost-effective nuclear fusion could create hundreds of thousands or even millions of jobs globally as we build new energy infrastructure. Nevertheless, a few million jobs is still a drop in the bucket compared to the 1+ billion jobs potentially at stake.

The last counterpoint I’ll touch on is healthcare, specifically elderly and chronic disease care. Roughly half of our $4.5 trillion in U.S. healthcare spending goes to retirees and the elderly, with chronic diseases, heart disease and cancers disproportionately represented. Caring for our elderly currently consumes vast economic resources, roughly 55% of our total healthcare spending. AI could conceivably create significant value in the care, treatment and management of at-risk patient populations.

Suggestions, Solutions and Hope

Unemployment is significantly understated. We must reframe and redefine unemployment to include everyone who is not working and has not voluntarily retired.

Brenner found that Southern European countries experienced a lower incidence of unemployment-related mortality than Northern Europe. He did not cite specific factors behind this finding, but given the social, dietary and environmental differences, it would seem reasonable to attribute some of it to healthier Mediterranean diets, warmer winters and more closely knit family ties, which offer greater “social cohesion” in southern countries.

I do not have all the economic policy answers, but it is my tentative personal opinion that there is no precedent to suggest blanket technological bans or censorship will effectively regulate digital technologies. What does seem reasonable and perhaps necessary is:

- The effective tax rate on labor in the United States is roughly 25 to 33 percent. The effective tax rate on capital is roughly 5 to 10 percent for most businesses. While there may be some variability, it is clear that so long as substantial tax advantages exist for enterprise, companies will be incentivized to replace and automate workers rather than invest in them. Equalizing these discrepancies may better align incentives.

- I believe that researchers should investigate novel tax structures for large AI product and service-oriented organizations. Further, these tax structures should effectively address the tax strategies exploited by tech companies like Amazon that inflate expenses to avoid corporate income taxes. It is a challenging loophole, but evidence suggests this practice can lead to anti-competitive monopolization of large markets.

- Just as importantly, we must ensure that tax revenue collected from AI companies is not misappropriated and is instead used to benefit displaced workers. We should advocate for full corporate and government transparency in AI-related activity so that proceeds fund functional, innovative social programs that benefit everyone (e.g., perhaps human coaching, counseling and personal development programs).

- We should incentivize hiring (especially in smaller businesses) by making it easier for businesses to hire, and perhaps incentivize co-operative organizational models.

- We should support local manufacturing, industry and agriculture and of course human-first service products when possible. Vote with your wallet.

- Carefully crafted UBI programs may or may not be part of the solution. I think we need more pilots and research to evaluate their potential, but regardless, it’s important that they encourage (or at least do not discourage) meaningful occupation and social engagement.

Conclusions:

There’s an old military quote: “Amateurs study tactics, professionals study logistics.” Some have attributed it to Napoleon; most sources suggest it was said by General Omar Bradley during World War II. Regardless of authorship, and despite its somewhat facile oversimplification, there’s an element of truth to the statement that can’t be ignored. Tactics may win battles, which are needed to win wars, but ultimately logistics and economics win protracted wars, whether they’re hot or cold. This is a key part of the overarching message I’d like to end on: we should more deliberately expand our view of AI alignment beyond the technical tactics and policy battles to think harder about the bigger-picture economic ‘logistics’ that may be far more important in incentivizing aligned behavior in the long term.

We cannot discount the plausibility of an AI-assisted catastrophe resulting in hundreds of thousands, even millions, of deaths. We cannot deny there is a non-zero chance agentic AI may become unaligned with or even hostile towards humanity. On a long enough time horizon, these things are more than merely plausible.

The difference here is that cognitive labor automation, protracted mass unemployment and job displacement in the coming decades are not merely plausible or possible but actually probable, and this is not a controversial fringe position. It is a matter of how and when, not if, and I’d argue this snowball has already started rolling.

When this rapid acceleration of automation happens, not only might many economies fail under their own (aging demographic) weight, but a lack of meaningful employment will almost certainly result in the death and suffering of many millions of people worldwide.

A problem well-stated is halfway solved. This document is hardly a comprehensive well-crafted problem statement but it is my starting point and if you’re interested, please reach out because it’s an area worthy of our time and attention.

Needed Areas of Future Research

- Analysis and segmentation of all top job functions within each industry and their loss exposure

- Evaluation of novel organizational models more appropriate to deliver advanced AI agents.

- Wage trend analysis and projections for all jobs under a highly-automated economy.

- Economic modeling and evaluation of concentrations of power in AI

- Incremental Impact of Humanoid Robotics on broader GDP growth and specific industries.

Select Sources

- https://unthsc-ir.tdl.org/server/api/core/bitstreams/27cf9d31-0e90-424b-9f56-4d1a5047e049/content

- https://worldpopulationreview.com/country-rankings/smoking-rates-by-country

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10690464/

Disclaimers / Acknowledgements:

This is an informal overview and not a rigorous academic research study. I concede that I have focused primarily on the U.S. economy and federal institutions in this paper due to a lack of bandwidth to do the requisite research on EMEA and APAC regions and their constituent economies, which will surely be relevant to the bigger AI-risk landscape. According to PwC, “The U.S. and China, already the world’s two largest economies, are likely to experience the greatest economic gains from AI,” and together, China and North America will account for about 70% of the global economic impact of AI by 2030. However, the impact of automating cognitive labor in the decades following 2030 will surely be far greater, and it is the focal point of this paper. It is also presently the case that, by most metrics, the U.S. private and public sectors are arguably leading AI research and infrastructure development. Despite AI R&D consolidation in the UK, France and China, it seems likely that the U.S. position of dominance in AI will continue into the foreseeable near term. We should not take this for granted beyond 2040.

Executive summary: Labor participation decline due to AI automation is a high-priority alignment risk that could lead to widespread unemployment, economic instability, and millions of deaths, deserving as much attention as catastrophic and existential AI risks.

Key points:

This comment was auto-generated by the EA Forum Team.

@alx, thank you for addressing this crucial topic. I totally agree that the macro risks[1] of aligned AI are often overlooked compared to the risks of misaligned AI (though both are crucial). My impression is that the EA community is focused significantly more on the risk of misaligned AI. Consider, however, that Metaculus estimates the odds of misaligned AI ("Paperclipalypse") at only about half those of an "AI-Dystopia."

The future for labor is indeed one of the bigger macro risks, but it's also one of the more tangible ones, making it arguably less neglected in terms of research. For example, your first call to action is to prioritize "Analysis and segmentation of all top job functions within each industry and their loss exposure." This work has largely been done already, insofar as government primary-source data exists in the US and EU. I personally led research in quantifying labor exposures,[2] and I'll readily admit this work is largely duplicative of what many others have already done. I'll also be frank that such point forecasts are inherently wild guesstimates, given huge unknowns around technological capabilities, complementary innovations, and downstream implementation.

A couple suggestions for macro discussions:

These critiques aside, this post is great and again I totally agree with your core point.

There are other AI-related macro risks that extend beyond—and might exceed—employment. I'll share a post soon with my thoughts on those. For now, I'll just say: As we approach this brave new world, we should be preparing not only by trying to find all the answers, but also by building better systems to be able to find answers in the future, and to be more resilient, reactive, and coordinated as a global society. Perhaps this is what you meant by "innovate solutions and creative risk mitigations with novel technologies"? If so, then you and I are of the same mind.

[1] By "macro," I mean everything from macroeconomic (including labor) to political, geopolitical, and societal implications.

[2] Michael Albrecht and Stephanie Aliaga, “The Transformative Power of Generative AI,” J.P. Morgan Asset Management, September 30, 2023. I co-authored this high-level "primer" covering various macro implications of AI. It covers a lot of bases, so feel free to skip around if you're already very familiar with some of the content.

I have reservations about your specific mortality rate analysis, but I'll save that discussion for another time. I do appreciate and agree with your broader perspective.

Thanks for the thoughtful feedback, and for being so candid that many economic research forecasts are often 'wild guesstimates.' Couldn't agree more. That said, it seems to me that with additional independent, high-quality research in areas like this, we could arrive at more accurate collective meta-forecasts.

I suspect some researchers ignore the fact that just because something can be automated (i.e., has high potential exposure) doesn't mean it will be. I suspect (as Bostrom points out in Deep Utopia) that many jobs will be either legally shielded or socially insulated due to human preferences (e.g., a masseuse, judge, child-care provider, musician, or bartender). All of these roles are highly automatable, but most people prefer to have a human do them for various reasons.

Regarding the probability of paperclips vs. economic dystopia (assuming paperclips here are a metaphor or stand-in for actual, realistic AI threats, since I don't think anyone takes the paperclip optimizer literally), it depends entirely on the timeline. This is why I repeatedly qualified that I'm referring to the next 20 years. I do think that catastrophic risks increase significantly as AI increasingly permeates supply chains, the military, transportation, and other critical infrastructure.

I'd be curious to hear more about what research you're doing now. Will reach out privately.