I seem to remember an EA Forum post (or maybe a personal blog post) basically formalizing the idea (which is in Holden's original worldview diversification post) that with declining marginal returns and enough uncertainty, you will end up maximizing true returns with some diversification. (To be clear, I don't think this would apply much across many orders of magnitude.) However, I am struggling to find the post. Does anyone remember what I might be thinking of?

4 Answers

I was thinking about this recently too, and vaguely remember it being discussed somewhere and would appreciate a link myself.

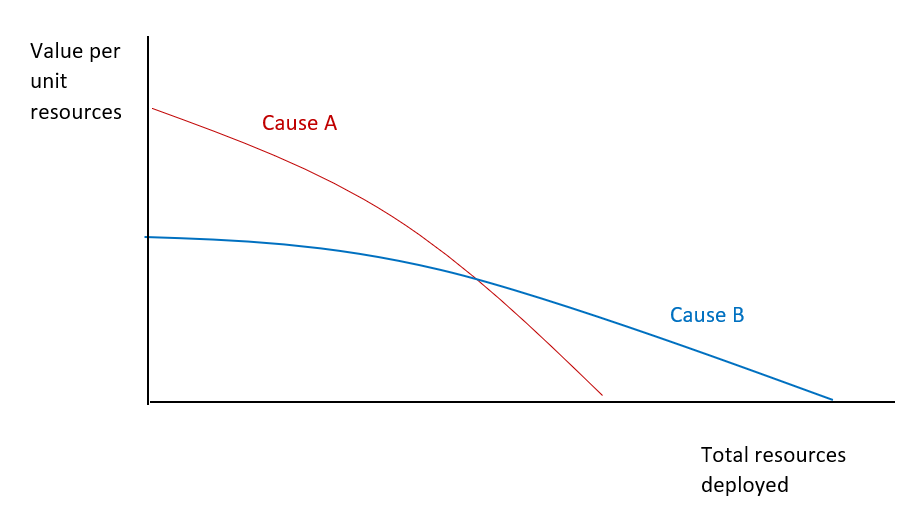

To answer the question, here's a rationale for diversification, illustrated in the picture I just whipped up.

Imagine you have two causes where you believe their cost-effectiveness trajectories cross at some point. Cause A does more good per unit resources than cause B at the start but hits diminishing marginal returns faster than B. Suppose you have enough resources to get to the crossover point. What do you do? Well, you fund A up to that point, then switch to B. Hey presto, you're doing the most good by diversifying.
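The crossover reasoning above can be sketched numerically. This is only an illustration under made-up assumptions: the two marginal-return curves `marginal_a` and `marginal_b`, their parameters, and the step size are all hypothetical, chosen so that A starts out better but declines faster. The sketch spends the budget in small increments, always on whichever cause currently has the higher marginal return.

```python
# Hypothetical marginal-return curves (good done per extra unit of resources).
# A starts higher but hits diminishing returns faster than B.
def marginal_a(x):
    return 10.0 / (1.0 + x)


def marginal_b(x):
    return 4.0 / (1.0 + 0.1 * x)


def greedy_allocate(budget, step=0.01):
    """Spend the budget in small steps, each on the cause with the
    higher current marginal return. With diminishing marginal returns
    this greedy rule approximates the best split."""
    spent_a = 0.0
    spent_b = 0.0
    for _ in range(round(budget / step)):
        if marginal_a(spent_a) >= marginal_b(spent_b):
            spent_a += step
        else:
            spent_b += step
    return spent_a, spent_b


# A small budget never reaches the switch point, so it all goes to A.
print(greedy_allocate(1.0))
# A larger budget ends up split between A and B.
print(greedy_allocate(10.0))
```

With these particular curves, the first unit goes to B once A's marginal return has fallen to B's starting marginal return; after that, funding alternates so that the two marginal returns stay roughly equal. No appeal to uncertainty is needed to get a diversified allocation.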

This scenario seems somewhat plausible in reality. Notice it's a justification for diversification that doesn't rely on appeals to uncertainty, either epistemic or moral. Adding empirical uncertainty doesn't change the picture: empirical uncertainty basically means you should draw fuzzy lines instead of precise ones, and it'll be less clear when you hit the crossover.

What's confusing for me about the worldview diversification post is that it seems to run together two justifications for, in practice, diversifying (i.e. supporting more than one thing) that are very different in nature.

One justification for diversification is based on this view about 'crossovers' illustrated above: basically, Open Phil has so much money, they can fund stuff in one area to the point of crossover, then start funding something else. Here, you diversify because you can compare different causes in common units and you so happen to hit crossovers. Call this "single worldview diversification" (SWD).

The other seems to rely on the idea that there are different "worldviews" (some combination of beliefs about morality and the facts) which are, in some important way, incommensurable: you can't stick things into the same units. You might think Utilitarianism and Kantianism are incommensurable in this way: they just don't talk in the same ethical terms. Apples 'n' oranges. In the EA case, one might think the "worldviews" needed to e.g. compare the near-term to the long-term are, in some relevant sense, incommensurable - I won't try to explain that here, but may have a stab at it in another post. Here, you might think you can't (sensibly) compare different causes in common units. What should you do? Well, maybe you give each of them some of your total resources, rather than giving it all to one. How much do you give each? This is a bit fishy, but one might do it on the basis of how likely you think each cause is really the best (leaving aside the awkward fact you've already said you don't think you can compare them). So if you're totally unsure, each gets 50%. Call this "multiple worldview diversification" (MWD).*

Spot the difference: the first justification for diversification comes because you can compare causes, the second because you can't. I'm not sure if anyone has pointed this out before.

*I think MWD is best understood as an approach dealing with moral and/or empirical uncertainty. Depending on the type of uncertainty at hand, there are extant responses about how to deal with the problem that I won't go into here. One quick example: for moral uncertainty, you might opt for 'my favourite theory' and give everything to the theory in which you have most credence; see Bykvist (2017) for a good summary article on moral uncertainty.

I have two related posts, but they're about deep/model uncertainty/ambiguity, not precisely quantified uncertainty:

It sounds closer to the first one.

For precisely quantified distributions of returns, and expected return functions which depend only on how much is allocated to their corresponding project (and not to other projects), you can just use the expected return functions and forget about the uncertainty, and I think the following is very likely to be true (using appropriate distance metrics):

(Terminology: "weakly concave" means at most constant marginal returns, but possibly sometimes decreasing marginal returns; "strictly concave" means strictly decreasing marginal returns.)

If the expected value...

You might be thinking of this GPI paper:

> given sufficient background uncertainty about the choiceworthiness of one’s options, many expectation-maximizing gambles that do not stochastically dominate their alternatives ‘in a vacuum’ become stochastically dominant in virtue of that background uncertainty

It makes the point that, with sufficient background uncertainty, you will end up maximizing expectation (i.e., you will maximize EV just by avoiding stochastically dominated actions). It doesn't make the further point that you should add worldview diversification, though.

I don't remember a post, but Daniel Kokotajlo recently said the following in a conversation. Someone with a maths background should find it easy to check this and make it precise.

> It is a theorem, I think, that if you are allocating resources between various projects that each have logarithmic returns to resources, and you are uncertain about how valuable the various projects are but expect that most of your total impact will come from whichever project turns out to be best (i.e. the distribution of impact is heavy-tailed) then you should, as a first approximation, allocate your resources in proportion to your credence that a project will be the best.

This looks interesting, but I'd want to see a formal statement.
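One way to make the quoted claim concrete, as a sketch under a strong assumption rather than a statement of the theorem: suppose the expected value of project `i` is exactly `w_i * log(x_i)`, where `x_i` is the amount allocated and `w_i` is a weight. Both the functional form and the example weights below are hypothetical. Maximizing the sum subject to a fixed budget then gives `x_i` proportional to `w_i` (set the derivative `w_i / x_i` equal across projects). That matches "allocate in proportion to your credence that a project is best" only if the weights `w_i` are themselves proportional to those credences, which is a further assumption.

```python
import math


def log_optimal_allocation(weights, budget):
    """Closed-form maximizer of sum(w_i * log(x_i)) subject to
    sum(x_i) == budget: each project gets a share proportional to w_i."""
    total = sum(weights)
    return [budget * w / total for w in weights]


def expected_value(weights, allocation):
    """Total expected value under the assumed form w_i * log(x_i)."""
    return sum(w * math.log(x) for w, x in zip(weights, allocation))


# Hypothetical weights, read here as credences that each project is the best one.
weights = [0.6, 0.3, 0.1]
optimum = log_optimal_allocation(weights, budget=100.0)
print(optimum)

# Moving resources away from the proportional split lowers expected value.
shifted = [59.0, 31.0, 10.0]
print(expected_value(weights, optimum) > expected_value(weights, shifted))
```

Under these assumptions the allocation comes out at roughly 60, 30 and 10. Whether the original claim holds depends on exactly what is assumed to be logarithmic, which this sketch does not settle.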

Is it the expected value that's logarithmic, or expected value conditional on nonzero (or sufficiently high) value?

tl;dr: I think under one reasonable interpretation, with logarithmic expected value and precise distributions, the theorem is false. It might be true if made precise in a different way.

If

- you only care about expected value,

- you had the expected value of each project as a function of resources spent (assuming logarithmic expected returns already assumes a lot, but does leave a lot of

It might be helpful to draw a dashed horizontal line at the maximum value for B, since you would fund A at least until the intersection of th...

If the uncertainty is precisely quantified (no imprecise probabilities), and the expected returns of each option depend only on how much you fund that option (and not on how much you fund others), then you can just use the expected value functions.