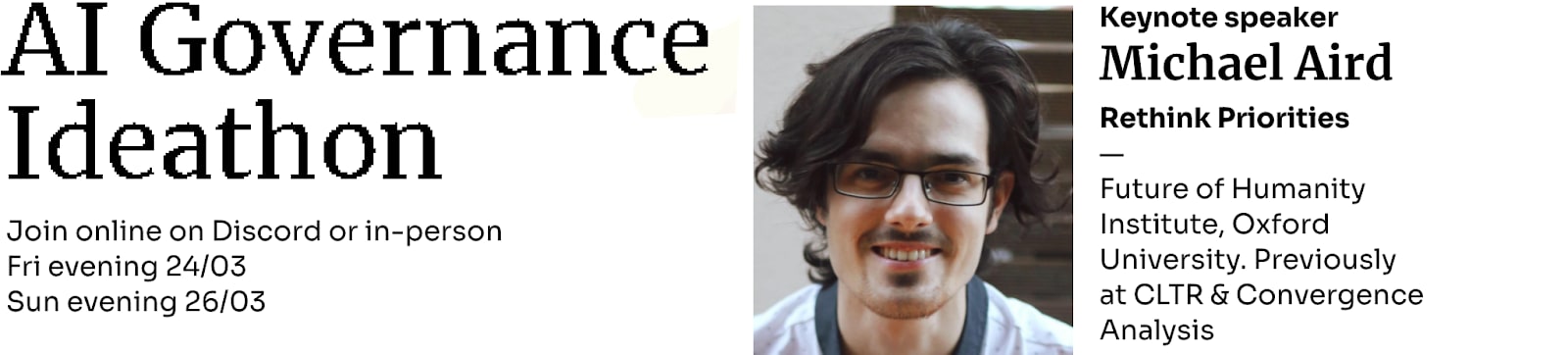

TL;DR: Join us this weekend for the non-technical AI governance ideathon with Michael Aird as keynote speaker, happening both virtually and in person at 12 locations! We also invite you to join the interpretability hackathon with Neel Nanda on the 14th of April.

Below is an FAQ-style summary of what you can expect.

What is it?

The Alignment Jams are fun, weekend-long research events where participants of all skill levels join in teams of 1-5 to engage directly with AI safety work. You submit a PDF report on the participation page and get the opportunity to receive a review from people like Emma Bluemke, Elizabeth Seger, Neel Nanda, Otto Barten and others.

If you are not at any of the in-person jam sites, you can participate online through our Discord, where the keynote, award ceremony and AI safety discussion are happening!

The ideathon runs from the 24th to the 26th of March, and we have the honour of presenting Michael Aird, lead AI governance researcher at Rethink Priorities, as our keynote speaker. The interpretability hackathon runs from the 14th to the 16th of April, and we are collaborating with keynote speaker Neel Nanda for the third time to bring great starter resources to you. Get all dates into your calendar.

Join this weekend's AI governance ideathon to write proposals for solutions to problem cases and think more about strategy in AI safety. And we promise you that you'll be surprised what you can achieve in just a weekend's work!

Read more about how to join, what you can expect, the schedule, and what previous participants have said about being part of the jams below.

Where can I join?

You can join the event either in person or online, but everyone needs to make an account and join the jam on the itch.io page.

See all in-person jam sites here. These include Hồ Chí Minh City, Copenhagen, Delft, Oxford, Cambridge, Madison, Aarhus, Paris, Toronto, Detroit (Ann Arbor), São Paulo, London, Sunnyvale and Stanford.

Everyone should join the Discord to ask questions, see updates and announcements, find online team members, and more. Join here.

What are some examples of AI governance projects I could make?

The submissions will be based on the cases presented on the Alignment Jam website and focus on specific problems in the interaction between society and artificial intelligence.

We provide some great inspiration with the cases that have been developed in collaboration with Richard Ngo, Otto Barten, Centre for the Governance of AI and others:

- Categorize the future risks from artificial intelligence in a way that is accessible for policymakers.

- Write up a report of the considerations and actions that OpenAI should take for a hypothetical release of a multimodal GPT-6 to be safe.

- Imagine a policy proposal that, with full support from policymakers, would be successful in slowing or pausing progress towards AGI in a responsible and safe manner.

- Come up with ways that AI might self-replicate in dangerous ways and brainstorm solutions to these situations.

- Whose values should AI follow and how do we design systems to aggregate and understand highly varied preferences for systems that take large-scale decisions?

- If we imagine that in 7 years, the US ban on AI hardware export to China leads to antagonistic AGI development race dynamics between the two nations, what will lead to this scenario? And how might we avoid risky scenarios from a governance perspective?

- As AI takes over more and more tasks in the world, how will the technology fit into democratic processes and which considerations will we have to take?

This will be our first non-technical hackathon (besides an in-person retreat in Berkeley) and we're excited to see which proposals you come up with!

Why should I join?

There are loads of reasons to join! Here are just a few:

- See how fun and interesting AI safety can be!

- Get a new perspective on AI safety

- Acquaint yourself with others interested in the same things as you

- Get a chance to win $1,000 in the AI governance ideathon!

- Get practical experience with AI safety research

- Show the AI safety labs and institutions what you are able to do to increase your chances at some amazing jobs

- Get a cool certificate that you can show your friends and family

- Have a chance to work on that project you've considered starting for so long

- Get proof of your skills so you can get that one grant to pursue AI safety research

- And of course, many other reasons… Come along!

What if I don’t have any experience in AI safety?

Please join! This can be your first foray into AI and ML safety and maybe you’ll realize that it’s not that hard. Even if you don't find it particularly interesting, this might be a chance to engage with the topics on a deeper level.

There’s a lot of pressure in AI safety to perform at a top level, and this seems to drive some people out of the field. We’d love it if you consider joining with a mindset of fun exploration and get a positive experience out of the weekend.

What is the agenda for the weekend?

The schedule runs from 6PM CET / 9AM PST Friday to 7PM CET / 10AM PST Sunday. We start with an introductory talk and end with an awards ceremony. Subscribe to the public calendar here.

| Time (CET / PST) | What happens |
| --- | --- |
| Fri 6 PM / 9 AM | Introduction to the hackathon, what to expect, and a talk from Michael Aird or Neel Nanda. Afterwards, there's a chance to find new teammates. |
| Fri 7:30 PM / 10:30 AM | Jamming begins! |
| Mon 4 AM / 8 PM | Final submissions have to be finished. Judging begins and both the community and our great judges from ERO and GovAI join us in reviewing the proposals. |
| Wed 6 PM / 9 AM | The award ceremony: The winning projects are presented by the teams and the prizes are presented. |
| Afterwards! | We hope you will continue your work from the hackathons with the purpose of sharing it on the forums or your personal blog! |

I’m busy, can I join for a short time?

As a matter of fact, we encourage you to join even if you only have a short while available during the weekend!

So yes, you can join without attending the beginning or end of the event, and you can submit research even if you’ve only spent a few hours on it. We of course still encourage you to come to the intro ceremony and join for the whole weekend, but everything will be recorded and shared so you can follow along asynchronously as well.

Wow this sounds fun, can I also host an in-person event with my local AI safety group?

Definitely! It might be hard to make it for the AI governance ideathon but we encourage you to join our team of in-person organizers around the world for the interpretability hackathon in April!

You can read more about what we require here and the possible benefits for your local AI safety group here. Sign up as a host using the button on this page.

What have previous participants said about this hackathon?

| I was not that interested in AI safety and didn't know that much about machine learning before, but I heard from this hackathon thanks to a friend, and I don't regret participating! I've learned a ton, and it was a refreshing weekend for me. | A great experience! A fun and welcoming event with some really useful resources for starting to do interpretability research. And a lot of interesting projects to explore at the end! |

| Was great to hear directly from accomplished AI safety researchers and try investigating some of the questions they thought were high impact. | I found the hackathon very cool, I think it lowered my hesitance in participating in stuff like this in the future significantly. A whole bunch of lessons learned and Jaime and Pablo were very kind and helpful through the whole process. |

| The hackathon was a really great way to try out research on AI interpretability and getting in touch with other people working on this. The input, resources and feedback provided by the team organizers and in particular by Neel Nanda were super helpful and very motivating! |

Where can I read more about this?

- The Alignment Jam website (where you can subscribe to email updates)

- The Discord server

- AI governance ideathon

- The interpretability hackathon

- Previous results

Again, sign up here by clicking “Join jam” and read more about the hackathons here.

Godspeed, research jammers!

The discord link no longer seems to work.