Below is this month's EA Newsletter. I'm experimenting with crossposting it to the Forum so that people can comment on it and give feedback. If this is your first time hearing about the EA Newsletter, you can:

Subscribe and read past issues

Hello!

Our favourite links this month include:

- A podcast with entomologist Meghan Barrett about the surprising abilities of insects and why you should care about insect welfare even if you think insects probably aren’t conscious.

- Our World in Data shows that some health interventions are 1,000x as effective as others, and charities are no different.

- An update on SB 1047, the California Senate bill which would hold AI companies liable for catastrophic harms their models may cause.

There's also much more content, including an article about gene drives, an update from CEA’s new CEO, and a short video about the dangers of AI adapted from a short story. We also highlight jobs such as CEO of Giving What We Can (apply by 30 September) and Head of Operations at CEA (apply by 7 October).

— Toby, for the EA Newsletter Team

Articles

Do insects suffer?

Insects are more complex than you think. Monogamous breeding pairs of termites regularly have 20-year relationships. The smallest mammal brain (a shrew) is only six times the size of the largest insect brain (a solitary wasp). Fruit flies are used in efficacy studies of depression medication — because fruit flies can exhibit depression-like behavior.

Yet scientists have long assumed that insects don’t suffer. As entomologist Meghan Barrett argues on a recent episode of the 80,000 Hours podcast, their reasons for believing this no longer stand up. For example, scientists used to claim that insects didn’t have nociceptors (put simply, pain receptors), but these, and analogous systems, have now been found. Scientists also pointed to observations of insects that seemed to ignore grievous injuries. Now insects have been observed grooming their own or other insects’ wounds, and even responding to painkillers.

This matters because, in 2020, between 1 and 1.2 trillion insects were farmed, generally without any concern for their welfare. Barrett is too much of a scientist to confidently assert that insects do feel pain. However, she believes that, even if you think insect suffering is unlikely, the sheer number of individuals involved makes insect farming a major issue.

If you are interested in insect welfare, check out Barrett’s related blog post, which suggests ways we can all help raise awareness of this problem or work on it directly.

Almost all of us can save a life

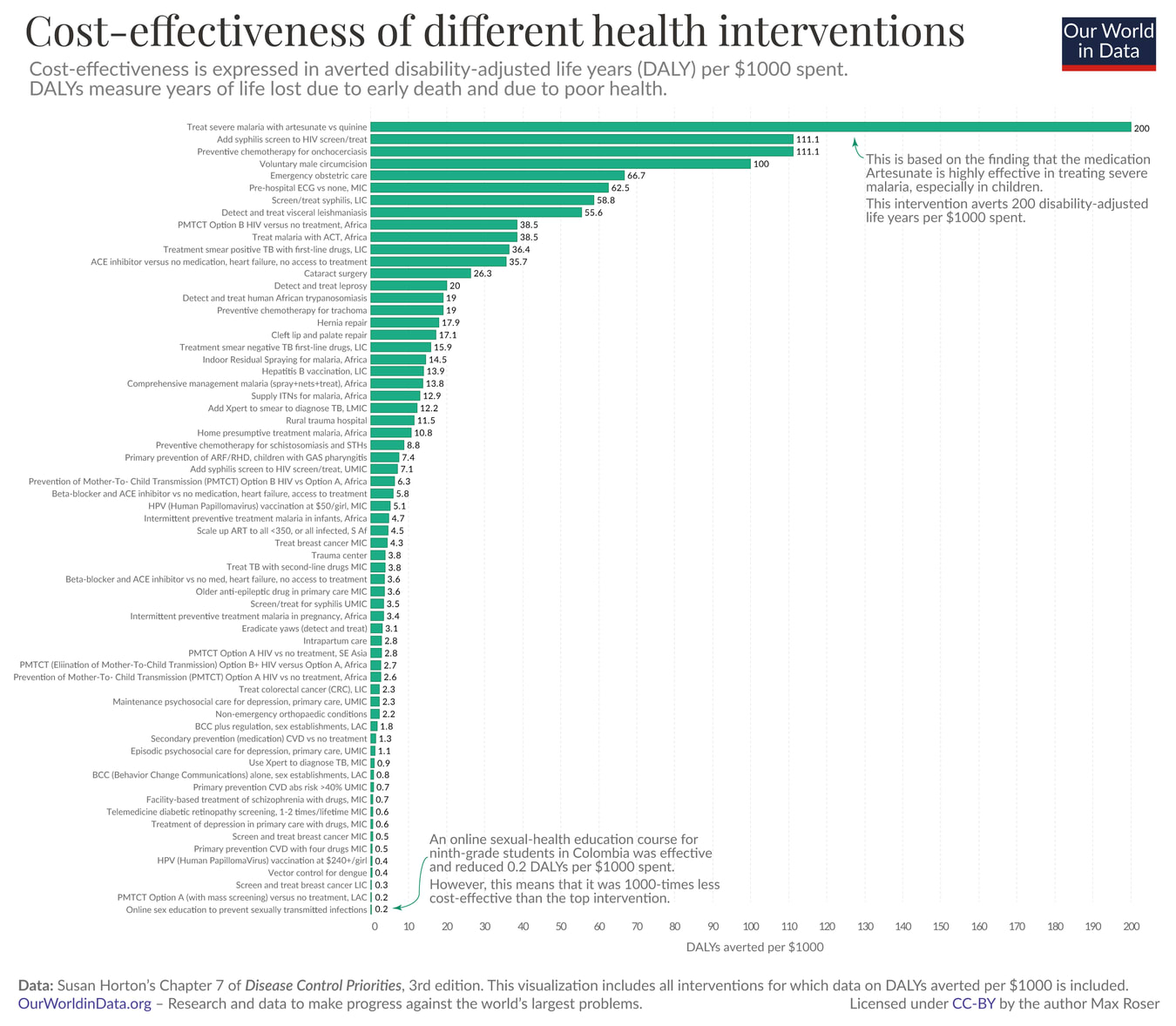

The key is to donate to the right charities. Some are far more effective than others, and some interventions are incredibly cost-effective. A new piece from Our World in Data makes a concise and data-driven case for donating wisely. They argue:

- Some health interventions are 1,000x as effective as others (see the graph below). Likewise, the best charities are much more effective at saving lives than the average charity.

- Saving a life is relatively inexpensive. GiveWell, a leading charity evaluator, has found four charities that can save a life for around $5,000, far less than the $1 million that the UK government is willing to spend to save one life.

If you’re not sure how to pick the best charities, rely on an expert charity evaluator, like GiveWell, or a managed fund. Also, consider sending the article to friends or family who are reluctant to compare charities.

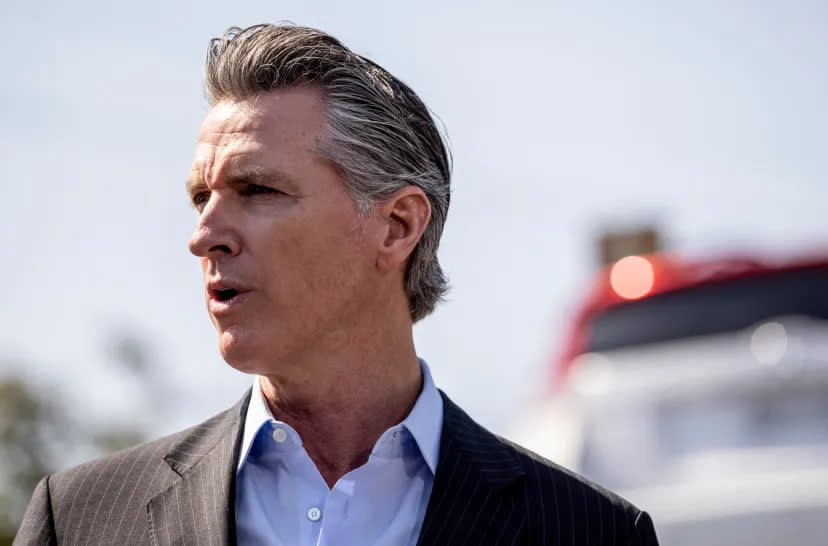

California attempts AI regulation

SB 1047 is a California Senate bill that would require companies to develop safety plans for AI models that cost over $100 million to train. If companies fail to do so, they could be held liable if their models cause mass casualties or more than $500 million in damages. The bill has passed the California legislature and now awaits Governor Gavin Newsom's decision to sign or veto.

The bill appears popular among Californians. It is also supported by current and former staff from AI companies like OpenAI, DeepMind, and Meta, who have signed an open letter urging Newsom to make the bill law.

However, Google, Meta, and OpenAI have been lobbying against the bill, as has Andreessen Horowitz, a venture capital firm heavily invested in AI. Representatives from the tech industry have argued that the bill would stifle innovation among AI startups — even though it only applies to the most expensive training runs — and falsely claimed that it would threaten AI developers with jail.

Forecasting platform Metaculus currently puts the chance of the bill being enacted by 1 October this year at 20%. Expect more updates in the next couple of weeks.

In other news

- Alternative protein innovation is in a slump (following a boom). But Lewis Bollard gives some reasons for optimism about the future: governments are investing, retailers are setting ambitious targets, and a recent study shows plant-based chicken nuggets beating conventional chicken nuggets in a taste test.

- Matt Clancy makes the case for more living literature reviews — writing by a single author that explores key questions and summarizes cutting-edge research in important academic fields.

- The Centre for Effective Altruism’s new CEO, Zach Robinson, outlines his plans for a principles-first CEA.

- In Afghanistan, people are being poisoned by lead from their pots and pans.

- The EA Newsletter often includes charts (and we’re not alone), so it’s good to remember that charts can be very misleading.

- Asimov Press published a mini-edition about pandemic preparedness. It included a piece on how to build new broad-spectrum antivirals to protect against future pandemics, and another on building a genomic surveillance network to detect new diseases faster.

- Between 1990 and 2021, annual child deaths linked to malnutrition fell by 4.2 million. But in 2021, the number still stood at 2.4 million, or half of all child deaths.

- Gene drives, a relatively new technology which can be used to genetically modify entire species in the wild, could eradicate malaria-carrying mosquitoes.

- Jacob Trefethen, who oversees Open Philanthropy’s science and science policy programmes, discusses ways to speed up global health research and development, and the life-saving technologies we could invent if we tried.

- GiveWell explains their current thinking on grantmaking for vaccines.

- How do you even start to investigate consciousness in AI? Philosopher Robert Long explains his approach.

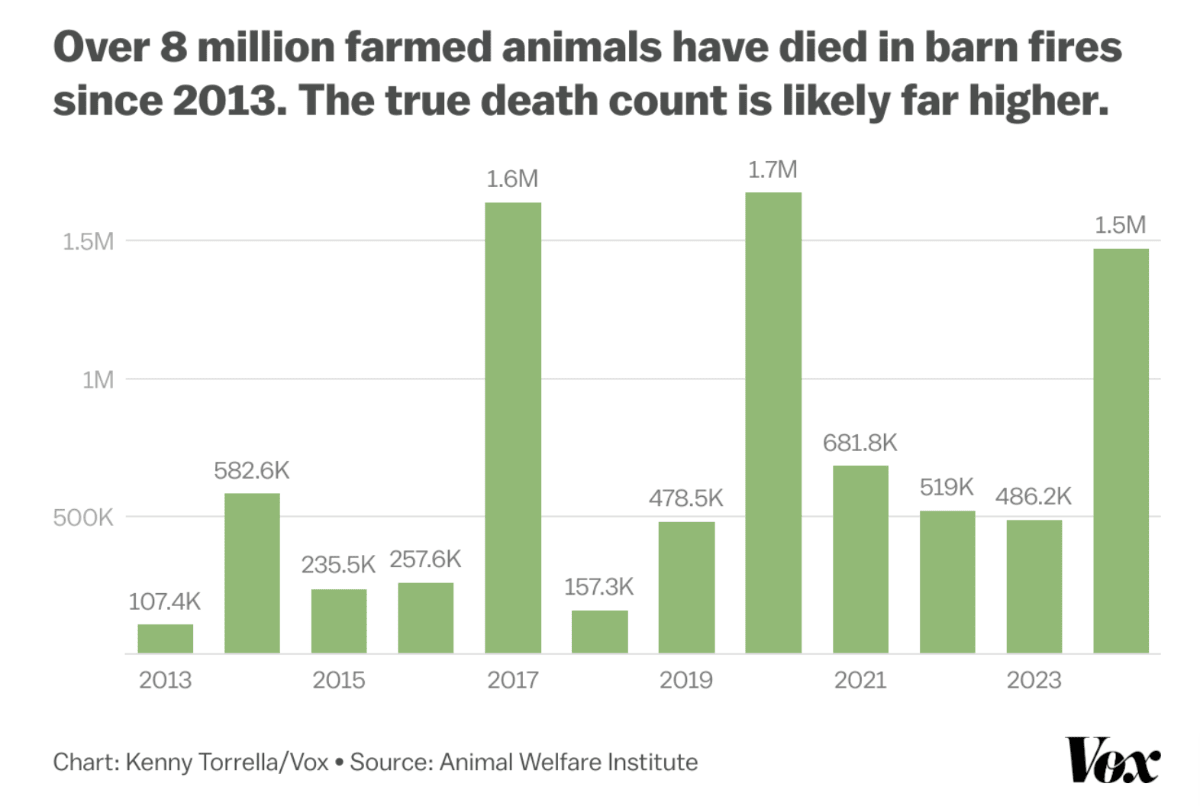

- Pigs die in barn fires fairly regularly — but the animal agriculture industry in the US is advocating against installing sprinklers.

- 80,000 Hours published a new problem profile on understanding the moral status of digital minds.

- Also from 80,000 Hours: experts anonymously answer the question “What are the best ways to fight the next pandemic?”

AI

- OpenAI’s new models have received a “medium” risk rating under the company’s own safety framework, the highest rating it has given to date. Vox reports that they are “scarily good at deception”.

- What kinds of tests would we have to run to check for dangerous capabilities in future, more advanced AI systems?

- That Alien Message is a video adaptation of an Eliezer Yudkowsky short story which provides a vivid analogy for humanity’s relationship with AI.

- Anthropic, DeepMind, and OpenAI have all published AI safety policies. METR, which evaluates cutting-edge AI models for safety, analyzes what their frameworks have in common.

Resources

Links we share every time — they're just that good!

- Take a free online course on effective altruism

- Learn at your own pace with the EA Handbook

- Find groups and meetups in your area

- Get funding for your research or other projects

Jobs

- The 80,000 Hours Job Board features more than 800 positions. We can’t fit them all in the newsletter, so you can check them out there.

- The EA Opportunity Board collects internships, volunteer opportunities, conferences, and more — including part-time and entry-level job opportunities.

- If you’re interested in policy or global development, you may also want to check Tom Wein’s list of social purpose job boards.

Selection of jobs

BlueDot Impact

- AI Alignment Teaching Fellow (Remote, £4.9K–£9.6K, apply by 22 September)

Centre for Effective Altruism

- Head of Operations (Remote, £107.4K / $179.9K, visa sponsorship, apply by 7 October)

Cooperative AI Foundation

- Communications Officer (Remote, £35K–£40K, no visa sponsorship, apply by 29 September)

Founders Pledge

- Research Assistant – Global Catastrophic Risks (Remote in US, $30 per hour)

GiveWell

- Senior Researcher (Remote, $200K–$220.6K, visa sponsorship)

Giving What We Can

- Global CEO (Remote, $130K+, visa sponsorship, apply by 30 September)

Lead Exposure Elimination Project (LEEP)

- If you’re interested in working at LEEP, please complete this form.

Legal Impact for Chickens

- Attorney (Remote in US, $80K–$130K, apply by 7 October)

Open Philanthropy

- Operations Coordinator/Associate (San Francisco or Washington, DC, $99.6K–$122.6K, no visa sponsorship)

- If you’re interested in working at Open Philanthropy but don't see an open role that matches your skillset, express your interest.

The Good Food Institute

- Managing Director, GFI India (Hybrid in Mumbai, Delhi, Hyderabad, or Bangalore, ₹4.5M, apply by 2 October)

Announcements

Fellowships, internships, and volunteering

- The Cosmos Fellowship is looking for applicants with “the potential for world-class AI expertise and deep philosophic insight” to work alongside the Human-Centered AI Lab at the University of Oxford (or other host institutions), pursuing independent projects with access to mentorship. The fellowship runs in 90-day increments for up to one year and pays $75,000 per year (pro rata). Apply before 1 December.

- Future Impact Group is offering a part-time, remote-first, 12-week fellowship. Fellows will spend 5–10 hours per week working on policy or philosophy projects on subjects such as suffering risks, coordinating international governance, reducing risks from ideological fanaticism, and more. Apply by 28 September.

- Artificial Intelligence Governance & Safety Canada is looking for volunteers to help with content creation, events, translation (French to English) and more. If you’re interested in helping, contact them here.

Conferences and events

- Upcoming EA Global Conferences: Boston (1–3 November, apply by 20 October).

- Upcoming EAGx Conferences: Bengaluru (19–20 October) and Sydney (22–24 November).

Funding and prizes

- The Strategic Animal Funding Circle is offering up to $1 million in funding, to be distributed among promising farmed animal welfare projects. Find out more, and apply for funding before 20 September.

- If you are a donor willing to give upwards of $100K per year to help farmed animals, you can enquire about joining the funding circle at this email: jozden[at]mobius.life

Organizational updates

You can see updates from a wide range of organizations on the EA Forum.

Timeless classic

We only came up with the idea of human extinction fairly recently. Why? In an 80,000 Hours episode, Thomas Moynihan, an intellectual historian, discusses the strange and surprising views previous generations held about extinction, apocalypse, and much more. The podcast recasts our present-day assumptions as recent discoveries, and asks what future intellectual historians might think of our beliefs today.

We hope you found this edition useful!

If you’ve taken action because of the Newsletter and haven’t taken our impact survey, please do — it helps us improve future editions.

Finally, if you have feedback for us, positive or negative, let us know via our feedback form, or in the comments below.

– The Effective Altruism Newsletter Team