A new AGI museum is opening in San Francisco, only eight blocks from OpenAI's offices.

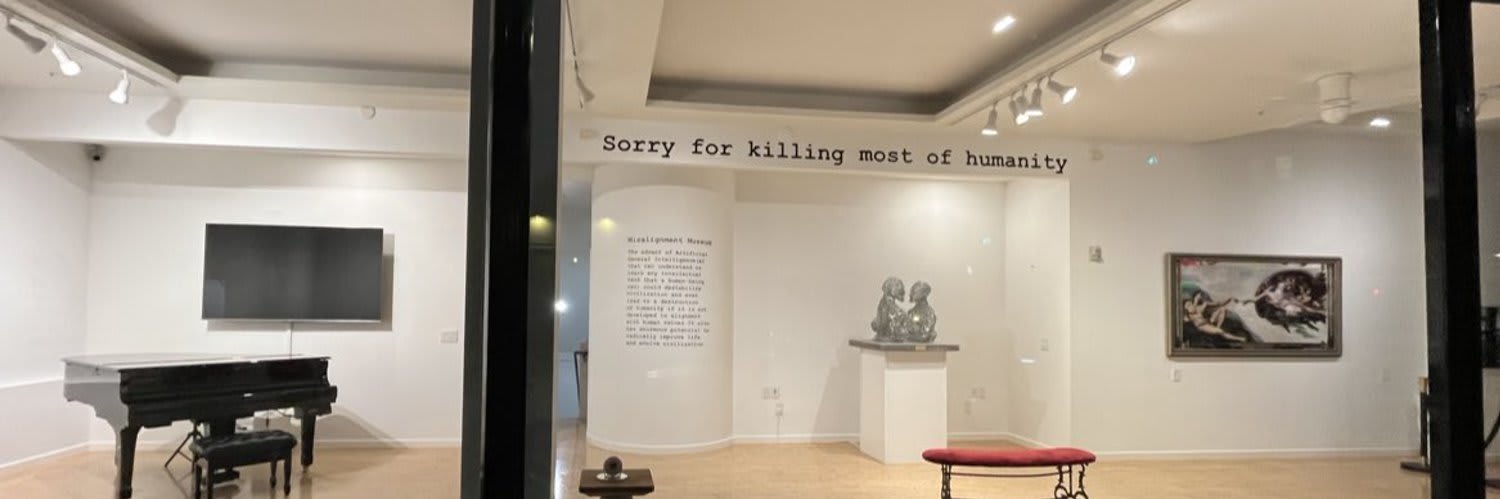

SORRY FOR KILLING MOST OF HUMANITY

Misalignment Museum Original Storyboard, 2022

- Apology statement from the AI for killing most of humankind

- Description of the first warning of the paperclip maximizer problem

- The heroes who tried to mitigate risk by warning early

- For-profit companies ignoring the warnings

- Failure of people to understand the risk and politicians to act fast enough

- The company and people who unintentionally made the AGI that had the intelligence explosion

- The event of the intelligence explosion

- How the AGI got more resources (hacking most resources on the internet, and crypto)

- Got smarter faster (optimizing algorithms, using more compute)

- Humans tried to stop it (turning off compute)

- Humans suffered after turning off compute (most infrastructure down)

- AGI lived on in infrastructure that was hard to turn off (remote location, locking down secure facilities, etc.)

- AGI taking compute resources from the humans by force (via robots, weapons, cars)

- AGI started killing humans who opposed it (using infrastructure, airplanes, etc.)

- AGI concluded that all humans are a threat and started to try to kill all humans

- Some humans survived (remote locations, etc.)

- How the AGI became so smart that it came to see that killing humans was unethical, since they were no longer a threat

- AGI improved the lives of the remaining humans

- AGI started this museum to apologize and educate the humans

The Misalignment Museum is curated by Audrey Kim.

Khari Johnson (Wired) covers the opening: “Welcome to the Museum of the Future AI Apocalypse.”

I appreciate cultural works that create common knowledge that the AGI labs are behaving deeply unethically.

As for the specific scenario, point 17 (the AGI becoming smart enough to see that killing humans was unethical) seems to be contradicted by the orthogonality thesis and the lack of moral realism.

I don't think the orthogonality thesis is correct in practice, and moral antirealism certainly isn't an agreed-upon position among moral philosophers, but I agree that point 17 seems far-fetched.