This is a brief follow-up to my previous post, The probability that Artificial General Intelligence will be developed by 2043 is Zero, which I think was a bit too long for many people to read. In this post I will show how some of the top people in AI reacted to my argument when I made it briefly on Twitter.

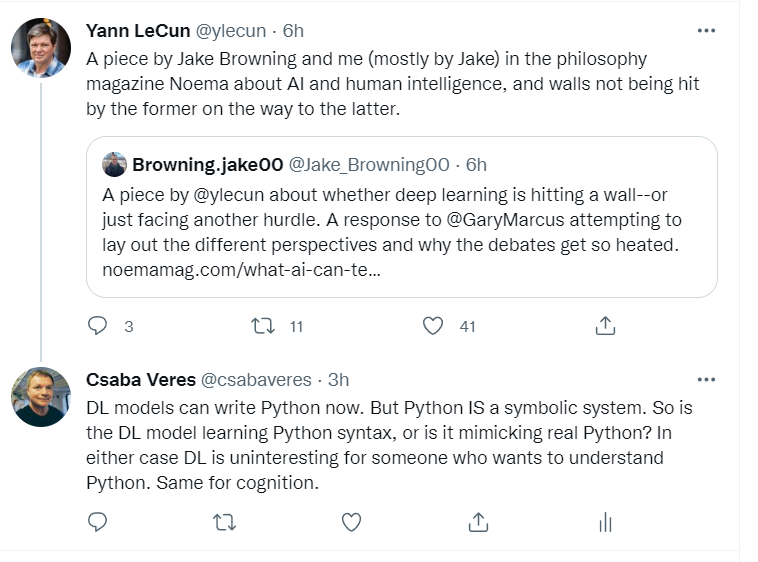

First, Yann LeCun himself, after I reacted to the Browning and LeCun paper that I discuss in my previous post:

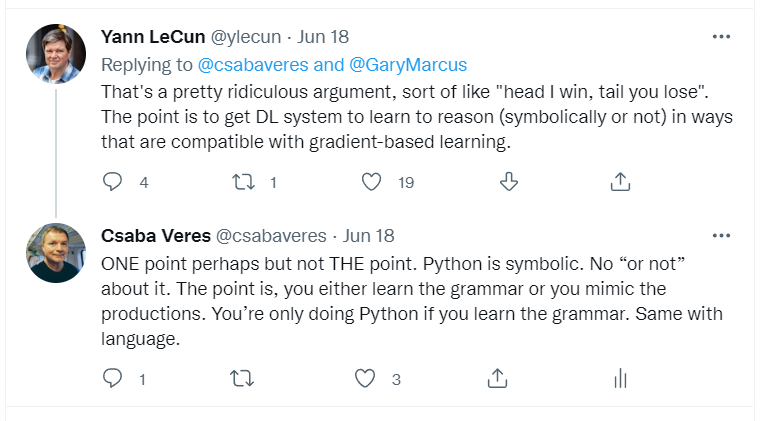

As you can see, LeCun's response was that the argument is "ridiculous". The reason is that LeCun can't win. At least he understands the argument, which is really a proof that his position is wrong, because either option he takes to defend it will fail. So instead of trying to defend it, he calls the argument "ridiculous".

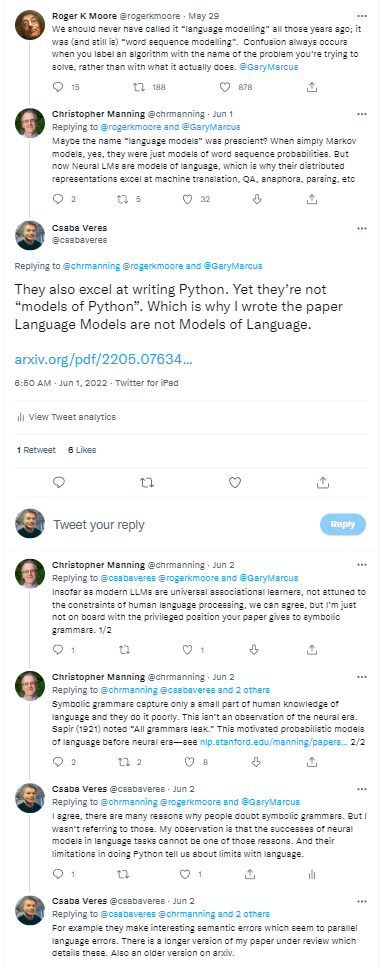

In another discussion, with Christopher Manning, an influential NLP researcher at Stanford, I debate the plausibility of DL models as models of language. Unlike LeCun, he actually takes my argument seriously, but he drops out when I show that his position is not winnable. That is, the fact that "Language Models" learn Python proves that they are not models of language. (The tweets are at https://twitter.com/rogerkmoore/status/1530809220744073216?s=20&t=iT9-8JuylpTGgjPiOoyv2A)

The fact is, Python changes everything because we know it works as a classical symbolic system. We don't know how natural language or human cognition works. Many of us suspect they have components that are classical symbolic processes. Neural network proponents deny this. But they can't deny that Python is a classical symbolic language. So they must somehow deal with the fact that their models can mimic these processes in some way. And they have no way to prove that the same models are not mimicking human symbolic processes in the same way. My claim is that in both cases the mimicking will take you a long way, but not all the way. DL can learn the mappings where the symbolic system produces lots of examples, as with language and Python. Where the symbol system is used for planning, creativity, etc., DL struggles to learn. I think in ten years everyone will realize this and AI will look pretty silly (again).

In the meantime, we will continue to make progress in many technological areas. Automation will continue to improve. We will have programs that can generate sequences of video to make amazing video productions. Noam Chomsky likens these technological artefacts to bulldozers - if you want to build bulldozers, fine. Nothing wrong with that. We will have amazing bulldozers. But not "intelligent" ones.

I notice that I'm confused.

"That is, the fact that they can reproduce Python strings shows that they are not informative about the grammar of Python (or natural language)."

I don't see how the first item here shows the second item. Reproducing Python strings clearly isn't a sufficient condition on its own for "The model understands the grammar of Python", but how would it be an argument against the model understanding Python? Surely a model that can reproduce Python strings is at least more likely to understand the grammar of Python than a model that cannot?

The contrapositive of that statement would be "If a language model is informative about the grammar of Python, it cannot reproduce Python strings." This seems pretty clearly false to me, unless I'm missing something.
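To check that I'm not garbling the logic, here is a minimal sketch of the contrapositive step as a truth table. It assumes the quoted sentence is read as a plain material implication; the names `r`, `g`, and `implies` are my own labels for illustration, not anything from the post or the tweets.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: p -> q is false only when p is true and q is false."""
    return (not p) or q


# r: "the model can reproduce Python strings" (hypothetical label)
# g: "the model is informative about Python's grammar" (hypothetical label)
rows = []
for r, g in product([False, True], repeat=2):
    claim = implies(r, not g)           # quoted claim: r -> not g
    contrapositive = implies(g, not r)  # contrapositive: g -> not r
    rows.append((r, g, claim, contrapositive))

# The two forms agree on every row, so rejecting one means rejecting the other.
equivalent = all(claim == contra for _, _, claim, contra in rows)

# The only assignment that falsifies the claim: a model with both properties.
counterexamples = [(r, g) for r, g, claim, _ in rows if not claim]

print(equivalent)
print(counterexamples)
```

Under that reading, the claim and its contrapositive stand or fall together, and the only case that falsifies either is a model that both reproduces Python strings and is informative about the grammar. So the claim amounts to saying no such model can exist, which is the part I don't see an argument for.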