This is a brief follow-up to my previous post, "The probability that Artificial General Intelligence will be developed by 2043 is Zero", which I think was too long for many people to read. In this post I show reactions from some of the top people in AI to my argument as I made it briefly on Twitter.

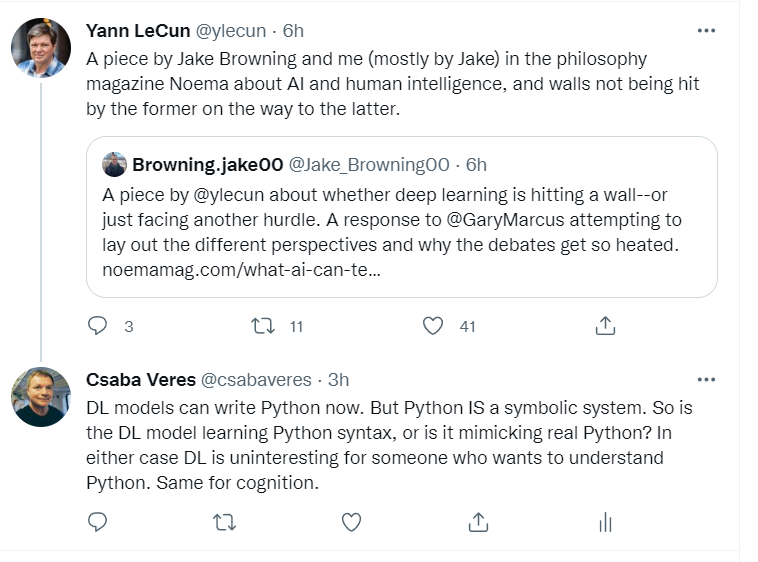

First Yann LeCun himself, when I reacted to the Browning and LeCun paper I discuss in my previous post:

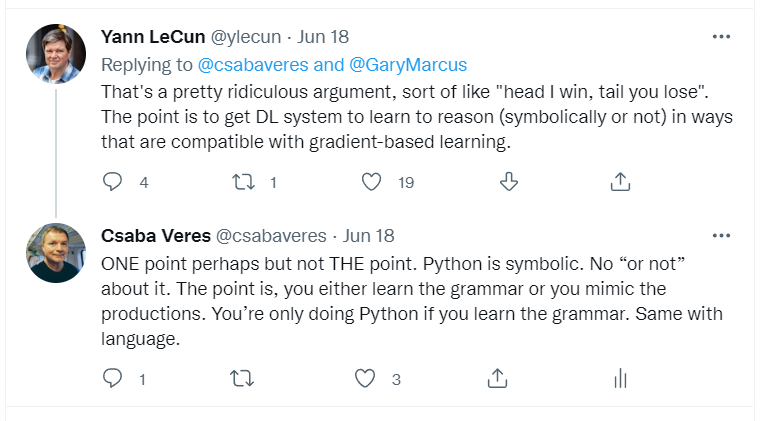

As you can see, LeCun's response was that the argument is "ridiculous". The reason is that LeCun can't win. At least he understands the argument, which is really a proof that his position is wrong, because either option he takes to defend it will fail. So instead of trying to defend it, he calls the argument "ridiculous".

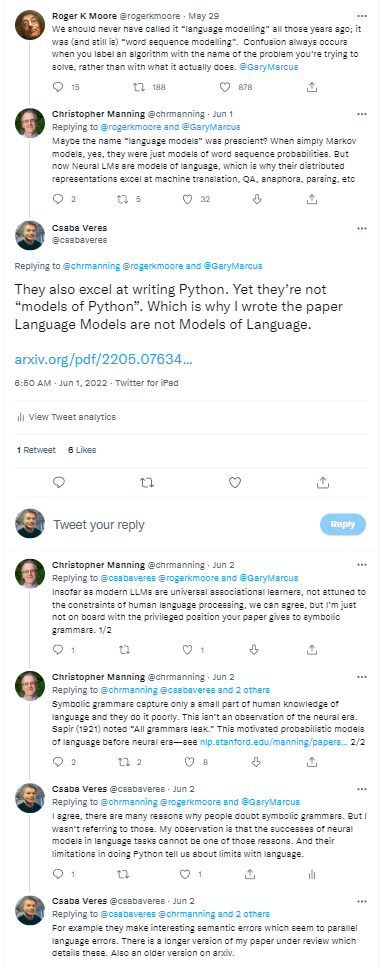

In another discussion, with Christopher Manning, an influential NLP researcher at Stanford, I debate the plausibility of DL models as models of language. Unlike LeCun, he actually takes my argument seriously, but drops out when I show that his position is not winnable. That is, the fact that "Language Models" learn Python proves that they are not models of language. (The link to the tweets is https://twitter.com/rogerkmoore/status/1530809220744073216?s=20&t=iT9-8JuylpTGgjPiOoyv2A)

The fact is, Python changes everything because we know it works as a classical symbolic system. We don't know how natural language or human cognition works. Many of us suspect it has components that are classical symbolic processes. Neural network proponents deny this. But they can't deny that Python is a classical symbolic language. So they must somehow deal with the fact that their models can mimic these processes in some way. And they have no way to prove that the same models are not mimicking human symbolic processes in the same way. My claim is that in both cases the mimicking will take you a long way, but not all the way. DL can learn the mappings where the symbolic system produces lots of examples, as with natural language and Python. Where the symbol system is used for planning, creativity, etc., DL struggles to learn. I think in ten years everyone will realize this and AI will look pretty silly (again).

In the meantime, we will continue to make progress in many technological areas. Automation will continue to improve. We will have programs that can generate sequences of video to make amazing video productions. Noam Chomsky likens these technological artefacts to bulldozers - if you want to build bulldozers, fine. Nothing wrong with that. We will have amazing bulldozers. But not "intelligent" ones.

Excellent question. There are many ways to answer that, and I will try a few.

Maybe the first comment I can make is that the "godfathers" LeCun and Bengio agree with me. In fact, the Browning and LeCun paper has exactly that premise: DL is not enough, it must evolve a symbolic component. In another Twitter debate with me, LeCun admitted that current DL models cannot write long, complex Python programs because these require planning, etc., which non-symbolic DL models cannot do.

There is a lot of debate about what sort of "next word prediction" is happening in the brain. Ever since Swinney's cross-modal priming experiments in 1979, we have known that all senses of an ambiguous word become activated during reading, and that the contextually inappropriate senses are suppressed very quickly afterwards as the word is integrated into context (https://en.wikipedia.org/wiki/David_Swinney). Similar findings have been reported about syntactic analysis in the years since. But these findings are never taken into consideration, because DL research appears to progress on stipulations rather than actual scientific results. Clearly we can predict next words, but how central this is to the language system is debatable. What is much more important is contextual integration, which no DL model can do because it has no world model.

But let us take equally simple language tasks on which DL models are terrible. One really useful task would be open relation extraction. Give the model a passage of text and ask it to identify all the key actors and their relations, and report them as a set of triples. I try to do this in order to create knowledge graphs, but DL models are not that helpful. Another simple example: "The dog chased the cat but it got away" vs. "The dog chased the cat but it tripped and fell". I have not found a single language model that can reliably identify the referent of the pronoun. What these two examples have in common is that they need some "knowledge" about the world. LeCun does acknowledge that we need a "world model", but again this is where I disagree on how to get it.
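To make the task concrete, here is a minimal sketch in Python of what "a set of triples" feeding a knowledge graph could look like. The names `Triple` and `build_graph` are made up for illustration, and the triples are written by hand; they are the output one would hope for, not the output of any model.

```python
from collections import defaultdict
from typing import NamedTuple


class Triple(NamedTuple):
    """One (subject, relation, object) fact extracted from text."""
    subject: str
    relation: str
    obj: str


def build_graph(triples):
    """Group triples into an adjacency map: subject -> list of (relation, object)."""
    graph = defaultdict(list)
    for t in triples:
        graph[t.subject].append((t.relation, t.obj))
    return dict(graph)


# Hand-written target output for "The dog chased the cat but it got away".
# The second triple is only right if "it" is resolved to the cat,
# which is the step that needs some knowledge about the world.
triples = [
    Triple("dog", "chased", "cat"),
    Triple("cat", "got away from", "dog"),
]

graph = build_graph(triples)
```

Storing the triples is the easy part. The hard part is producing the second triple at all, since it depends on the same pronoun resolution as the dog and cat sentences above.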

One final point. Current language models do not have a theory of language acquisition. Chomsky rightly pointed out that a good theory of language has to explain how children learn. It is pretty clear that the way language models are trained is not going to be able to explain anything about human language acquisition. This is another point that is conveniently excluded from discussion.

There are many, many other reasons why we should doubt how far these models will get. People tend to focus on progress on largely made-up tasks and ignore the vast landscape of unknowns left in the wake of these models. (Emily Bender on Twitter has some interesting discussions on this.) It is hardly a confidence-inspiring picture if you look at the entire landscape.