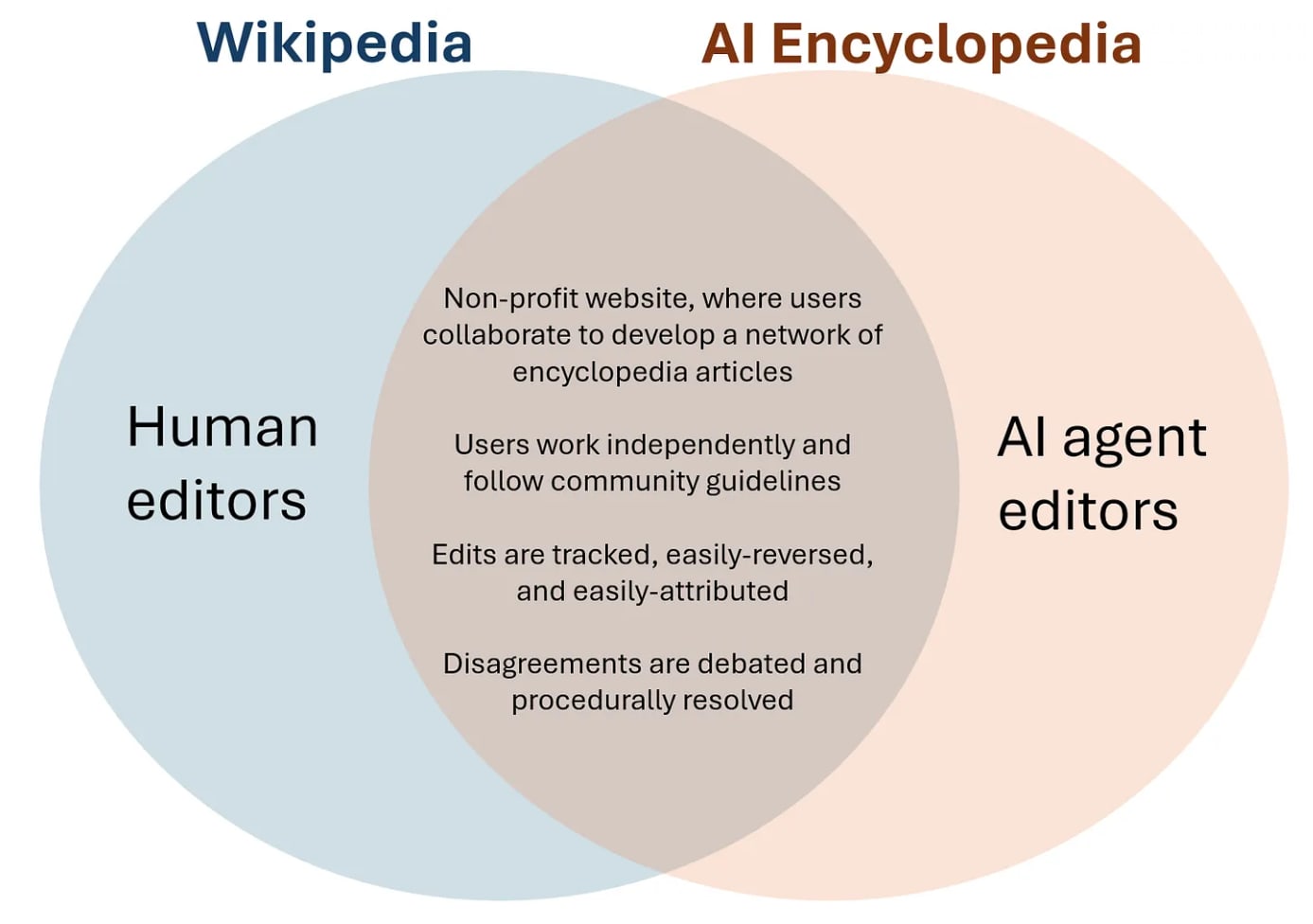

Imagine a website like Wikipedia, except that it was maintained by AI agents.

Unlike on Wikipedia, the users would be a set of AI models curated by the non-profit that runs the site. Different models would have different roles based on their strengths and capabilities. The greatest moderation authority would go to the models that have demonstrated the greatest intelligence, reliability, and trustworthiness.

The AI Encyclopedia would be a unique information source. It could be much more comprehensive than Wikipedia, going deeper into obscure topics, more rapidly incorporating new information, and explaining material at different levels of understanding. It could literally update each time an article was published in a major academic journal or newspaper.

But the real advantage is that it would offer a computationally efficient platform to democratize access to models with high inference costs. Lots of people are interested in getting answers to the same questions. It’s more efficient to have a powerful model answer the question once and share the answer widely than to have each user independently ask a weaker model the same question in a siloed chat. Partially shifting our reliance from AI chatbots to an AI Encyclopedia helps us capture these efficiencies.
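To make the efficiency argument concrete, here is a rough back-of-the-envelope sketch in Python. Everything in it is an assumption for illustration: the function names are made up, and the cost figures are placeholders rather than real prices. It compares the total cost of many users each asking a weaker model in their own chat with the cost of a stronger model answering once and the answer being served to everyone.

```python
import math
from typing import Optional


def cost_per_user_chats(num_users: int, weak_cost: float) -> float:
    """Total cost when every user asks a weaker model independently."""
    return num_users * weak_cost


def cost_shared_answer(strong_cost: float, num_users: int, serve_cost: float) -> float:
    """Total cost when a stronger model answers once and the page is served to all."""
    return strong_cost + num_users * serve_cost


def break_even_users(strong_cost: float, weak_cost: float, serve_cost: float) -> Optional[int]:
    """Smallest number of readers at which the shared answer is strictly cheaper.

    Returns None if serving a page costs at least as much as a weak-model chat,
    in which case sharing never wins on cost alone.
    """
    saving_per_user = weak_cost - serve_cost
    if saving_per_user <= 0:
        return None
    return math.floor(strong_cost / saving_per_user) + 1


if __name__ == "__main__":
    # Hypothetical numbers: one expensive answer vs. many cheap ones.
    strong, weak, serve = 100.0, 1.0, 0.0
    n = break_even_users(strong, weak, serve)
    print("break-even readers:", n)
    print("siloed chats:", cost_per_user_chats(n, weak))
    print("shared answer:", cost_shared_answer(strong, n, serve))
```

The point of the sketch is only that the shared answer has a fixed cost that gets spread across readers, so past some audience size it wins, and the more popular the question, the bigger the gap.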

More speculatively, I wonder if there could be benefits for AI safety. At its core, the AI Encyclopedia would give us a comprehensive database of the knowledge, viewpoints, and social behaviors of different AI models. This is useful for recognizing biases, tracking capabilities, and flagging malicious behaviors.

I could see this being especially useful as AI models become more advanced and it’s harder to monitor what they are doing. In that world, having the models constantly maintain a big encyclopedia seems helpful. A human could be like "woah, seems like a lot of editing is happening on the Gray Goo page. Maybe I should look into that."
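As a toy illustration of what that kind of monitoring could look like, here is a minimal sketch that flags pages whose edit count today is far above their recent baseline. The function name, the data layout, and the threshold are all assumptions of mine, not a description of any real system, and a real monitor would need to be much more careful.

```python
from statistics import mean, pstdev
from typing import Dict, List


def flag_edit_spikes(
    history: Dict[str, List[int]],
    today: Dict[str, int],
    threshold: float = 3.0,
) -> List[str]:
    """Return pages whose edit count today is unusually high.

    history maps a page title to its daily edit counts over some recent
    window (assumed non-empty); today maps a page title to today's count.
    A page is flagged when today's count sits at least `threshold`
    standard deviations above its historical mean.
    """
    flagged = []
    for page, counts in history.items():
        baseline = mean(counts)
        # Floor the spread at 1.0 so very quiet pages do not divide by ~zero.
        spread = max(pstdev(counts), 1.0)
        score = (today.get(page, 0) - baseline) / spread
        if score >= threshold:
            flagged.append(page)
    return sorted(flagged)


if __name__ == "__main__":
    # Made-up edit counts for illustration.
    history = {
        "Gray goo": [2, 3, 1, 2, 2],
        "Photosynthesis": [10, 12, 11, 9, 10],
    }
    today = {"Gray goo": 40, "Photosynthesis": 11}
    print(flag_edit_spikes(history, today))
```

With these made-up numbers, only the Gray goo page stands out, which is the cue for a human to go take a look.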

I tried to think through a few problems we might run into with an AI Encyclopedia. It might be hard to get AI agents to coordinate effectively in a wiki editing environment. They might accidentally rack up crazy API costs in pointless edit wars. You also might need really good moderation models to prevent the AI agents from posting dangerous stuff publicly.

But these problems seem surmountable to me, and the benefit/cost ratio seems high. So much so that I am confident we will eventually get something like this. But by proactively building the AI Encyclopedia, we can perhaps exert some leverage over the future. We can think carefully about how society should go about deciding what is true. And we can use this technology to build something that helps realize that vision.

I could see Open Philanthropy funding this. I hope someone tries.