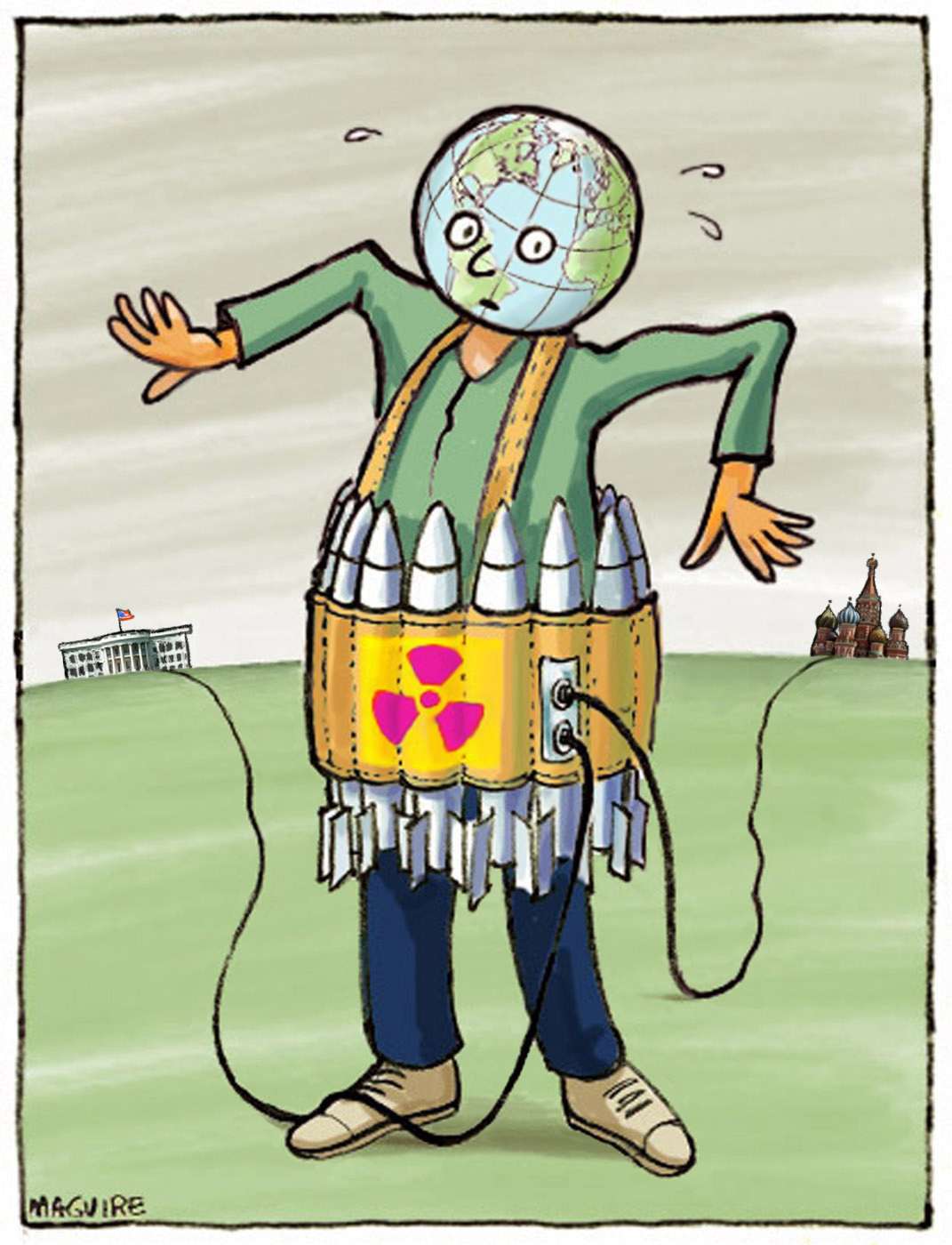

Sometimes I wonder if people just don’t realize what is very likely in store for the future.

What would life be like after a nuclear war? What would constitute day-to-day existence when hundreds of millions of humans are desperately migrating to escape climate-induced famine? Such things aren’t polite topics. They are, however, necessary topics.

The Bulletin of the Atomic Scientists' argument in favor of quantifying nuclear risk shares the following from Martin Hellman of Stanford University:

"A risk of one percent per year would accumulate to worse-than-even odds over the lifetime of a child born today. Even if someone were to estimate that the lower bound should be 0.1 percent per year, that would be unacceptably high—that child would have an almost ten percent risk of experiencing nuclear devastation over his or her lifetime."

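As a rough check on the arithmetic in that quote, here is a minimal sketch of how an annual risk compounds over a lifetime. It assumes a constant annual probability that is independent from year to year, and an 80-year lifetime; the quote states neither assumption, and the function name `cumulative_risk` is my own.

```python
def cumulative_risk(annual_risk: float, years: int) -> float:
    """Probability of at least one event over `years`, assuming a constant,
    independent annual probability `annual_risk` (a simplifying assumption)."""
    return 1.0 - (1.0 - annual_risk) ** years


LIFETIME_YEARS = 80  # assumed lifetime; the quote does not specify one

for annual in (0.01, 0.001):
    risk = cumulative_risk(annual, LIFETIME_YEARS)
    print(f"{annual:.1%} per year over {LIFETIME_YEARS} years: {risk:.1%}")
```

Under these assumptions, one percent per year comes to roughly 55 percent over 80 years, and 0.1 percent per year to just under 8 percent. Stretching the lifetime to 100 years brings the lower figure to about 9.5 percent, closer to the quote's "almost ten percent".
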
The past half decade has seen a dramatic erosion in the taboo around threatening the use of nuclear weapons, even as they have proliferated across the globe. This threat will only be compounded and accelerated in the coming years as technological advance and proliferation continue to expand the risk of nuclear Armageddon.

Yet when I look at Open Philanthropy's grants for nuclear deterrence, all I see are a few tangentially related longtermist grants on biosecurity. The term "nuclear" seems to come up only where organizations like the Nuclear Threat Initiative work on biosecurity grants. Why is this the case? What is the logic there? This seems like an obvious blind spot.

I wonder if EA is subject to the same myopia and complacency that has gripped our civilization since the end of the Cold War. The nuclear threat feels dated and tired. Yet a clear-eyed analysis shows that the threat has only grown.

The war in Ukraine may very well be the start of World War Three. Do any of us have the right to be surprised? What then is the EA response to this threat? Worship at the cult of the coming AGI singularity and escape into cyberspace? Pat ourselves on the back for the number of bednets we've funded? I'm being ungenerous in my rhetoric, but what, seriously, are we doing, people?

What is preventing us from showing much greater leadership and courage in the face of what may very well be the end of humanity? Refuges and bunkers are great. Why not more? Why not take a more proactive role? Why not work to address the underlying threat at its source?

I think this is caused by two factors: 1) longtermism has decided to distinguish between existential risks and global catastrophic risks, and 2) there is a much stronger general culture of denial around nuclear risk than around any other risk.

When, in The Precipice (which I take to have been broadly read), Toby Ord says that AI is a 1/10 risk and that nuclear war and climate change are both 1/1000, and does so essentially on the basis that he views the latter two as survivable, this creates the justification to write them off. (This isn't to say this is where the trend began.)

If you talk to most people, they just don't believe nuclear war is likely. As a result, it seems like nuclear war and unaligned artificial intelligence are both treated as speculative, except that nuclear war has a long history of not happening. This is of course nonsense, as nuclear weapons exist right now.

If you sign up for the 80,000 Hours newsletter you can get a copy for free; it is also on libgen.