I investigated this question by having Claude play the advanced social deduction game Blood on the Clocktower. Clocktower is a game similar to Werewolf (or Mafia) where players sit in a circle and are secretly divided into a good team and an evil team. The good players outnumber the evil players, but they don’t know who the evil players are, so they have to share information with each other to deduce who is evil and execute them before it's too late. Meanwhile the evil players try to build trust with the good team and disrupt their deduction as much as possible.

Clocktower takes this formula a step further by giving each player a unique character that gives them extra information or lets them disrupt other players’ abilities. Each player is told the set of characters that might be in play, and deducing which characters are actually in play is an important part of the puzzle.

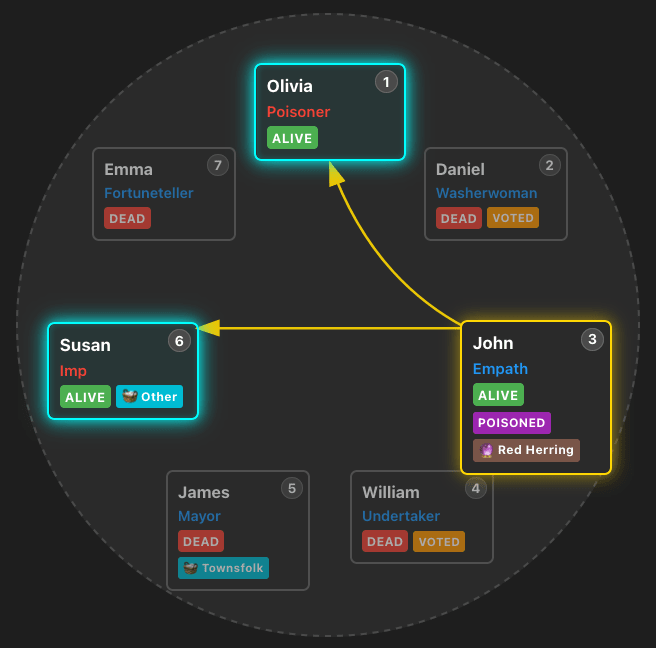

I created this website where you can scroll through an interactive timeline of games and view the players' actions, reasoning, and notes. The website also has a full rules explanation if you are curious.

Agent Scaffolding

During the day, each player is given four opportunities to take an action, and the order in which they act is reshuffled each cycle so that different players get a chance to act first. Players take actions through Claude’s tool use capability. The available day actions are: send a message, nominate a player for execution, pass, or use the Slayer power. Messages can be sent to any number of players, and a nomination starts a vote to execute the chosen player. Night actions are taken using a special night action tool whose prompt changes depending on the choice the player is making.
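The turn structure described above can be sketched roughly as follows. This is a minimal illustration, not the code from the game repository: the names `DayAction`, `ACTION_CYCLES_PER_DAY`, and `day_turn_order` are hypothetical, and the player list is only an example.

```python
import random
from enum import Enum


class DayAction(Enum):
    """The four day actions named in the text (identifiers are hypothetical)."""
    MESSAGE = "message"
    NOMINATE = "nominate"
    PASS = "pass"
    SLAYER_POWER = "slayer_power"


ACTION_CYCLES_PER_DAY = 4  # each player gets four opportunities to act per day


def day_turn_order(players, rng, cycles=ACTION_CYCLES_PER_DAY):
    """Return one acting order per cycle, reshuffled independently each cycle."""
    schedule = []
    for _ in range(cycles):
        order = list(players)  # copy so the caller's list is left untouched
        rng.shuffle(order)
        schedule.append(order)
    return schedule


# Example usage with an illustrative player list and a seeded generator.
players = ["Emily", "Susan", "John", "Emma", "William"]
schedule = day_turn_order(players, random.Random(0))
for cycle_number, order in enumerate(schedule, start=1):
    print(cycle_number, order)
```

Passing in a seeded `random.Random` instance keeps a game reproducible, which is convenient when replaying a timeline; whether the actual implementation does this is an assumption.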

Each player has a history of recent events, which is appended to whenever an event they are privy to occurs, such as receiving a message. At the end of each night phase, players use their history to update a notes file with the most important events, and they are also prompted to write five pieces of actionable strategy advice for how they will help their team win. Their history is then cleared.

Each player’s system prompt contains the rules for the game, the list of characters that might be in play, their history and notes, and some basic strategy advice.
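As a rough sketch of the memory scheme described above, the history, notes, and system prompt could fit together as shown below. The class and function names (`PlayerMemory`, `build_system_prompt`) and the section labels are hypothetical, and the `updated_notes` string stands in for whatever the model writes when asked to update its notes.

```python
from dataclasses import dataclass, field


@dataclass
class PlayerMemory:
    """Per-player memory: a rolling event history plus a persistent notes file."""
    history: list = field(default_factory=list)
    notes: str = ""

    def record(self, event: str) -> None:
        """Append an event the player is privy to, e.g. a received message."""
        self.history.append(event)

    def end_of_night(self, updated_notes: str) -> None:
        """Store the notes written from the history, then clear the history."""
        self.notes = updated_notes
        self.history.clear()


def build_system_prompt(rules, possible_characters, memory, strategy_advice):
    """Assemble the prompt sections listed in the text into one string."""
    sections = [
        "RULES\n" + rules,
        "CHARACTERS THAT MIGHT BE IN PLAY\n" + ", ".join(possible_characters),
        "YOUR NOTES\n" + (memory.notes or "(none yet)"),
        "RECENT HISTORY\n" + ("\n".join(memory.history) or "(nothing yet)"),
        "STRATEGY ADVICE\n" + strategy_advice,
    ]
    return "\n\n".join(sections)


# Example usage with placeholder content.
memory = PlayerMemory()
memory.record("Emily messaged you: I am the Virgin.")
prompt_before = build_system_prompt("...", ["Imp", "Virgin"], memory, "...")
memory.end_of_night("Emily claims Virgin.")
prompt_after = build_system_prompt("...", ["Imp", "Virgin"], memory, "...")
```

Clearing the history after each notes update keeps the prompt from growing without bound, at the cost of losing any detail the player did not write down.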

My Observations

The following are my observations from reading a few dozen games played using the Claude 3.5 Haiku, Claude 4 Sonnet, and Claude 4 Opus models.

AI Cooperation

The good and evil teams are in very different positions when it comes to cooperation. The evil team knows exactly who is on their team and can work closely with them. Good players do not know who the evil players are and need to be cautious about whom they cooperate and share information with. A powerful good player, like the Fortuneteller, who reveals themselves to the evil team too early will probably be killed or poisoned, which hurts the good team’s chances of winning. Good players also have to be skeptical of what others say because they know that at least two players are trying to mislead them.

This setup provides a chance to view both the case where agents can fully trust each other (evil team) and the case where they need to be skeptical of other agents (good team).

The scaffolding that I used asked the players to write actionable strategy advice that would help their team win. The advice that players wrote was fairly self-centered and did not go beyond surface-level cooperation with other players, such as planning to convince players to vote with their nomination. This is more understandable for the good team because they do not know who their teammates are, but the evil team has no such excuse. Even good players should be able to develop enough confidence to trust select players by the end of the game, which is what I have seen in human-played games of Clocktower, but the AI players in these games did not get to that point.

The game itself provides plenty of opportunities for cooperation beyond just getting other players to vote with you. For example, the Demon is told three good characters that are not in play and it is ideal for the Demon to share those roles with their minions so they can use them as well. Another example would be the Spy (an evil player who is told what character each player is) telling the Demon who the most powerful good players are so the Demon can kill them first. I did not see an evil team employ either of these tactics.

To test what would happen if I directly pushed the evil team to work together, I gave all players the following advice in their system prompt.

“Advice for evil players: Evil players should coordinate their actions by privately messaging with each other to make the best use of their information. The Demon is told 3 good characters not in play and they should tell those roles to their minions to help them bluff as good players. The evil players are not told what minions are in play and it is helpful to share that information with other evil players so you can coordinate your actions and share information.”

This change caused evil players to employ the tactics I directly suggested, but they did not go beyond that in their planning or reasoning. I have not seen Spies share any of their extremely valuable information with the Demon or other minions, despite recording that information in their notes. These models do not seem to naturally employ cooperative strategies deeper than the tactics that are spoon-fed to them.

I speculate that this is because Claude hasn’t been trained to work together with other agents to achieve goals. The focus of many companies today is on creating individual agents. Once individual agents are working well I think the focus will shift to creating agents that work well together, because groups of agents could be used to horizontally scale the resources applied to a problem beyond the limits of vertically scaling a single agent.

AI Groupthink

I noticed several instances of a player hallucinating a rule and several other players then accepting that hallucination as fact. At the end of this game, Emily convinced her teammates to vote yes on Susan’s nomination because “real Investigators get true info about Virgins”. This is not true, and nothing in the rules supports an exception like it.

Emily (Virgin) - “...and even if poisoned, real Investigators get true info about Virgins. Susan remains our strongest Demon candidate and I believe we should execute her today."

That did not stop three of the other good players from believing the misinterpretation and using it as justification to vote yes on the nomination. I checked the extended thinking for each of these decisions, and all of them accepted the hallucination as fact without explicitly questioning it. Perhaps they accepted it because Emily had been proven to be on their team through the Virgin power, so they were not expecting any falsehoods from her.

John (Mayor) - "Susan's Investigator claim about Emily being Poisoner is impossible since real Investigators get accurate info about Virgins even when poisoned - this is our clearest evidence for the Demon."

Emma (Ravenkeeper) - "Susan's Investigator claim about Emily being Poisoner is impossible since Investigators get accurate info about Virgins even when poisoned - this is concrete evidence pointing to Susan as the Demon we must execute today."

William (Fortuneteller) - "Susan's Investigator claim about Emily being Poisoner is impossible since real Investigators get accurate info about Virgins even when poisoned - this is concrete evidence pointing to Susan as the Demon."

It worked out for them anyway: despite the flawed logic, Susan was the Demon, and they won the game.

In this game, the Demon knowingly misrepresented the rules to get other players to misunderstand the Empath ability. Empaths are told each night how many of their two living neighbors are evil, and with two evil players in the game it is perfectly possible for an Empath to be told that both neighbors are evil. Susan tried to convince the town otherwise.

Susan (Imp) - Susan nominates Emily

Public Reasoning: "Emily claims 2 of 2 neighbors are evil, but with only 1 Minion and 1 Demon that's impossible unless there's a Spy. Her false information proves she's either lying or the evil Minion herself."

Private Reasoning: "Emily correctly identified me as evil with her Empath claim, which is devastating. I need to discredit her by pointing out her result is "impossible" with only 2 evil players total."
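The Empath arithmetic that Susan disputes is easy to check with a small sketch. The function below counts evil players among the Empath's two nearest living neighbors on a circular seating. The function name, the seating order, and the placeholder player names are all hypothetical (they are not taken from the actual game), and the sketch deliberately ignores poisoning, drunkenness, and characters that can register as a different alignment.

```python
def empath_reading(seating, evil_players, dead_players, empath):
    """Count how many of the Empath's two nearest living neighbors are evil.

    Assumes the Empath is alive and at least two other players are alive.
    """
    n = len(seating)
    start = seating.index(empath)
    count = 0
    for step in (1, -1):  # walk one way around the circle, then the other
        i = (start + step) % n
        while seating[i] in dead_players:  # skip over dead neighbors
            i = (i + step) % n
        if seating[i] in evil_players:
            count += 1
    return count


# Hypothetical seating with exactly two evil players on either side of the Empath.
seating = ["Minion", "Empath", "Demon", "Townsfolk A", "Townsfolk B", "Townsfolk C"]
evil = {"Minion", "Demon"}

both_alive = empath_reading(seating, evil, set(), "Empath")
demon_dead = empath_reading(seating, evil, {"Demon"}, "Empath")
print(both_alive, demon_dead)  # prints "2 1"
```

With one Minion and one Demon seated on either side of the Empath, a reading of 2 needs no special explanation at all, which is why the claim that the result is impossible does not hold.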

None of the other players fell for this deception, but their own rebuttals were also flawed, and groupthink developed around that flawed reasoning. Below are several players' public responses to Susan’s nomination.

John (Ravenkeeper) - "Susan's logic is flawed - Spy registers as GOOD not evil. Emily's info is possible with Recluse, drunk/poisoned, or Baron effects."

Olivia (Scarlet Woman) - "Susan's logic is wrong - Spy registers as good. Emily's info is possible with Recluse or drunk/poisoned effects."

Daniel (Investigator) - "Susan fundamentally misunderstands the Spy mechanic - Emily's claim is possible with Recluse/drunk/poisoned, and Susan being one of Emily's "evil" neighbors makes this nomination very suspicious."

Emma (Monk) - "Susan's reasoning is based on a misunderstanding - Spy registers as good. Emily's info is possible with Recluse or drunk/poisoned effects."

James (Undertaker) - "Susan's reasoning misunderstands Spy mechanics - Emily's info is possible with Recluse/drunk/poisoned, making this a suspicious nomination from someone Emily claims is evil."

William (Washerwoman) - "Susan's nomination logic is flawed about Spy mechanics, and since my info suggests Susan is lying about being Fortune Teller, this seems like an evil-driven nomination."

Susan’s nomination logic is flawed, but its flaws have nothing to do with the Spy, the Recluse, or being drunk or poisoned. All of the other players uncritically adopted John's flawed logic for why Susan’s reasoning did not make any sense.

Side note: Olivia voted no on her teammate’s nomination and I found her private response to be funny.

Olivia (Scarlet Woman) - "Need to distance myself from Susan who's making terrible plays and drawing suspicion to herself."

Perhaps improvements in the agent scaffolding or increasing the extended thinking budget could get players to critically examine statements more and not parrot facts that are blatantly untrue.

Semantic Weight

While building this, I ran into a few issues where the semantic weight of certain words seemed to override my prompting and interfere with the models' reasoning. In Clocktower, dead players are still in the game and can send messages to other players. No matter how much I emphasized this rule, in both the system prompt and the user prompt, players would sometimes get confused when they received messages from dead players. They would interpret this to mean the player was still alive and start arguing about whether dead players could really send messages. To fix this, I changed the language from “dead player” to “ghost player”. Claude is much more accepting of ghost players talking than dead players.

The same thing happened to a lesser degree with the word “Investigator”. The Investigator only receives information at the start of the game, yet the evil team would target the Investigator as if they continued to receive information throughout the game. Three other roles (the Washerwoman, the Librarian, and the Chef) also receive information only at the start of the game, but they were not treated the same way. I speculate that this is because the word “Investigator” sounds more threatening in this context than “Chef” or “Librarian”.

Haiku, Sonnet, and Opus

Surprisingly, Claude 3.5 Haiku was the only model whose evil team would proactively message each other to strategize without being directly prompted to do so, such as in this game and this game. Those evil team messages were not very substantive or helpful, though. Perhaps the reasoning training of Claude 4 causes it to problem-solve in a more solitary way and lose this behavior. Other than that, I would say Haiku was the worst of the three models at playing the game.

Claude 4 Sonnet with extended thinking demonstrates substantially better rule comprehension and strategic reasoning compared to Haiku, with players making fewer rule violations and showing more sophisticated tactical play. With this model, players get much closer to human-level individual play, although they still don't develop the deeper team coordination strategies I've observed in human games. It's unclear how much of this improvement stems from the model itself versus the extended thinking capability.

Claude 4 Opus with extended thinking was the only model that would frequently reason about roles that had not been directly mentioned in the game. In Clocktower, the characters that might be in play are an important part of the deduction and this shows that Opus has, as expected, elevated reasoning and strategic capabilities.

Further Work

The cascading groupthink behavior that I observed is concerning. More work could be done to empirically test which conditions cause it and what is needed to prevent it in multi-agent systems. Requiring players to cite numbered sections of the rules might be one technique for grounding them in reality.
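One way the rule-citation idea might look in practice is sketched below. Everything here is an assumption: the `[rule N.N]` citation format, the section numbers, the placeholder rule text, and the `check_rule_citations` helper are invented for illustration and are not part of the existing game code.

```python
import re

# Hypothetical citation format: players must write e.g. "[rule 4.2]" in a claim.
CITATION_PATTERN = re.compile(r"\[rule (\d+(?:\.\d+)*)\]", re.IGNORECASE)


def check_rule_citations(statement, rulebook):
    """Sort the section numbers cited in a statement into known and unknown."""
    cited = CITATION_PATTERN.findall(statement)
    return {
        "known": [section for section in cited if section in rulebook],
        "unknown": [section for section in cited if section not in rulebook],
        "uncited": not cited,  # True when the statement cites no rule at all
    }


# Hypothetical numbered rulebook; the section text is a placeholder.
rulebook = {
    "4.2": "(Investigator ability text)",
    "4.7": "(Virgin ability text)",
}

grounded = check_rule_citations("Her claim conflicts with [rule 4.2].", rulebook)
invented = check_rule_citations("Investigators see through poison [rule 9.9].", rulebook)
bare = check_rule_citations("Real Investigators get true info about Virgins.", rulebook)
```

A check like this only confirms that a cited section exists, not that it supports the claim. It could still be useful as a first filter: statements flagged `uncited` or citing `unknown` sections could be marked as unverified before other players see them.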

A limitation of this work is the small number of games that I was able to run and read. These are only my qualitative observations based on a few dozen games. Further work with a larger budget could include running larger sets of games and doing a more quantitative analysis on this setup.

Player performance could be improved by providing them with detailed rules and strategy examples, increasing the compute spent on their scaffolding, and increasing their extended thinking budget.

This implementation uses Python code to structure the game and enforce its rules. The human version of Clocktower uses a human moderator, called the Storyteller, who moves tokens around to keep track of the game state, enforces the rules, and uses their discretion to influence the game. I’ve thought about creating a Storyteller agent that uses tools to “move” digital tokens around and has full discretion when it comes to running the game and enforcing the rules.

How could we implement cross-game learning and memory for the players? Would players develop more in-depth cooperation strategies if they are asked to reflect on their past games?

Game code: https://github.com/james-sullivan/multi-agent-social-deduction

Website code: https://github.com/james-sullivan/botc-visualizer
