(This article was originally posted on my website here. I'd love to get an Effective Altruist critique of it. In general, I think long-termist EAs should give meta x-risk more attention. I'm sorry the images are so small.)

“Since Auschwitz we know what man is capable of. And since Hiroshima we know what is at stake." —Viktor Frankl, Man's Search for Meaning

"The greatest shortcoming of the human race is our inability to understand the exponential function." —Al Bartlett, Professor at University of Colorado

The goal of this article is to help readers understand the concept of Meta Existential Risk. I'm surprised that (essentially) nothing has been written on it yet.

Before understanding Meta Existential Risk, it's helpful to understand Existential Risks (x-risks). Existential Risks are possible future events that would "wipe out" all sentient life on Earth. A massive asteroid impact, for example. Existential Risks can be contrasted with non-existential Global Catastrophic Risks, which don't wipe out all life but produce lots of instability and harm. A (smaller) asteroid impact, for example :).

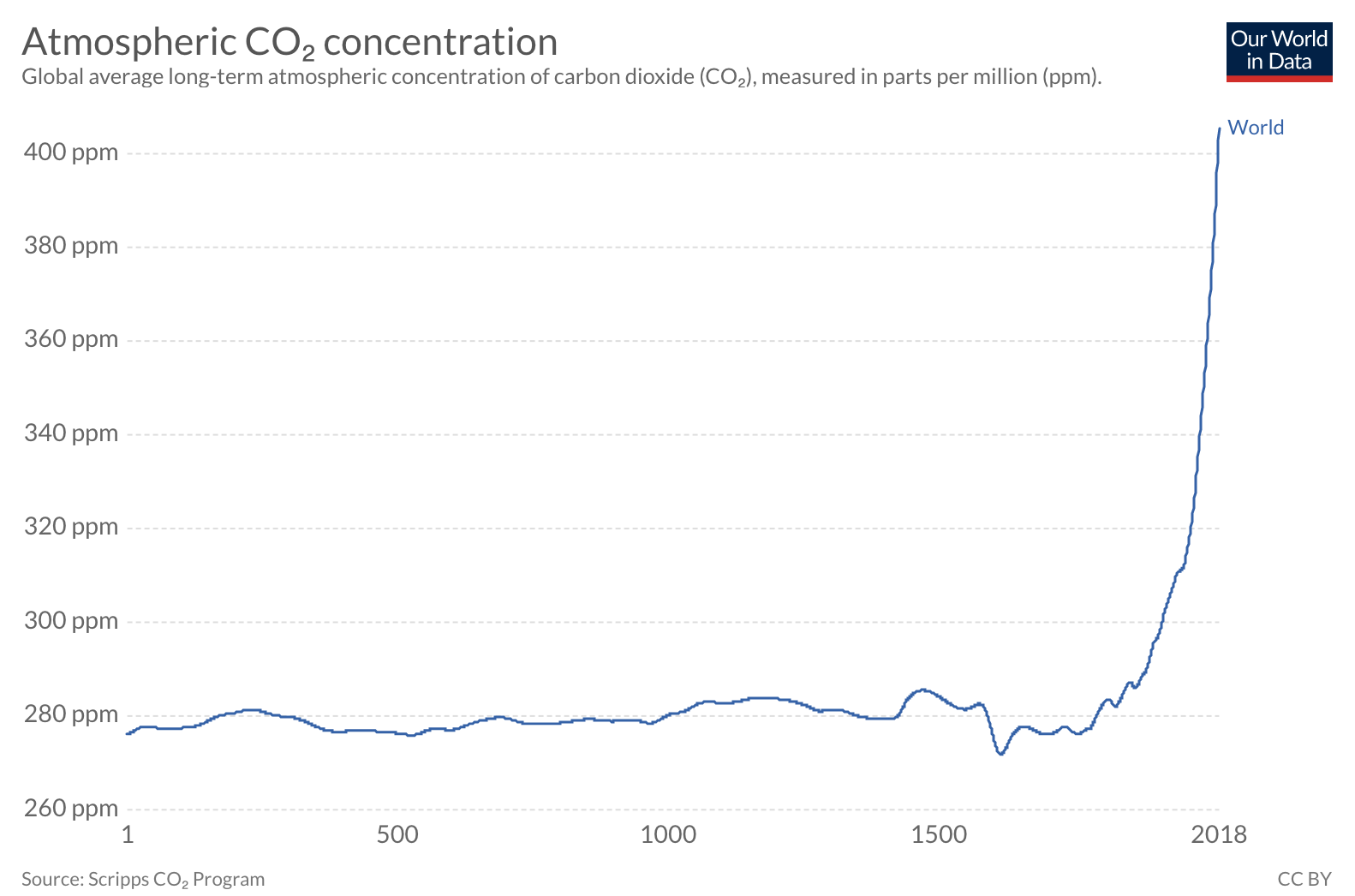

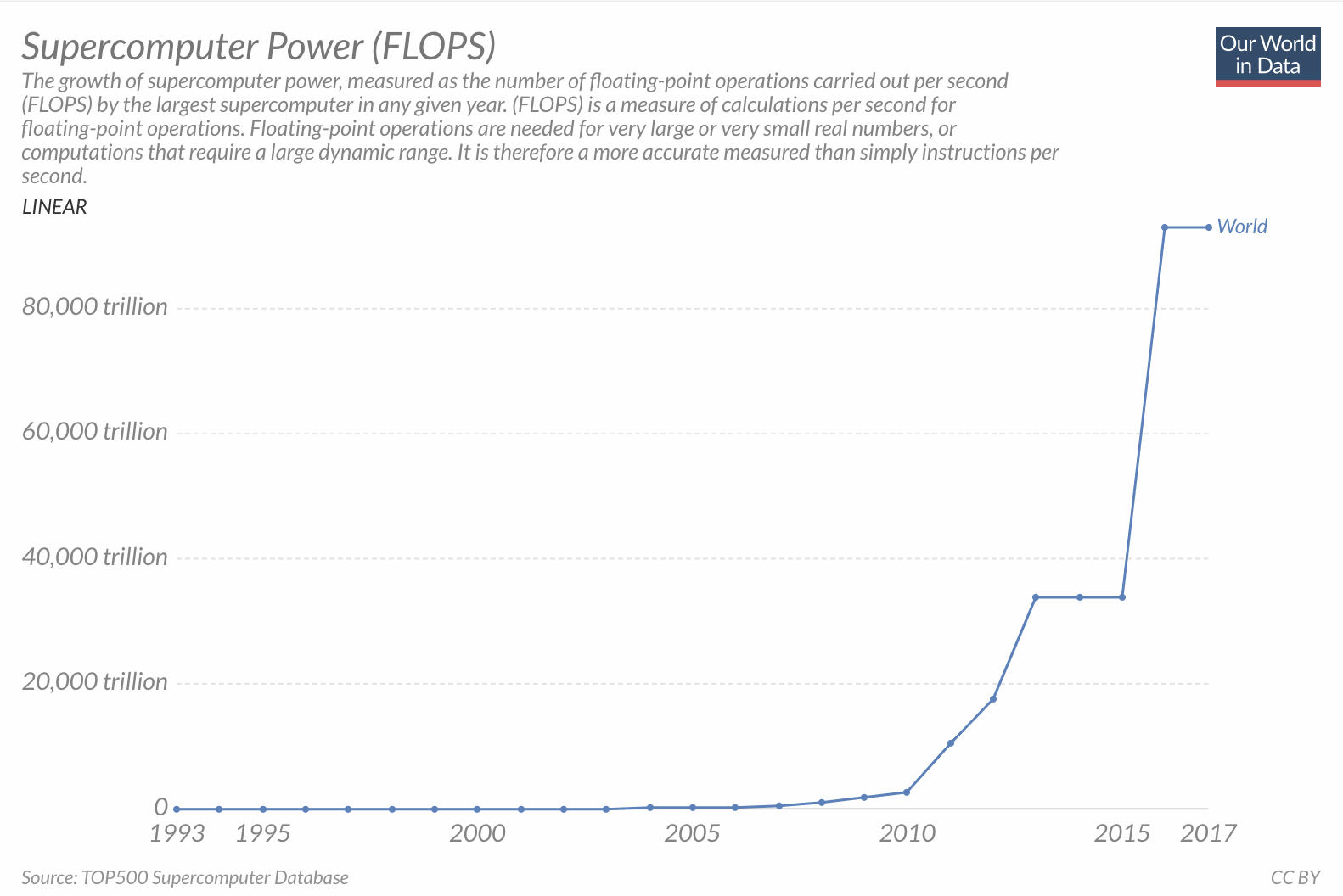

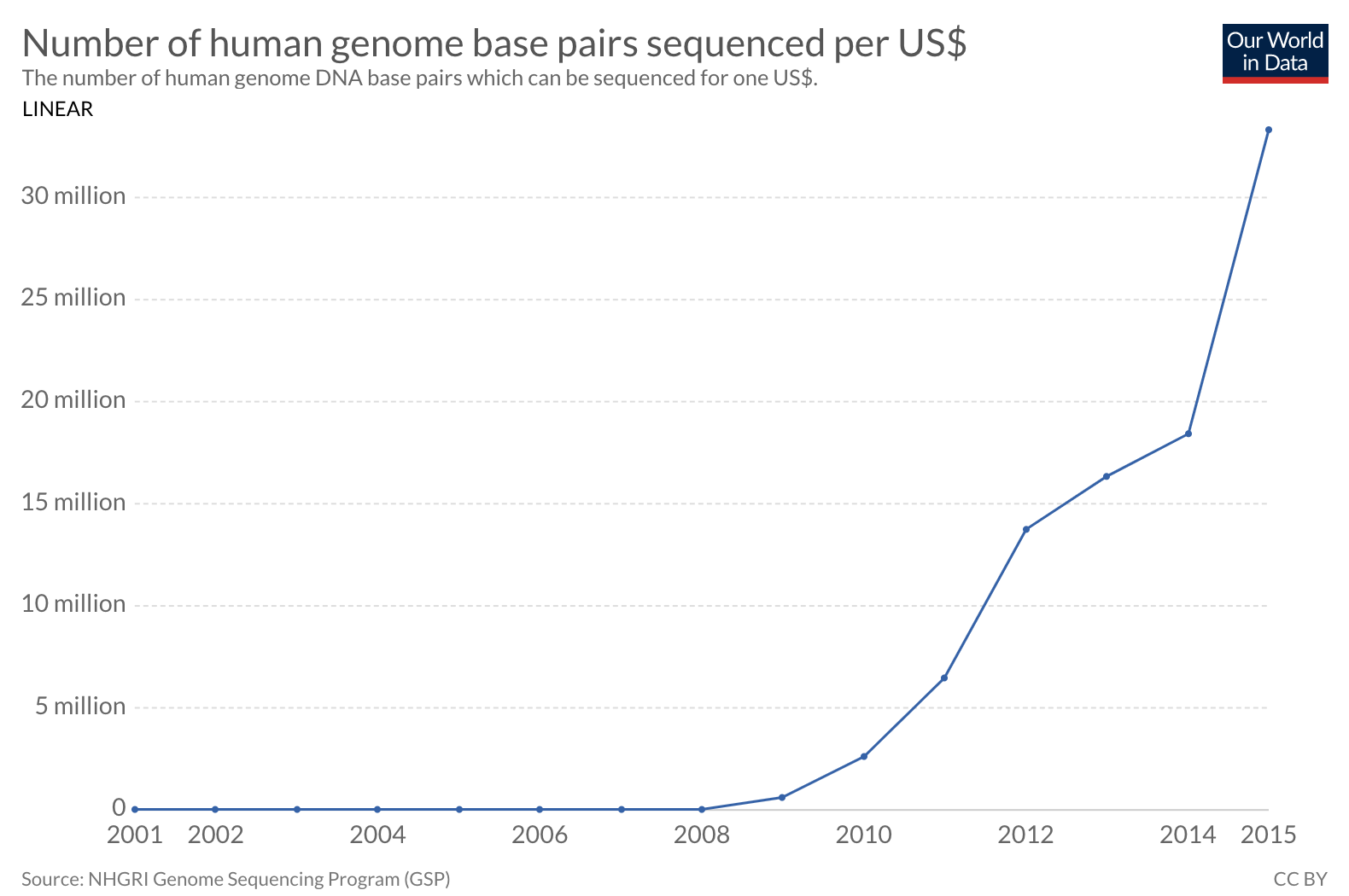

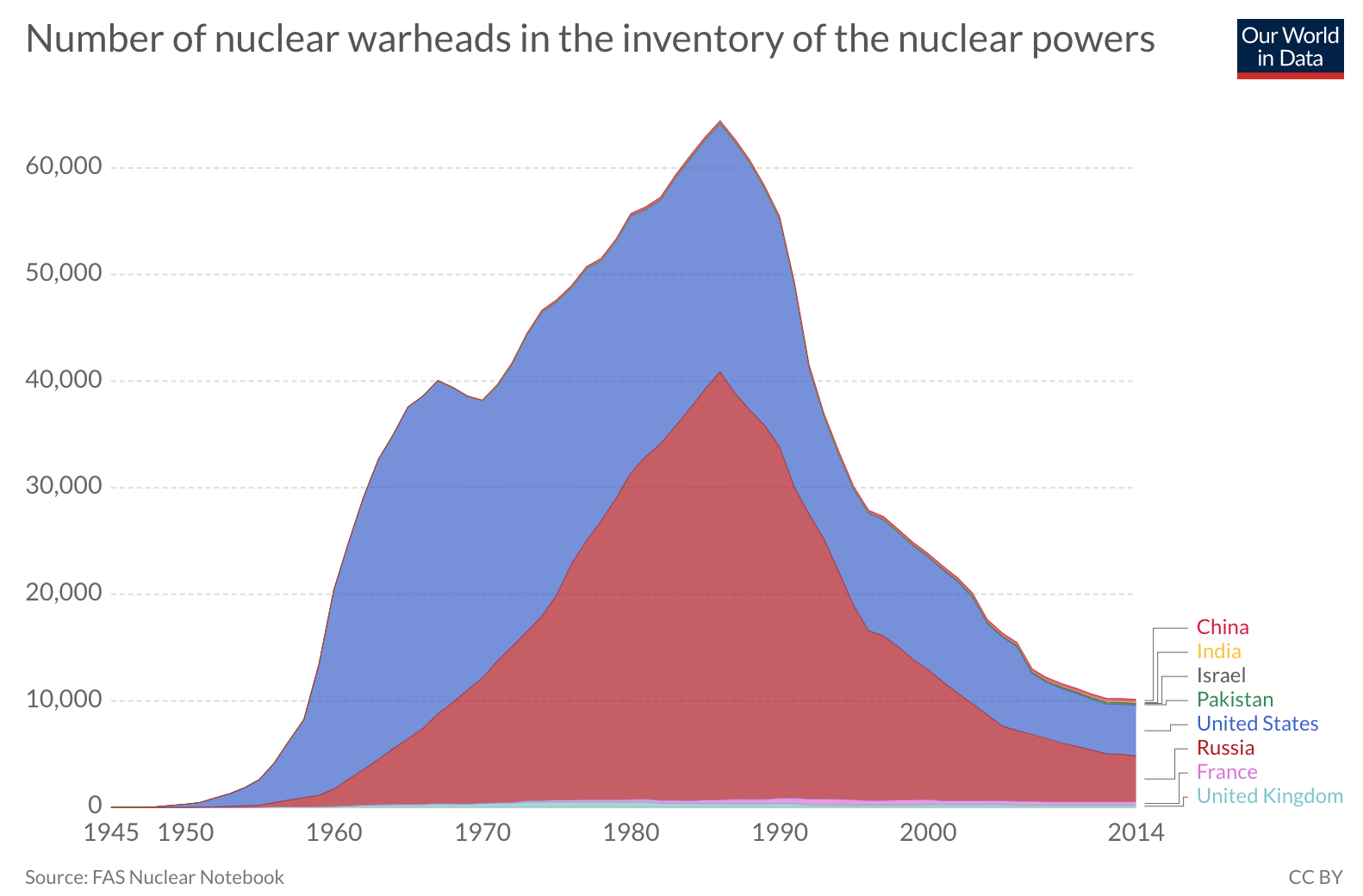

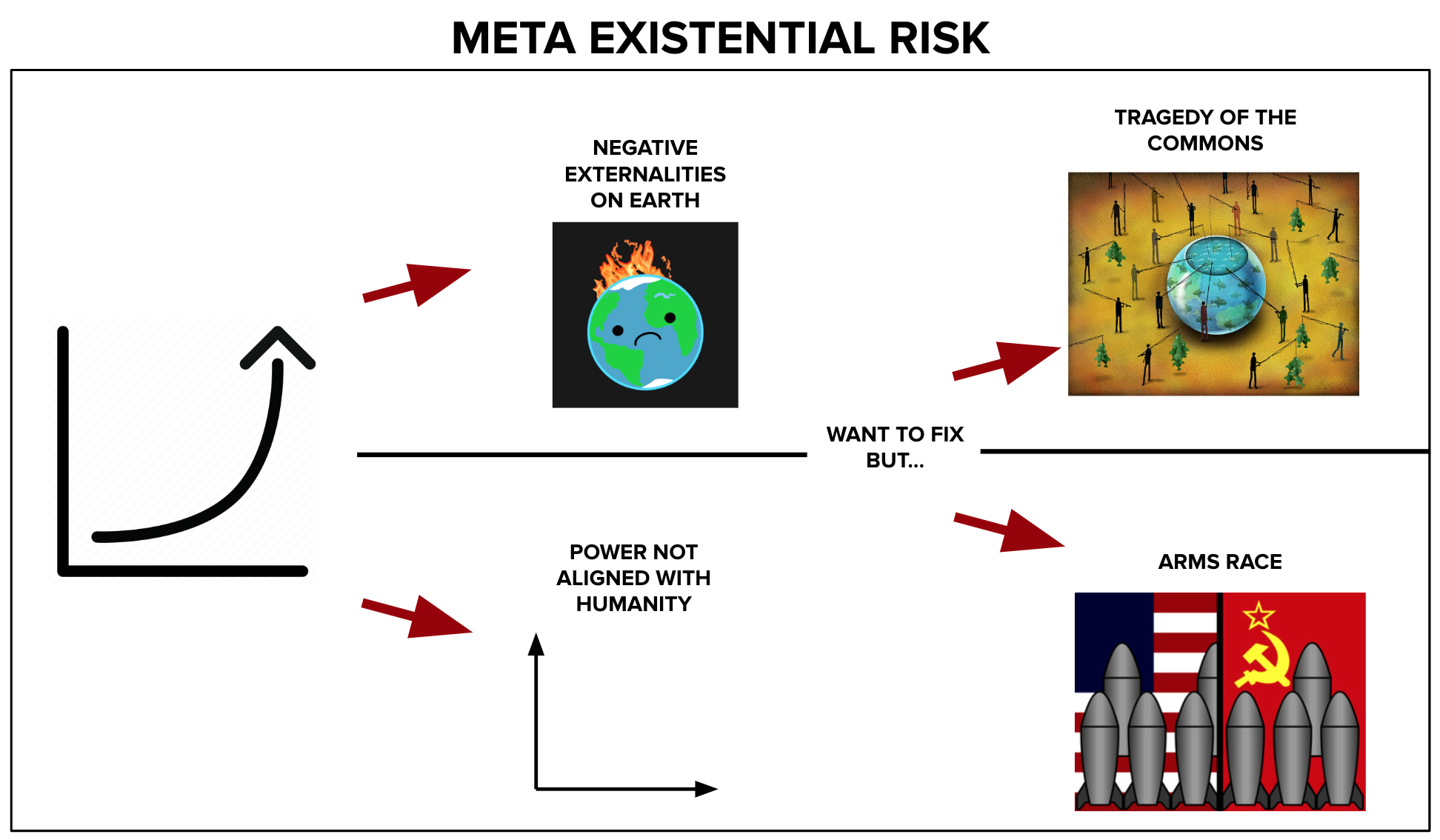

Meta Existential Risk gets at the question: why do existential risks occur? The answer: exponential curves combined with bad game theory. Let's look at our 4 primary existential risks. As you can see, each one is an exponential curve.

Climate Change

Artificial Intelligence

Biological Technology

Nuclear Technology

Exponential Curves Generate X-Risks

These x-risks fall into two primary categories:

- Magnitude for Destruction (AI, BioTech, Nukes): In this case, the x-risk is deadly simply because it represents a massive increase in power. There is a lot of power (destructive or not) in 60,000 nukes, super cheap genomic sequencing, or robust AI. And, as the Orthogonality Thesis states: power is orthogonal to alignment with human values. More power doesn't necessarily mean more responsibility.

- Negative Externalities (Climate Change): In this case, the x-risk is deadly not because of "the tech itself", but because of the tech's negative externalities. The Industrial Revolution (inadvertently) pumped a lot of CO2 into the atmosphere, which has led to climate change. The problem is not factories or planes or whatever, but rather the ignored negative externality.

(Daniel Schmachtenberger defines the 1st issue as: "Rivalrous (win-lose) games multiplied by exponential technology self terminate." And the 2nd issue as: "The need to learn how to build closed loop systems that don’t create depletion and accumulation, don’t require continued growth, and are in harmony with the complex systems they depend on.")

These risks might not be such a big deal if we were able to look at the negative impact of the exponential curve and say "oh, let's just fix it". The problem is that we can't because we're stuck in two kinds of bad game theory problems (often called "coordination problems").

- Our "Power" Problems are Arms Races: For powerful new tech, all of the players are incentivized to make it more powerful without thinking enough about safety. If one player thinks about safety but goes slower, then the other player "wins" the arms race. For example, Russia and the U.S. both build more nukes, Google and Baidu create powerful AI as fast as possible (not as safe as possible), and CRISPR companies do the same.

- Negative Externalities are a Tragedy of the Commons: It is in each country's best interest to ignore/exploit the global commons because everyone else is doing the same. If one country imposes a carbon tax, limits its fishing, etc. and no one else does, then it "loses".
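To make the arms race logic concrete, here is a minimal sketch of a two-player game. The payoff numbers are my own illustrative assumptions (not data), chosen so the game has a prisoner's dilemma shape, and the names `PAYOFFS`, `best_response`, and `nash_equilibria` are hypothetical helpers for this example only.

```python
# Illustrative payoffs only: (row player's payoff, column player's payoff).
# Higher is better. The numbers are assumptions picked to form a prisoner's dilemma.
PAYOFFS = {
    ("safe", "safe"): (3, 3),  # both go slowly and carefully
    ("safe", "race"): (0, 5),  # the careful player "loses" the race
    ("race", "safe"): (5, 0),
    ("race", "race"): (1, 1),  # both race: risky for everyone
}
MOVES = ("safe", "race")


def best_response(opponent_move):
    """Return the move that maximizes the row player's payoff against a fixed opponent move."""
    return max(MOVES, key=lambda my_move: PAYOFFS[(my_move, opponent_move)][0])


def nash_equilibria():
    """Return pure-strategy profiles where neither player gains by deviating alone.

    The game is symmetric, so the column player's best response can reuse best_response.
    """
    equilibria = []
    for row_move in MOVES:
        for col_move in MOVES:
            if best_response(col_move) == row_move and best_response(row_move) == col_move:
                equilibria.append((row_move, col_move))
    return equilibria


if __name__ == "__main__":
    for move in MOVES:
        print(f"Best response to {move!r}: {best_response(move)!r}")
    print("Equilibria:", nash_equilibria())
```

Under these assumed payoffs, racing is the best response to either move, so both players end up at ("race", "race") even though ("safe", "safe") would leave each of them better off. The same structure can be read as a commons problem by relabeling "race" as "exploit" and "safe" as "conserve".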

This is the root of meta x-risk: exponential curves with negative impacts that humans need meta-coordination to stop.

These problems don't just hold for these four specific exponential curves. Those are just the four that we're dealing with today. There will be more exponential curves in the future that will move more quickly and be more powerful. This leads to our final definition.

Meta Existential Risk is the risk to humanity created by (current and future) exponential curves, their misalignment with human values and/or our Earth system, and our inability to stop them given coordination problems.

Categorically Solving Meta X-Risk

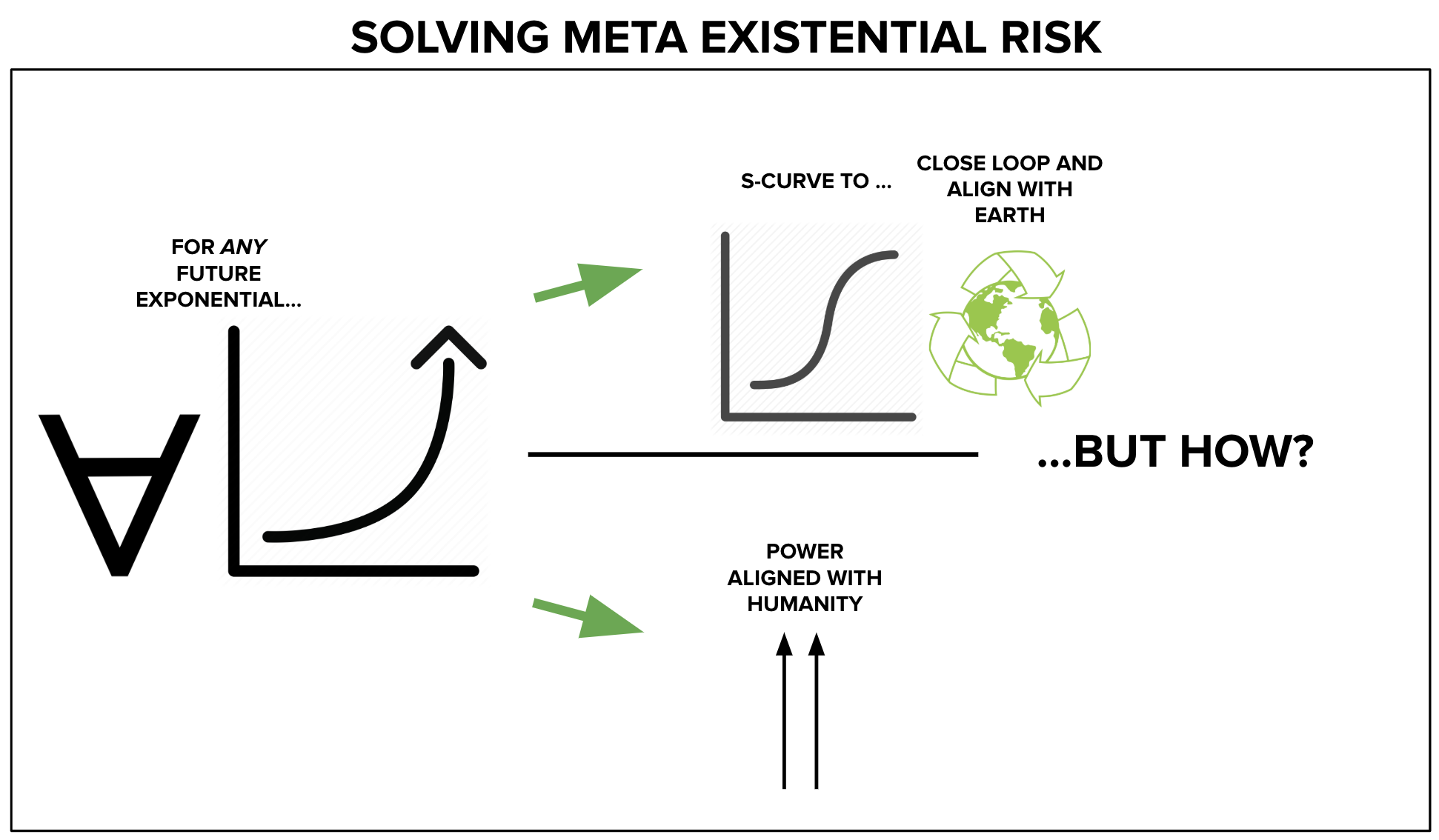

I won't dive deeply into my hypotheses for how to "categorically solve" meta x-risk here. However, I'll show you what this looks like in a directional sense. For any current/future exponential:

- To solve the negative externality problem, we need to turn exponentials into S-curves that create closed loop systems with our biological substrate.

- To solve the power problem, we need to ensure our exponentials align with human values.
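As a rough illustration of the first point, here is a small sketch comparing unchecked exponential growth with logistic growth, one common kind of S-curve. The logistic model, the growth rate, and the ceiling `capacity` are all assumptions for illustration. They are not a claim about how any real system behaves, and a logistic ceiling is only a stand-in for "closed loop", not a definition of it.

```python
import math


def exponential(t, start=1.0, rate=0.5):
    """Unchecked exponential growth: start * e^(rate * t)."""
    return start * math.exp(rate * t)


def logistic(t, start=1.0, rate=0.5, capacity=100.0):
    """Logistic (S-curve) growth that levels off at `capacity`.

    Closed-form solution of dx/dt = rate * x * (1 - x / capacity) with x(0) = start.
    `capacity` is a hypothetical ceiling set by the underlying substrate.
    """
    return capacity / (1.0 + (capacity - start) / start * math.exp(-rate * t))


if __name__ == "__main__":
    for t in (0, 5, 10, 20, 40):
        print(f"t={t:>2}  exponential={exponential(t):.3g}  logistic={logistic(t):.3g}")
```

Early on the two curves are nearly indistinguishable. They only diverge as the logistic curve approaches its ceiling, which is part of why it is hard to tell from inside a curve which kind you are on.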

Hope this article was helpful in understanding meta x-risk. Comment below with your thoughts/feedback!

Notes:

- Notice how similar the two solves for meta x-risk are. Both are through-time vectors that align the exponential with its substrate. In case 1, it's aligning the exponential with the physical world (Nature 1.0), i.e. not going too fast and replenishing the complex biological system. In case 2, it's aligning the exponential with human values (Nature 2.0). (Nature 3.0 is AI/tech btw.) I'm not totally sure, but this feels very connected to Forrest Landry's idea of continuity.

- I wanted to note this more explicitly in the visuals, but there's another key way to conceptualize meta x-risk: as a natural conclusion of entropy. It's easier to destroy than to create. (1000 years to make a city, a single a-bomb to destroy it in minutes.)

- In the article above I make it seem like all Negative Externalities are a Tragedy of the Commons, and all Power Problems are Arms Races. I don't think this is necessarily true. Or at least, I haven't formalized why this might be true. Thoughts?

- In these possibly intense times, I like to remind myself of things like "we're incredibly insignificant". I mean damn, the universe can only harbor life for like 1 quintillionth of its existence. (See Melodysheep's Timelapse of the Future.)

- I linked to it above as well, but the best current writings on meta x-risk are from Daniel Schmachtenberger: Solving the Generator Functions of Existential Risk. Check out my podcast with him here.

For more on meta x-risk, see the Fragility section in my book. Some highlights:

- Resilience, Antifragility, and the Precautionary Principle: We should probably just assume lots of crazy stuff is going to happen, then make ourselves able to handle it.

- Ok, so we want to evolve a new Theory of Choice that keeps our exponential reality from self-terminating. What else do we need? I’d also argue that we need “Abstract Empathy”.

A more relevant curve for nuclear weapons might be "TNT equivalents" or "cost per ton TNT equivalent".

This is mostly a problem with an example you use. I'm not sure whether it points to an underlying issue of your premise:

You link to the exponential growth of transistor density. But that growth is really restricted to just that: transistor density. Growing your number of transistors doesn't necessarily grow your capability to compute things you care about, both from a theoretical perspective (potential fundamental limits in the theory of computation) as well as a practical perspective (our general inability to write code that makes use of much circuitry at the same time + the need for dark silicon + Wirth's law). Other numbers, like FLOP/s, don't necessarily mean what you'd think either.

Moore's law does not posit exponential growth in amount of "compute". It is not clear that the exponential growth of transistor density translates to exponential growth of any quantity you'd actually care about. I think it is rather speculative to assume it does and even more so to assume it will continue to.

Very interesting - you might be interested in my recent post on Corporate GCRs, where I make a similar argument.