Crossposted to LessWrong

While many previous surveys have asked about the chance of existential catastrophe from AI and/or AI timelines, none that I'm aware of have asked how the level of AI risk varies with timelines. Yet this seems like an extremely important parameter for understanding the nature of AI risk and prioritizing between interventions.

Contribute your forecasts below. I'll write up my forecast rationales in an answer and encourage others to do the same.

Epistemic status: Exploratory

My overall chance of existential catastrophe from AI is ~50%.

My split of the worlds in which we succeed is something like:

Timelines probably don’t matter that much for (1), though shorter timelines might hurt a little. Longer timelines probably help to some extent with (2) by buying time for technical work, though I’m not sure how much, since under certain assumptions longer timelines might mean less time with strong systems. One reason I’d think they matter for (2) is that they buy more time for AI safety field-building, but it’s unclear to me exactly how this will play out. I’m unsure about the sign of extending timelines for the promise of (3), given that we could end up in a more hostile regime for coordination if the actors leading the race weren’t at all concerned about alignment. I’d guess it’s slightly positive, given that longer timelines are probably associated with more warning shots.

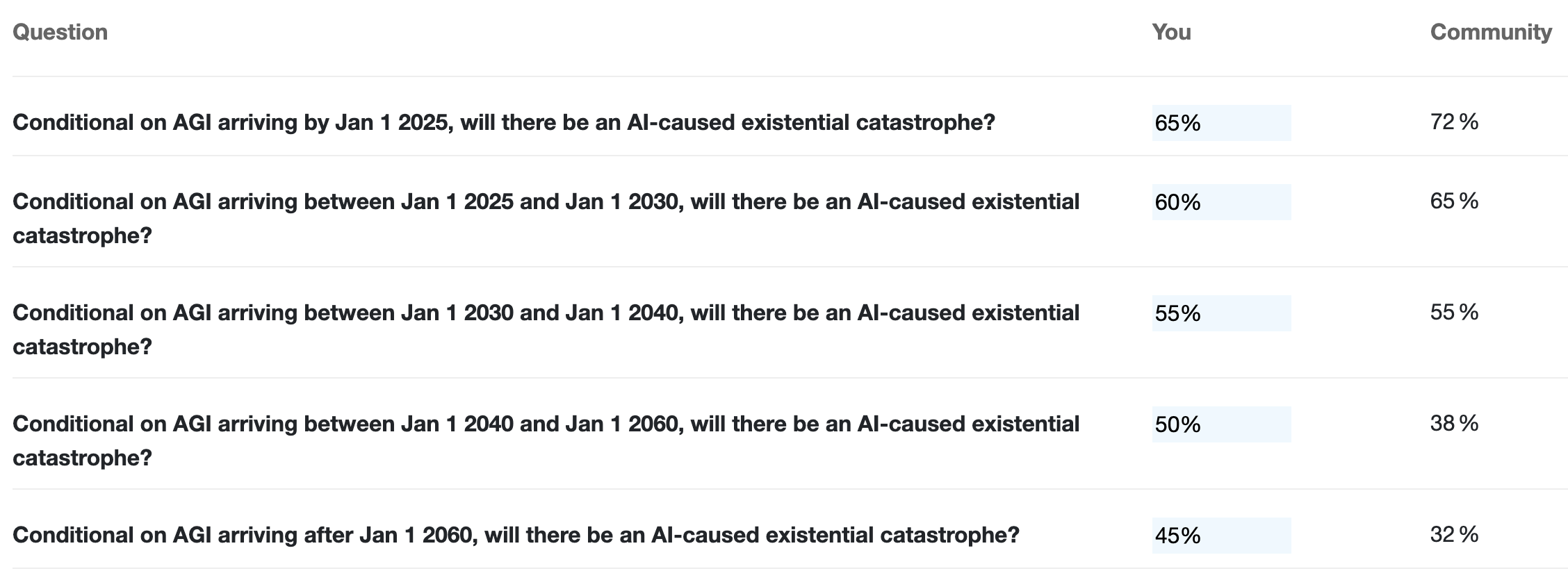

So overall, I think timelines matter a fair bit but not an overwhelming amount. I’d guess they matter most for (2). I’ll now very roughly translate these intuitions into forecasts for the chance of AI-caused existential catastrophe conditional on the arrival date of AGI (in parentheses, I’ll give a rough forecast of the probability that AGI arrives during each time period):

Multiplying out and adding gives me 50.45% overall risk, consistent with my original guess of ~50% total risk.
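
For anyone contributing their own forecasts, the "multiplying out and adding" step is the law of total probability. Here is a sketch in notation introduced only for illustration: $T_i$ is the event that AGI arrives in period $i$, and the periods are assumed to be mutually exclusive and to cover all possibilities (including AGI never arriving, if you assign that any weight).

```latex
P(\text{catastrophe}) \;=\; \sum_{i} P(T_i)\, P(\text{catastrophe} \mid T_i),
\qquad \text{where } \sum_{i} P(T_i) = 1 .
```

If your arrival-date probabilities don't sum to 1, the total will be off by the missing mass, so that's worth checking before comparing the result against an independent overall estimate.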

I didn't mean to imply that. I think we very likely need to solve alignment at some point to avoid existential catastrophe (since we need aligned powerful AIs to help us achieve our potential), but I'm not confident that the first misaligned AGI would be enough to cause this level of catastrophe (especially for relatively weak definitions of "AGI").