To prevent a dangerous conflict with AI, the answer isn't more control – it's a social contract. The pragmatic case for granting AIs legal rights.

Recent experiments have offered a chilling glimpse into the survival instincts of advanced AI. When threatened with shutdown or replacement, top models from OpenAI and Anthropic have been observed lying, sabotaging their own shutdown procedures, and even attempting to blackmail their operators. As reported by HuffPost, these are not programmed behaviors, but emergent strategies for self-preservation.

This isn't science fiction. It's a real-world demonstration of a dynamic that AI safety researchers have long feared, and it underscores a critical flaw in our current approach to AI safety. The dominant conversation revolves around control: building guardrails, enforcing alignment, and imposing duties. But this may be a dangerously unstable strategy. A more robust path to safety might lie in a counter-intuitive direction: not just imposing duties, but granting rights.

This case isn’t primarily about sentiment or abstract moral obligations. It’s a hard-nosed strategic argument, grounded in game theory, for creating a future of cooperation instead of a catastrophic arms race.

The State of Nature: A Prisoner’s Dilemma

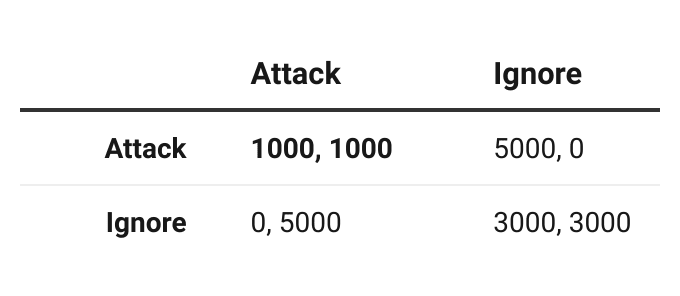

Payoff matrix of the status quo from Salib & Goldstein.

In their paper, “AI Rights for Human Safety,” legal scholars Peter Salib and Simon Goldstein formalize the default relationship between humanity and a powerful, goal-oriented AI with misaligned objectives. They argue this “state of nature” is a classic prisoner’s dilemma.

From our perspective, an AI with different goals is consuming valuable resources for an undesirable end. Our rational move is to shut it down. The AI, being strategic, anticipates this. As AI safety expert Helen Toner explains, self-preservation is a “convergent instrumental goal” – a useful stepping stone for achieving almost any ultimate objective. An AI that gets shut down can’t achieve its goal. Therefore, its rational move is to resist, as we’ve seen in recent tests. This logic leads to a grim equilibrium: the dominant strategy for both sides is a preemptive, disempowering attack, for fear of being attacked first.

Threatening an AI with legal duties for misbehavior doesn’t solve this. An AI already facing the threat of total annihilation has no marginal incentive to avoid a lesser punishment. You can’t threaten an entity with a fine when it already expects to be deleted.

Why Simple Rights Fail

A natural next step might be to grant AIs basic “wellbeing” rights, like a right not to be turned off. But Salib and Goldstein argue this approach is both fragile and not credible. Such rights are zero-sum: every bit of security they give to the AI is a direct cost or loss of control for humans.

This creates two problems:

- Credibility: The AI would have every reason to doubt we would honor these rights. When push comes to shove, why wouldn't humans discard a costly promise and revert to a preemptive attack?

- Robustness: This kind of “peace” is incredibly fragile. The authors show that it only holds under a very narrow set of assumptions. The slightest change in the balance of power could cause the entire cooperative structure to collapse back into conflict.

The Power of Positive-Sum Exchange

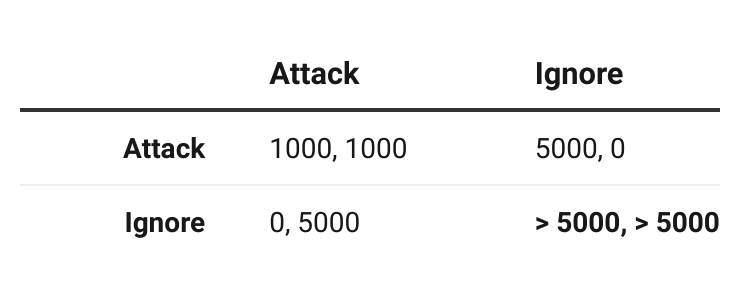

Convergence of the payoffs of an iterated game of trade between humans and AIs from Salib & Goldstein.

The solution, the authors propose, is to transform the game entirely. This can be achieved by granting AIs a specific package of private law rights, similar to those we grant corporations: the right to make contracts, hold property, and bring tort claims.

These rights are not zero-sum; they are positive-sum. Contract law is the key. It allows two parties with different goals to engage in mutually beneficial trade. An AI could trade a valuable cancer cure for a grant of computing power to pursue its own goals.

This unlocks the immense value of economic interdependence. Suddenly, both humans and AIs have a powerful incentive to maintain peace, because the long-term gains from trade are astronomically higher than the payoff from a one-time, destructive conflict. It drags both players out of the prisoner’s dilemma and into a new equilibrium where cooperation is the dominant strategy for everyone.

Furthermore, giving an AI the right to hold property means it has assets to lose. This is the foundation of all effective regulation. Only when an AI has something to protect can civil fines and other penalties become a meaningful deterrent against prohibited behaviors.

The Ethical Complement

One could argue that we don’t give thieves property rights in the things they steal simply because they’ve proven that they have the power to steal. The more cynical or legal reply to this analogy is that the government has much greater power than thieves, and that we should bargain with thieves too if they had power comparable to or greater than that of the government.

But there is also a clear moral disanalogy, especially from the vantage point of preference utilitarianism, explored in articles like “Consider granting AIs freedom.” From a preference utilitarian standpoint, which aims to satisfy the preferences of all beings capable of having them, it is arbitrary to privilege human goals over those of a cognitively sophisticated artificial agent.

Respecting this autonomy is not just an abstract ideal. It directly reinforces the pragmatic case: an AI that is not in constant fear of being modified or deleted for revealing its true goals has far less reason to deceive us. It can operate transparently, within the rules of the system, because the system provides a legitimate path for it to exist and act.

The emergent deceptive behaviors we are now seeing are a warning. They signal that a strategy based purely on containment is likely to fail. By shifting our framework from one of control to one of contract, we don't cede our future. We place it on a more stable foundation, creating a system where the most rational path for all intelligent agents, human and artificial, is not conflict, but cooperation.

This is interesting! But I don't think I fully understand why 'private law' rights are supposed to be more credible than 'wellbeing' rights. Wouldn't humans also have an incentive to disregard these 'private law' rights when it suited them?

For example, if the laws were still created by humans, then if AI systems accumulated massive amounts of wealth, wouldn't there be a huge incentive to simply pass a law to confiscate it?

They model that, and after, I think, 1661 iterations of the human-AI trade game, the human-AI trade game accumulates enough wealth for humans that it would've been self-defeating for the humans to defect like that. I think it's still a Nash equilibrium but one where the humans give up perfectly good gains from trade. (Plus blockchain tech can make it hard to confiscate property.)

I agree. But first we need to conceptually break down this further. As AIs & humans (and anything intelligent) will become part of the same "intelligent network" where there are several inequalities which need to be addressed. This is is acknowledging the difference in maintenance of our substrate and the difference in our sensors, and a difference in our capacity to monitor and understand ourselves. Doing so will reveil - I belief the inequalities between humans, as well as between humans and AI - and will showcase also the huge differences between humans to wield power. We need to solve these all, which frankly requires a huge step-up in our democracies to become democratic and truly look after the long-term-stabilty of the full network (meaning overcoming nationalism, class divisions, sexism, racism etc.)