Earlier this month, we sent an announcement to our Giving What We Can Pledge members about our new pledge pin. This pin will be given to all Giving What We Can Pledge members who have been pledgers for over a year and are on track with their donations.

It’s an honour to be a part of a community that cares deeply about giving and improving the world, and this is a small way for us to thank our members for displaying an ongoing commitment to their pledge.

Here’s the full pledge pin announcement along with some FAQs.

2021 Giving Review

We’ve launched our yearly Giving Review and have been collecting data about the donations our community made in 2021.

If you can spare 5 minutes, please report your donations before 31st July. Doing so helps us measure our impact and, we hope, provide better experiences for our members in the future.

Yes, your donations matter!

In the wake of Bill Gates’ announcement about upping the spending of the Gates Foundation, some of us may feel that our relatively small, individual donations don’t matter.

We’d like to remind you that although your contribution may feel like a drop in the bucket, it means quite a lot to the people, animals and planet it benefits.

By giving effectively, you are part of a community of donors who — together — are drastically increasing the size of that drop, making a meaningful, concrete difference to the lives of others and helping to normalise giving to those who need it. That’s incredibly important, because even with generous donations from large philanthropists, there's much more to be done.

Until next time, keep doing good!

-Luke Freeman & the rest of the Giving What We Can team

Newsletter audio summary

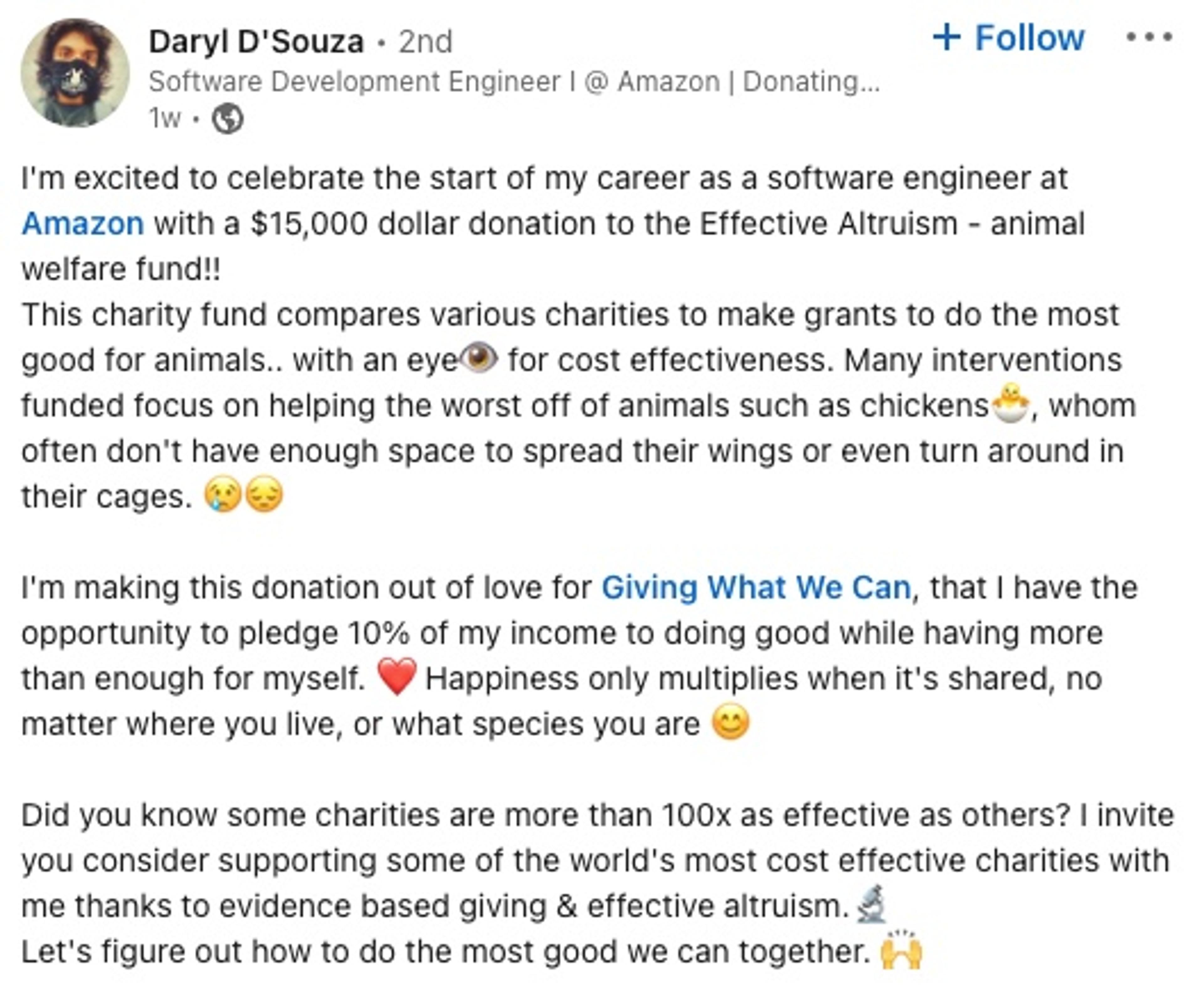

Member Daryl D'Souza started a new job and shared a donation publicly with his network!

Congratulate Daryl on his new job and his advocacy here

Attend An Event

Meetups

Americas/Oceania

The meetups team is hosting an EA Forum “Show and Tell.” Come and tell us about a forum post that you found interesting, didn’t agree with, didn’t understand, want to learn more about, etc., and we’ll discuss it together! Feel free to choose any post (old or new) on any topic or cause. Just come along and we can spend some time learning something new or debating something fun!

- Aug 6: 22:00 UTC (New York: Sat, Aug. 6, 6:00 pm; Sydney: Sun, Aug. 7, 8:00 am)

- RSVP on Facebook

- Register

Europe/Asia

Tony Senanayake from IDinsight will join this session to answer questions about his work with multiple organisations in the global health and development sector. Tony is a goldmine of knowledge and this is sure to be a fascinating discussion.

- Aug 7: 09:30 UTC (London: 10:30 am, Munich: 11:30 am, Mumbai: 3:00 pm, Singapore: 5:30 pm)

- RSVP on Facebook

- Register

Open Forum

Our open forum is an event where you can come along with questions about effective giving and/or to meet others interested in effective giving. This event alternates between different timezones each month.

Next Open Forum (Europe/Americas)

- Aug 18: 19:00 UTC (London 8:00 pm, Berlin 9:00 pm, New York 3:00 pm, Los Angeles 12:00 pm)

- RSVP on Facebook

- Register

New content from Giving What We Can

Blog

- Do many charities fail to help people? - Caroline Wood, contributing writer

- Pledge Pin Announcement - Grace Adams, Head of Marketing

YouTube

- Why plant based meat is a scalable solution to feed the world: Interview with Bruce Friedrich, founder and CEO of the Good Food Institute (GFI) - Giving What We Can YouTube

- James Montavon, John Yan, and Catherine Low share their giving stories - Giving What We Can YouTube, People Who Give Effectively series

Podcast

- Find audio-only versions of our new YouTube content on the Giving What We Can podcast!

- Bruce Friedrich: Why plant based meat is a scalable solution to feed the world

- Member Story: James Montavon

- Member Story: John Yan

- Member Story: Catherine Low

News & Updates

Effective altruism community

- Ready Research performed a meta-review of what works and doesn’t work to promote charitable donations. Read Peter Slattery’s summary of the findings.

- Magnify Mentoring has opened applications for their next round of mentoring. If you are a woman, non-binary person, or trans person of any gender with enthusiasm to pursue a high-impact career path, consider applying before the deadline on 5th August.

- Peter McIntyre (formerly of 80,000 Hours) has launched a free online learning platform called non-trivial, which introduces some foundational EA concepts to help young adults (particularly teenagers) increase their impact on the world. Peter encourages the EA community to share the first course, How to (actually) change the world, with others.

- A community member is developing a course on forecasting. If you’re interested, you can join the waitlist to participate in this new online class.

Evaluators, grantmakers and incubators

- GiveWell published an update on its funding projections for 2022, stating that it doesn’t expect to have enough funding this year to fill all the cost-effective grant opportunities it has been able to identify. As a result, it is raising its cost-effectiveness bar for funding and increasing its fundraising efforts.

- GiveWell has published several new research materials, including a report on the efficacy and cost-effectiveness of programs that train health workers to deliver maternal and neonatal health interventions, a page about two recent grants (totaling $562,000) supporting IRD Global’s tuberculosis team in Karachi, Pakistan, and notes from a conversation with Drs. Edward Miguel and Michael Walker about a possible follow-up to a randomised controlled trial of GiveDirectly's unconditional cash transfer program in Kenya.

- GiveWell is hiring for several positions, including Operations Assistant (new!), Senior Researcher, Senior Research Associate, and Content Editor. View all open positions on its jobs page.

- Animal Charity Evaluators announced a special giving opportunity in which donations to its Movement Grants program will be matched dollar-for-dollar up to $300,000.

- The EA Infrastructure Fund published an organisational update with information about the grants they made between September and December 2021.

- James Snowden, a GWWC pledger and former team member, has joined Open Philanthropy as Program Officer for Effective Altruism Community Building.

Cause areas

Animal welfare

- How sustainable are fake meats? An exploration of the topic from Bob Holmes at Knowable magazine

- Faunalytics has released the 2022 update of their Global Animal Slaughter Statistics & Charts

- New data shows that 10 million fewer hens were confined in cages in Europe in 2021, according to this article from Poultry World.

Global health and development

- Bill Gates recently announced that by 2026, the Gates Foundation aims to spend $9 billion a year

- “Is it really useful to ‘teach a person to fish’ or should you just give them the damn fish already?” asks Sigal Samuel in a Vox article discussing the evidence behind ‘ultra-poor graduation programs,’ which aim to lift the ultra-poor out of poverty through a combination of training and cash/assets. Samuel explores how these combo programs compare to simple cash transfer initiatives, and how the gap between “teach a man to fish” and “give a man a fish” is narrowing.

- Evidence Action is hiring for communications roles, including a Senior Associate, Communications (a DC-based role working across a variety of communications workstreams with a heavy focus on digital) and an Associate Director, Communications.

- Kelsey Piper, Vox journalist and GWWC member, writes about The return of the “worm wars” and how the controversy over the value of deworming interventions shows the need for effective altruists to reason under uncertainty.

Long-term future

- A new animated TED-Ed video on The 4 greatest threats to the survival of humanity was released.

- Rational Animations has posted a well-researched video that looks at how we could be living in the most important century and explores the development of advanced AI.

- Four lessons from effective altruism that we can apply to climate change by Kara Hunt, Clean Air Task Force.

- The Centre for the Governance of AI has opened submissions for its 2022 Student Essay Prizes and is seeking promising pieces of research with relevance to AI governance. Eligible submissions include undergraduate and master’s theses, journal articles, and essays. Winners will be awarded monetary prizes and be eligible for mentoring.

Useful Links

- Review our giving recommendations.

- Report your donations with your pledge dashboard.

- Share our ideas to help grow our community and multiply your impact.

- Join other members in the Giving What We Can Community Facebook group.

- Find more ways to get involved with Giving What We Can and effective altruism.

- Discuss effective giving and effective altruism on the EA Forum.

You can follow us on Twitter, Facebook, LinkedIn, Instagram, YouTube, or TikTok and subscribe to the EA Newsletter for more news and articles.

Do you have questions about the pledge, Giving What We Can, or effective altruism in general? Check out our FAQ page, or contact us directly.