In which: Organizers from Meta Coordination Forum 2023 summarize survey responses from 19 AI safety experts.

About the survey

The organizers of Meta Coordination Forum 2023 sent a survey to 54 AI safety (AIS) experts who work at organizations like OpenAI, DeepMind, FAR AI, Open Philanthropy, Rethink Priorities, MIRI, Redwood, and GovAI to solicit input on the state of AI safety field-building and how it relates to EA community-building.

The survey focused on the following questions:

- What talent is AI safety most bottlenecked by?

- How should EA and AI safety relate to each other?

- What infrastructure does AI safety need?

All experts were concerned about catastrophic risks from advanced AI systems, but they varied in terms of how much they worked on technical or governance solutions. Nineteen experts (n = 19) responded.

The survey and analyses were conducted by CEA staff Michel Justen, Ollie Base, and Angelina Li with input from other MCF organizers and invitees.

Important caveats

- Beware of selection effects and a small sample size: Results and averages are better interpreted as “what the AI safety experts who are well-known to experienced CEA employees think” rather than “what all people in AIS think.” The sample size is also small, so we think readers probably shouldn’t think that the responses from this sample are representative of a broader population.

- Technical and governance experts' answers are grouped together unless otherwise indicated. Eleven respondents (n = 11) reported focusing on governance and strategy work, and eleven (n = 11) reported focusing on technical work. (Three people reported working on both technical and governance work.) A later section compares the two groups' responses.

- These are respondents' own views. These responses do not necessarily reflect the views of any of the organizations listed above.

- People filled in this survey quickly, so the views expressed here should not be considered as respondents' most well-considered views on the topic.

- Summaries were written by the event organizers, not by an LLM.

What talent is AI safety most bottlenecked by?

Section summary

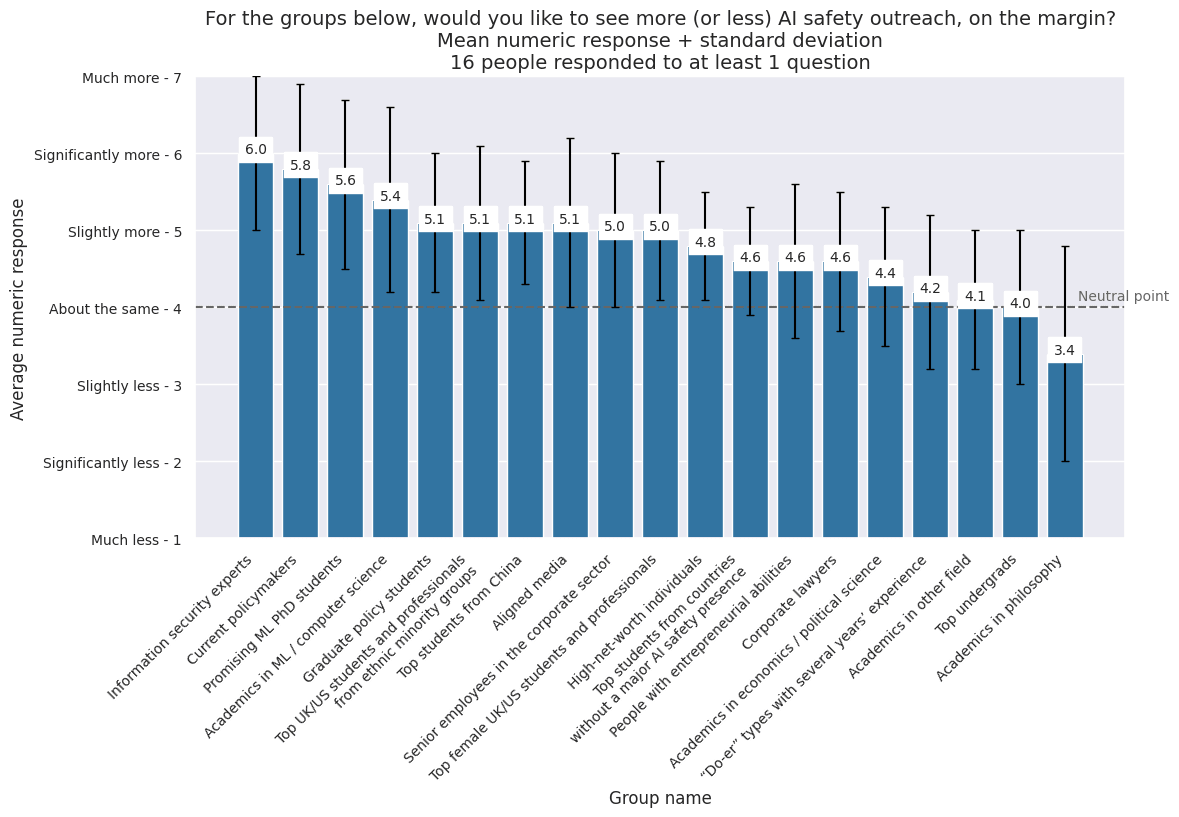

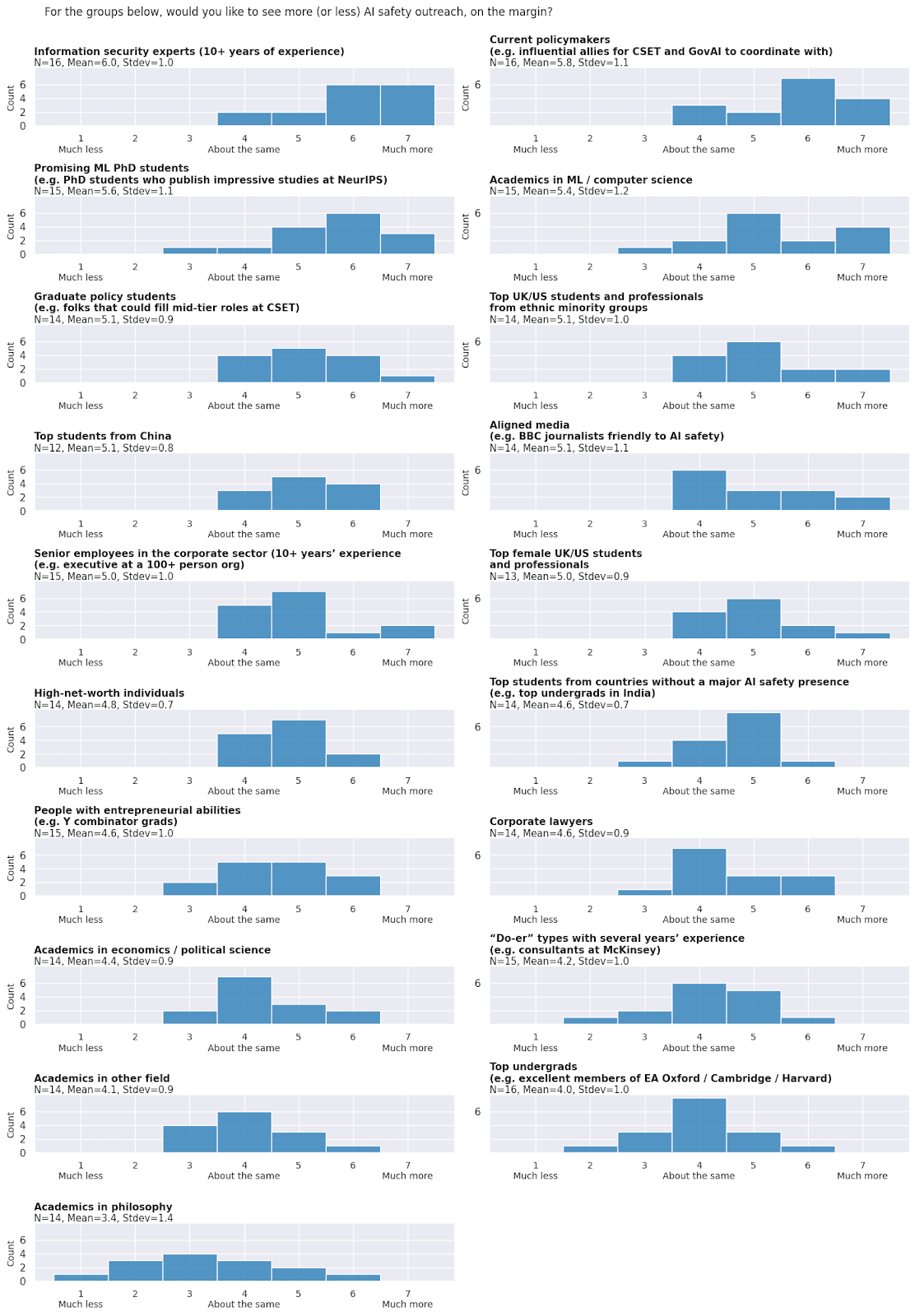

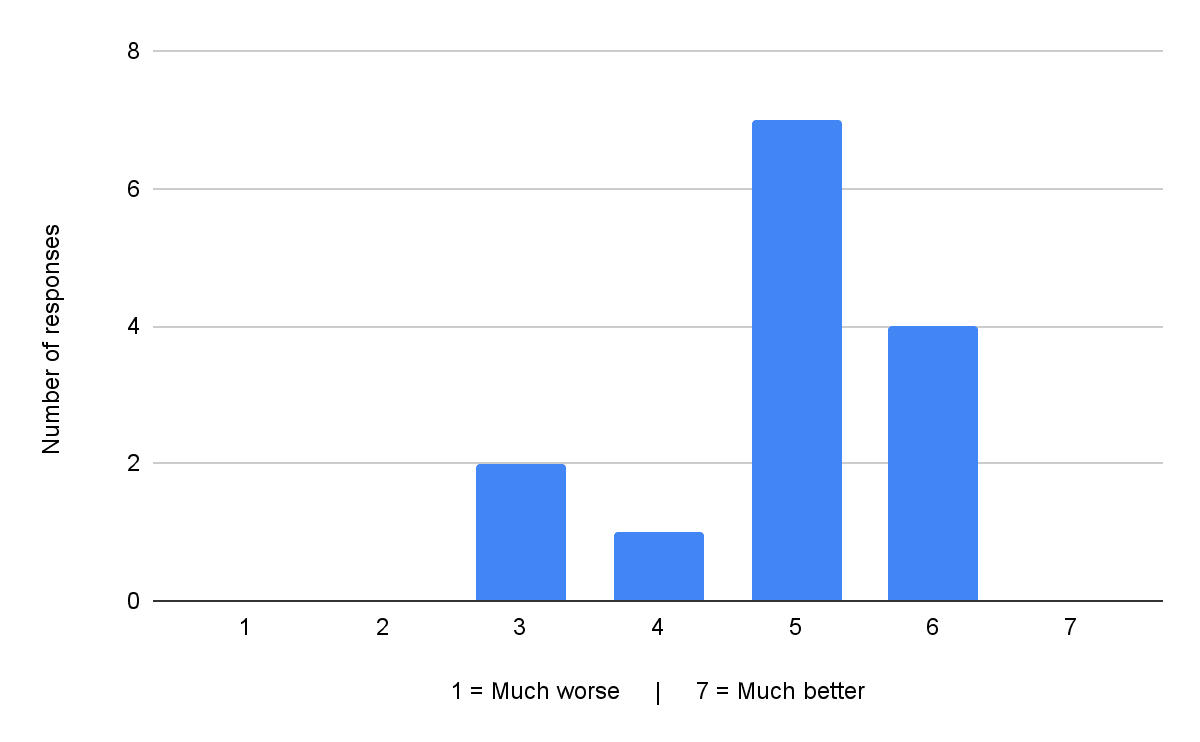

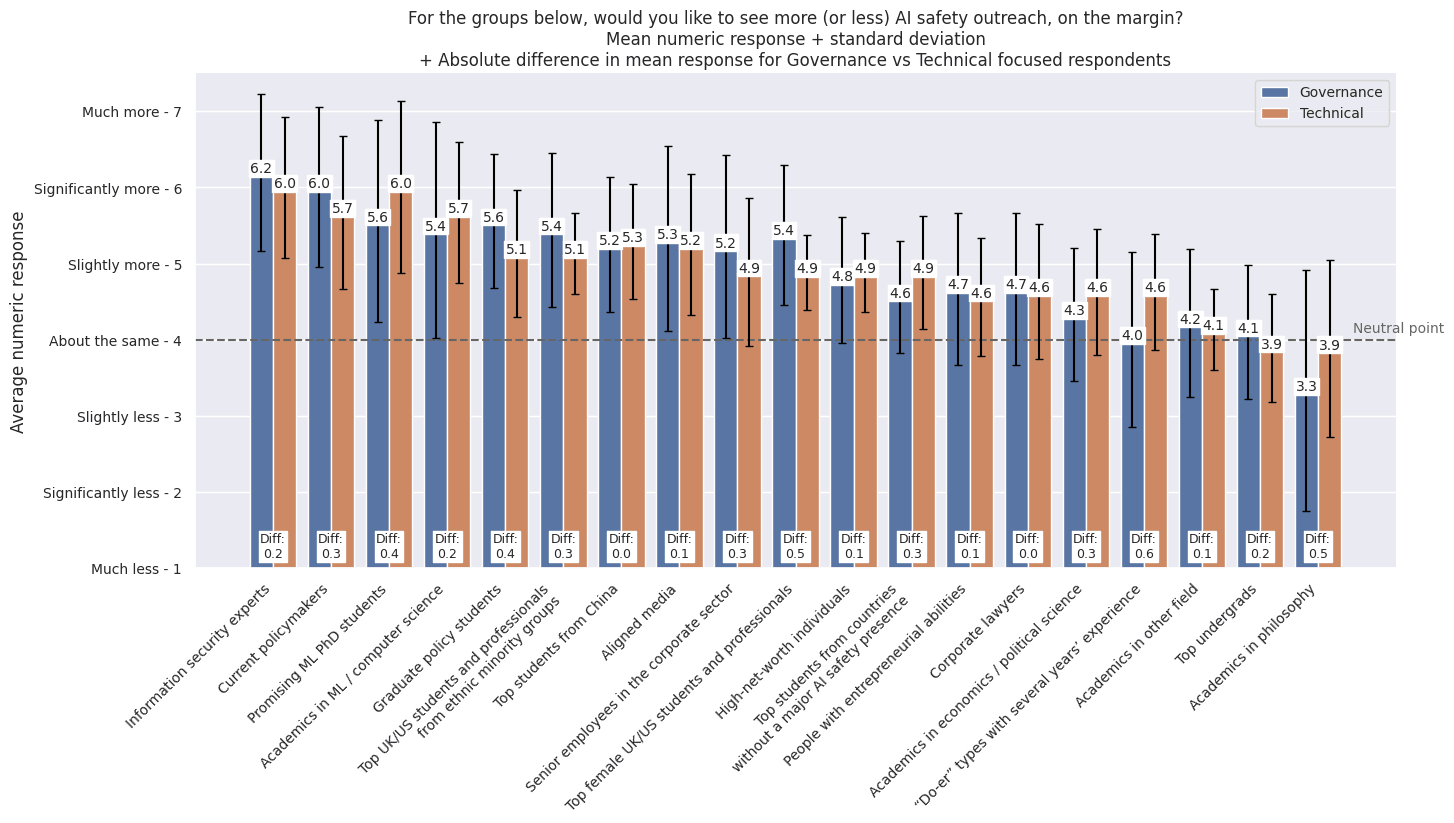

Respondents wanted to see more talent from a wide range of areas. Many called for more legible expertise and seniority, including policymakers (esp. those with technical expertise), senior ML researchers, and information security experts. When polled, respondents thought that information security experts were the group the field most needed more of, followed by academics in ML or computer science and promising ML PhD students.

For the groups below, would you like to see more (or less) AI safety outreach, on the margin?[1]

Question description

Assume the outreach work is high quality and sensibly tailored to the specific group, but take into account the tractability of the outreach (i.e. outreach will be less tractable for hard-to-reach groups).

Please try to have four or fewer answers in the "Much more" column.

How should EA and AI safety relate to each other?

Section summary

- Sprint time?: Respondents expressed a wide range of views on whether the AIS field was in “sprint mode”. ~5 respondents mentioned that the next few months were a high-leverage time for policy interventions, ~5 thought the next 1–2 years were a high-leverage time for the AIS field broadly, but ~8 favoured pacing ourselves because leverage will remain high for 5–10 years.

- % of EA resources that should go to AI: Respondents also expressed a wide range of views on what percent of the EA community’s resources should be devoted to AI safety over the next 1–2 years. Respondents who directly answered the question gave answers between ~20% and ~100%, with a wide spread of answers within that range. (Example responses below).

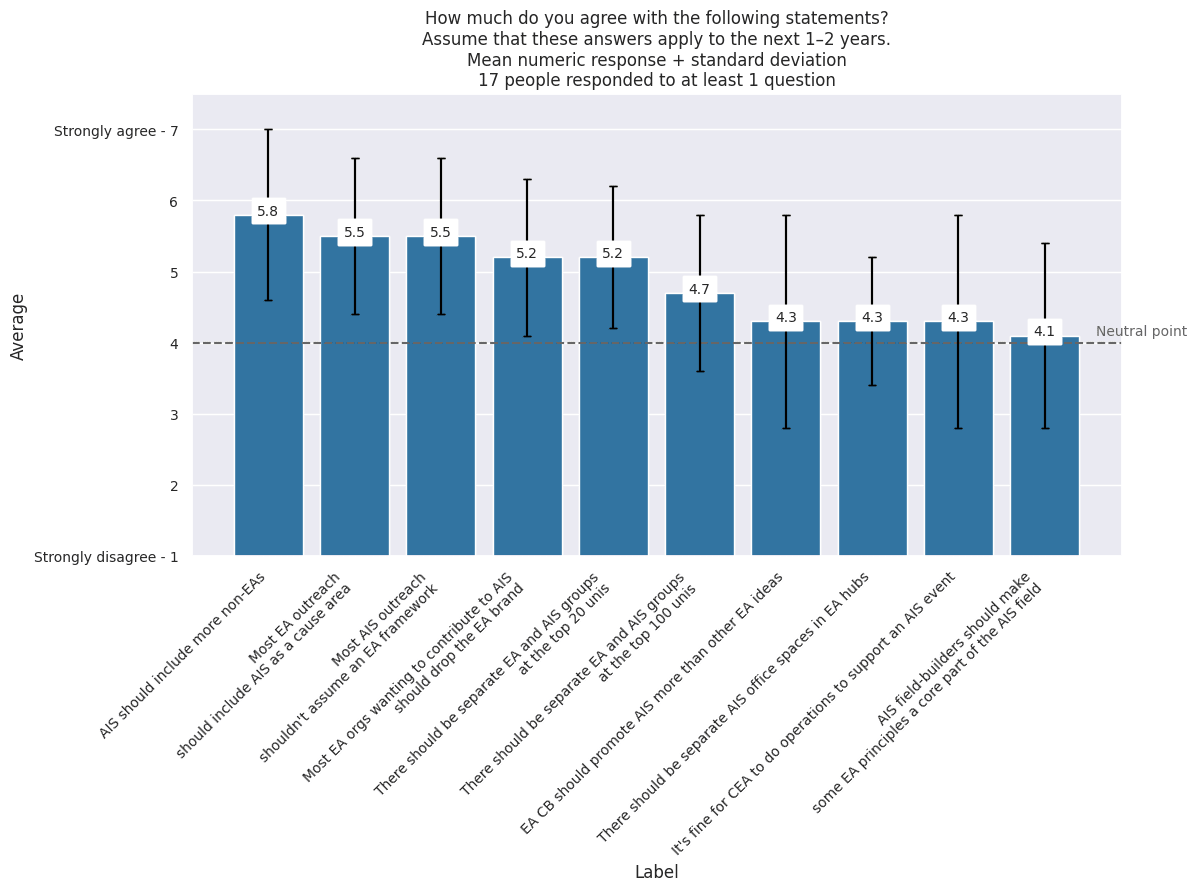

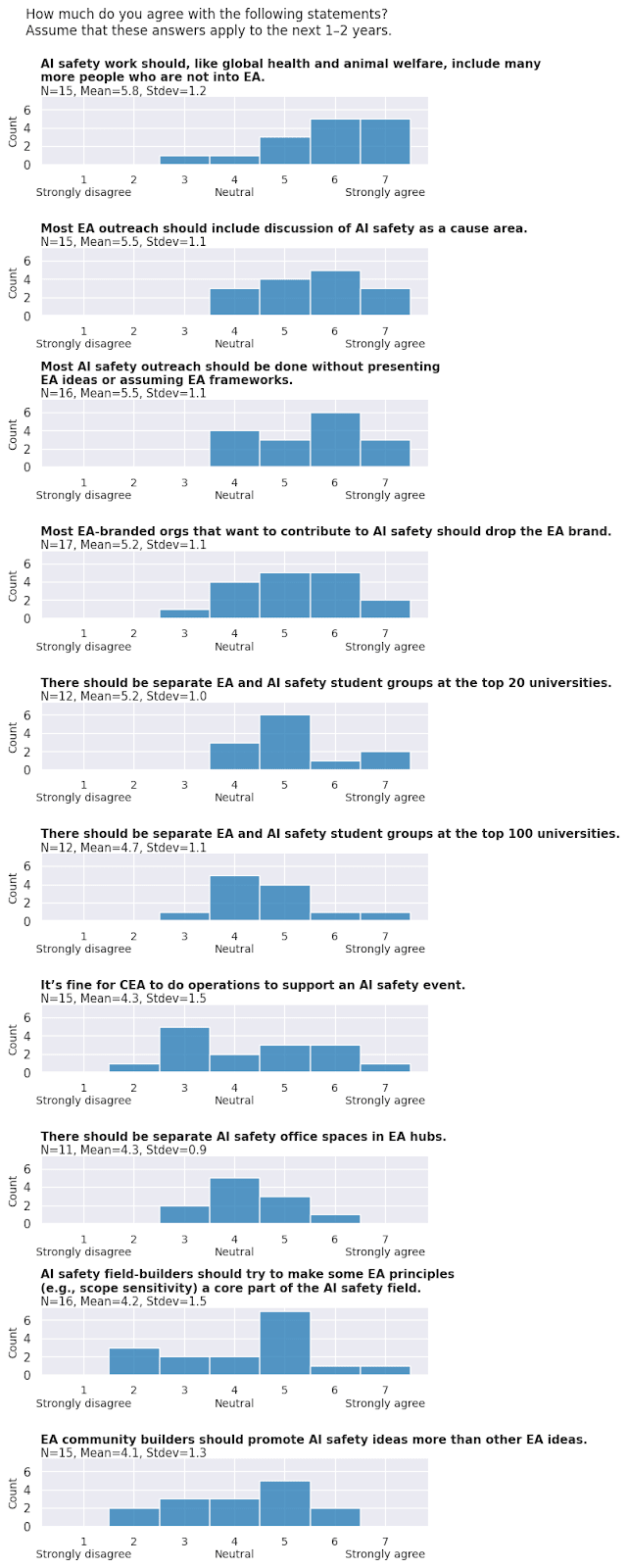

- Advice for EA orgs to contribute to AI safety: A majority of respondents agreed that most EA-branded orgs that want to contribute to AI safety should drop the EA brand.

- Disagreements in ideas to promote: There was disagreement on whether EA community builders should promote AI safety ideas more than other EA ideas, and whether AIS field-builders should try to make some EA principles a core part of the AIS field.

- Disagreements in overlap between EA and AIS infrastructure: Respondents were uncertain whether there should be separate AI safety office spaces in EA hubs and whether there should be separate EA and AI safety student groups at the top 100 universities, but a majority agreed that there should be separate EA and AI safety student groups at the top 20 universities.

- Effect of engaging with EA on quality of AIS work: A majority of respondents thought that engaging with EA generally makes people’s AI safety work better (via e.g. good epistemics, taking risks seriously and sensible prioritization), but they mentioned several ways in which it can have a negative influence (via bad associations and directing promising researchers to abstract or unproductive work). These results should be taken with a grain of salt given the selection effects of who received this survey and who responded (e.g., CEA are less likely to know experts who think that engaging with EA makes people’s AIS work worse).

- Perceptions of EA in AIS: Respondents had mixed views on how non-EAs doing important AI safety work view EA, with an even split between favourably and unfavourably, and several neutral answers.

Do you think the next 1-2 years is an especially high-leverage time for AI safety, such that we should be operating in sprint mode (e.g., spending down resources quickly) or will leverage remain similarly high or higher for longer, e.g. 5-10 years, such that we need to pace ourselves?

Question Description:

Reasons to operate in sprint mode might be short timelines, political will being likely to fall, or policy and public opinion soon being on a set path.

We understand that this is a hard question, but we’re still interested in your best guess.

Question summary:

Respondents expressed a wide range of views in response to this question. ~5 respondents mentioned that the next few months were a high-leverage time for policy interventions, ~5 thought the next 1–2 years were a high-leverage time for the AIS field broadly, but ~8 favoured pacing ourselves because leverage will remain high for 5–10 years.

Reasons for sprinting included short timelines, the likelihood that more resources will be available in future, the opportunity to set terms for discussion, the UK summit, the US election, and building influence in the field now which will be useful later. Reasons for pacing ourselves included slow take-off, the likelihood of future policy windows, the immaturity of the AI safety field, and interventions becoming more tractable over time.

What percent of the EA community’s resources (i.e. talent and money) do you think should be devoted to AI safety over the next 1-2 years?

Question description:

Assume for the sake of this question that 30% of the EA community’s resources are currently devoted to AI safety, and future EA resources wouldn't need to be spent by organisations/talent closely affiliated with EA brand.

Leaders in the EA community are asking themselves how much they should go ‘all in’ on AI safety versus sticking with the classic cause-area portfolio approach. Though you’re probably not an expert on some of the variables that feed into this question (e.g., the relative importance of other existential risks), you know more about AI safety than most EA leaders and have a valuable perspective on how urgently AI safety needs more resources.

Question summary:

Everyone unanimously agreed that EA should devote exactly 55% of resources to AI safety and respondents cooperatively outlined a detailed spending plan, almost single-handedly resolving the EA community’s strategic uncertainty. Incredible stuff…

Unfortunately not. In fact, respondents expressed a wide range of views in response to this question and many expressed strong uncertainty. Respondents who directly answered the question gave answers between ~20% and ~100%, with a wide spread of answers within that range. Two respondents mentioned that a substantial portion of quality-adjusted EA talent is already going towards AI safety. A few mentioned uncertainty that marginal additional spending in the space is currently effective compared to other opportunities. Two mentioned concern about the brand association between EA and AIS if EA moved closer to all-in on AIS.

Example responses that illustrate the wide range of views include:

- “I expect more than 30% of resources to be the right call. 50% seems reasonable to me.”

- “At least 50% of the top-end movement building talent should be focused on AI safety over the next 1-2 years.”

- “Unclear how much EA could usefully spend given my very-short-timelines views, non-fungibility of talents and interests between causes, etc. And it's not like all the other problems have become less important! I'd look to spend as much on robustly-very-positive AI Safety as I could though, and can imagine anywhere between "somewhat less than at present" to "everything" matching that.”

- “Depends what we'd do with the resources. I'm not very excited about our current marginal use of resources, so that suggests 25%? On the other hand I'm not sure if there are better ways to use money (either now or in the future), so maybe I should actually be higher than 30%.”

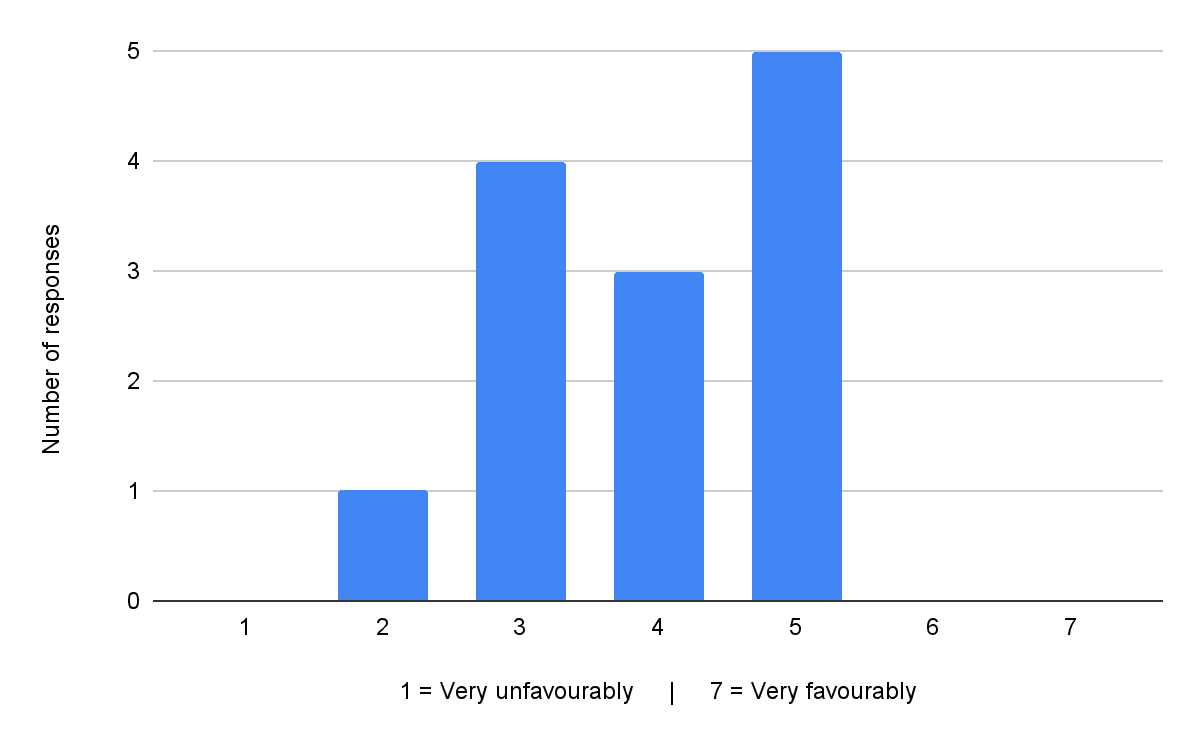

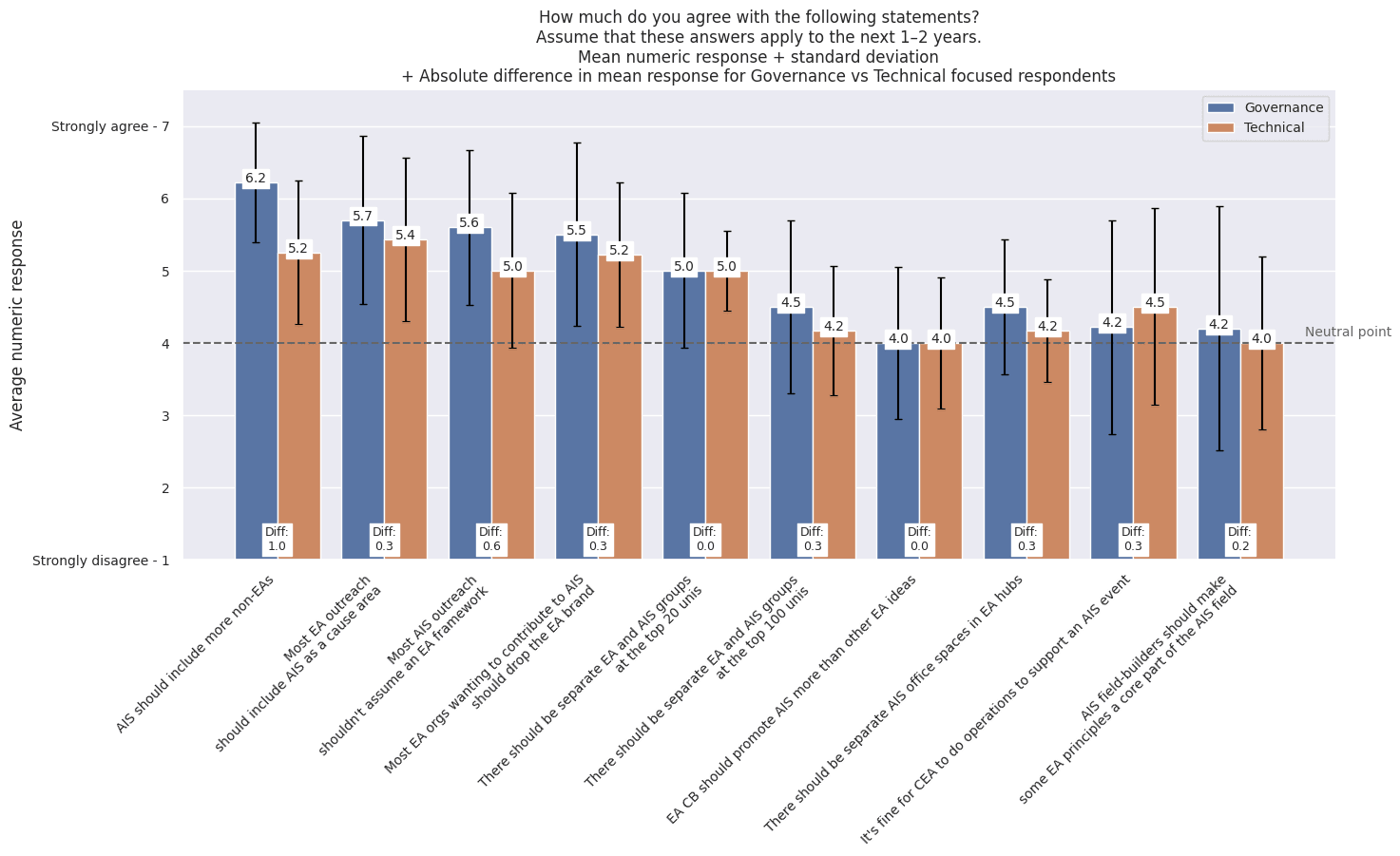

Agreement voting on statements on the relationship between EA and AI safety

Prompt for statements in this section:

How much do you agree with the following statements? Assume that these answers apply to the next 1–2 years.

Among equally talented people interested in working on AI safety, do you think engaging with EA generally makes their AI safety work better or worse?

Question description:

“Better work” here means work that you think has a greater likelihood of reducing catastrophic risks from AI than other work.

“Engaging with EA” means things like attending EA Global or being active in an EA student group.

Summary stats: n = 14; Mean = 4.9; SD = 1.0

Do you have any comments on the relationship between engaging with EA and the quality of AI safety work?

Question Summary:

Respondents indicated that EA has a broadly positive influence on AIS, but mentioned several ways in which it can have a negative influence. ~5 respondents said that, for most people, engaging in a sustained way with EA thinking and the EA community made their AIS work better via e.g. good epistemics, taking risks seriously and sensible prioritization. The ways EA can have a negative influence included bad associations (e.g. cultiness) and directing promising researchers to abstract or unproductive work.

In your experience, how do non-EAs doing important AI safety work view EA?

Summary stats: n = 13; Mean = 4.0; SD = 1.0

What infrastructure does AI safety need?

We prompted people to propose capacity-building infrastructure for the field of AI safety with a variety of questions like “What additional field-building projects do you think are most needed right now to decrease the likelihood of catastrophic risks from AI?” and “What is going badly in the field of AI safety? Why are projects failing or not progressing as well as you'd like?”

Note that not all respondents necessarily agree with all the suggestions raised. On the contrary, we expect disagreement about many of them. The survey asked people to suggest interventions but did not ask them to evaluate the suggestions of others, so the output is more like a brainstorm and less like a systematic evaluation of options.

Section summary

- Respondents suggested a wide range of potential field-building projects; they mentioned outreach programs aimed at more senior professionals, a journal or better alignment forum, events and conferences, explainers, educational courses, a media push, and office spaces.

- For the field of technical AI safety, respondents mentioned wanting to see senior researchers and executors, good articulations of how a research direction translates into x-risk reduction, evals and auditing capacity, demonstrations of alignment failures, proof-of-concept for alignment MVPs, and better models of mesa-optimization / emergent goals. Two respondents also mentioned the importance of interventions that buy time for the field of technical alignment.

- For the field of AI governance and strategy, respondents mentioned wanting to see more compute governance, auditing regulation, people with communication skills, people with a strong network, people with technical credentials, concrete policy proposals, a ban or pause on AGI and a ban on open source LLMs.

- What’s going badly: When asked what’s going badly in AI safety, respondents mentioned the amount of unhelpful research, not enough progress on evals, the popularity of open source, a lack of people with general/technical impressiveness with a strong understanding of the risks, the lack of any framework for assessing alignment plans, the difficulty of interpretability, the speed of lab progress, the slow pace of Congress, too much funding, not enough time, not enough awareness of technical difficulties, a focus on persistent, mind-like systems instead of a constellation of models and the absence of workable plans.

- How EA meta orgs can help: When asked how existing EA meta orgs (e.g. CEA, 80k) can contribute, respondents had mixed views. Two were happy with what these orgs were currently doing, provided they avoided associating AIS too strongly with EA. One thought they weren’t well placed to help; another encouraged them to gain a stronger understanding of the field in order to find ways to contribute.

How do opinions of governance vs technical focused respondents compare?

In the summaries above, we don’t segment any responses by whether the respondent was primarily focused on AI governance or technical work.

For this section, we categorised respondents as “governance focused” and / or “technical focused” based on their reported type of work, and evaluated how these groups differed.

- Note that these cohort based analyses take a very small response size and chop it up into even smaller segments — we think there’s a reasonable chance the differences in responses across fields we’ve found are not that meaningful.

In distinguishing these categories, we relied on people’s self-reports. Namely, their responses to the ‘select all that apply’ question on “Which of the below best describes your work?”

- If someone checked any of the following technical categories and none of the governance categories below, we marked them as ‘technical focused’: Technical AI safety research, Technical AI safety field-building, Technical AI safety grantmaking

- If someone checked any of the following governance categories and none of the technical categories, we marked them as ‘governance focused’: AI governance grantmaking, AI governance research, AI governance field-building

- If someone checked both technical and governance categories, we marked them as ‘both’

Eleven survey respondents (n = 11) reported that they focused on governance & strategy work, and eleven survey respondents (n = 11) reported that they focused on technical work. Three respondents reported focusing on both.
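For readers who want to see the categorisation rule spelled out, here is a minimal sketch in Python. It is an illustration, not the organizers' actual analysis code. The function name `categorise` and the `"uncategorised"` fallback are hypothetical, and the final arithmetic assumes the three "both" respondents are counted inside each group of eleven, which is the reading that matches the 19 total responses.

```python
# Category labels as listed in the survey's "select all that apply" question.
TECHNICAL = {
    "Technical AI safety research",
    "Technical AI safety field-building",
    "Technical AI safety grantmaking",
}
GOVERNANCE = {
    "AI governance grantmaking",
    "AI governance research",
    "AI governance field-building",
}


def categorise(checked):
    """Return a cohort label for one respondent's checked options (hypothetical helper)."""
    checked = set(checked)
    is_technical = bool(checked & TECHNICAL)
    is_governance = bool(checked & GOVERNANCE)
    if is_technical and is_governance:
        return "both"
    if is_technical:
        return "technical focused"
    if is_governance:
        return "governance focused"
    # Assumption: the post does not say how a respondent with no matching
    # category would have been handled.
    return "uncategorised"


# Reported counts: 11 governance, 11 technical, 3 of whom are in both groups.
governance_total, technical_total, both_total = 11, 11, 3
# Inclusion-exclusion: subtract the overlap so nobody is counted twice.
distinct_respondents = governance_total + technical_total - both_total

print(categorise(["AI governance research"]))
print(categorise(["Technical AI safety research", "AI governance grantmaking"]))
print(distinct_respondents)
```

Under that reading, eight respondents were governance-only, eight were technical-only, and three were both.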

Governance vs technical focused respondents: Differences in views on talent needs

- In general there was fairly high agreement on what the top 1-3 groups to prioritise were.

- Governance-focused respondents were most excited by:

  - (1) Information security experts

  - (2) Current policymakers

  - (Tied for 3) Promising ML PhD students + graduate policy students.

- Technical-focused respondents were most excited by:

  - (Tied for 1) Information security experts + promising ML PhD students

  - (Tied for 3) Current policymakers + academics in ML / computer science.

- Governance-focused respondents were slightly more excited about recruiting top female UK/US students and professionals, and slightly less excited about recruiting academics in philosophy (although the standard deviation on the latter is extremely wide).

Governance vs technical focused respondents: Differences in views on relationship between EA and AI safety

- Governance-focused respondents felt most strongly that:

  - (1) AI safety work should, like global health and animal welfare, include many more people who are not into EA

    - Governance-focused respondents agreed with this statement much more than technical-focused respondents.

  - (2) Most EA outreach should include AI safety as a cause area

  - (3) Most AI safety outreach shouldn’t assume an EA framework

- Technical-focused respondents felt most strongly that:

  - (1) Most EA outreach should include AI safety as a cause area

  - (Tied for 3):

    - AI safety work should, like global health and animal welfare, include many more people who are not into EA

    - Most EA-branded orgs that want to contribute to AI safety should drop the EA brand.

[1] When we said "less" in this question, we meant "on net, less, because I'd want the effort being spent on this to be spent elsewhere", not “less, because I think this outreach is harmful.” But some respondents may have interpreted it as the latter.

FYI that this is the first of a few Meta Coordination Forum survey summaries. More coming in the next two weeks!

Thank you for this. I found it very helpful, for instance, because it gave me some insight into which audiences are currently perceived as being most valuable to engage by leaders in the AI safety and governance communities.

As I mentioned in my series of posts about AI safety movement building, I would like to see a larger and more detailed version of this survey.

Without going into too much detail, I basically want more uncertainty-reducing and behavior-prompting information: information that I think will help to coordinate the broader AI safety community to do things that benefit themselves and the community. Obviously a larger sample would be much better.

For instance, I would like to understand which approaches to growing the community are perceived as particularly positive and particularly negative, and why. I want people with the potential to reach and engage potentially valuable audiences to better understand the good ways, programs, etc. to onboard new people into the community, so that we get more of what organizations and leaders think are good programs or good approaches and less of the bad.

I'd like to know what number of different roles organizations plan to hire. Like is it the case that these organizations collectively expect to hire 10 information security experts, or do they think it's important and want someone else to fund that work? Someone who works in information security might be very likely to attempt a career transition if they expect job opportunities but this doesn't quite demonstrate that. I would like it to be the case that someone considering the possibility of a stressful and risky career transition into AIS has the best possible information they can have about the probability that they will get a role and be useful in the AI safety community.

In my experience, many people find the AI safety opportunity landscape extremely complex and confusing, and this probably filters out a significant portion of good candidates who don't have time to figure out, and feel secure in pursuing, the options that we probably want them to take. More work like this, if effectively disseminated, could make their decisions and actions easier and better.

Your insights have been incredibly valuable. I'd like to share a few thoughts that might offer a balanced perspective going forward.

It's worth noting the need to approach the call for increased funding critically. While animal welfare and global health organizations might express similar needs, the current emphasis on AI risks often takes center stage. There's a clear desire for more support within these organizations, but it's important for OpenPhil and private donors to assess these requests thoughtfully to ensure their alignment with genuine justification.

The observation that AI safety professionals anticipate more attention within Effective Altruism for AI safety compared to AI governance confirms a suspicion I've had. There seems to be a tendency among AI safety experts to prioritize their field above others, urging a redirection of resources solely to AI safety. It's crucial to maintain a cautious approach to such suggestions. Given the current landscape in AI safety—characterized by disagreements among professionals and limited demonstrable impact—pursuing such a high-risk strategy might not be the most prudent choice.

In discussions with AI safety experts about the potential for minimal progress despite significant investment in the wrong direction over five years, their perspective often revolves around the need to explore diverse approaches. However, this approach seems to diverge considerably from the principles embraced within Effective Altruism. I can understand why a community builder might feel uneasy about a strategy that, after five years of intense investment, offers little tangible progress and potentially detracts from other pressing causes.