Ram 🔸

Bio


My goal is to do work that counterfactually reduces AI risk from loss-of-control scenarios. My perspective is shaped by my experience as the founder of a VC-backed AI startup, which gave me a firsthand understanding of the urgent need for safety.

I have a B.S. in Artificial Intelligence from Carnegie Mellon and am currently a CBAI Fellow at MIT/Harvard. My primary project is ForecastLabs, where I'm building predictive maps of the AI landscape to improve strategic foresight.

I subscribe to Crocker's Rules (http://sl4.org/crocker.html) and am especially interested in hearing unsolicited constructive criticism. Inspired by Daniel Kokotajlo.

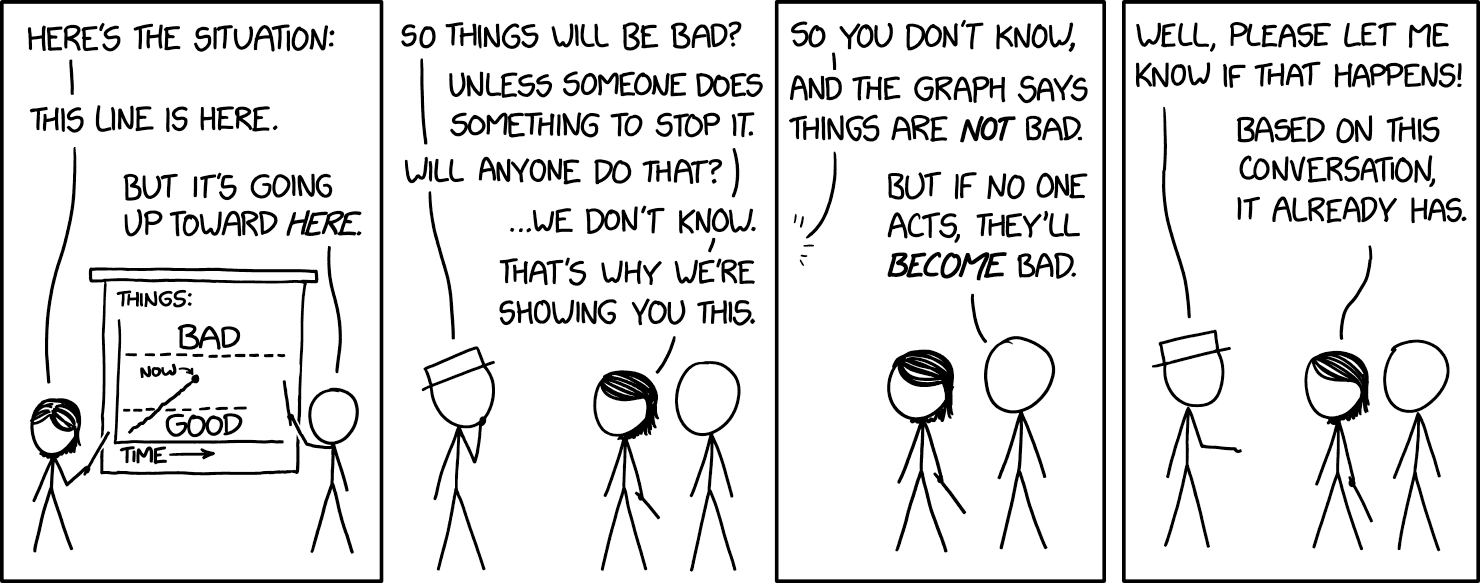

(xkcd meme)

How others can help me

Funding opportunities for my agenda on advancing LLM corrigibility, and introductions to funders who can support it.

How I can help others

Questions on corrigibility, and entrepreneurship advice (I built a VC-backed startup).

This is a very well-thought-out post; thank you for posting it. We cannot depend on warning shots, and a mindset in which we believe people will "wake up" because of them is unproductive and misguided.

I believe that, to spread awareness, we need more work that does the following:

This cannot be overstated. Let's put in the work to make this happen, ensuring that our understanding of AI risk is built from first principles so that we are resilient to negative feedback!