Saul Munn

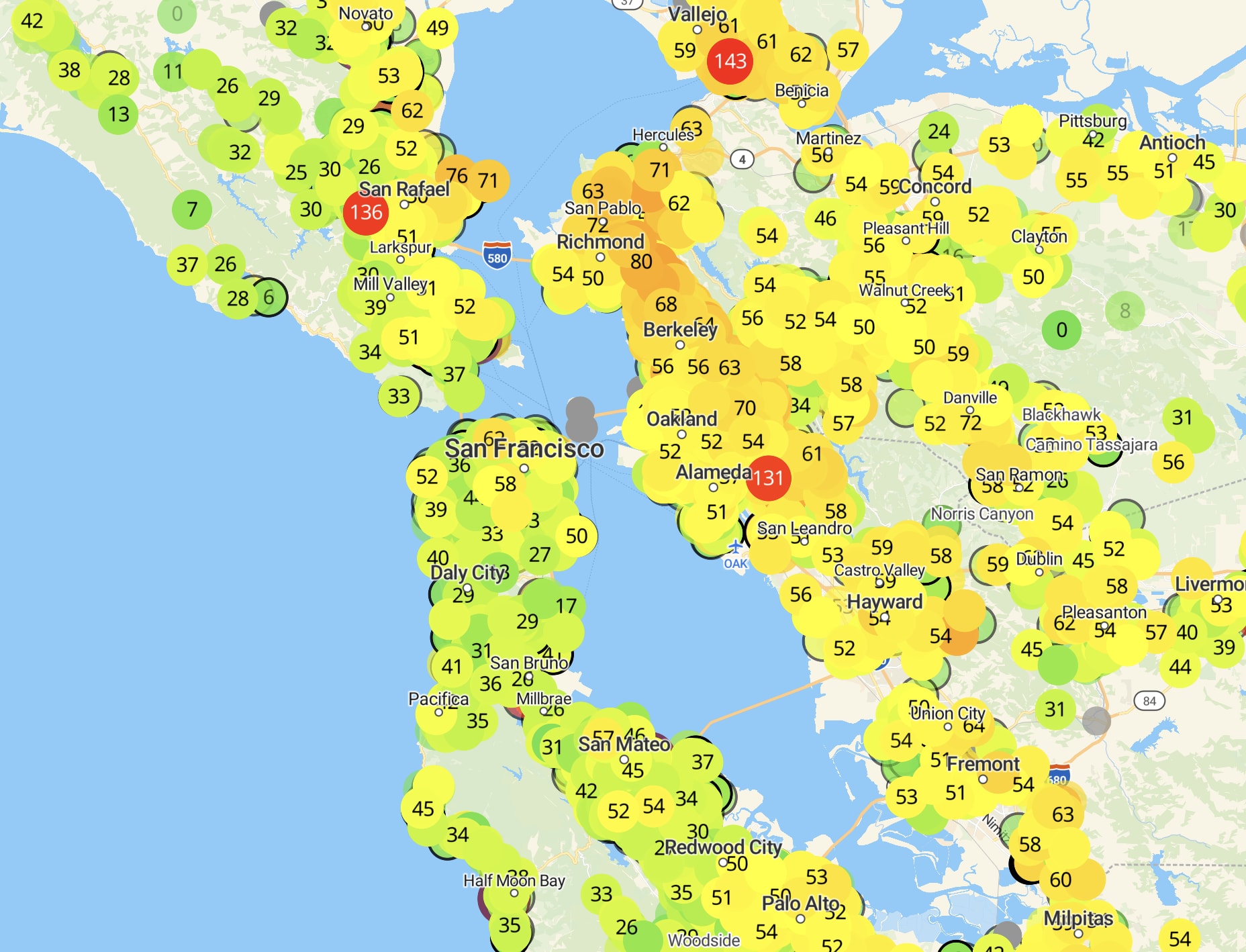

PurpleAir collects data from a network of private air quality sensors. Looks interesting, and possibly useful for tracking rapid changes in air quality (e.g. from a wildfire).

(written v quickly, sorry for informal tone/etc)

i think that a happy medium is small-group conversations (that are useful, effective, etc) of 3–4 people. this includes 1-1s, but the vibe of a Formal, Thirty Minute One on One is very different from floating through 10–15 conversations of 3–4 people in a day, each lasting a varying amount of time.

- much more information can flow with 3-4 ppl than with just 2 ppl

- people can dip in and out of small conversations more than they can with 1-1s

- more-organic time blocks means that particularly unhelpful conversations can end after 5-10m, and particularly helpful ones can last the duration that would be good for them to last (even many hours!)

- 3-4 person conversations naturally select for a good 1-1. once 1-2 people have left a 3-4 person conversation, the conversation is then just a 1-1 of the two people who've engaged in the conversation longest — which seems like some evidence of their being a good match for a 1-1.

however, i think that this is operationally much harder to do for organizers than just 1-1s. my understanding is that this is much of the reason EAGs (& other conferences) do 1-1s, instead of small group conversations.

- i think Writehaven did a mediocre job of this at LessOnline this past year (but, tbc, it did vastly better than any other piece of software i've encountered).

- i think Lighthaven as a venue forces this sort of thing to happen, since there are so so so many nooks for 2-4 people to sit and chat, and the space is set up to make 10+ person conversations less likely to happen.

i know that The Curve (from @Rachel Weinberg) created some "Curated Conversations": they manually selected people to have predetermined conversations for some set amount of time. iirc this was typically 3-6 people for ~1h, but i could be wrong on the details. rachel: how did these end up going, relative to the cost of putting them together?

[srs unconf at lighthaven this sunday 9/21]

Memoria is a one-day festival/unconference for spaced repetition, incremental reading, and memory systems. It’s hosted at Lighthaven in Berkeley, CA, on September 21st, from 10am through the afternoon/evening.

Michael Nielsen, Andy Matuschak, Soren Bjornstad, Martin Schneider, and about 90–110 others will be there. If you use & tinker with memory systems like Anki, SuperMemo, RemNote, Math Academy, etc., then maybe you should come!

Tickets are $80 and include lunch & dinner. More info at memoria.day.

Started something sorta similar about a month ago: https://saul-munn.notion.site/A-collection-of-content-resources-on-digital-minds-AI-welfare-29f667c7aef380949e4efec04b3637e9?pvs=74