Zach Stein-Perlman

Bio

Researching donation opportunities. Previously: ailabwatch.org.

Quick take on longtermist donations for Giving Tuesday.

My favorite donation opportunity is Alex Bores's congressional campaign. I also like Scott Wiener's congressional campaign.

If you have to donate to a normal longtermist 501c3, I think Forethought, METR, and The Midas Project—and LTFF/ARM and Longview's Frontier AI Fund—are good and can use more money (and can't take Good Ventures money). But I focus on evaluating stuff other than normal longtermist c3s, because other stuff seems better and has been investigated much less; I don't feel very strongly about my normal longtermist c3 recommendations.

Some friends and I have nonpublic recommendations less good than Bores but ~4x as good as the normal longtermist c3s above, according to me.

- +1

- Random take: people underrate optionality / information value. Even within EA, few opportunities are within 5x of the best opportunities (even on the margin), due to inefficiencies in the process by which people get informed about donation opportunities. Waiting to donate is great if it increases your chances of donating very well. Almost all of my friends regret their past donations; they wish they'd saved money until they were better-informed.

- Random take: there are still some great c3 opportunities, but hopefully after the Anthropic people eventually get liquidity they'll fill all of the great c3 opportunities.

- Some public c3 donation opportunities I like are The Midas Project (small funding gap + no industry money), Forethought, and LTFF/ARM.

- Random take: you should really invest your money to get a high rate of return.

Thanks. I'm somewhat glad to hear this.

One crux is that I'm worried that broad field-building mostly recruits people to work on stuff like "are AIs conscious" and "how can we improve short-term AI welfare" rather than "how can we do digital-minds stuff to improve what the von Neumann probes tile the universe with." So the field-building feels approximately zero-value to me — I doubt you'll be able to steer people toward the important stuff in the future.

A smaller crux is that I'm worried about lab-facing work similarly being poorly aimed.

I endorse Longview's Frontier AI Fund; I think it'll give to high-marginal-EV AI safety c3s.

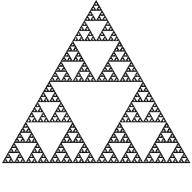

I do not endorse Longview's Digital Sentience Fund. (This view is weakly held. I haven't really engaged.) I expect it'll fund misc empirical and philosophical "digital sentience" work plus unfocused field-building — not backchaining from averting AI takeover or making the long-term future go well conditional on no AI takeover. I feel only barely positive about that. (I feel excited about theoretical work like this.)

> $500M+/year in GCR spending

Wait, how much is it? https://www.openphilanthropy.org/grants/page/4/?q&focus-area%5B0%5D=global-catastrophic-risks&yr%5B0%5D=2025&sort=high-to-low&view-list=true lists $240M in 2025 so far.

I have a decent understanding of some of the space. I feel good about marginal c4 money for AIPN and SAIP. (I believe AIPN now has funding for most of 2026, but I still feel good about marginal funding.)

There are opportunities to donate to politicians and PACs which seem 5x as impactful as the best c4s. These are (1) more complicated and (2) public. If you're interested in donating ≥$20K to these, DM me. This is only for US permanent residents.

Two hours before you posted this, MacAskill posted a brief explanation of viatopianism.

I think I'm largely on board. I think I'd favor doing some amount of utopian planning (aiming for something like hedonium and acausal trade). Viatopia sounds less weird than utopias like that. I wouldn't be shocked if Forethought talked relatively more about viatopia because it sounds less weird. I would be shocked if they pushed us in the direction of anodyne final outcomes. I agree with Peter that stuff is "convex," but I don't worry that Forethought will have us tile the universe with compromisium. But I don't have much private info.