Summary

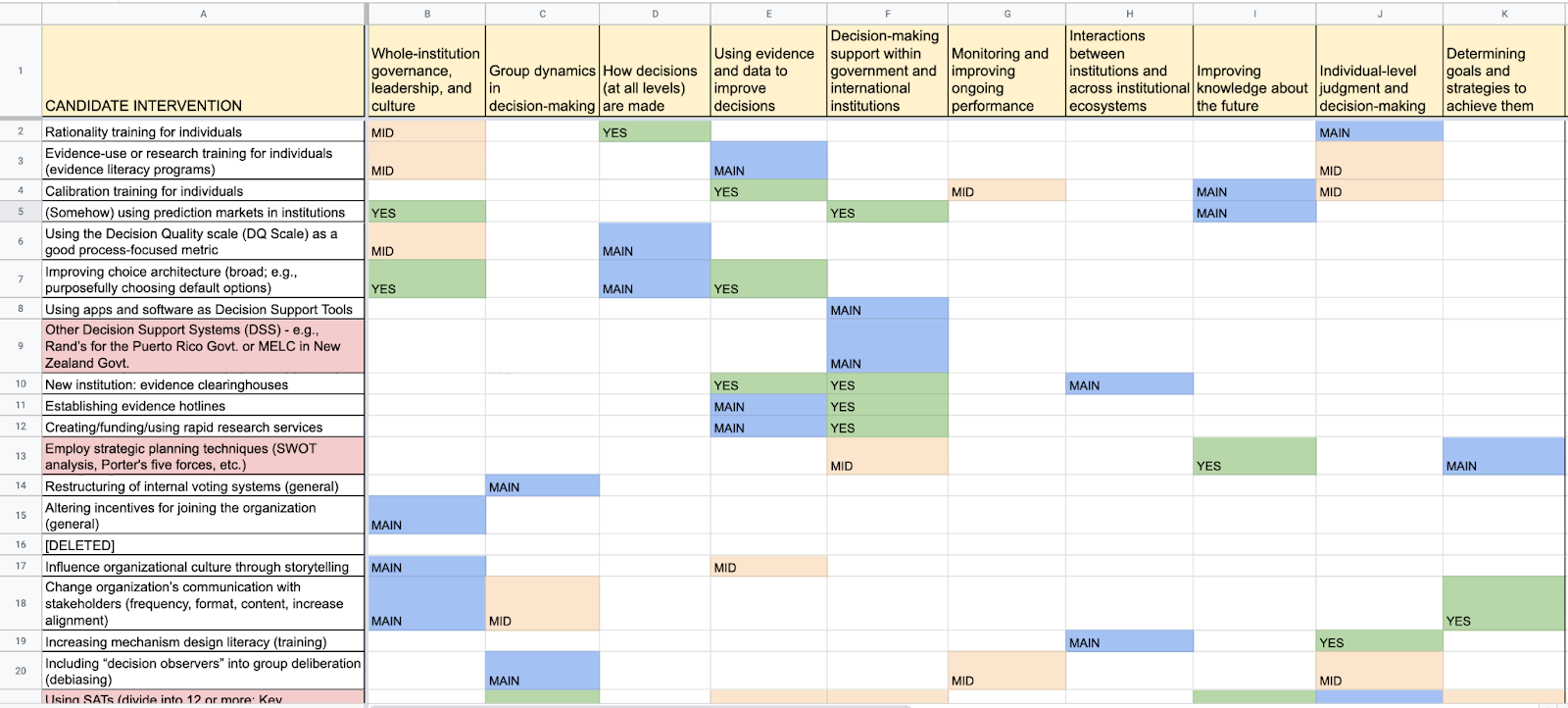

- This is a list of 54 potential interventions for Improving Institutional Decision-Making (IIDM) that I produced during CHERI’s Summer Research Program.

- My goal is to provide a large list of possible interventions as starting points for IIDM researchers.

- I have prioritized 11 interventions during my research (preceded by carets [^]) and have posted their descriptions under List of prioritized interventions. The remaining 43 interventions have a minimal description and are found under Full list of interventions.

- Consider taking a look at the methodology section (which I placed at the end of the post so that readers reach the main content more quickly), the next steps section,[1] and the two appendices after it (one on the categorization framework, the other on extra resources).

Motivation

One year ago, the results from a scoping survey conducted by the Effective Institutions Project (EIP) revealed that, on average, respondents believe that the highest-priority activity in the field of Improving Institutional Decision-Making (IIDM) is to “Synthesise the existing evidence on ‘what works’ in IIDM across academic fields.” Since that task proved too difficult for me, I decided to compile a list of “what might work”: a list of potentially useful interventions. My hope is that other researchers can pick interventions from this list and rigorously analyze the evidence behind them.

The ultimate vision motivating this post is to create a list of interventions with a similar format to The Decision Lab’s list of cognitive biases, with a description of each intervention, practical examples, and a review of the relevant evidence. This post is one step toward that goal. Consider taking some next steps yourself.

Full list of interventions

If you would like to see a titles-only list, go to Appendix II.

The descriptions of these 43 non-prioritized interventions are minimal, and I wrote them quickly due to time constraints. Their purpose is to clarify each intervention’s name. Interventions are not ordered within categories.

Whole-institution governance, leadership, and culture

Creating identity cues

Subtly reminding people that part of their professional identity is being evidence-informed could increase their evidence uptake (Breckon & Dodson, 2016, p. 8).

Note that, throughout this post, I will be referring to “evidence” and “increasing evidence use.” What I mean by evidence is robust, scientific evidence, i.e., the type you would find in a peer-reviewed journal. I acknowledge that this high-quality evidence is not always available, but that is no excuse for lowering our standards of what constitutes good evidence. When forced to use lower-quality evidence, we should acknowledge that this is due to a lack of better alternatives.

Amending constitutions, bylaws, or statutes

In his 2021 book, “Anticipation, Sustainability, Futures and Human Extinction,” Bruce Tonn (also my mentor for this project) dedicates a chapter to creating new institutions and changing existing ones in order to increase foresight, prevent existential risks, and “ensure humanity’s journey into the distant future.” The chapter includes a study on the future-orientedness of national constitutions, suggestions for new “anticipatory institutions,” suggestions for constitutional amendments, and more. This is a great resource to get started and get a first longtermist view of constitutional amendment opportunities. My hope is that the approach Tonn took to studying the future-orientation of constitutions and proposing improvements could be applied to other institutions. Perhaps one can take similar approaches to changing the legal structure of many different forms of institutions, and that is why I included bylaws and statutes in the intervention’s title.

As Araújo & Koessler (2021) state, 41% of constitutions give (varying levels of) legal protection to future generations.[2] Despite that, Tonn (2021) states that, "of ... 100 reviewed constitutions, only two contained explicit provisions for institutions to advocate for future generations"; the Hungarian and Tunisian ones (p. 164; emphasis mine).[3] Constitutions could thus be amended to establish new institutions, not just protect future generations in the abstract. Example ideas are Tonn’s proposed national anticipatory institutions (NAI), the World Court of Generations (WCG), the InterGenerational Panel on Perpetual Obligations (IPPO), or others. For possible approaches to amending constitutions, see Tonn (2021, p. 165).

Capitalizing on “evidence leaders” to increase evidence uptake

“Evidence leaders” are likable, influential, and trustworthy individuals who publicly champion evidence use. Giving these individuals the spaces to champion their views, both within the institution and publicly, could be a way of broadly increasing evidence uptake. See this article from the healthcare sector.

Reviewing evidence: signing up employees to evidence newsletters, reminders of past findings, journal clubs

Some interventions in this category: (1) signing up employees to evidence newsletters which list important new findings in their relevant area, (2) having a similar newsletter, which “reminds” them about important past findings instead of just new ones, and (3) creating journal clubs in the organization: groups that meet and discuss new journal publications relevant to the organization’s work. This could serve as a motivator to read more relevant research, and would also be a way to slowly build stronger epistemic commons (shared knowledge).

Making a research database your employees’ default landing page

Making research databases employees’ landing pages changes the default option, which leverages their bias toward inaction to increase use of scientific research (Milkman et al., 2009a). As noted by one of the reviewers of this post, this seems like an intervention that could be helpful only in niche roles.

Providing internal financial incentives to modify behavior

This is a broad category of possible interventions, but is listed here as one because I think that this solution to suboptimal behavior isn’t considered seriously enough. A (somewhat niche) example of this would be offering small prizes or higher bonuses for those who list their epistemic status and use numerical probabilities when writing internal policy recommendations. See this article from the health sector exploring this, as cited in Breckon & Dodson (2016).

Incentivizing attendance of or hosting knowledge exchange events

EA-relevant examples of this are EAG(x) events. Seminars, conferences, workshops, and the like can increase coordination and cooperation between experts and facilitate knowledge exchange. This would also be a great place to give the aforementioned “evidence leaders” a platform. Organizations could host knowledge exchange events, or incentivize attendance at third-party events by promoting such events, covering transportation/accommodation costs, or outright requiring attendance of X number of such events per year.

Increasing diversity in institutions

Increasing different types of diversity (geographic, ethnic, racial, gender, seniority, etc.) in institutions and teams of workers may increase performance. After a very short amount of time digging into the research, it seems to me like diversity’s effects on performance are not as straightforward as I had previously assumed. See Elicit for a short list of relevant papers. Stauffer, M. has said that “While popular, nudges and diversity do not seem to adequately support political decision-making” (link). Nevertheless, even if diversity did not directly improve performance in certain areas (which is unclear) an institution might pursue diversity goals for many other reasons, such as increasing the representation of underrepresented groups in public office.

Altering incentives for joining organizations

This idea comes from a conversation I had with J.T. Stanley at EAGx Boston (2022). His idea was to change the very incentives for people to run for the US Congress or the presidency by establishing Public Office Enrichment Ceilings (POECs), which essentially set a hard limit on how much money and assets these individuals would be allowed to hold. Such a limit might shift the incentives people face when deciding whether to run for public office: those who run for power and more riches quit, while those dedicated to the public good remain. This is a simplification of J.T.'s idea, so if you want to delve deeper into it, refer to the following document.

That is just one way that the incentives for joining organizations could be changed. Open avenues of research would be what other incentive structures could be altered, in which organizations, and whether some such changes have been successful before.

Influencing organizational culture through storytelling

This idea comes from Superforecasting (Tetlock & Gardner, 2016). In chapter 10, Tetlock & Gardner recount how Helmuth von Moltke, a nineteenth-century Prussian field marshal, was partly responsible for influencing the Prussian army’s culture toward embracing critical and independent thinking by officers on-the-ground. Below is an excerpt from Superforecasting that should help me make my point.

The acceptance of criticism went beyond the classroom, and under extraordinary circumstances more than criticism was tolerated. In 1758, when Prussia’s King Frederick the Great battled Russian forces at Zorndorf, he sent a messenger to the youngest Prussian general, Friedrich Wilhelm von Seydlitz, who commanded a cavalry unit. “Attack,” the messenger said. Seydlitz refused. He felt the time wasn’t right and his forces would be wasted. The messenger left but later returned. Again he told Seydlitz the king wanted him to attack. Again Seydlitz refused. A third time the messenger returned and he warned Seydlitz that if he didn’t attack immediately the king would have his head. “Tell the King that after the battle my head is at his disposal,” Seydlitz responded, “but meanwhile I will make use of it.” Finally, when Seydlitz judged the time right, he attacked and turned the battle in Prussia’s favor. Frederick the Great congratulated his general and let him keep his head. This story, and others like it, notes Muth, “were collective cultural knowledge within the Prussian officer corps, recounted and retold countless times in an abundance of variations during official lectures, in the officer’s mess, or in the correspondence between comrades.” The fundamental message: think. If necessary, discuss your orders. Even criticize them. And if you absolutely must—and you better have a good reason—disobey them. (Tetlock & Gardner, 2016)

I hope this clarifies what I mean by this intervention. The inner workings of institutions are deeply shaped by the institutions’ culture, which is in turn shaped by many factors, among them the stories we tell and the “collective cultural knowledge” that we develop. How exactly to use storytelling to influence organizational culture goes beyond the scope of this article.

Core-values change

This idea comes from a document from the European Commission’s Joint Research Centre: “The principle of informing policy through evidence could be recognised as a key accompaniment to the principles of democracy and the rule of law.” (Mair et al., 2019). Attempting to shift an institution’s core values seems difficult, but potentially high-impact.

Decreasing partisanship and polarization (general)

Excessive partisanship and polarization seem unconducive to rational discourse and would probably hinder effective deliberation and decision-making. I have not had time to delve into what specific interventions exist that could help counter this issue; I am including this “general” area because I think it would be worthwhile for other researchers to pay more attention to it than I did. The same applies to all other interventions in this article labeled “general.”

Altering hiring practices (general)

As with the intervention above, I did not have time to go into this area, but this dimension of whole-institution governance interventions should not be neglected by others.

Decreasing corruption (general)

Same as above. I was very surprised that I did not come across interventions to decrease corruption in institutions while doing research, and even more surprised in hindsight that I did not go out of my way to look for them. Anecdotally, it seems to me that corruption may be the single biggest problem hindering the effectiveness of many governmental institutions in my home country (Argentina) as well as in many (if not most) other developing countries. Interventions that successfully decrease corruption in institutions, if feasible and cost-effective, could be one of the highest priorities for development-focused IIDM in the future. Therefore, if you are an IIDM-focused researcher, it might be worthwhile to take a much closer look into this general area than I did.

Group dynamics in decision-making

^Delphi method for aggregating judgment

[Click on the caret (^) above to be taken to the more in-depth explanation]

^Including “decision observers” into group deliberation (debiasing)

[Click on the caret (^) above to be taken to the more in-depth explanation]

Structured expert judgment techniques (group)

These are “techniques for using expert judgment as scientific data” (Coleson & Cooke, 2017). Examples include the Delphi method, the Roger Cooke method, and other techniques. An initial look into each of them did not give me enough insight to tell whether I should make an entry for each or group them all into one. I decided to prioritize Delphi because I saw lots of compelling evidence in its favor. If you want to research the Roger Cooke method, see the following resources.

Surveying experts and using performance-based aggregations of their judgments - Coherentizing and aggregating group judgments

This is similar to the Delphi method, in that it sources many experts’ judgments, though it lacks the iterative process of updating judgments to reach consensus. This is also related to the intervention “using post-analytic techniques” further down in this document, where you can take a look at the evidence backing coherentization and aggregation of expert judgment. Basically, the idea is to survey experts on, for example, the likelihood that X, Y, or Z will happen. You then might decrease the weights of the expert judgments that do not conform to the axioms of probability (e.g., the probabilities of mutually exclusive events exhaustive of the sample space don’t add up to one). Finally, you would take the mean of these weighted judgments to come to a “final say” on the matter. This is a huge oversimplification of the process, so please refer to the ^Using post-analytic techniques section below, where evidence in favor of this approach is given.
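The survey, down-weight, and average steps described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure used in the literature cited in the post: the inverse-penalty weighting formula, the `penalty` parameter, the rescaling used to “coherentize” each judgment, and all function names are assumptions made for this example.

```python
from typing import List


def incoherence(judgment: List[float]) -> float:
    """How far the probabilities are from summing to one (0 means coherent)."""
    return abs(sum(judgment) - 1.0)


def coherentize(judgment: List[float]) -> List[float]:
    """Rescale probabilities so they sum to one (a simple, assumed stand-in)."""
    total = sum(judgment)
    if total <= 0:
        raise ValueError("probabilities must have a positive sum")
    return [p / total for p in judgment]


def aggregate(judgments: List[List[float]], penalty: float = 5.0) -> List[float]:
    """Weighted mean of coherentized judgments.

    Each expert gives probabilities for the same mutually exclusive and
    exhaustive outcomes. Less coherent experts get lower weight; the
    weighting formula is hypothetical, chosen only for illustration.
    """
    if not judgments:
        raise ValueError("need at least one expert judgment")
    n_outcomes = len(judgments[0])
    if any(len(j) != n_outcomes for j in judgments):
        raise ValueError("all experts must rate the same outcomes")

    weights = [1.0 / (1.0 + penalty * incoherence(j)) for j in judgments]
    coherent = [coherentize(j) for j in judgments]
    total_weight = sum(weights)
    return [
        sum(w * c[i] for w, c in zip(weights, coherent)) / total_weight
        for i in range(n_outcomes)
    ]


# Three experts rate outcomes X, Y, and Z; the second expert's
# probabilities sum to 1.5, so that judgment counts for less.
experts = [
    [0.6, 0.3, 0.1],
    [0.5, 0.5, 0.5],
    [0.2, 0.5, 0.3],
]
pooled = aggregate(experts)
```

Because every judgment is rescaled before averaging, the pooled probabilities themselves sum to one, which is one reason to coherentize before aggregating rather than after.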

Developing and using consensus-building discussion platforms

Taiwan has experimented with discussion platforms designed to build consensus instead of enhancing disagreements. Developing social media platforms (or changing existing ones) designed with the goal of building common ground, amplifying nuanced opinions, and avoiding echo chambers could be one way of decreasing polarization. This does not seem very tractable to me, but if you are interested check out the following Wired article on Taiwan’s so-called “democracy experiments.”

Facilitating group deliberation

Group deliberation carries the risk of groupthink. Perspective sharing and constructive criticism are two ways to avoid this (Kerr & Tindale, 2004, as cited in Stauffer et al., 2021). See Stauffer et al. (2021) for more relevant resources. Even in the absence of groupthink, how can a group conversation be made more effective? See also ^Including “decision observers” into group deliberation (debiasing) in this same document.

Subdividing groups so that they don’t surpass Dunbar’s number (speculative)

Dunbar’s number refers to a measure of the limit of social connections that a person can hold at any one time, usually in the range of 100-200 people. The research behind this seems speculative, and has been challenged by Lindenfors (2021), among others. The reasoning behind this intervention is to limit the number of people in any one office to some number (maybe Dunbar’s, maybe another), so that people’s capacity to build relationships within the (sub-)organization is not exceeded. It seems intuitively correct that an office with 1000 people would not be able to accommodate the same levels of cooperation/coordination as one with 50 people. The main question for others to investigate is whether this effect is big enough to warrant advising institutions to limit their offices to X number of people. For examples of this intervention being applied, see this Wikipedia article, under “popularization.”
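One rough way to make the intuition about office size concrete is to count the possible pairwise relationships among n people. This assumes, purely for illustration, that coordination load scales with the number of pairs; that assumption is mine and is not a claim from the Dunbar literature.

$$
\binom{n}{2} = \frac{n(n-1)}{2}, \qquad \binom{50}{2} = 1{,}225, \qquad \binom{1000}{2} = 499{,}500
$$

Under that assumption, a 20-fold increase in headcount produces roughly a 400-fold increase in possible pairings.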

Restructuring of internal voting systems / electoral systems (general)

These topics definitely deserve more attention than I had time to dedicate to them. Since I did not go into them in-depth, I am suspending judgment as to how to subdivide the systems. For more information, see the following resources on quadratic voting, social choice theory, electoral reform, and the Center for Election Science (CES). For a foundational textbook in decision theory, including social choice theory, see Resnik (1987). This seems like a fruitful area of research as well as advocacy.

How decisions (at all levels) are made

Using the Decision Quality scale

The Decision Quality (DQ) scale involves six steps: setting the right frame, considering alternatives, gathering meaningful data, clarifying values and tradeoffs, using logical reasoning, and committing to action. These steps seem to be in line with other normative approaches that I have learned throughout my Behavioral Decision Sciences studies at Brown University, but there may be other, better frameworks for evaluating decision quality. The advantage of the DQ scale is that it is process-focused, which means that using it can provide more clarity on which aspects of the decision-making process are lacking while decisions are being made, not after they have been made.

Using Causal Decision Diagrams (CDDs)

Dr. Lorien Pratt advocates for the use of CDDs, which she introduces in a series of posts on her website. I have done less reading on these than on the Decision Quality scale above, but the resources on Pratt’s website seem worth investigating.

Improving choice architecture, nudging (general)

This article from The Decision Lab gives a better explanation than I could here. This is a group of interventions (hence the label “general”). The key insight of nudge theory is that the way in which options are presented (the choice architecture) matters. Without restricting freedom of choice, it is possible to nudge people into choosing certain options instead of others. This is a uniquely interesting intervention, in that it can be used not only to increase the technical quality of decisions (the focus of this article), but also to better align institutions’ decisions with altruistic goals. See an example for increasing participation in organ donation programs. Similarly, changing default and/or recommended options for employees could shift outcomes to be more aligned with the choice architect’s preferences. See also libertarian paternalism and a 2022 paper challenging the evidence behind nudging: Maier et al., 2022.

Establishing or improving Standard Operating Procedures (SOP)

See this website explaining SOPs and their importance. Especially for big institutions that follow SOPs closely, improving these procedures seems worthwhile. SOPs may be appropriate in some circumstances but overly rigid and bureaucratic in others. Relatedly, see The Checklist Manifesto, a book arguing in favor of the use of checklists in business and other contexts to increase adherence to best practices. See also the pointing and calling method, which would synergize well with SOPs and checklists in certain, more specific contexts.

Using DMDU Methods*

*This is a group of interventions. They are grouped together here due to lack of time to investigate and categorize each individually.

The DMDU (Decision-Making under Deep Uncertainties) methods here are taken directly from Bonjean Stanton & Roelich (2021), and are: Robust Decision Making (RDM), Dynamic Adaptive Planning (DAP), Dynamic Adaptive Policy Pathways (DAPP), Info-Gap Theory (IG) and Engineering Options Analysis (EOA).

Stauffer et al. (2021) also endorsed Multi-Criteria Decision Analysis (MCDA), DAP, and DOA as “decision-making under uncertainty” methods.

See also the DMDU Society.

Using evidence and data to improve decisions

^Employing simple linear models (e.g., for college admissions)

[Click on the caret (^) above to be taken to the more in-depth explanation]

^Establishing rapid research services: evidence hotlines and helpdesks

[Click on the caret (^) above to be taken to the more in-depth explanation]

Running information literacy (IL) workshops

The main idea is to provide the relevant workers at an organization with the necessary knowledge to find credible information and evidence on their own, analyze it critically, and communicate it with high fidelity. I have the intuition that, for many people, a short, well-run workshop could dramatically increase their ability to find and use credible information online. See Smart et al. (2016) for an example of an IL program in the health sector.

Requiring explicit evidence for policy recommendations/general proposals

The idea is to require scientific evidence to be explicitly cited to endorse all or most aspects of policy proposals, if such evidence is available. I am not at all sure to what extent this is already the case in different countries and institutions.

Mandating public calls for evidence submissions before discussing policies

The European Commission’s Joint Research Centre (JRC) published an article (Mair et al., 2019) which, among other things, considers “making a public call for evidence at the beginning of the [policymaking] process” (p. 64). This initial phase of evidence collection before discussion could increase the overlap between people’s epistemic commons, and decrease the likelihood that disagreements regarding the goodness of a proposal stem from differences in people’s factual knowledge.

Although this intervention comes from the policymaking space, it could just as well be used in other contexts. For example, one could require an information-sharing/pooling phase prior to group deliberation when discussing any big decisions in any type of institution.

Decision-making and data to improve decisions

^Other Decision Support Systems (DSS)

[Click on the caret (^) above to be taken to the more in-depth explanation]

Using the EAST Framework for behavioral interventions

This comes from the Behavioral Insights Team’s (BIT) Behavioral Government report. EAST: Easy, Attractive, Social, and Timely. These are the characteristics that a behavior-change intervention should have in order to be most effective, says the BIT. See a primer here, and consider reading the Behavioral Government report linked above.

Increasing policymaker-researcher collaboration, co-defining research questions

One theory of why policymakers don’t always use sufficient scientific evidence in drafting policies is that researchers are not producing policy-relevant research. Mair et al. (2019) mentioned that, in order to make research more policy-relevant, research questions could be co-defined by researchers and policymakers. This is just one way of increasing policymaker-researcher collaboration. I remember having read a paper which found no increase in evidence uptake by policymakers after co-defining research questions, but I could not find it among my notes.

Improving the science-policy interface

The goal is to increase the degree to which policymakers privilege scientific information over other, less-reliable sources of information. Some barriers to this happening are “lack of access to and relevance of research, the mismatch between research timelines and policy deadlines, and the lack of funding schemes to support policy-relevant research projects” (Oliver et al., 2014, as cited in Stauffer et al., 2021). Two ways of addressing these issues could be: (1) funding and training interface actors to act as facilitators of information exchange between researchers and policymakers, and (2) funding timely and policy-relevant evidence syntheses (see the intervention above and the intervention on rapid research services in this post for more information).

Monitoring and improving ongoing performance

^Institutionalizing the recording of quantitative judgmental predictions of advisors/analysts and decision-makers

[Click on the caret (^) above to be taken to the more in-depth explanation]

^Using post-analytic techniques

[Click on the caret (^) above to be taken to the more in-depth explanation]

Facilitating anonymous 360-degree feedback among employees

See this article for more context. The idea is to create the opportunity for employees who work closely together to provide anonymous feedback on each other’s performance (broadly defined). This feedback could be given directly from employee to employee, or summarized by a third party. The article linked above lists potential benefits and drawbacks, and it is not clear to me that the benefits outweigh the potential risks. I am including this here because it is worth investigating different mechanisms for providing feedback within institutions.

Interactions between institutions and across institutional ecosystems

^Creating and funding evidence clearinghouses for IIDM

[Click on the caret (^) above to be taken to the more in-depth explanation]

Increasing mechanism design literacy (training)

See Maskin (2019) for more context. In broad terms, mechanism design consists of identifying a desired outcome and engineering the mechanisms needed to bring about that outcome. In the context of IIDM, this could mean two things. First, mechanism design could help us envisage new future institutions and work backward to determine what exactly they would have to be like to fulfill their purpose. Second, mechanism design concepts could be helpful tools in reforming existing institutions, by envisaging what changes we want to happen and then working backward to determine how we should get there.

Note that this intervention could have been categorized under “Individual-level judgment and decision-making.” This depends on whether the training itself or the institutional change is emphasized. See On categorization for more details.

Training systems thinking

See Arnold & Wade (2015) for a nuanced definition of systems thinking. Systems thinking training refers to helping people build better mental models of systems (regularly interacting or interdependent groups of items forming a unified whole[4]). This might include helping people identify system properties, better generate mental models, appreciate feedback loops, interdependence, etc. (Stauffer et al., 2021).

Tonn (2021) states that “improving systems literacy worldwide could have profound positive impacts on all levels of decision-making” (p. 157).

The same note about the categorization of the intervention above applies here.

Improving knowledge about the future

^Providing “Superforecasting” training to decision-makers

[Click on the caret (^) above to be taken to the more in-depth explanation]

^Improving communication of uncertainty

[Click on the caret (^) above to be taken to the more in-depth explanation]

Creating internal prediction markets or forecasting competitions

See this Forum post on prediction markets. The idea of this intervention is to create small-scale prediction markets within the same institution. This would involve some system through which people make forecasts on decision-relevant outcomes, and either (a) some reward system rewards those who make the most accurate predictions, or (b) people are allowed to buy and sell shares of outcomes (hence the ‘market’ aspect).

As I see them, IIDM interventions should focus first and foremost on what the institution itself can change to improve itself, not on what third parties should do that would benefit the institution (since that kind of change is harder to institute). An institution, especially a large one, could always create an internal prediction market or prediction competition to crowdsource forecasts. However, this does not mean that decision-makers should ignore “external,” i.e., public, prediction markets: whenever possible, they should also take these into consideration when gauging the probabilities of future events.
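Option (a), a reward system for the most accurate predictions, needs some rule for measuring accuracy. A minimal sketch of an internal forecasting leaderboard follows, assuming binary questions and the Brier score (the squared error between the stated probability and the outcome) as the scoring rule. The post does not specify a scoring rule, and the forecaster names, question IDs, and function names below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (forecaster, question_id, stated probability that the event happens)
Forecast = Tuple[str, str, float]


def brier_score(probability: float, outcome: bool) -> float:
    """Squared error of a probability forecast; 0 is perfect, 1 is worst."""
    return (probability - (1.0 if outcome else 0.0)) ** 2


def leaderboard(
    forecasts: List[Forecast], outcomes: Dict[str, bool]
) -> List[Tuple[str, float]]:
    """Rank forecasters by mean Brier score on resolved questions (lower is better)."""
    scores = defaultdict(list)
    for name, question, probability in forecasts:
        if question in outcomes:  # unresolved questions are skipped
            scores[name].append(brier_score(probability, outcomes[question]))
    means = [(name, sum(s) / len(s)) for name, s in scores.items()]
    return sorted(means, key=lambda item: item[1])


# Hypothetical data: two employees forecast two decision-relevant questions.
forecasts = [
    ("ana", "q1", 0.9),
    ("ana", "q2", 0.2),
    ("ben", "q1", 0.6),
    ("ben", "q2", 0.5),
]
outcomes = {"q1": True, "q2": False}
ranking = leaderboard(forecasts, outcomes)
```

Here the more confident and correct forecaster ranks first. A real competition would also need to decide how to treat forecasters who answer only a few questions, which this sketch ignores.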

Engaging in premortem and backcasting during group deliberations

Premortem analysis involves the following hypothetical: “assume your plan has failed. What happened?” This seems powerful to me for two reasons. One, you are forced to think through several potential failure modes of your plans; and two, explicitly engaging in premortem analysis as a team could take pressure off teammates to conform, thus decreasing the risk of groupthink. See this HBR article for more context.

Backcasting: As this Wikipedia article states, backcasting asks: “if we want to attain a certain goal, what actions must be taken to get there?” I am sure you can see how this relates to premortem analysis, and also to mechanism design (in this same post). Since I did not prioritize these interventions, I do not know what empirical evidence exists behind them, but they seem promising at face value.

Forecasting techniques: using superforecasters

An interesting idea is deliberately finding superforecasters (the top ~2% of forecasters, as measured by their demonstrated accuracy in predicting future events) and commissioning them to forecast outcomes that are decision-relevant for the institution. Other forecasting techniques are already on this list, like creating prediction markets, probabilistic calibration training, and more.

See this CSER conference recording, around the 1 hour 9 minute mark, for other forecasting and strategic foresight techniques. See also the book Superforecasting (Tetlock & Gardner, 2016).

Foresight techniques*

*This is a group of interventions. They are grouped together here due to lack of time to investigate and categorize each individually.

Some potentially valuable foresight techniques are: gaming and roleplaying (serious games), scenario construction, horizon scanning, red-teaming, analysis of unanticipated unintended consequences (UUCs), analysis of unknown unknowns (UKUKs), singular chains of events scenarios (SCES), and other tools shared with forecasting (prediction markets, superforecasting).

I think that foresight techniques might be unfairly disregarded or discounted in comparison to forecasting techniques in EA circles, although there might be legitimate reasons for this. So if you want to broaden the scope of IIDM, and believe that accurately predicting (and preparing for) the future matters, the list of resources below is one of the first places you might want to look.

Resources to investigate further:

- The book “Foundations of Futures Studies: Vol. 1” was recommended to me by a strategic foresight expert as the best introductory resource on foresight techniques.

- You can also check out Tonn (2021) for a global perspective on using foresight techniques to tackle extinction threats. This is a particularly good resource to get started with UUC, UKUK, and SCES analysis.

- See also this document: the “Futures Toolkit” published by the Government Office for Science in the UK Government for a more complete list of foresight techniques and a much lengthier discussion of each.

- See also this document by Shallowe et al. (2020) on public sector strategic foresight. It was also recommended by the strategic foresight expert I spoke with.

- Finally, here is a paper (Habegger, 2010) on past implementations of public sector strategic foresight.

Individual-level judgment and decision-making

^Rationality training for individuals

[Click on the caret (^) above to be taken to the more in-depth explanation]

Using Structured Analytic Techniques (SATs)*

*This is a group of interventions. They are grouped together here due to lack of time to investigate and categorize each individually.

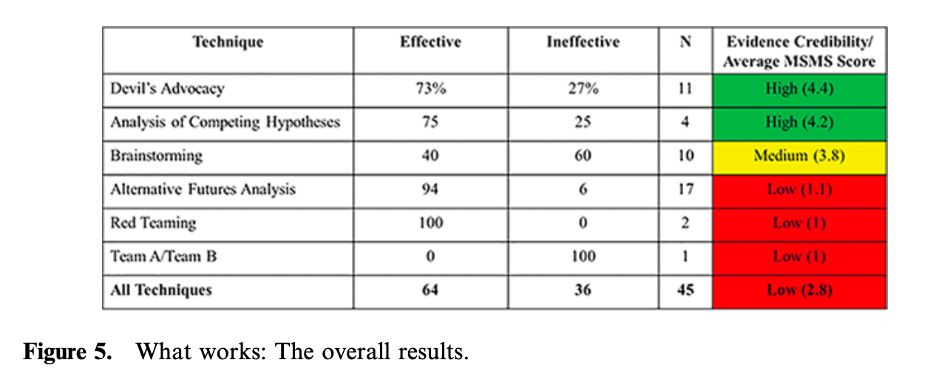

The list of potentially promising SATs below comes from Artner et al. (2016), who define SATs as “methods for organizing and stimulating thinking about intelligence problems.”

Here is a tentative list of some potentially promising SATs: Key Assumptions Check, Quality of Information Check, Indicators or Signposts of Change, Analysis of Competing Hypotheses, Devil’s Advocacy, Team A/Team B, High-Impact Low-Probability Analysis, “What-If?” Analysis, Brainstorming, Outside-In Thinking, Red Team Analysis, Alternative Futures Analysis.

Mandel et al. (2019) note that although the number of SATs has skyrocketed, attempts to prove their efficacy in controlled environments have not kept up. SATs are too often assumed to be good prima facie, so carefully testing them is one way you could contribute to the IIDM body of research.

See below Artner et al. (2016)’s results on the existing evidence for six of the 12 SATs named above (the other six did not have evidence to be analyzed):

Determining goals and strategies to achieve them

Creating consensus-based staggered planning for long-term goals

This idea comes from this Forum post. The author writes “the consensus-based staggered planning approach is extremely common and can be run from the level of a small organisation to the level of an entire country and my personal experience of this kind of work makes me optimistic it can deliver useful results.” It is not entirely clear to me what a “consensus-based staggered plan” would be from that post, but it might be worth exploring this further.

Increasing the use of testable theories of change (ToC)

According to theoryofchange.org, a “Theory of Change is essentially a comprehensive description and illustration of how and why a desired change is expected to happen in a particular context.”

A crucial advantage of creating thought-out theories of change is that you should have a testable hypothesis of how you expect a desired result to come about; a testable idea that lays bare the precise mechanisms through which you expect change to happen, which others can point to and critique. See other purported benefits of ToCs here.

For related interventions on this list, search for “backcasting” and “mechanism design.”

The Effective Institutions Project (EIP) published their ToC, which you can see here.

Employ strategic planning techniques*

*This is a group of interventions. They are grouped together here due to lack of time to investigate and categorize each individually.

Strategic planning refers, broadly, to the process through which organizations’ leaders define the organization’s goals and general direction. Some examples of strategic planning techniques are SWOT (Strengths, Weaknesses, Opportunities, and Threats) analysis, Porter’s Five Forces, and more. These seem especially prevalent in the business world, but I have no clue as to their effectiveness — this might be worth investigating.

Fostering moral reflection (general)

This means “motivating actors to reflect on the future and expand their circle of compassion to future lives” (Stauffer et al., 2021; p. 20). The cited paper is the only one in which I encountered this idea. It is worth noting that, although the paper focuses on longtermist policymaking, the idea of fostering moral reflection could be extended to encompass not only future lives, but also those of people across the planet and nonhuman animals. This is one of the few interventions on this list that focus on value alignment instead of increasing the technical quality of institutions’ decisions.

List of prioritized interventions

I did not have enough time to write polished descriptions for all interventions on the list. I therefore chose 11 “prioritized interventions,” marked with a caret (^), and wrote lengthier descriptions of them. They are listed below.

I chose which interventions to prioritize based on my subjective judgment of their potential, ease of application, or quantity of existing relevant research.

These descriptions are not meant to be a review of the intervention’s efficacy, but rather a way for the reader to better understand what is meant by the intervention’s title, and to provide a starting point of a couple of papers/resources for the reader to start his or her research.

The format of most of these is: 1) an explanation of the intervention, 2) examples of the intervention being implemented (when possible), 3) remaining questions that I had after my relatively shallow research, and 4) resources for you to investigate the intervention further.

^ Delphi method for aggregating judgment

The Delphi method, or Delphi technique, is a structured approach to extracting information from a group of people, typically experts in some area. It is used as a foresight/forecasting technique, as a tool for building consensus, and for getting information in situations where data are lacking. It probably is, as far as my research goes, the most popular method on the list (by that I mean, the most concrete “intervention” that I’ve seen endorsed over and over by different authors).

Basically, the method consists of gathering a group of experts who are willing to participate (online), and guiding them through two or more rounds of surveys in which the participants share their views and the reasons for them. For forecasting, the views are quantitative probability estimates; otherwise they could be, for example, levels of agreement with a statement on a scale. Between rounds, an anonymized summary of everyone’s responses from the last round is shared with the participants, who can make and answer comments. The idea is that with each consecutive round, opinions will tend to converge as respondents adjust their responses in light of other people’s reasoning. Hsu & Sandford (2007), as well as others (see Duffield, 1993; Breckon & Dodson, 2016), claim that it is an effective tool for building consensus.
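As a rough illustration of the between-round feedback step, here is a minimal Python sketch. It assumes that each expert submits a single probability estimate per round and that the anonymized summary consists of the median and interquartile range; the function names, the example numbers, and the convergence threshold are all hypothetical and not taken from any of the cited papers.

```python
from statistics import median, quantiles


def summarize_round(estimates):
    """Build the anonymized summary shared with participants between rounds."""
    # Quartiles of the submitted estimates (no names attached to any value).
    q1, _, q3 = quantiles(estimates, n=4, method="inclusive")
    return {"n": len(estimates), "median": median(estimates), "iqr": (q1, q3)}


def has_converged(estimates, max_iqr_width=0.10):
    """Hypothetical stopping rule: stop once the middle 50% of views is narrow."""
    q1, q3 = summarize_round(estimates)["iqr"]
    return (q3 - q1) <= max_iqr_width


# Made-up probability estimates from five experts over two rounds.
round_1 = [0.10, 0.30, 0.50, 0.70, 0.90]
round_2 = [0.40, 0.42, 0.45, 0.48, 0.50]

for label, estimates in (("round 1", round_1), ("round 2", round_2)):
    print(label, summarize_round(estimates), "converged:", has_converged(estimates))
```

A real Delphi panel would also circulate the participants’ written reasoning, which this sketch leaves out.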

The IDEA protocol: the IDEA (investigate, discuss, estimate, and aggregate) protocol is a modified Delphi method, which some have claimed is the most effective form of Delphi, at least for forecasting purposes.[5] I highly recommend reading Hemming et al. (2017) to get a sense of how the IDEA protocol would be applied in practice, and how it might differ from a generic Delphi method.

Resources:

- https://www.investopedia.com/terms/d/delphi-method.asp A quick and simple introduction

- http://foresight-platform.eu/community/forlearn/how-to-do-foresight/methods/classical-delphi/ - (For a general introduction as well as more nuanced comparison of pros and cons).

- https://ec.europa.eu/consultation/resources/documents/Delphi_Guide.pdf A guide from the EU Commission on running a Delphi panel. It could give a good idea of how to run logistics for it.

- Linstone, H. A., & Turoff, M. (Eds.). (1975). The delphi method (pp. 3-12). Reading, MA: Addison-Wesley. - (a very widely cited book about the Delphi method, PDF available on Google Scholar.)

- Okoli, C., & Pawlowski, S. D. (2004). The Delphi method as a research tool: an example, design considerations and applications. Information & management, 42(1), 15-29. - (a widely cited paper of Delphi’s applications for research.)

- If I remember correctly, I was told that the Effective Institutions Project has used Delphi panels before. If you’re interested in running one yourself, consider reaching out to them to learn more about good methodology.

- Hemming, V., Burgman, M., Hanea, A., McBride, M. and Wintle, B., 2017. A practical guide to structured expert elicitation using the IDEA protocol. Methods in Ecology and Evolution, 9(1), pp.169-180.

Things I’ve read which mention Delphi:

- Breckon, J., & Dodson, J. (2016). Using evidence: What works?. [LINK]

- Hilton, S., 2021. A practical guide to long-term planning – and suggestions for longtermism. Effective Altruism Forum. Available at: [LINK]

- Stauffer, M., Seifert, K., Ammann, N., & Snoeij, J. P. Policymaking for the Long-term Future: Improving Institutional Fit. [LINK]

- Cambridge Conference on Catastrophic Risk 2022 - DAY 1 [Foresight techniques].[LINK]

^ Including “decision observers” into group deliberation (debiasing)

This means assigning someone familiar with common biases to “search for diagnostic signs that could indicate, in real time, that a group’s work is being affected by one or several familiar biases.” It could be a coworker, or an outside party. See Kahneman et al. (2021) for a checklist that decision observers could use to structure their approach.

The reasons why I consider this a potentially promising intervention are not transparent to me, and I have not had time to go more deeply into the literature. Possible things to compare this intervention to are devil’s advocacy and Team A/Team B exercises.

An important quote from Artner et al. (2016): “Academic studies have found that the use of the devil’s advocate technique does not necessarily promote genuine reexamination of assumptions and, in some cases, heightens confidence in preferred hypotheses.” Decision observers and devil’s advocate seem quite different, but it would be interesting to see if rigorous investigations into the adoption of this intervention would yield similarly disappointing results.

Resources:

- Kahneman, D., Sibony, O. and Sunstein, C., 2021. Noise. New York: Little, Brown Spark. [LINK] - (This is where I got the idea from.)

- Artner et al. (2016). Assessing the value of SATs in the US intelligence community. RAND Corporation. DOI: https://doi.org/10.7249/RR1408 [LINK] - (This does not deal directly with decision observers.)

- Schwenk, C., 1984. Devil's Advocacy in Managerial Decision-Making. DOI: http://dx.doi.org/10.1111/j.1467-6486.1984.tb00229.x - (I have not read this paper because of time constraints.)

Questions: how exactly are decision observance, devil’s advocacy, and Team A/Team B exercises different? In which ways might they aid in debiasing and increasing decision quality? What type of expertise should a decision observer have? Have any RCTs testing decision observance procedures already been run?

^ Employing simple linear models

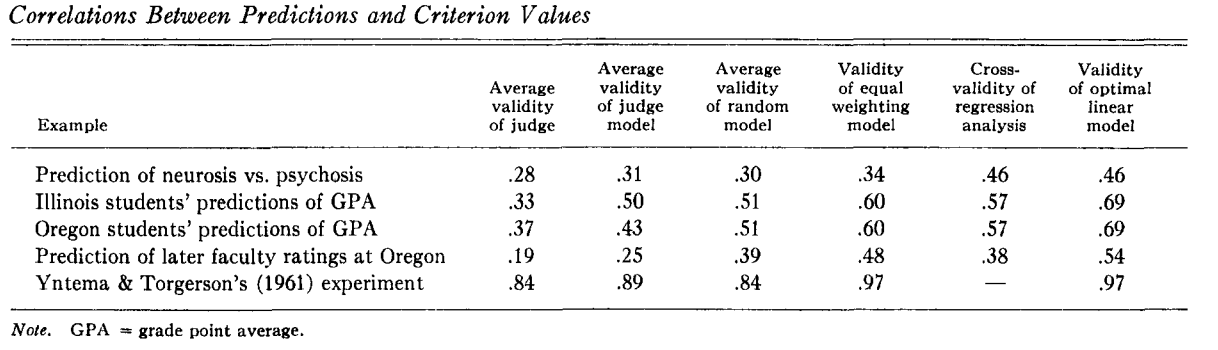

In his influential paper, “The robust beauty of improper linear models in decision making,” Dawes (1979) concludes that even improper linear models[6] consistently outperform human decision-makers in making predictions under certain conditions[7] (and can thus aid decision-making). Dawes (1979, 1971) showed that linear models consistently outperform admissions officers in predicting graduate school applicants’ future performance. But linear models (and statistical predictors in general) can be used in a broad array of situations. For example, after someone used marital (un)happiness as an example of something that Dawes wouldn’t be able to predict with linear models, Dawes (1979) created a very simple predictor: “rate of lovemaking minus rate of fighting” (ibid.).[8] This metric turned out to be an (astonishingly) accurate predictor, as it was correlated with marital (un)happiness at .40 (p < 0.05) (Howard & Dawes, 1976). A later replication study found a correlation of .81 (p. 0.01) (Edwards and Edwards, 1977).

Dawes (1979) writes that “people are bad at integrating information from diverse and incomparable sources. Proper linear models are good at such integration when the predictions have a conditionally monotone relationship to the criterion.”[9] That is the reason why proper linear models, designed to optimize the weights assigned to each numerical criterion, seem to outperform human decision-makers.

Below is a results table from Dawes (ibid.) comparing the reliability of (among others) human judges (1st) and an equal-weight (improper) linear model (4th). Notable result: even the linear model with random weights (3rd) appears to outperform human judgment for the selected experiments.

Examples: using a linear model based on GPA (grade point average) and GRE (Graduate Record Examination) scores as one of the main criteria to make admissions decisions at higher education institutions. Other numerical criteria could be added. But remember: linear models could be used for all sorts of other decisions.
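To make the admissions example concrete, here is a minimal Python sketch of an equal-weight (improper) linear model: each predictor is standardized and the resulting z-scores are simply added up with unit weights. The applicant data, the function names, and the choice of only two predictors are assumptions made for illustration; they are not taken from Dawes’ papers.

```python
from statistics import mean, pstdev


def standardize(values):
    """Convert raw values to z-scores so predictors on different scales are comparable."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def unit_weight_scores(predictors):
    """Equal-weight linear model: sum the z-scores of every predictor per candidate.

    `predictors` maps a predictor name to a list with one value per candidate.
    All predictors are assumed to be oriented so that higher means better.
    """
    z_columns = [standardize(column) for column in predictors.values()]
    return [sum(z_row) for z_row in zip(*z_columns)]


# Hypothetical applicants (index 0 to 3) with made-up GPA and GRE values.
applicants = {
    "gpa": [3.9, 3.2, 3.6, 2.8],
    "gre": [165, 150, 160, 155],
}

scores = unit_weight_scores(applicants)
# Candidate indices ordered from highest to lowest combined score.
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
print(ranking)
```

A proper linear model would instead estimate the weights from past data (for example, by regression); the sketch deliberately skips that step.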

Resources:

- Dawes, R. (1979). The robust beauty of improper linear models in decision making. American Psychologist, 34(7), 571-582. doi: 10.1037/0003-066x.34.7.571

- Dawes, R. (1971). A case study of graduate admissions: Application of three principles of human decision making. American Psychologist, 26(2), 180-188. doi: 10.1037/h0030868

- Dawes, R., & Corrigan, B. (1974). Linear models in decision making. Psychological Bulletin, 81(2), 95-106. doi: 10.1037/h0037613

Questions: I have only focused on Dawes’ work — what other work is there validating his findings and expanding on possible uses of simple linear models for decision-making? What are some ethical implications of relying too heavily on these simple predictor models? In which environments are simple linear models inadequate for aiding in decision-making?

^ Establishing rapid research services: evidence hotlines and helpdesks

Even though I listed it as a promising intervention (^), I did not go in depth into it. If you want the same introduction to it that I got, I recommend reading “Using Evidence: What Works?” (Breckon & Dodson, 2016; pp. 13-14).

As I understand it, an evidence hotline or evidence helpdesk would be a branch of an institution (or a separate organization) that provides so-called rapid-research services, like “brief literature review[s], including summaries and comments from subject experts” (ibid.). Its clients would be, for example, policymakers who need to make a decision and do not have the time (or maybe the expertise) to dive into the relevant literature themselves and extract conclusions from it.

I think this could be a very promising way of creating a more robust policy-research interface. Calling and making a request for a summary of the evidence on [X issue] is a very low-personal-commitment way of gathering evidence, and seems like a good solution for busy policymakers and other professionals.

Examples: an existing example according to Breckon & Dodson (2016) is the Health and Education Advice Resource Team (HEART), and possibly the Public Policy Institute for Wales (PPIW), which became a part of the Wales Centre for Public Policy (WCPP) in 2017.

Resources:

- Breckon & Dodson (2016; pp. 13-14) [LINK] - this is where I got the idea for this intervention.

- Breckon & Dodson (2016) cite Chambers (2011), but I couldn’t easily find the paper they referred to.

- The Health and Education Advice Resource Team (HEART) website

- The Wales Centre for Public Policy (WCPP) website might be a good resource, although I have not explored it, and I am not familiar with their work or whether they still provide on-demand research services.

- This Forum post, which mentions evidence hotlines almost in passing, but is worth reading for all of its valuable commentary.

Questions: Who should be responsible for the expenses of these rapid research services? What other interventions should be adopted along with establishing these hotlines, so that they are actually used? (see others in this same category, “using evidence and data”). Where have these services been established? Are there any case studies on what changed after their adoption?

^ Other Decision Support Systems (DSS)

Decision Support Systems (DSS) or Decision Support Tools (DST) are “information system[s] that supports business or organizational decision-making activities” (Wikipedia).

The definition is broad and possible applications even broader. Here I list two DSSs that I’ve encountered during my research, which could give a better idea of how useful they might prove to be. It looks from the outside like DSSs would have to be tailored to the specific institution and specific application to be useful, although I have not looked into this enough to be able to tell.

- RAND’s DST for the Puerto Rican Government [LINK], the “Puerto Rico Economic and Disaster Recovery Plan Decision Support Tool.” I encourage you to use that link, and try the tool out for yourself (it’s the thing that looks like an announcement on that page, but it’s not). For example, go to the “courses of action” tab, and click through the possible options. This is, specifically, a DST to aid in disaster recovery, so it is of particular interest to those working on Global Catastrophic Risks (GCRs) and existential risk mitigation at an institutional level. I found this DST on this EA Forum post.

- Modeling the Early Life-Course (MELC), a DST for the New Zealand Government to model how different policies might affect life outcomes for children. For more information read the following paper (Milne, 2016).

If at all possible, General Decision Support Systems (GDSSs) (I just made this up) could be a powerful generalist tool. Perhaps they could guide the decision-maker through a hypothesis brainstorming process (or alternatives generation process), and/or an Analysis of Competing Hypotheses (ACH), a backcasting exercise, and/or pre-mortem analysis, and/or a “considering the opposite” exercise in order to debias judgment. An evidence-based and effective GDSS for individual or institutional decision-making could be a highly valuable tool (and again, I have not had time to look and see if one exists. The closest I’ve found is this tool: a Multi-Criteria Decision Analysis aid).

Resources:

- https://focus-grp.com/decision-support-tools/ - A website which explains what DSTs are.

- https://www.decisionsupporttools.com/ - I have not had time to go in-depth into this website, but it seems like it could be really valuable.

- Both DST examples linked in the above section.

Questions: Are GDSSs possible? Do any already exist? What are some common properties to many DSSs/DSTs used by institutions? Does using DSSs improve decision quality?

^ Institutionalizing the recording of quantitative judgmental predictions of advisors/analysts and decision-makers

This comes almost word-for-word from someone’s contribution to the IIDM Interventions Google Doc that I shared with the IIDM Slack group. If I understood the idea correctly, it involves keeping a record of forecasts made by and used by an institution, and also keeping track of the real outcomes, so that, with time, one can judge the accuracy of everyone’s predictions.

In case this confused you, here is an example: say the FBI (Federal Bureau of Investigation) relies on only three advisors to determine the likelihood of unknown events (e.g., how likely is it that a terrorist is hiding in this building?). As the three of them make precise quantitative predictions (forecasts) about a large number of events, both the forecasts and the results of the events once known (i.e., the terrorist was/was not there) are recorded, so that one can determine the accuracy or level of calibration of each advisor with something like Brier scores. This and other related ideas are discussed in Tetlock & Gardner’s “Superforecasting” (2015).
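The record-keeping described above can be sketched in a few lines of Python. This is a minimal illustration, assuming binary events and the common form of the Brier score (the mean squared difference between the forecast probability and the 0/1 outcome, where 0 is perfect and always answering 0.5 scores 0.25). The advisor names and all numbers are made up.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need one outcome per forecast, and at least one forecast")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical institutional record: (forecast probability, what actually happened).
records = {
    "advisor_a": [(0.9, 1), (0.2, 0), (0.7, 1), (0.1, 0)],
    "advisor_b": [(0.6, 1), (0.5, 0), (0.5, 1), (0.4, 0)],
    "advisor_c": [(0.3, 1), (0.8, 0), (0.4, 1), (0.9, 0)],
}

track_record = {
    name: brier_score([f for f, _ in pairs], [o for _, o in pairs])
    for name, pairs in records.items()
}

# Lower is better, so sorting ascending ranks the most accurate advisor first.
for name, score in sorted(track_record.items(), key=lambda item: item[1]):
    print(f"{name}: {score:.4f}")
```

Note that some sources use a variant of the Brier score that sums over both possible outcomes and therefore ranges from 0 to 2, so scores from different sources are not always directly comparable.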

People with knowledge of forecasting would be much better resources for understanding this intervention than whatever I could write here, so I recommend you check out the resources below.

Resources:

- The Good Judgment Project (GJP)

- Tetlock, P. and Gardner, D., 2015. Superforecasting. New York: Broadway Books. [LINK]

- Forecasting EA Forum tag

- Mandel, D. R., & Irwin, D. (2021). Tracking accuracy of strategic intelligence forecasts: Findings from a long-term Canadian study. Futures & Foresight Science, 3(3-4), e98. - (I have not read this paper, but it might give some idea of how to track forecasting accuracy).

^ Using post-analytic techniques

Post-analytic techniques are “statistical methods that intelligence organizations could use to improve probability judgments after analysts had provided judgments” (Mandel et al., 2018, p. 12). The paper cited focused on the intelligence community (IC), but post-analytic techniques could be used just as well by any institution that records probability judgments (see the intervention above). This paper proposes two promising post-analytic techniques: coherentization and aggregation.

Coherentization means correcting probability judgments so that they better conform to the axioms of probability theory (ibid.). For example: if an analyst claims that there’s a .5 probability of war and a .6 probability of peace (silly example, but go with it), and those are the only two possibilities, then coherentization could mean adjusting the probabilities to .4545 and .5455 (to keep the relative likelihoods the same but make the two add up to 1[10][11]). There are different methods of coherentization, including normalization and CAP-based methods.
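The war/peace example corresponds to the simplest of these methods, normalization: divide each judgment by the sum of all judgments. Here is a minimal Python sketch, assuming the outcomes are mutually exclusive and exhaustive; the function name is my own, and CAP-based methods are not shown.

```python
def normalize(probabilities):
    """Rescale judgments over exclusive, exhaustive outcomes so they sum to 1."""
    total = sum(probabilities)
    if total <= 0:
        raise ValueError("at least one probability must be positive")
    return [p / total for p in probabilities]


# The analyst's incoherent judgments: P(war) = .5 and P(peace) = .6 (sum = 1.1).
war, peace = normalize([0.5, 0.6])
print(round(war, 4), round(peace, 4))
```

Because both numbers are divided by the same total, the ratio between them (5 to 6) is unchanged, which is what “keeping the relative likelihoods the same” means here.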

According to Mandel et al. (2018), Karvetski, Olson, Mandel et al. (2013) have shown that coherentization methods can significantly improve judgment accuracy.

Aggregation in this context means aggregating the probability judgments of different people. Mandel et al. (2018) write that “substantial benefits to accuracy were achieved by taking the arithmetic average of as few as three analysts” (p. 13), and that giving different weights (coherence-based weights[12]) to participants’ judgments didn’t yield higher group accuracy, although others have found that it does (ibid.).

Recalibration and aggregation methods substantially improved accuracy: mean absolute error decreased 61% after coherentizing and aggregating judgments in Mandel et al. (2018).

Another example of a post-analytic technique, not discussed in Mandel et al. (2018), is the extremization of aggregated probability judgments (Tetlock & Gardner, 2016). Extremizing probability judgments means moving them closer to the extremes (that is, farther from 0.5). So, if the original prediction is that there is a .7 probability that you will upvote this post, extremizing it might mean increasing the probability to .8 (thank you very much), and if it was .3, decreasing it to .2, or .27, etc.

See the following footnote for an explanation as to why extremizing aggregations of probability judgments appears to increase accuracy.[13]
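Aggregation and extremization can be sketched together in Python. The aggregation step below is the plain arithmetic average mentioned above. The extremizing transform, p^a / (p^a + (1 - p)^a), is one form that appears in the forecasting literature, but it is my choice for illustration and not necessarily the one used by the authors cited here; the exponent value is likewise an assumption.

```python
def aggregate(probabilities):
    """Unweighted arithmetic average of several analysts' probability judgments."""
    return sum(probabilities) / len(probabilities)


def extremize(p, a=1.5):
    """Push a probability away from 0.5 (for a > 1); a = 1 leaves it unchanged.

    The exponent `a` is a tuning parameter; 1.5 is an arbitrary illustrative value.
    """
    return p ** a / (p ** a + (1 - p) ** a)


# Three hypothetical analysts judging the same event.
judgments = [0.6, 0.7, 0.8]
pooled = aggregate(judgments)
print(round(pooled, 3), round(extremize(pooled), 3))
```

The transform is symmetric around 0.5: a pooled judgment above 0.5 moves up, one below 0.5 moves down by the mirrored amount, and exactly 0.5 stays put.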

Examples: institutionalizing the use of the post-analytic techniques mentioned above.

Resources:

- Boosting intelligence analysts’ judgment accuracy: What works, what fails? (Mandel, Karvetski, Dhami, 2018) [LINK] - (I have not seen the term “post-analytic techniques” being used a lot, so I recommend that you start with this paper and make your way to its references section.)

- Tetlock, P. and Gardner, D., 2016. Superforecasting. New York: Broadway Books. [LINK]

^ Creating and funding evidence clearinghouses for IIDM

An evidence clearinghouse is an organization which systematically analyzes the existing evidence in [X area] and summarizes, lists, or otherwise provides guidance as to what the evidence suggests is true (or, in the case of interventions, “what works”).

When it comes to improving the research-policy interface, or more broadly the science-application interface, evidence clearinghouses seem to me like a likely effective (and mostly missing) component to facilitate evidence uptake and evidence-informed decision-making.

The Effective Institutions Project’s scoping survey revealed that participants believed that the highest-priority goal for IIDM work should be to “synthesize existing evidence on ‘what works’ in IIDM across academic fields.” This is broadly the goal that an IIDM evidence clearinghouse would want to achieve, and an important goal for those of us who believe that improving institutions and institutional decision-making could be a great way to do good and improve the world. I am listing this “intervention” as a priority/promising one (^) because I think this is the direction that IIDM should aim to go in. However, as I will mention in a follow-up post about my thoughts on IIDM, linked here once posted [REF], I believe that we are far from being able to make summaries of “what works” in IIDM, and that many things probably need to be accomplished in the field before that is a realistic goal for researchers.

For a related intervention, see “establishing rapid research services: evidence hotlines and helpdesks” in this post. A more ambitious project could combine interventions, for example, by creating a research clearinghouse with a rapid research service division for timely advice.

Resources:

- The best resource I found so far is this example of an evidence clearinghouse for education interventions: the What Works Clearinghouse (WWC). I highly recommend that you spend 5-10 minutes exploring their website to get a better sense of what an IIDM evidence clearinghouse could be like.

- Related to the resource above, there is this PDF “guide to evidence-based clearinghouses” [PDF], which provides a summary and important resources for many more such evidence clearinghouse projects within the education sector.

- Clayton, V. et al. (2021). Refining improving institutional decision-making as a cause area: results from a scoping survey. Effective Altruism Forum. Available at: [LINK]

Questions: This might be an ignorant question, but the field of education also seems to have an immense literature with numerous interventions to go over. What has made it possible for the WWC to build a “what works?” evidence clearinghouse, when the same seems (from where I stand) so far off for IIDM? Two possible answers could be that it’s easier to run RCTs or studies in general within schools than, for example, with policymakers/politicians, and that “education” has been a unified field of inquiry for much longer than IIDM has (even though branches of IIDM have existed for a long time independently). It is also likely that I am missing something important here, and I just haven’t dedicated enough time to think about this question.

^ Providing “Superforecasting” training to decision-makers

Most decision-making environments include some degree of uncertainty. Accurate appraisals of this uncertainty should, on average, lead to better decisions. “Superforecasting” (see Tetlock & Gardner, 2015) has proven to be a comparatively effective way of making predictions about the future.[14] If you’re not at all familiar with forecasting, you might want to start here.

Training key decision-makers and their advisors within institutions in forecasting techniques would very likely improve their institution’s overall ability to make predictions.

Here is the link to an online course provided by the GJP (Good Judgment Project). I have not taken the course, but looking at the syllabus makes me think that although this is a great start, there is probably a lot of room for adding important content.

I am out of my depth when it comes to forecasting, but here are some things that an alternative (or expanded) training program might include (taking all ideas from the book “Superforecasting: The art and science of prediction”):

- Trying to make people adopt a growth mindset, because commitment to self-improvement is the strongest predictor of performance in forecasting (Tetlock & Gardner, 2016; p. 20). “Perpetual beta, the degree to which one is committed to belief updating and self-improvement” was the strongest predictor of forecasting ability, and in fact three times as strong as the next-best predictor: intelligence (ibid., p. 144).

- Training on Fermi estimations to develop a better sense of how to break down complex problems (see ibid., pp. 87-89).

- Including some classes on probability theory, covering the axioms and, most importantly, Bayes’ theorem (ibid., p. 170).

- Training on common biases and heuristics.

- Including forecasting exercises with clear and timely feedback. Especially exercises relevant to the target audiences’ actual problems.

- Training on taking inside and outside views.

- Training on considering alternative hypotheses and alternative causal mechanisms.

- Training on communicating degrees of doubt and uncertainty clearly and in a nuanced way.

- Training to maximize the benefits from group interactions: “perspective-taking (understanding the arguments of the other side so well you can reproduce them to their satisfaction), precision questioning (helping others to clarify their arguments so they are not misunderstood), and constructive confrontation (learning to disagree without being disagreeable)” (Tetlock & Gardner, 2016; p. 211 PDF version).

- Calibration training. I considered making this an entire intervention in its own right, but decided against it. Calibration training seeks to provide you with enough practice, feedback, and advice to help you become more “well-calibrated.”[15] I have not extensively looked for the best tool for calibration training, but this tool by ClearerThinking could be a good start.

Hiring-side interventions:

- Using active open-mindedness (AOM) questionnaires as part of the hiring process, since successful forecasters score, on average, highly on these tests (ibid., p. 98). AOM positively correlates with accuracy (p. 207).

- I don’t know how the authors tested the degree to which forecasters have a “perpetual beta” mindset, but that could also be used as part of a job application, since its predictive validity seems to be high.

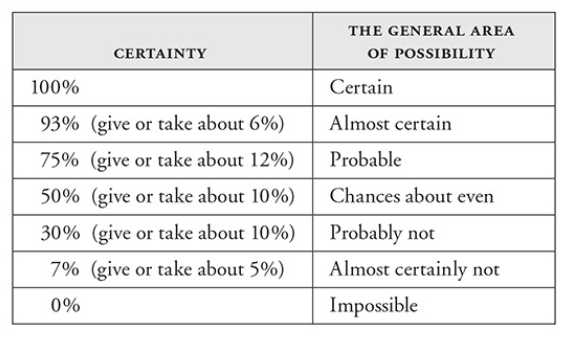

Examples: an organization/institution could collaborate with the GJP to provide a superforecasting course for its key decision-makers and their advisors. It could also adopt a standardized numeric-to-verbal probability mapping scale (For examples, see the figures from Tetlock & Gardner, p. 45; p. 112). This is also relevant for the next intervention (improving communication of uncertainty).

Resources:

- Tetlock, P. and Gardner, D., 2016. Superforecasting: The art and science of prediction. New York: Broadway Books. [LINK]. - (Note: I originally read the paperback version, and then gave it away. So when looking back for quotes I sometimes used a PDF version, which might have mixed up the page numbers.)

- “Superforecasting in a nutshell” by Luke Muehlhauser

- “Forecasting” topic on the EA Forum

Questions: which biases and heuristics are most important to know for decision-makers? What are other publicly available forecasting courses that institutions could use now to train their decision-makers?

^ Improving communication of uncertainty

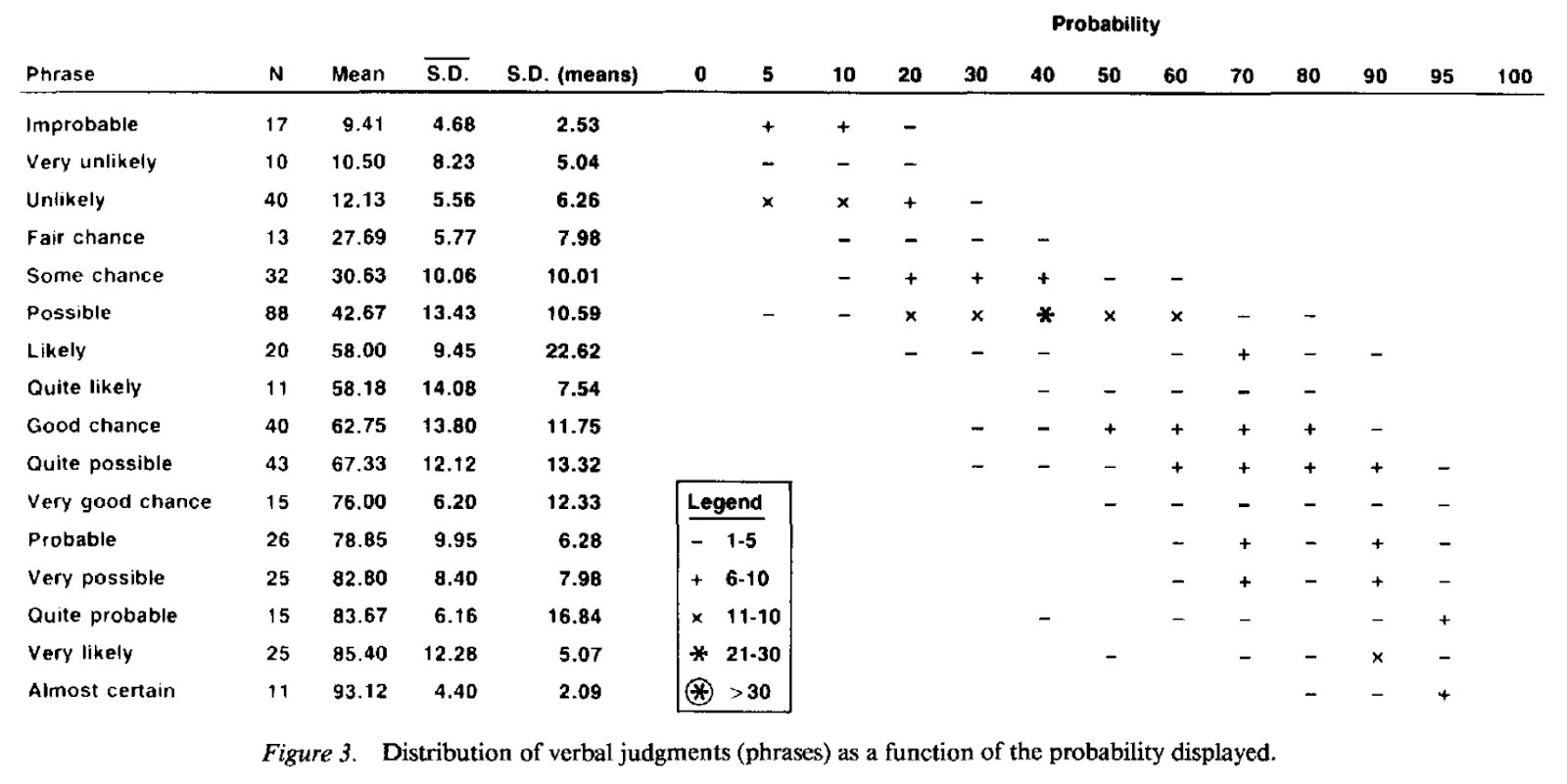

People appear to mean very different things when using words such as “possibly,” “likely,” “probable,” etc. Tetlock & Gardner (2016) mention the case of people describing “a serious possibility” as odds anywhere between 80 to 20 and 20 to 80. Budescu et al. (1988) write that individuals have “substantial working vocabularies of uncertainty, with nevertheless relatively little overlap among individuals” (p. 290), and show that “all comparisons favored the numeric over the verbal response mode” (p. 290). In Budescu et al. (1988), one can see how verbal probabilities can be interpreted in significantly different ways by different people (see the table below):[16]

I chose this as a promising intervention because of the apparent ease of implementation, although I might be overestimating this ease due to being friends with many other EAs for whom numerical descriptions of uncertainty are commonsensical. Dhami et al. (2015) propose three decision-science approaches to improving intelligence analysis, which could be taken as institutional interventions:

- “Use methods that translate analysts’ terms into ones that have equivalent meaning for decision makers” (p. 755). For a discussion on developing a general Linguistic Probability Translator (LiProT), see Karelitz & Budescu (2004).

- “Remind decision makers of numerical interpretations of verbal terms in the text of an analytic report” (Dhami et al., p. 755). One could adopt a standard 7-step verbal probability scale with associated numerical probabilities, and set up mechanisms (like reminders) to make sure that the scale is understood and used internally, and that it is explicitly stated in communications between the institution and third parties. One possible criticism by Tetlock & Gardner (2016) is that a 5- or 7-step probability scale does not allow for “sufficient shades of maybe.” Either way, this seems like an improvement over just stating verbal probabilities.

- “Adopt numerical probabilities in contexts where precision matters, such as forecasting.” (Dhami et al., p. 755).

For criticisms and counter-arguments on adopting numerical probabilities for communicating uncertainty, see Tetlock & Gardner (2016).
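To make the idea of a shared verbal probability scale more concrete, here is a minimal Python sketch of what such a mapping could look like. The seven terms and their numeric boundaries are hypothetical placeholders that I made up for illustration; they are not taken from Dhami et al., Tetlock & Gardner, or any official standard, and the function names (`to_verbal`, `to_range`, `annotate`) are likewise assumptions. An institution would need to choose and validate its own scale.

```python
from typing import NamedTuple, Tuple


class Step(NamedTuple):
    term: str
    low: float   # lower bound of the step (inclusive)
    high: float  # upper bound of the step (exclusive, except for the last step)


# Hypothetical 7-step scale: terms and boundaries are illustrative only.
SCALE = [
    Step("remote chance", 0.00, 0.05),
    Step("very unlikely", 0.05, 0.20),
    Step("unlikely", 0.20, 0.40),
    Step("roughly even chance", 0.40, 0.60),
    Step("likely", 0.60, 0.80),
    Step("very likely", 0.80, 0.95),
    Step("almost certain", 0.95, 1.00),
]


def to_verbal(p: float) -> str:
    """Return the scale term whose range contains probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    for step in SCALE:
        if p < step.high:
            return step.term
    return SCALE[-1].term  # p == 1.0 falls into the last step


def to_range(term: str) -> Tuple[float, float]:
    """Return the numeric range that a scale term stands for."""
    key = term.strip().lower()
    for step in SCALE:
        if step.term == key:
            return step.low, step.high
    raise KeyError(f"'{term}' is not on the scale")


def annotate(term: str) -> str:
    """Attach the numeric range to a verbal term, as a reminder for readers."""
    low, high = to_range(term)
    return f"{term} ({low:.0%}-{high:.0%})"


print(annotate("likely"))
```

The `annotate` helper corresponds to the second approach above (reminding readers of the numerical interpretation inside the text of a report), while `to_verbal` shows how a numerical estimate would be translated when a verbal term is required. Note that a scale like this still loses precision within each step, which is the “shades of maybe” criticism mentioned above.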

Resources:

- Budescu, D. V., Weinberg, S., & Wallsten, T. S. (1988). Decisions based on numerically and verbally expressed uncertainties. Journal of Experimental Psychology: Human Perception and Performance, 14(2), 281. [LINK]

- Dhami, M., Mandel, D., Mellers, B., & Tetlock, P. (2015). Improving Intelligence Analysis With Decision Science. Perspectives On Psychological Science, 10(6), 753-757. doi: 10.1177/1745691615598511 [LINK]. - (This paper provides an incredibly useful review on the decision research on communicating uncertainty, which is a great place to start if you want to dive deeper into this intervention.)

- Tetlock, P. and Gardner, D., 2016. Superforecasting: The art and science of prediction. New York: Broadway Books. [LINK]

- Karelitz, T. M., & Budescu, D. V. (2004). You Say "Probable" and I Say "Likely": Improving Interpersonal Communication With Verbal Probability Phrases. Journal of Experimental Psychology: Applied, 10(1), 25–41. https://doi.org/10.1037/1076-898X.10.1.25 - (A more technical paper that develops the idea of finding the best methods to translate verbal probabilities into numerical ones.)

^ Rationality training for individuals

Evidence and reason are two pillars of good decision-making. Multiple interventions on this list focus on the use of evidence, and many involve aspects of rationality (calibration training, decision observers, etc.), but rationality training in its own right could also be a promising intervention.

This intervention would involve incentivising/funding attendance at rationality training workshops (and this is also a call for more such workshops to be created, or for existing ones to be held more regularly).

Some topics that come to mind, which rationality training programs might/could include, are: System 1/System 2 thinking, cognitive biases and mental heuristics, basic formal logic, basic statistical reasoning, informal fallacies (see a list here),[17] detection of misinformation, probabilistic calibration training, updating & Bayes’ theorem, mindset (Scout Mindset), reasoning transparency, deduction and induction, generalizations, causal reasoning, and expected value theory. That list is by no means exhaustive. My hope is that the topics above plus the resources below are a good starting point for anyone interested in learning more.

Resources:

- The Center for Applied Rationality (CFAR) has developed intensive workshops for this purpose.

- CFAR has also made its handbook publicly available.

- Also look into ClearerThinking.org, especially the “tools” section.

- David Manley’s “Reason Better: An Interdisciplinary Guide to Critical Reasoning” is a great, practical textbook for learning better reasoning practices. A course I took at Brown (PHIL 100: Critical Reasoning) used this textbook almost exclusively as the reading material, and I consider it the most useful course that I’ve ever taken.

- Kahneman, D. (2011). Thinking, fast and slow. Macmillan. [LINK]

- The Decision Lab’s list of cognitive biases and heuristics.

- LessWrong (LW) is “a community blog dedicated to refining the art of rationality.” I have never really explored LW, but it seems like a resource worth exploring if you want to do research in rationality training, or develop a training program yourself. Some specific things I found after very little digging: the “Training Regime” sequence and the “Hammertime” sequence. (Warning: I haven’t read them; they might be wholly off topic.)

Questions: my biggest unknown when it comes to this is whether there is good evidence that rationality training improves decision-making ability, and whether this improvement holds when decision-makers are placed in an institutional context.

Methodology and disclaimers

- My research was highly exploratory and unstructured. This is neither a systematic review nor a complete list. I started reading what the EA community had produced on IIDM and then moved outward from there. This might have biased my sample of interventions by having a higher proportion of interventions endorsed by other EAs.

- I tried to minimize type II errors (incorrectly ignoring a good intervention) during my research, which means that my bar for including an intervention on this list was low. This also implies that there might be many type I errors (incorrectly including a bad intervention) throughout the list.

- My sources: You can see a list of the papers and articles I’ve read under bibliography and IIDM papers. I have also conducted interviews with nine experts/people with significant experience in related fields,[18] and received written input and feedback from several others.

- I adopted an untargeted approach to IIDM. This means that I have researched general IIDM interventions, without considering them within the context of one particular institution. The opposite is a targeted approach: seeking interventions to improve a specific institution’s decision-making capabilities. This distinction is taken from this post.[19]

- This is the result of roughly six weeks of full-time work at CHERI’s Summer Research Program, plus extra time writing this post after that. This left me with very little time to research any given intervention. I therefore do not have in-depth knowledge of any of them, so it is quite possible that I have mischaracterized or miscategorized some interventions. Please add your feedback in the comments, and add important interventions I may have missed to the “Interventions shared” document under Appendix II.

- My categorization borrows from the Effective Institutions Project’s (EIP) scoping survey and is far from perfect. More on this under Appendix I.

Next steps

There are several directions you could take as someone researching IIDM:

- If working in IIDM makes sense for you and you don’t feel like you understand what is meant by IIDM, I recommend you start with the following articles and resources. Then, you can go on to read some of the things under Bibliography and IIDM papers that catch your eye.

- This post was meant to give you a list of possible interventions to research in depth. If you are motivated by the vision of where IIDM should go explained under Motivation, you could start by reviewing the evidence behind one or more of the interventions on this list, and add to our collective knowledge by finding out “what works.”

- You could also work on creating useful frameworks for others working in IIDM. How should we categorize interventions? How should we categorize different institutions? What, precisely, do we mean by “intervention,” “institution,” and “decision-making”?

- You could work at an IIDM-focused organization like the Effective Institutions Project, fp21, or the Legal Priorities Project.

- As you could probably tell, my project was hindered by time constraints, so I do not consider this research finished. You could work on adding more potentially good interventions to this list by contributing to the “interventions shared” document under Appendix II. I have added a short list of resources that I would have gone into in more depth had I had more time for it. So if you would like to contribute more interventions to this list, these are the places I would look into first.

Short list of resources and topics that I would investigate next:

- Anything concerning hiring practices

- Anything concerning decreasing corruption in institutional contexts

- Research on implementation science

- Heavily technology-based interventions that I did not go into for lack of familiarity and expertise (e.g., AI-based interventions)

- The Behavioral Insights Team Behavioral Government Report

- Fp21’s “Less Art, More Science” report

- Expert Political Judgment (Tetlock)

- Noise (Kahneman)

- See latest contributions to the Google Doc shared on the IIDM Slack (under Appendix II)

- Longtermist Institutional Reform (John and MacAskill)

Of course, you should think about the expected impact of you working on IIDM as it compares to you working on other high-priority areas. My intuition is that, for someone with equally good fit across cause areas, working on areas such as AI alignment, biosecurity, and nuclear warfare would probably be more impactful. I will go more in-depth into cause prioritization and where to go from here in a future post.

Acknowledgments

I wrote this post during the Summer Research Program of the Swiss Existential Risk Initiative (CHERI). I am grateful to CHERI’s organizers for their support. I am especially grateful to my mentor for the project, Bruce Tonn, as well as to Jam Kraprayoon from the Effective Institutions Project for providing valuable advice. Many thanks to Angela Aristizábal, Lizka Vaintrob, Annette Gardner, Laurent Bontoux, Maxime Stauffer, Luis Enrique Urtubey, Adam Kuzee, Rumtin Sepasspour, and Steven Sloman for discussions that helped inform this research project. Finally, thanks to Justis Mills and Mason Zhang for providing valuable feedback on drafts of this post.

Citation

To cite this post, please use the following or the equivalent in another citation format:

Burga Montoya, F. T. (2022, November 23). List of interventions for improving institutional decision-making. EA Forum. Retrieved [INSERT DATE], from https://forum.effectivealtruism.org/posts/6miasxvmrGZChosBc/list-of-interventions-for-improving-institutional-decision

Bibliography and IIDM papers

You can find this same list on a separate Google Document here. As mentioned before, although this also serves the function of an imperfect bibliography, it only includes papers/articles that I’ve taken notes on and that I deemed somewhat relevant to IIDM — not everything and anything that I cited somewhere here. I estimate this list has roughly 30% of everything I’ve read for IIDM since starting this project, but >85% of the most important things I’ve read since then. It is arranged in rough chronological order, so that the first things on the list are the first resources I used.

Resources that I took notes on and got interventions from

- Whittlestone, J. (2017). Improving Institutional Decision-Making. 80000 Hours. Accessed 21 August 2022 from [LINK].

- EA Norway, 2022. Cause Area Guide: Institutional Decision-making [SHARED]. [online] Google Docs. Available at: <https://docs.google.com/document/d/1dICVV01a5TyqQeibz3iME5AsFtGx3Xx3U7SHykZXzws/edit> [Accessed 21 August 2022]. [LINK]

- Clayton, V. et al. (2021). Refining improving institutional decision-making as a cause area: results from a scoping survey. Effective Altruism Forum. Available at: [LINK]

- Moss, I. D. et al. (2020). Improving institutional decision-making: a new working group. Effective Altruism Forum. Available at: [LINK]

- Moss, I. D. (2021). Improving Institutional Decision-Making: Which Institutions? (A Framework) Effective Altruism Forum. Available at: [LINK]

- Moss, I. D. (2022). A Landscape Analysis of Institutional Involvement Opportunities. Effective Altruism Forum. Available at: [LINK]

- Disentangling “Improving Institutional Decision-Making” (EAF Post - Lizka) [LINK]

- Whittlestone, J., (2022). Improving institutional decision-making. [online] Youtube.com. Available at: <https://www.youtube.com/watch?v=TEk05tZVIKo> [Accessed 21 August 2022]. [LINK]

- The Effective Institutions Project (EIP)’s Theory of Change [LINK]

- Tetlock, P. and Gardner, D., 2016. Superforecasting. New York: Broadway Books. [LINK]

- Maskin, E. (2019). Introduction to mechanism design and implementation. Transnational Corporations Review, 11(1), 1-6. doi: 10.1080/19186444.2019.1591087

- Kahneman, D., Sibony, O. and Sunstein, C., 2021. Noise. New York: Little, Brown Spark. [LINK]

- Artner et al. (2016). Assessing the value of SATs in the US intelligence community. RAND Corporation. DOI: https://doi.org/10.7249/RR1408 [LINK]

- Dhami, M., Mandel, D., Mellers, B., & Tetlock, P. (2015). Improving Intelligence Analysis With Decision Science. Perspectives On Psychological Science, 10(6), 753-757. doi: 10.1177/1745691615598511 [LINK]

- Boosting intelligence analysts’ judgment accuracy: What works, what fails? (Mandel, Karvetski, Dhami, 2018) [LINK]

- Friedman, J., Baker, J., Mellers, B., Tetlock, P., & Zeckhauser, R. (2018). The Value of Precision in Probability Assessment: Evidence from a Large-Scale Geopolitical Forecasting Tournament. International Studies Quarterly. doi: 10.1093/isq/sqx078

- Milkman, K., Chugh, D., & Bazerman, M. (2009). How Can Decision Making Be Improved?. Perspectives On Psychological Science, 4(4), 379-383. doi: 10.1111/j.1745-6924.2009.01142.x

- Banuri, Sheheryar; Dercon, Stefan; Gauri, Varun. 2017. Biased Policy Professionals. Policy Research Working Paper;No. 8113. World Bank, Washington, DC. © World Bank. https://openknowledge.worldbank.org/handle/10986/27611 License: CC BY 3.0 IGO. [LINK]

- Sunstein, C. 2001. Probability Neglect: Emotions, Worst-Cases, and Law. DOI:10.2139/ssrn.292149 [LINK]