This is a series of posts for the Bayesian in a hurry. The Bayesian who wants to put probabilities to quantities, but doesn't have the time or inclination to collect data, write code, or even use a calculator.

In these posts I'll give a template for doing probabilistic reasoning in your head. The goal is to provide heuristics which are memorable and easy to calculate, while being approximately correct according to standard statistics. If approximate correctness isn't possible, then I'll aim for intuitively sensible at least.

An example of when these techniques may come in handy is when you want to quickly generate probabilistic predictions for a forecasting tournament.

In this first post, I'll cover cases where you have a lower bound for some positive value. As we'll see, this covers a lot of real-life situations. In future posts I'll use this as a foundation for more general settings.

Readers who struggle with the mathematical details may skip to the TL;DRs.

The Delta T Argument

We'll use what John Gott called the "delta t argument" in his famous Doomsday Argument (DA). It goes like this:

- Suppose humanity lasts from time to time .

- Let be the present time, and be the proportion of humanity's history which has so far passed.

- is drawn from a uniform distribution between zero to one. That is, the present is a totally random moment in the history of humanity.

- The probability that is less than some value is

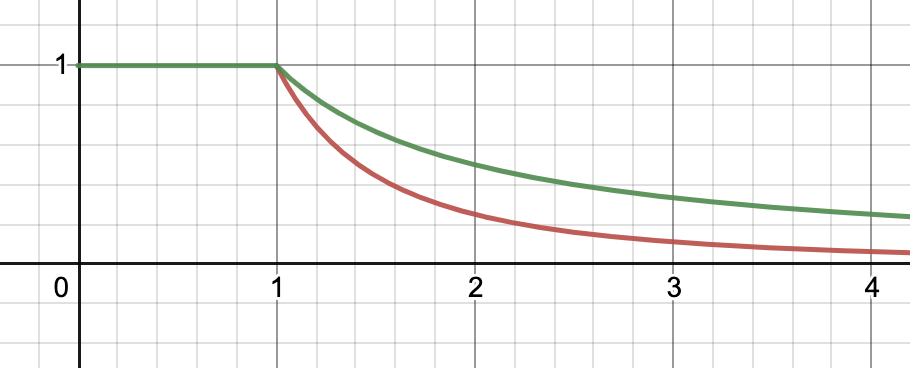

5. Let . Then humanity's survival function (the probability of humanity suviving past time ) is hyperbolic:

6. And the density function for human extinction is given by

These distributions are illustrated below:

TL;DR The probability of a process surviving up to a certain time is equal to the proportion of that time which has already been survived. For example, the probability of a house not catching fire after 40 years, given it has so far lasted 10 years without catching fire, is 10/40 = 25%.
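As a quick sanity check on the derivation above, here is a minimal Monte Carlo sketch. It assumes exactly what the argument assumes: the present is a uniformly random fraction $f$ of the total lifespan, so the total lifespan is $t/f$. The function names and the sample size are illustrative choices, not part of the argument.

```python
import random


def survival_probability_mc(t: float, s: float, n_samples: int = 200_000, seed: int = 0) -> float:
    """Estimate P(T > s) when the present time t is a uniformly random
    fraction f of the total lifespan T, i.e. T = t / f with f ~ Uniform(0, 1)."""
    rng = random.Random(seed)
    survived = 0
    for _ in range(n_samples):
        f = 1.0 - rng.random()  # uniform on (0, 1], so we never divide by zero
        total_lifespan = t / f
        if total_lifespan > s:
            survived += 1
    return survived / n_samples


def survival_probability_exact(t: float, s: float) -> float:
    """Hyperbolic survival function P(T > s) = t / s, valid for s >= t."""
    return t / s


# House example: 10 fire-free years so far, asking about year 40.
print(survival_probability_mc(10, 40))     # close to 0.25
print(survival_probability_exact(10, 40))  # 0.25
```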

Lindy's Law

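Lindy's Law states that a process which has survived up to time $t$ will, on average, survive a further $t$. This is true for the delta t distribution, as long as we interpret "average" to mean "median":

$$P(T > 2t) = \frac{t}{2t} = \frac{1}{2}.$$

However, the mean lifespan of humanity is undefined, because the integral for it diverges:

$$\mathbb{E}[T] = \int_t^\infty s \cdot \frac{t}{s^2}\, ds = t \int_t^\infty \frac{ds}{s} = \infty.$$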

On a related note, because our density function decreases with the inverse square of time, it is "fat tailed", meaning that it dies off sub-exponentially. It belongs in Nassim Taleb's Extremistan.

TL;DR A process which has survived $t$ amount of time will, with 50% probability, survive another $t$ amount of time. For example, a house which has lasted 10 years without burning down will, with 50% confidence, survive another 10 years without burning down.
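Both the median and the mean claims can be checked in a few lines. This sketch inverts the survival function $P(T > s) = t/s$ to get the median, and evaluates the mean integral only up to an arbitrary cutoff (the `cap` parameter is an illustrative device, not part of the argument). The partial mean $t\ln(\text{cap}/t)$ keeps growing as the cap rises, which is why no finite mean exists.

```python
import math


def median_total_lifespan(t: float) -> float:
    """Solve t / s = 1/2 for s: the median total lifespan is 2t,
    so the median *remaining* lifespan is t (Lindy's Law)."""
    return t / 0.5


def partial_mean(t: float, cap: float) -> float:
    """Integral of s * p(s) from t to cap, with p(s) = t / s**2.
    Equals t * ln(cap / t), which grows without bound as cap increases."""
    return t * math.log(cap / t)


t = 10.0
print(median_total_lifespan(t))  # 20.0
for cap in (1e2, 1e4, 1e8, 1e16):
    # The partial mean grows like the logarithm of the cap, so it never settles.
    print(f"cap={cap:.0e}  partial mean={partial_mean(t, cap):.1f}")
```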

50% Confidence Interval

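We can thus derive a 50% confidence interval by finding the times $s$ past which humanity has a 25% chance of surviving, and a 75% chance of surviving. The first is obtained from

$$\frac{t}{s} = \frac{1}{4},$$

which, when we solve for $s$, gives

$$s = 4t.$$

The second is obtained from

$$\frac{t}{s} = \frac{3}{4},$$

which gives

$$s = \frac{4}{3}t = t + \frac{t}{3}.$$

So with 50% confidence we have

$$t + \frac{t}{3} < T < 4t,$$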

which we can remember with the handy mnemonic:

Adding a third is worth a lower quartile bird.

Times-ing by four gets your last quartile in the door.

In the case of human extinction: Homo sapiens has so far survived some 200,000 years. So with 50% confidence we will survive at least another 67,000 years or so (a third of 200,000), and at most another 600,000 years (800,000 years in total).

TL;DR A process which has survived $t$ amount of time will, with 50% confidence, survive at least another $t/3$ and at most another $3t$ amount of time.
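The quartile calculation is short enough to write down directly. A minimal sketch, with hypothetical helper names, returning the 50% interval both for the total lifespan and for the remaining lifespan:

```python
def fifty_percent_interval(t: float) -> tuple[float, float]:
    """Quartiles of the total lifespan T under P(T > s) = t / s.

    Lower quartile: t / s = 3/4  ->  s = 4t/3 = t + t/3  ("adding a third")
    Upper quartile: t / s = 1/4  ->  s = 4t              ("times-ing by four")
    """
    return t + t / 3, 4 * t


def fifty_percent_remaining(t: float) -> tuple[float, float]:
    """Same interval expressed as *remaining* lifespan: (t/3, 3t)."""
    low, high = fifty_percent_interval(t)
    return low - t, high - t


# Homo sapiens, roughly 200,000 years so far.
low, high = fifty_percent_remaining(200_000)
print(f"{low:,.0f} to {high:,.0f} further years")  # 66,667 to 600,000 further years
```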

90% Confidence Interval

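By similar reasoning, setting $t/s$ equal to 95% and to 5%, we can say with 90% confidence that

$$\frac{20}{19}t < T < 20t.$$

But it's hard to multiply by 20/19. So instead we'll approximate it with

$$t + \frac{t}{20} < T < 20t.$$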

Thus, with about 90% confidence, the remaining lifespan is more than 1/20th of its current lifespan, but less than 20x its current lifespan.

Similarly, we can say that with about 99% confidence the remaining lifespan is more than 1/200th of the current lifespan, but less than 200 times the current lifespan. And so on, with however many nines you like.

So with 90% confidence, humanity will survive at least another 10,000 years, and at most another 4,000,000 years, and with 99% confidence between 1,000 and 40,000,000 further years.

TL;DR A process which has survived $t$ amount of time will, with 90% confidence, survive at least another $t/20$ and at most another $20t$ amount of time.
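The 50%, 90% and 99% intervals are all special cases of one formula: under the same survival function, a central interval at confidence $c$ runs from $2t/(1+c)$ to $2t/(1-c)$. The sketch below (function names are illustrative) compares the exact remaining-lifespan bounds with the "divide or multiply by $k$" shortcut, taking $k = 2/(1-c)$, an interpretation that matches the 20 and 200 used in the text. The shortcut is only close when $c$ is near 1.

```python
def central_interval(t: float, confidence: float) -> tuple[float, float]:
    """Central interval for the total lifespan T under P(T > s) = t / s.

    The interval runs from where the survival probability is (1 + c)/2
    to where it is (1 - c)/2; since s = t / p, that is
    (2t / (1 + c), 2t / (1 - c)).
    """
    return 2 * t / (1 + confidence), 2 * t / (1 - confidence)


def rule_of_thumb_remaining(t: float, confidence: float) -> tuple[float, float]:
    """Shortcut for the remaining lifespan: between t/k and k*t,
    with k = 2 / (1 - c), i.e. 20 for 90% and 200 for 99%."""
    k = 2 / (1 - confidence)
    return t / k, k * t


t = 200_000  # years of Homo sapiens so far
for c in (0.9, 0.99):
    low, high = central_interval(t, c)
    approx_low, approx_high = rule_of_thumb_remaining(t, c)
    print(
        f"{c:.0%}: exact remaining {low - t:,.0f} to {high - t:,.0f}, "
        f"rule of thumb {approx_low:,.0f} to {approx_high:,.0f}"
    )
```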

The Validity of the Doomsday Argument

A lot of people think the DA is wrong. Should this concern us?

I think in the specific case of predicting humanity's survival: yes, but in general: no.

When you apply the delta t argument to humanity's survival you run into all kinds of problems to do with observer-selection effects, disagreements about priors, disagreement about posteriors, and disagreement about units. For an entertaining discussion of some of the DA's problems, I recommend Nick Bostrom's The Doomsday Argument, Adam & Eve, UN⁺⁺, and Quantum Joe.

But when you apply the delta t argument to an everyday affair, such as the time until your roof starts leaking, then you needn't worry about most (or all) of these problems. There are plenty of situations where "the present moment is a random sample from the lifetime of this process" is a perfectly reasonable characterisation.

Let's look at some everyday examples.

Examples

Example 1: Will a new webcomic be released this year?

Your favourite webcomic hasn't released a new installment in 6 months. What is the probability of a new installment this calendar year (i.e., within the next 9 months)?

Answer: The probability of the no-webcomic streak continuing for a further 9 months is

$$\frac{6}{6 + 9} = \frac{6}{15} = 40\%,$$

which is a little over a third. So the probability of there being a new comic is about 60%.
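The webcomic calculation as a one-line function, using exact fractions. `streak_continues` is a hypothetical name; it simply evaluates elapsed / (elapsed + further):

```python
from fractions import Fraction


def streak_continues(elapsed: Fraction, further: Fraction) -> Fraction:
    """Delta t probability that a streak which has lasted `elapsed`
    also lasts `further` more: elapsed / (elapsed + further)."""
    return elapsed / (elapsed + further)


# 6 months without a new installment; 9 months left in the calendar year.
p_no_comic = streak_continues(Fraction(6), Fraction(9))
print(p_no_comic, float(p_no_comic))          # 2/5 0.4
print(1 - p_no_comic, float(1 - p_no_comic))  # 3/5 0.6
```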

Example 2: How high will my balloon chair go?

You have tied 45 helium balloons to a lawnchair and taken flight. You are now at an altitude of 1,000km. How high will you go?

Answer: Our 90% confidence interval gives at least about 1,050 km and at most 20,000 km. Lindy's Law gives a median height of 2,000 km.
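The balloon numbers, assuming (as the example implicitly does) that the current altitude is a uniformly random fraction of the eventual maximum altitude. A minimal sketch with an illustrative function name:

```python
def altitude_forecast(current_km: float) -> dict[str, float]:
    """Treat the current altitude as a uniformly random fraction of the
    eventual maximum altitude H, so P(H > h) = current / h for h >= current."""
    return {
        "median": 2 * current_km,          # current / h = 1/2
        "lower_90": 20 * current_km / 19,  # current / h = 0.95
        "upper_90": 20 * current_km,       # current / h = 0.05
    }


forecast = altitude_forecast(1_000)
for name, value in forecast.items():
    print(f"{name}: {value:,.0f} km")
```

Rounded, the exact lower bound is 1,053 km, which is where the "about 1,050 km" comes from.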

Example 3: Will the sun rise tomorrow?

I have observed the sun rise for the last 30 years. What is the probability that the sun rises tomorrow?

Answer: 3 years is about 1,000 days, so 30 years is about 10,000 days. The probability that the sunrise streak ends on the 10,001st day is

$$1 - \frac{10{,}000}{10{,}001} = \frac{1}{10{,}001},$$

which is approximately 1/10,000 = 0.01%. So the sun will rise with 99.99% confidence.

"Real" answer: Laplace's answer to the sunrise problem was to start with a uniform prior over possible sunrise rates, so that after $n$ consecutive sunrises the probability of no sunrise tomorrow comes out as

$$\frac{1}{n + 2}.$$

This is the "rule of succession", and in our case it also gives something very close to 0.01%. Alternatively, we could use the Jeffreys prior and get

$$\frac{1/2}{n + 1} = \frac{1}{2n + 2},$$

which will be something more like 0.005%.
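A side-by-side comparison of the three sunrise estimates, computed with exact fractions. The function names are illustrative; the formulas are the delta t streak probability, Laplace's rule of succession (uniform Beta(1, 1) prior), and the posterior predictive under the Jeffreys Beta(1/2, 1/2) prior.

```python
from fractions import Fraction


def delta_t_no_sunrise(n: int) -> Fraction:
    """Delta t: chance that an n-day streak ends on day n + 1,
    i.e. 1 - n / (n + 1) = 1 / (n + 1)."""
    return 1 - Fraction(n, n + 1)


def laplace_no_sunrise(n: int) -> Fraction:
    """Rule of succession (uniform Beta(1, 1) prior on the sunrise rate):
    after n sunrises and no failures, P(no sunrise tomorrow) = 1 / (n + 2)."""
    return Fraction(1, n + 2)


def jeffreys_no_sunrise(n: int) -> Fraction:
    """Jeffreys Beta(1/2, 1/2) prior: P(no sunrise tomorrow) = (1/2) / (n + 1)."""
    return Fraction(1, 2) / (n + 1)


n_days = 10_000  # roughly 30 years of sunrises
for name, rule in [("delta t", delta_t_no_sunrise),
                   ("Laplace", laplace_no_sunrise),
                   ("Jeffreys", jeffreys_no_sunrise)]:
    print(f"{name:>8}: {float(rule(n_days)):.4%}")
```

The delta t and Laplace answers agree at about 0.01%, while the U-shaped Jeffreys prior gives roughly half that.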

Example 4: German Tanks

You have an infestation of German tanks in your house. You can tell they're German because they're tan with dark, parallel lines running from their heads to the ends of their wings. You know that the tanks have serial numbers written on them. You inspect the first tank you find and it has serial number $m = 100$. How many tanks are in your house?

Answer: 105-2,000 with 90% confidence. Median is 200.

"Real" answer: If we are doing frequentist statistics, then the minimum-variance unbiased point estimate is $2m - 1$, so 199. The frequentist confidence intervals are obtained from the same formula as the delta t argument, so we again have 105-2,000 with 90% confidence.

The Bayesian story is complicated. If we have an improper uniform prior over $N$, then we get an improper posterior. But if we had inspected two tanks, and the larger serial number was $m = 100$, then we would have a median estimate of about $2(m - 1) = 198$ (the mean is undefined). If our prior on $N$ was a uniform distribution between 1 and an upper bound $U$, then the posterior looks like

$$P(N = n \mid m) = \frac{1/n}{\sum_{j=m}^{U} 1/j} \approx \frac{1}{n \ln(U/m)}, \qquad m \le n \le U,$$

which has approximate mean

$$\mathbb{E}[N \mid m] \approx \frac{U - m}{\ln(U/m)}.$$

So if we have an a priori maximum of $U$ tanks, then the mean will be something like $U/\ln(U/m)$, nearly proportional to a cap we picked arbitrarily. Weird.
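Both the frequentist and the Bayesian claims can be checked numerically. The sketch below assumes serial numbers run from 1 to $N$ with no gaps and that tanks are seen without replacement; all function names, the 1,000 true tanks in the simulation, and the caps of 1,000 to 100,000 are arbitrary illustrations. It simulates the coverage of the $m/0.95$ to $m/0.05$ interval, computes the capped-prior posterior mean exactly alongside its $(U-m)/\ln(U/m)$ approximation, and evaluates the two-tank median under the improper prior.

```python
import math
import random


def frequentist_estimates(m: int) -> dict[str, float]:
    """One observed serial m, serials assumed to run 1..N.
    Unbiased point estimate 2m - 1; 90% interval from m / 0.95 to m / 0.05."""
    return {"mvue": 2 * m - 1, "lower_90": m / 0.95, "upper_90": m / 0.05}


def interval_coverage(true_n: int, trials: int = 100_000, seed: int = 1) -> float:
    """Fraction of simulated single draws whose 90% interval contains true_n."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        m = rng.randint(1, true_n)  # one serial, uniform on 1..true_n
        est = frequentist_estimates(m)
        if est["lower_90"] <= true_n <= est["upper_90"]:
            hits += 1
    return hits / trials


def capped_prior_mean(m: int, upper: int) -> float:
    """Uniform prior on N in 1..upper, one observed serial m.
    Likelihood 1/N for N >= m, so the posterior is proportional to 1/n
    on m..upper; returns the exact posterior mean."""
    ns = range(m, upper + 1)
    normaliser = sum(1 / n for n in ns)
    return sum(n * (1 / n) for n in ns) / normaliser


def capped_prior_mean_approx(m: int, upper: int) -> float:
    """Continuous approximation (upper - m) / ln(upper / m)."""
    return (upper - m) / math.log(upper / m)


def two_tank_median(m: int) -> int:
    """Improper uniform prior, two tanks seen without replacement, largest
    serial m. The posterior is proportional to 1/(n(n-1)) for n >= m, so
    P(N <= n) = 1 - (m - 1)/n and the median is n = 2(m - 1)."""
    return max(m, 2 * (m - 1))


print(frequentist_estimates(100))  # mvue 199; interval about 105 to 2,000
print(interval_coverage(1_000))    # close to 0.90
for upper in (1_000, 10_000, 100_000):
    print(upper, round(capped_prior_mean(100, upper)),
          round(capped_prior_mean_approx(100, upper)))
print(two_tank_median(100))        # 198
```

The capped-prior means climb almost in step with the cap, which is the weirdness the paragraph above points at.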

I don't know what happens if you use other priors, like exponential.

Next time...

In future posts in this series I'll cover situations where you need to estimate a distribution from a single data point.

