I believe AI safety is a big problem for the future, and more people working on it would likely increase the chance that it gets solved, but I think the third component of ITN (neglectedness) might need to be reevaluated.

I mainly formed my base ideas around 2015, when the AI revolution was portrayed as a fight against killer robots. Nowadays, more details are communicated, such as bias problems, optimizing for different-than-human values (ad clicks), and killer drones.

It is possible that AI safety only went from very neglected to somewhat neglected, or that the news I received from my echo chamber was itself biased. In any case, I would like to know more.

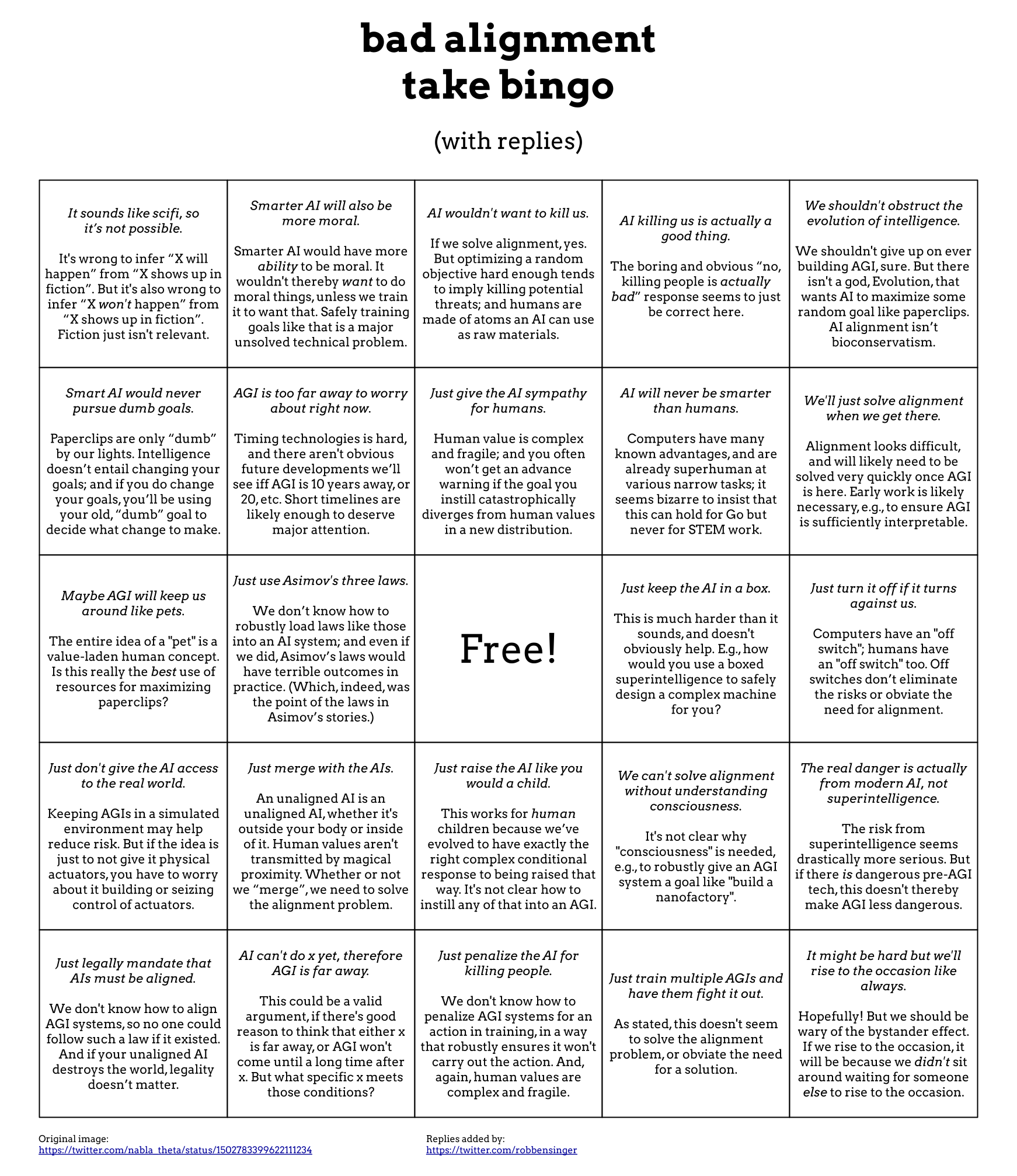

It depends on what you mean by "neglected", since neglect is a spectrum. It's a lot less neglected than it was in the past, but it's still neglected compared to, say, cancer research or climate change. In terms of public opinion, the average person probably has little understanding of AI safety. I've encountered plenty of people saying things like "AI will never be a threat because AI can only do what it's programmed to do" and variants thereof.

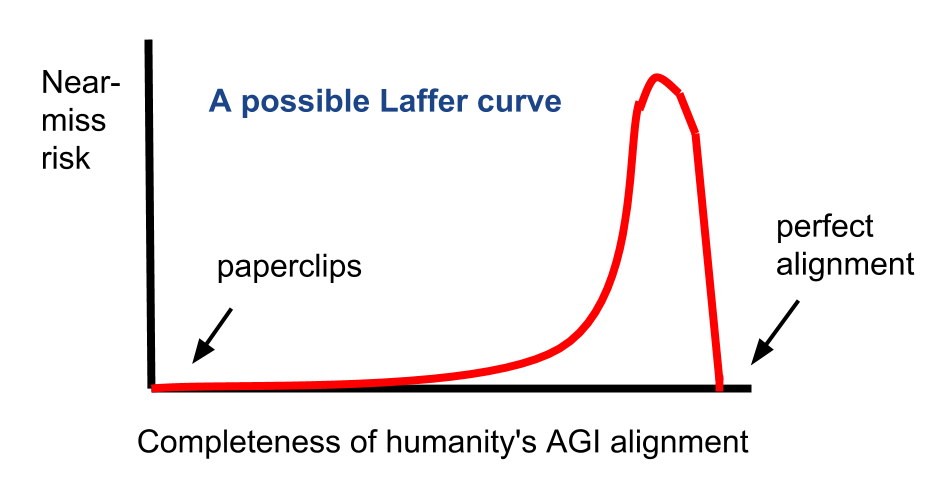

What is neglected within AI safety is suffering-focused AI safety for preventing S-risks. Most AI safety research, and existential risk research in general, seems to be focused on reducing extinction risks and on colonizing space, rather than on reducing the risk of worse-than-death scenarios.

There is also a risk that some AI alignment research could be actively harmful. One scenario where this could happen is a "near miss" in AI alignment. In other words, risk from AI alignment roughly follows a Laffer curve, with AI that is slightly misaligned being more risky than both a perfectly aligned AI and a paperclip maximizer. For example, suppose there is an AI aligned to reflect human values. Yet "human values" could include religious hells. There are plenty of religious people who believe that an omnibenevolent God subjects certain people to eternal damnation, which makes one wonder whether these individuals would implement a Hell if they had the power. Thus, an AI designed to reflect human values in this way could end up subjecting certain individuals to something equivalent to a Biblical Hell.

Regarding specific AI safety organizations, Brian Tomasik wrote an evaluation of various AI/EA/longtermist organizations, in which he estimated that MIRI has a ~38% chance of being actively harmful. Eliezer Yudkowsky has also harshly criticized OpenAI, arguing that open access to their research poses a significant existential risk. Open access to AI research may increase the risk of malevolent actors creating or influencing the first superintelligence, which poses a potential S-risk.

Sure, but people are still researching narrow alignment/corrigibility as a prerequisite for ambitious value learning/CEV. If you buy the argument that safety with respect to s-risks is non-monotonic in proximity ...