Epistemic status: I lean toward 'yes, in some cases' - but a deeper dive into the question could be a valuable though potentially large research project. In this post I’ll just provide some intuitions for why I think it could be an important question to ask.

Introduction

I recently argued that EA organisations should outsource their tech work to nonprofit agencies. This got me wondering if the arguments more generally imply that EA organisations should strive to divide themselves to get as close as possible to having a single responsibility.[1]

Splitting as often as possible

Higher fidelity funding signals

Let’s imagine a hypothetical charity, Artificial Intelligence & Malaria Longtermist Exploration & Shorttermist Solutions (AIMLESS), that spends 50% of its effort on distributing bednets with world-class efficiency and 50% on performing mediocre AI research.

If we believe the EA space benefits from wisdom of the crowd, then that wisdom is purer the easier it is for EAs to donate money to specific causes or projects they believe in.[2] If AIMLESS is the best bednet-distributing organisation, it will be difficult or impossible to tell in what proportion its donors are supporting the bednets or the AI research - and which one puts off non-donors who might have supported one of the programs.

Better inter-organisational comparisons

We tend to think of the competition between for-profits as allowing an organic comparison that isn’t possible between nonprofits, but enthusiastic splitting could turn that on its head. Value-aligned nonprofits can make comparison far clearer if they split themselves enough that what they’re doing is directly comparable.

This needn’t mean they necessarily compete - multiple tech nonprofit agencies could exist in the EA space, each focusing on different cause areas, for example. But the more granular comparability would make it much easier to diagnose when one is outperforming the other, and much easier to fix the problem. The stronger org could share its practices with the weaker one to start with, and if that didn’t work, could start competing directly for funding - allowing underperforming organisations the crucially important function of ceasing to exist. Then if one went to zero funding, the other would aim to split into two as soon as possible so the ecosystem continued with minimal interruption (unless the defunct one’s issue had been that it wasn’t in a viable niche).

If one were inclined toward awful neologisms, one could call this coopetition.

More efficient allocation of time for support staff

In principle, extracting support departments from multiple organisations into a smaller number of consultancies or agencies allows the same number of staff to produce significantly more value - I showed one way one could quantify that benefit here. To give a quick summary: if we assume that the amount of valuable work for any given department fluctuates over time and that their stated goals have similar value, then combining multiple departments into a single external organisation means they can always prioritise the highest priority work from any of their dependent organisations.
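A toy simulation can make the pooling intuition concrete. This is only a sketch under made-up assumptions, not the quantification linked above: the function name `simulate`, the uniform random task values, the per-org "urgency" factor and all parameter defaults are hypothetical choices for illustration. Each period, every org has a backlog of tasks whose value fluctuates; siloed departments can only work on their own org's backlog, while a pooled agency with the same total capacity picks the most valuable tasks from any org.

```python
import random


def simulate(num_orgs=4, periods=1000, tasks_per_org=5, capacity_per_org=2, seed=0):
    """Return (siloed_total, pooled_total) value delivered over all periods.

    All numbers here are illustrative assumptions, not real data.
    """
    rng = random.Random(seed)
    siloed_total = 0.0
    pooled_total = 0.0
    for _period in range(periods):
        # Each org's backlog for this period. A per-org urgency factor makes
        # some orgs' work temporarily more valuable than others'.
        backlogs = []
        for _org in range(num_orgs):
            urgency = rng.random()
            backlogs.append([urgency * rng.random() for _task in range(tasks_per_org)])

        # Siloed: each department completes only its own org's best tasks.
        for backlog in backlogs:
            siloed_total += sum(sorted(backlog, reverse=True)[:capacity_per_org])

        # Pooled: the same total capacity, spent on the best tasks from any org.
        all_tasks = [value for backlog in backlogs for value in backlog]
        pooled_total += sum(sorted(all_tasks, reverse=True)[:capacity_per_org * num_orgs])
    return siloed_total, pooled_total


siloed, pooled = simulate()
print(f"siloed: {siloed:.1f}, pooled: {pooled:.1f}")
```

The pooled total can never be lower than the siloed total in this model, because the siloed choice of tasks is always one of the options available to the pooled agency. How large the gap is depends entirely on the assumed fluctuations, and the model ignores coordination overhead and any loss of org-specific context.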

These agencies could either be client-funded or donor-funded, in the latter case doing some amount of their own internal prioritisation. I discussed the advantages of each approach here. A third option would be to determine their priorities via regranting orgs who specialise in prioritising among subareas, and who distribute grants among those so as to optimise incentives - basically mimicking the operations and management of a large unitary organisation.[3]

But no oftener

If the titular hypothesis is true, it should start conversations, not end them. In software development, where the single responsibility principle is fairly uncontroversial, understanding how to apply it well is an art form. It’s often better to delay a split even when you know one should happen because you expect to learn more about how to split by waiting. So this post is a call for greater scrutiny of organisations’ focus, not for any specific chopping plan. Here are some considerations which might go either way…

Synergies

Combining functions can allow organisations to develop internal synergies; separating functions can create overhead. An organisation could have many different departments, but if those are all in service of a coherent goal, splitting may be unwise. Sometimes a large responsibility might necessitate a large organisation.

Having said that, splitting organisations needn’t remove close cooperation or even physical proximity between their former departments - it just makes it an option, rather than a necessity. It also needn’t remove any functions. If the judgement of the executives of a multi-responsibility organisation was good enough that people wanted to continue to fund their judgement, those executives could still become, say, one or more regranting/consulting/management organisations - but ones that could now be evaluated separately from the direct workers.

This kind of philosophy (embedded video link)

Legal hassles

Setting up a single charity is a pain. Setting up multiple charities is multiple pains. This could be offset somewhat by having easy access to a specialised EA-focused legal agency, or by just not becoming charities if most of their funding would be granted by donor charities (meaning there wouldn’t be a tax incentive for charitable status).

Restricted donations as an alternative

This is possible and in practice common, but seems to lose many of the benefits. Suppose AIMLESS considered restricted donations as an alternative to splitting. The former would have at least two issues that the latter would avoid:

- Counterfactual fungibility: if Alice gives $x restricted to their malaria program and Bob then gives $x unrestricted, it seems likely the charity will divert all the latter to its AI program, leading to the same outcome as if both donations had been unrestricted. This is less of a problem the more of its grants are restricted, but the organisation will always be incentivised to seek unrestricted grants or to seek specific ‘restrictions’ that match its intentions.

- Logistical ambiguity: assuming AIMLESS has any support staff or other workers whose time is naturally split between both projects, even with the most scrupulous intentions it’s going to be very hard to ensure they split their attention between projects in proportion to the donation restrictions.
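The counterfactual fungibility point can be checked with a little arithmetic. The sketch below assumes a simple, hypothetical model of the charity's behaviour: it has a preferred budget split (`target_share`), honours every restriction, and then steers unrestricted money toward whichever programs are furthest below their preferred budget. The function name `allocate` and the figure of 100 per donor (standing in for $x) are illustrative only.

```python
def allocate(restricted, unrestricted, target_share):
    """Return the final budget per program.

    restricted:   dict of program -> donations restricted to that program
    unrestricted: total unrestricted donations
    target_share: dict of program -> fraction of the total budget the
                  charity would choose if it had full discretion
    """
    total = sum(restricted.values()) + unrestricted
    # How far each program falls short of the charity's preferred budget
    # once restricted gifts are counted.
    gaps = {
        program: max(share * total - restricted.get(program, 0.0), 0.0)
        for program, share in target_share.items()
    }
    gap_sum = sum(gaps.values())
    final = {}
    for program, share in target_share.items():
        if gap_sum > 0:
            top_up = unrestricted * gaps[program] / gap_sum
        else:
            top_up = unrestricted * share
        final[program] = restricted.get(program, 0.0) + top_up
    return final


preferred = {"malaria": 0.5, "ai": 0.5}

# Alice restricts 100 to malaria; Bob gives 100 unrestricted.
with_restriction = allocate({"malaria": 100.0}, 100.0, preferred)

# Both give 100 unrestricted.
without_restriction = allocate({}, 200.0, preferred)

print(with_restriction)
print(without_restriction)
```

Under these assumptions both scenarios end with the same budgets, so Alice's restriction changes nothing. The restriction only starts to bind once restricted gifts to a program exceed what the charity would have spent on it anyway, for example `allocate({"malaria": 150.0}, 50.0, preferred)`.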

Classes of division

One could slice up a nonprofit at least two ways - by shared services such as tech, marketing, legal, HR, design and perhaps some types of research, and by focused services or products, such as distributing bednets. The arguments above apply to both, but the logistics of splitting them might vary predictably.

Shared services

- We’re already familiar with the concept of shared service agencies and consultancies; we seem to be increasingly viewing them as a good thing,[4] and such services are already being set up.

- These services are often essential - an org often cannot forego legal input, hiring or having a website.

So shared service agencies can be established before orgs split off their service departments, and would need to be - unless the org converted its department into the first such agency.

Products

- It will rarely seem appealing to create an organisation to do work that an existing organisation already covers. The new organisation won’t be directly comparable to the existing organisation, removing the advantages of competition.

- As long as a product-oriented org has at least one product, having other distinct products is optional.

So splitting orgs by product doesn’t create new dependencies between separate organisations, though it probably requires more initiative to do.

Real world splitting

Splitting services

Any EA org that has an ‘X-service-department’ of at least 1 person should arguably split. If we want to encourage splitting, we might also want to develop encouraging community norms, e.g. praising those who try it, or more concretely having shared service agencies prioritise newly split organisations.

Splitting products

Product-splitting is more awkward, both because it’s harder to describe without singling out specific orgs, and because it’s harder to identify when multiple products constitute a single ‘responsibility’. A good sign that you might lean towards splitting is that there’s no succinct way of describing the sum of all your products without using the word ‘and’. A good sign that you might lean away from splitting is that, if you were interviewing someone for work on Product B, one of the most practically valuable qualifications they could have is previous work on Product A and vice versa (example, further discussion). Recombining later is always possible, and perhaps less subject to status quo bias.

But if the hypothesis of this post is correct, organisations should err towards splitting when in doubt. I offer for discussion, but don’t want to commit either way on the following examples:

CEA, whose products* include

- Community building grants

- Group support

- The EA forum

- Other EA websites

- Running events

- Supporting EAGx events

- Community health

- Media training, in some capacity

80k, whose products* include

- General careers advice (web content)

- Bespoke careers advice (1-on-1s)

- Careers research

- Podcast

- Job board

All with a broad longtermist focus.

Founders Pledge, whose products* include

- Philanthropic advisory

- Cause area research

- Multiple DAFs

- Pledges

- Events

- Community building

Rethink Priorities, whose products* include

- Explicitly longtermist research

- Explicitly shorttermist research

*Some of these are more like services, but since the case for splitting services off seems both stronger and clearer than products, it’s not important to distinguish here.

Summary

To paint a clear picture, a post-split org would likely start out with mostly the same people working in the same shared office (if they had one) on the same projects, with the same product staff seeking project management from the same managers.

The immediate differences might be a) that for services for which an agency already existed, the relevant service staff would need to apply to join that agency or be retained initially as contractors, with the intention of the org ultimately moving to the agency for the service; b) there would be some setup hassle with registering new organisations etc (but resolving this would be a priority for the rest of the community).

But these new orgs would be decoupled from each other, such that over time they could try working with different orgs, each seeking the optimal relationships for their specific role.

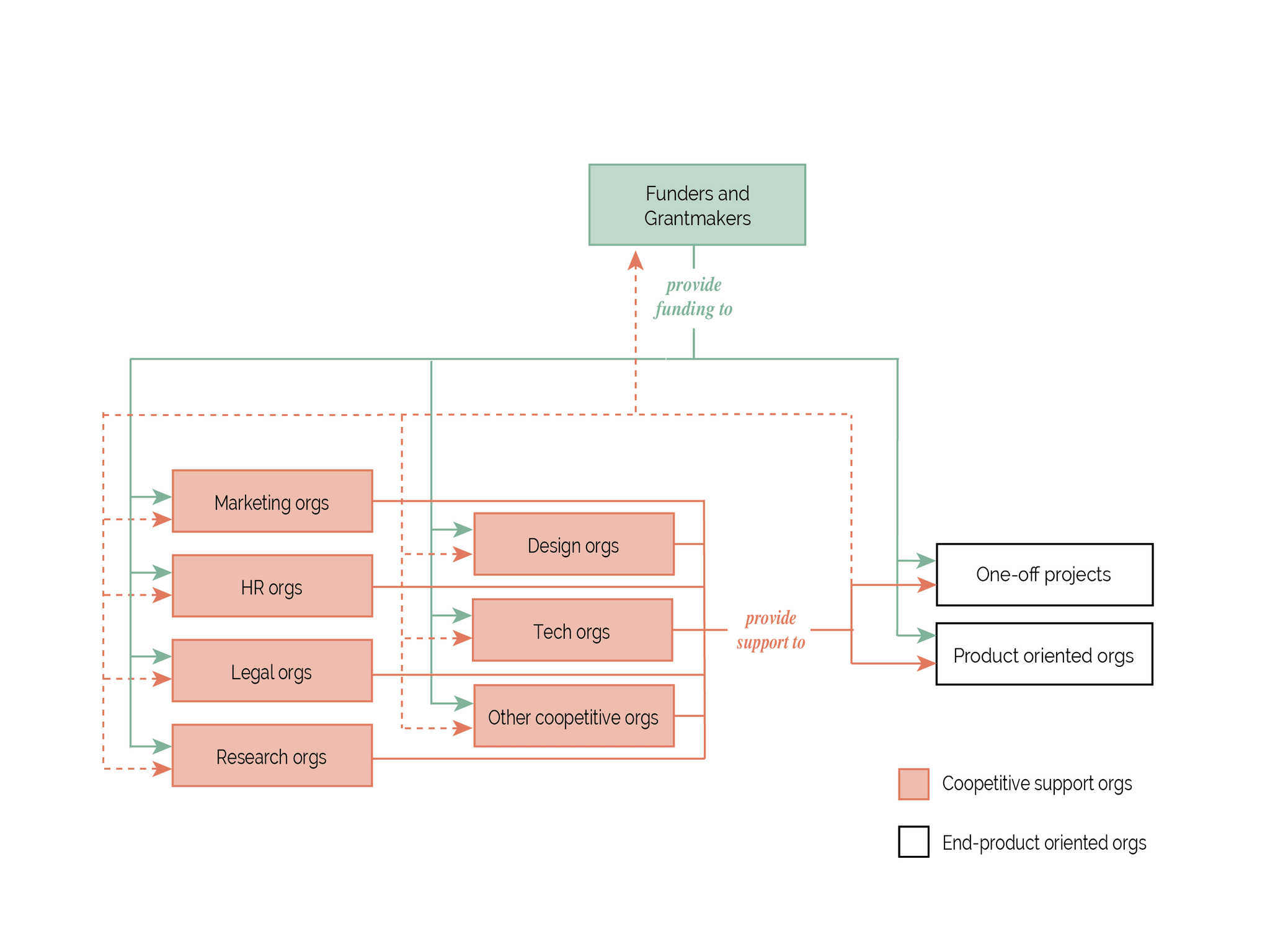

Finally, here’s an illustration of what this future version of the effective altruism ecosystem could look like:

Acknowledgements

Thanks to Evan Chu, Emrik Garden, Jonas Wagner, Krystal Ha, Siao Si Looi, Dony Christie, Jonas Moss, Tazik Shahjahan and Onni Arne for input on this post. Needless to say, mistakes are entirely my cat's fault.

Much of the argument here maps onto the single responsibility principle in object-oriented software design. This states that each object or class should have a single concern. ↩︎

This holds both ‘vertically’, as in allowing EAs to choose the level of specificity at which they feel comfortable targeting their support (eg shorttermist < global poverty < diseases < neglected tropical diseases < schistosomiasis < deworming project), and ‘horizontally’, as in allowing EAs to choose the focus at their given level of specificity (eg within global poverty, diseases=education=women’s empowerment=mental health). ↩︎

The purported benefits of the single responsibility principle are semi-intentionally analogous to those I describe here:

- ‘Easier to Understand’ - cf section 1

- ‘Easier to maintain’ - cf section 2 (kinda)

- ‘More reusable’ - cf section 3

While I’m somewhat suspicious of my epistemics here, having come from a software development background and just happened to see it this way, I do think there’s some justification for this analogy. To wit, computer programs have a single goal or clearly aligned set of goals, which all of their modules are set up to serve. The same can’t be said of the for-profit space or of non-EA nonprofits, but it’s uniquely true - at least truer - of the EA space. While EAs inevitably have some value disparity, it’s generally smaller, more explicit and more compartmentalisable - as in, animal welfarists, global povertyists and longtermists are quite closely value aligned among themselves, even when not with each other. So even if the EA space as a whole isn’t value-aligned enough for such a model (and I’m not sure it isn’t at the organisational level), it’s easy to identify subsets of it which are. ↩︎

‘Agency’ vs ‘consultancy’ is a vague distinction, but I’m using the former for consistency with the rest of this post: a consultancy typically provides a superset of the services an agency provides, so where those services aren’t intrinsically linked, the argument of the rest of this post would apply to splitting the consultancy. ↩︎

This doesn't sound like most people's view on democracy to me. Normally it's more like 'we have to relinquish control over our lives to someone, so it gives slightly better incentives if we have a fractional say in who that someone is'.

I'm reminded of Scott Siskind on prediction markets - while there might be some grantmakers who I happen to trust, EA prioritisation is exceptionally hard, and I think 'have the community have as representative a say in it as they want to have' is a far better Schelling point than 'appoint a handful of gatekeepers and encourage everyone to defer to them'.

This seems like a cheap shot. What's the equivalent of systemwide security risk in this analogy? Looking at the specific CEA form example, if you fill out a feedback form at the event, do CEA currently need to share it among their forum, community health, movement building departments? If not, then your privacy would actually increase post-split, since the minimum number of people you could usefully consent to sharing it with would have decreased.

Also, what's the analogy where you end up with an increasing number of sandboxes? The worst case scenario in that respect seems to be 'organisations realise splitting didn't help and recombine to their original state'.

I agree in the sense that overhead would increase in expectation, but a) the gains might outweigh it - IMO higher fidelity comparison is worth a lot and b) it also seems like there's a <50% but plausible chance that movement-wide overhead would actually decrease, since you'd need shared services for helping establish small organisations. And that's before considering things like efficiency of services, which I'm confident would increase for the reasons I gave here.