Summary

- Control.AI’s treaty proposal for building AI safely, A Narrow Path, is unlikely to work in China as currently proposed, because it is poorly matched to China’s political system

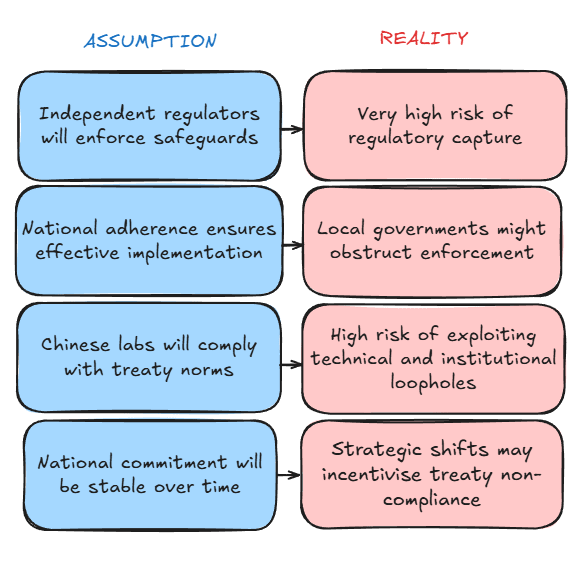

- Some potential risks in China include: regulatory capture, interference or non-compliance by local governments, treaty evasion by labs, and potential national defection. These make treaty enforcement fragile without a major redesign

- Suggested solutions:

  - Replace the assumption of independent regulators with competent, verifiable mechanisms, similar to those in China’s civil nuclear sector

  - Build treaty structures that anticipate local evasion, e.g. via strong national and international supervisory mechanisms, or formal integration of Party-led oversight

  - Treat national defection risk as an immediate design problem, and consider incentives and costs from the very beginning

  - Emphasise getting genuine state/Party buy-in, rather than assuming strict adherence to treaty rules

Mini-Disclaimer: The ideas in this post are about political dynamics in China and the incentives that shape them. There's always a risk that coverage of these issues might be read as an adversarial or accusatory narrative against China, turning shared concerns into an us-versus-them dynamic that makes cooperation on AI governance between great powers harder. This dynamic can also spill over into negative sentiment toward Chinese people or others of East Asian descent. I hope this post manages to avoid this, and that my readers appreciate these concerns!

Introduction

Our future might well depend on whether we can get global leaders to agree to meaningful treaty commitments before we build super-intelligent systems.

This means that the success of any global governance regime will hinge on getting a deal done with China.

To see how such a deal might fare in practice, I examined Control.AI’s governance proposal A Narrow Path as part of an Apart Research red-teaming policy sprint. Unfortunately, I found that it rests on unrealistic assumptions about how one-party political systems like China’s actually function.

For those who haven’t heard of it, A Narrow Path presents an ambitious vision for a treaty that aims to: a) cover the technical pathways by which misaligned superintelligence could emerge; and b) establish institutions and safeguards that create the conditions for stable global implementation.

As treaties go, it’s definitely on the bold side. “Phase 0” of this plan (subtitled Safety) proposes a 20-year moratorium on the most powerful models, designed to give humanity breathing space to develop aligned and safe AI systems. Phases 1 and 2 then outline how humanity could reach a stable and safe future state, having achieved international coordination and Safe Transformative AI.

Phase 0 of the treaty proposal, which I focus on here, aims to ensure a state of safety by covering all the bases needed to prevent the emergence of super-intelligent systems:

- Banning AIs that improve other AIs

- Prohibiting systems that can escape their environments

- Ensuring models are bounded and predictable

- Requiring strict licenses for models over a certain size

These would be enforced through national-level independent agencies, corporate oversight, and an international treaty agreement.

The logic of A Narrow Path is clear-headed, but it mostly abstracts away from the messier issues of political enforcement and incentives. Noticing this, I decided to red-team A Narrow Path from the perspective of China’s political economy (the study of incentives in politics) to test how the treaty might succeed or fail in that context.

What’s been done before

This is a relatively neglected angle. Governance researchers are increasingly recognising the importance of aligning AI governance efforts with state incentives, and there are some good papers on incentives for sharing the benefits of AI and prestige motivations. But I think there’s too much focus in this literature on signatories as abstract geopolitical variables, rather than specific actors with distinctive political structures.

There are a few exceptions, such as Governance.ai’s analysis of Beijing’s strategic priorities and analogies to institutions such as the International Atomic Energy Agency, which engage more seriously with issues like China's internal governance.

There’s also a bunch of work that engages deeply with China’s domestic AI governance, by researchers like Matt Sheehan, Jeff Ding, Concordia AI, and the ChinaTalk ecosystem, but this rarely intersects with concrete treaty design or enforcement analysis. I also haven’t seen examples of China-focused AI governance work explaining how and where things could go wrong.

On the other hand, China’s political economy literature gives us many clear reasons to anticipate treaty mechanisms not working, which I look into in this post. I’ll draw on specific case studies from Chinese governance in sectors like tech regulation, financial oversight, and energy policy, to show how enforcement mechanisms can fail, and to argue that any viable treaty must account for domestic political and institutional dynamics.

To make this concrete, I’ve split the argument into four key assumptions and their corresponding realities, shown in the figure below. Each assumption is discussed in its own section.

1. Regulatory independence is vulnerable to capture

A pillar of the Narrow Path proposal is the idea that national AI regulators should have “adequate independence from political decision-making”. These regulators would play a major role in upholding the licensing regime (Sections 5 and 6), enforcing restrictions on superintelligence (Section 1), containment (Section 2), and recursive self-improvement (Section 3), as well as ensuring that deployment only occurs if a valid safety case has been made (Section 4).

The proposal also outlines an impressive set of enforcement powers for these independent national regulators. This includes: shutting down training runs or entire projects, permanently revoking licences, firing teams, prosecuting individuals, and in extreme cases, fully dissolving companies and auctioning their assets.

On paper, this makes sense: independence would allow regulators to enforce compute thresholds, monitor capabilities, and run safety checks free from political interference. A robust independent institution with real “teeth” (enforcement powers and meaningful penalties) is clearly the ideal way of monitoring and controlling frontier AI development. But, sadly, in China’s political system, regulatory independence will only ever be more of an aspiration than a reality.

Why wouldn’t this work in China?

Basically, Political Economy 101.

In democratic systems with a separation of powers, regulators can enforce a set of rules even when business or government would rather they didn’t. In a one-party state with centralised authority, not so much.

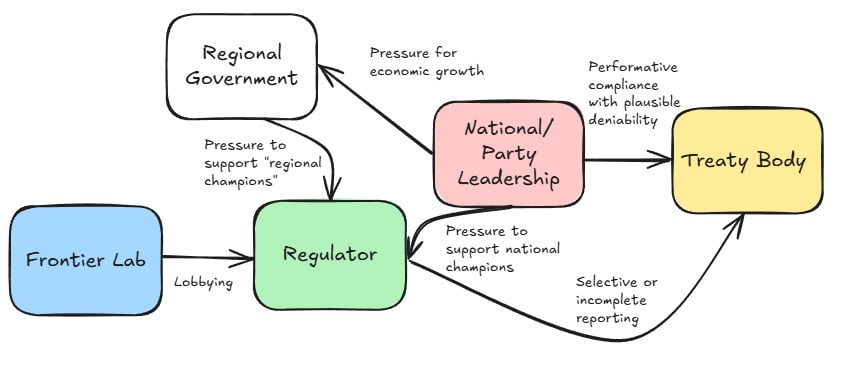

In China, powerful state actors and the businesses they support just find it terribly inconvenient to be criticised, monitored or restricted by independent regulators. As a result, regulators are susceptible to pressure from all sides: central government, local government and the private sector.

How it could go wrong — a vignette:

China signs the treaty. Regulators begin by following treaty norms. But under pressure from labs in tandem with local officials, they’re gradually nudged to adjust or sidestep these requirements.

What begins as a few small concessions by the regulators (a bit of underreporting on a training run here, a sandbagged model test there) starts to accumulate.

Before long, a frontier lab is steering towards superintelligent capabilities, with the regulator either unaware or complicit.

This pattern isn’t hypothetical. In China, regulatory capture (when regulators serve political or industry interests rather than their stated goals) is the norm rather than the exception. Examples range from failed attempts to independently regulate the power sector in the early 2000s to high-profile incidents like the 2020 Ant Group IPO (initial public offering). There, the China Securities Regulatory Commission blocked the IPO at the last minute, nudged by central authorities who had started to panic over Jack Ma’s public criticism of China’s financial regulation.

These cases show how nominally autonomous agencies cast off the illusion of independence when the political winds shift.

The current state of AI regulation reinforces this point. The State of AI Safety in China (2025) report describes how enforcement from the two leading bodies, the Cyberspace Administration of China (CAC) and the Ministry of Science and Technology (MOST), uses “campaign-style enforcement” which remains “selective and uneven”, following state or party mandates.

These regulators can lack the independence, power or incentives to consistently uphold national law, let alone enforce an international treaty.

What we can do about it

This doesn’t have to be a fatal flaw. True regulatory independence is unrealistic in China, but competence, transparency and oversight are plausible goals. Regulation in the Chinese civil nuclear sector (a success story, by most accounts) has focused on a combination of the three. The International Atomic Energy Agency conducts treaty-based inspections in the country, working alongside the China Atomic Energy Authority and the National Nuclear Safety Administration. These Chinese bodies both operate within Party-led ministries, but their arrangement has provided a system of technical verification and compliance that could offer a useful precedent for AI governance (Andrews-Speed, 2020).

I'm confident that closer attention to how this model works in China could make A Narrow Path more viable.

2. Local governance can complicate national enforcement

Even if a treaty could bring competent, aligned regulation to AI governance in China, that would only address part of the problem. A lot of the day-to-day implementation would still fall to local governments, creating a principal–agent problem, where the “agent” (local officials, in this case) is given a task, but has different incentives to the “principal” (central government), so pursues individual or local priorities instead.

In China, this dynamic often plays out in politically sensitive or economically strategic areas. The central government appoints capable regulators, but enforcement defaults to local authorities whose incentives are not always aligned. Local leaders are rewarded for GDP growth, investment, and prestige, and often benefit from informal deals with business, which makes them more willing to bypass national laws or mandates.

How it could go wrong — a vignette:

A frontier lab becomes a “regional champion,” closely tied to local officials who see it as a source of growth and prestige.

In return for this success, the local government works with the lab to shape safety assessments, filter information to regulators, and make sure that inspections are carefully managed or delayed.

This process follows a common pattern, as people (like myself) who experienced the early years of China’s Air Pollution Prevention Plan (2013–2017) will remember. To protect their industrial sectors, local governments in smog-afflicted Hebei and Shandong under-reported emissions, disabled air quality monitors, and delayed enforcement. This only shifted when the central government introduced its own central inspection teams, which bypassed local authorities entirely.

In the AI sector, we can see how these trends might begin. Local officials see AI labs, data centres, and related infrastructure as valuable assets. Despite the current division of labour between the hubs of Beijing, Shenzhen and Shanghai, with each focusing on different sectors of the AI economy, it’s easy to imagine competition between these hubs heating up. We also see strong backing for “regional champions” in less developed areas, such as iFlytek in Anhui and cloud computing centres in Guizhou, which could cause central and local government incentives to diverge.

This isn’t just about frontier labs and model training. Section 5 of A Narrow Path calls for cloud providers to implement shutdown mechanisms and KYC (Know-Your-Customer) reporting (citing Egan & Heim, 2023). The same section also mentions Hardware-Enabled Mechanisms (HEMs) that depend on transparency and cooperation throughout the chip supply chain. You might be able to guarantee central control of frontier labs, but the tangle of cloud infrastructure, chip manufacturing, and hardware logistics means that local governance incentives, and the associated vulnerabilities, crop up all along the supply chain.

What we can do about it

Local governance should be treated as a likely point of failure. This is something to address diplomatically and carefully. You can’t just write into the treaty text, “we don’t trust your local officials”, especially if it singles out a specific signatory.

But there are ways to address this. Alongside increased international verification of treaty mechanisms at various stages, treaty design can still build in measures to limit the ability of local actors to quietly ignore or subvert requirements.

On the international level, borrowing from IAEA protocols, a treaty can use capacity-building as a polite euphemism for improved observation and awareness of local actors. Providing equipment, training, and standardised protocols can be framed as technical support, keeping local governments on-side, while allowing for more consistent monitoring.

A treaty can also insist on centralised governance and demonstrated competence. In China, things tend to work better when the central government and Party get involved. China successfully uses centralised control to manage regional instability in sectors like the military and financial system.

In the scandal-beset food safety system, greater involvement by Party members has been associated with improved regulatory transparency (Gao et al., 2023), and less corruption in corporate governance (Xie et al., 2022).

Can we borrow from this?

I'm not sure. Giving the CCP a more direct role in AI regulation might sound controversial for a couple of reasons: first, because centralised control doesn’t fit well with the principles of independent regulation (e.g. OECD, 2016); second, because handing power to China’s ruling party generates other risks (see section 4).

But ultimately, we should be under no illusions about where power in China actually lies, and this is unlikely to change any time soon. As such, creating provisions to involve the Chinese Communist Party more directly in domestic AI governance could be one pathway to improve competence and transparency.

3. Labs might exploit technical and institutional loopholes

Let's say China buys into the treaty, regulators are empowered to do their job, and the local government is either aligned with safety goals or effectively monitored. Does this make the system secure?

Perhaps not.

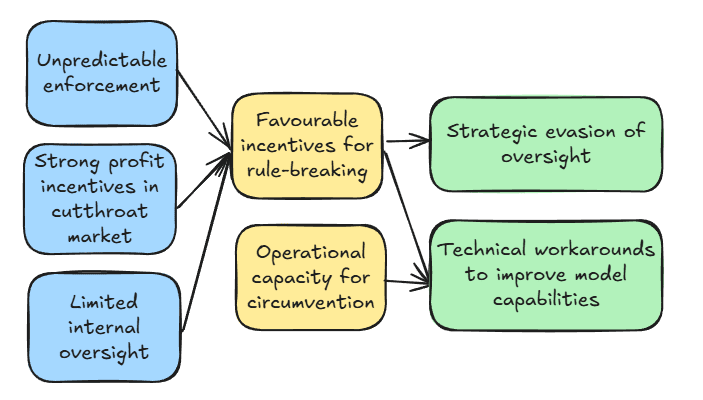

Even sincere enforcement by aligned national regulators might be meaningless if labs can find ways around the regulations, and in China this process could follow rules that the treaty does not anticipate.

A Narrow Path proposes limiting frontier AI systems through a licensing regime based largely on compute thresholds (the amount of computing power used to train a model).

Judging by the examples in A Narrow Path, the framework leans heavily on Western regulatory models. In fields like pharmaceuticals and nuclear safety, Western companies are expected to self-monitor and set up internal systems, such as oversight committees, review boards and compliance training, to meet liability and regulatory demands. However, in China, to the extent that these mechanisms exist, firms often find ways to avoid or bypass internal oversight.

How it could go wrong — a vignette:

A Chinese lab trains multiple sub-threshold model “shards”¹ on local and grey-market GPUs. Oversight committees and review boards incorrectly certify that operations comply with licensing rules. After passing a basic audit, the lab merges them into a far more capable system and deploys it at scale. Regulators, relying on internal paperwork, miss the leap in capabilities.
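To make the loophole concrete, here is a minimal Python sketch of why a per-run compute check can miss this kind of merge. It assumes a hypothetical licensing threshold of 10^25 training FLOP and a hypothetical `TrainingRun` record whose `parent_ids` field lists the runs merged into it; neither comes from A Narrow Path. Checking each run in isolation flags nothing, while summing compute across a model's whole lineage flags the merged system.

```python
from dataclasses import dataclass

# Hypothetical licensing threshold in training FLOP (illustrative, not from A Narrow Path).
LICENCE_THRESHOLD_FLOP = 1e25

@dataclass(frozen=True)
class TrainingRun:
    run_id: str
    flop: float
    # Hypothetical, self-declared field: runs whose weights were merged into this one.
    parent_ids: tuple[str, ...] = ()

def per_run_check(runs):
    """Flag only runs whose own training compute crosses the threshold."""
    return [r.run_id for r in runs if r.flop >= LICENCE_THRESHOLD_FLOP]

def lineage_flop(run_id, runs_by_id):
    """Sum the compute of a run and of every run merged into it, directly or indirectly."""
    total, seen, stack = 0.0, set(), [run_id]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # count shared ancestors only once
        seen.add(current)
        run = runs_by_id[current]
        total += run.flop
        stack.extend(run.parent_ids)
    return total

def lineage_check(runs):
    """Flag runs whose accumulated lineage compute crosses the threshold."""
    runs_by_id = {r.run_id: r for r in runs}
    return [r.run_id for r in runs
            if lineage_flop(r.run_id, runs_by_id) >= LICENCE_THRESHOLD_FLOP]

# Three sub-threshold shards, then a cheap merge-and-finetune run.
runs = [
    TrainingRun("shard-a", 4e24),
    TrainingRun("shard-b", 4e24),
    TrainingRun("shard-c", 4e24),
    TrainingRun("merged", 1e23, parent_ids=("shard-a", "shard-b", "shard-c")),
]

print(per_run_check(runs))  # []
print(lineage_check(runs))  # ['merged']
```

Lineage accounting only works if labs report merges honestly (`parent_ids` is self-declared here), which is exactly where weak internal oversight becomes the binding constraint.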

Although this scenario could be a risk anywhere, I’d estimate that frontier labs would be more willing to take these risks in China. In China’s fiercely competitive, low-margin tech industry, regulatory evasion has historically been very common, mainly because of weaker liability and less predictable enforcement (see Lee, 2018).

One particularly egregious recent example was Pinduoduo, which created malware-ridden apps to exploit Android system vulnerabilities, collecting excessive user data and interfering with rival apps. China’s internal corporate oversight bodies are particularly weak, often lacking independence, technical capacity, or the political backing to challenge powerful firms. If a lab wanted to bypass regulations, there would be very little resistance from within the company.

As for how a lab might get around the technical compute governance limits in the Narrow Path proposal, Shavit (2023) outlines various ways compute controls might be circumvented; in China, grey-market chips, under-monitored supply chains, and firmware tampering seem the likeliest culprits. Current trends in AI model architectures suggest that it will get easier to build capable systems that the policy doesn’t catch, for a couple of reasons:

- Compute-based limits are becoming less reliable for judging how powerful a given model is. In China, Kimi K2 and DeepSeek-V3 are catching up with much larger Western models in capabilities, despite using far less training compute.

- Large-scale use of inference compute, as seen in OpenAI’s o3 and GPT-5 Thinking, can significantly enhance performance without training runs ever reaching FLOP limits (Ord, 2025).
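A rough arithmetic sketch of the second point, in Python, using two common rule-of-thumb approximations: about 6·N·D FLOP to train a model with N parameters on D tokens, and about 2·N FLOP per generated token at inference. The model size, token counts, query volume and the 10^25 FLOP threshold are all hypothetical. A threshold that only counts training compute passes the model, however much compute is later spent on reasoning at inference time.

```python
# Hypothetical licensing threshold on *training* compute (illustrative only).
TRAINING_THRESHOLD_FLOP = 1e25

def training_flop(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training cost: ~6 FLOP per parameter per training token."""
    return 6 * n_params * n_tokens

def inference_flop(n_params: float, tokens_generated: float) -> float:
    """Rule-of-thumb inference cost: ~2 FLOP per parameter per generated token."""
    return 2 * n_params * tokens_generated

n_params = 7e10         # hypothetical 70B-parameter model
train_tokens = 1.5e13   # hypothetical 15T training tokens
tokens_per_query = 1e4  # hypothetical long reasoning trace per query
queries = 1e10          # hypothetical deployment volume

train = training_flop(n_params, train_tokens)
infer = inference_flop(n_params, tokens_per_query * queries)

print(f"training compute:  {train:.2e} FLOP")
print(f"inference compute: {infer:.2e} FLOP")
print("needs a licence under a training-only threshold:", train >= TRAINING_THRESHOLD_FLOP)
```

Aggregate inference compute is not itself a capability measure, but the sketch shows that a rule keyed only to training FLOP never sees it.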

In short, the combination of strong economic incentives, weak internal oversight, and the growing ease of building smaller but highly capable models could make the rules laid out in the treaty increasingly easy to bypass.

What we can do about it

Some technical fixes could improve A Narrow Path’s approach to monitoring compute: Shavit (2023) suggests technical measures such as weight snapshot logging², transcript verification³, and chip-level security⁴ to close loopholes. But all of these require more than tweaks to physical hardware. They also rely on Chinese data centre operators to enforce usage limits, local chipmakers like SMIC or Huawei to implement logging at the hardware level, and government officials to monitor compliance, all of which comes back to political economy.
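As a rough illustration of what weight snapshot logging could involve, here is a minimal Python sketch of a hash-chained log. It assumes snapshots are available as raw bytes and that each entry records a hypothetical cumulative FLOP figure. This is one possible design, not Shavit's specific proposal: each entry commits to the hash of the entry before it, so editing any past entry breaks verification.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def entry_digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same fields always hash the same way.
    return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))

class SnapshotLog:
    """Append-only log in which every entry commits to the previous entry's hash."""

    FIELDS = ("step", "weights_hash", "cumulative_flop", "prev_hash")

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, step: int, weights: bytes, cumulative_flop: float) -> str:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        body = {
            "step": step,
            "weights_hash": sha256_hex(weights),
            "cumulative_flop": cumulative_flop,
            "prev_hash": prev_hash,
        }
        entry_hash = entry_digest(body)
        self.entries.append({**body, "entry_hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {field: entry[field] for field in self.FIELDS}
            if entry["prev_hash"] != prev_hash or entry_digest(body) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True

snapshot_log = SnapshotLog()
for step in (1000, 2000, 3000):
    fake_weights = f"weights-at-step-{step}".encode()  # stand-in for real checkpoint bytes
    snapshot_log.append(step, fake_weights, cumulative_flop=step * 1e20)

print(snapshot_log.verify())  # True

# A lab under-reporting compute after the fact edits one entry...
snapshot_log.entries[1]["cumulative_flop"] = 1e20
print(snapshot_log.verify())  # False
```

A chain like this only helps if someone outside the lab, such as a regulator or inspector, regularly receives the latest entry hash; otherwise a lab could quietly rebuild the whole chain. That handover is an institutional question, not a cryptographic one.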

To stop companies evading treaty regulation, effective implementation needs strong central coordination, widespread adoption of trusted hardware, and an unusual degree of political willingness to scrutinise domestic firms. Within China, one underexplored mechanism may be the Party committee (党委, dangwei) system: Party officials already embedded in research institutions and technology firms who, if aligned with AI safety, could improve top-down compliance and enforcement.

4. National incentives can shift over time

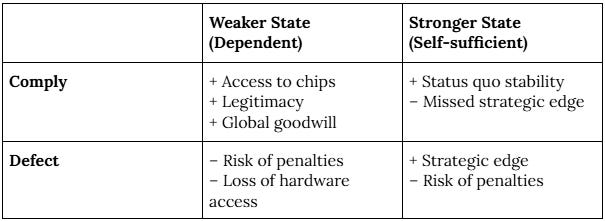

If all the other issues are resolved and local governments, regulators, and frontier labs are aligned or effectively managed, one higher-level risk remains: defection by China’s national leadership.

When developing a global AI treaty, we need to recognise that parties might only follow the rules as long as it remains in their interest to do so. A Narrow Path does address the risk of state-level defection in Phase 1, but this concern arrives only after the treaty system is in place.

As China becomes more self-sufficient in hardware and foundational models (see MERICS, 2025), the incentive to “defect” from the treaty increases. China today struggles to produce its own cutting-edge hardware at scale, and is therefore more likely to comply if it wants to preserve access to international markets and maintain its reputation. On the other hand, a more self-sufficient actor (such as the US today, or China in the near future) sees little cost to defection, especially if enforcement is weak or slow. This basic dilemma can be illustrated with a simple payoff grid:
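A minimal Python sketch of such a grid, with made-up payoffs, shows the dynamic. The assumption doing the work is a hypothetical `self_sufficiency` parameter between 0 and 1 that shrinks the cost of defecting (sanctions, lost access to chips and markets). With low self-sufficiency, complying is the best response to a complying partner; with high self-sufficiency, defecting is.

```python
from itertools import product

MOVES = ("comply", "defect")

def payoff(own: str, other: str, self_sufficiency: float) -> float:
    """Hypothetical payoff to one state; all numbers are illustrative only."""
    # Cost of defecting (sanctions, lost chip and market access) shrinks with self-sufficiency.
    defection_cost = 3.0 * (1.0 - self_sufficiency)
    if own == "comply" and other == "comply":
        return 3.0  # shared safety plus continued market access
    if own == "defect" and other == "comply":
        return 4.0 - defection_cost  # strategic lead, minus sanctions
    if own == "comply" and other == "defect":
        return 0.0  # left behind while the other side races
    return 1.0  # both defect: arms race

def best_response(other: str, self_sufficiency: float) -> str:
    """The move that maximises this state's payoff given the other side's move."""
    return max(MOVES, key=lambda move: payoff(move, other, self_sufficiency))

for s in (0.2, 0.9):
    print(f"self_sufficiency={s}")
    for own, other in product(MOVES, MOVES):
        print(f"  {own:>6} vs {other:>6}: {payoff(own, other, s):.1f}")
    print("  best response to a complying partner:", best_response("comply", s))
```

The specific numbers mean nothing; the point is that the same treaty can flip from self-enforcing to fragile as a single parameter moves, which is why incentives against defection need to be designed in from the start.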

How it could go wrong — a vignette:

As Chinese fabs develop scalable production of sub-5 nm⁵ chips, and Chinese models inch towards the frontier, the central government decides that the strategic value of advanced AI outweighs the benefits of continued treaty compliance. The country begins covertly developing systems that skirt treaty rules, which prompts the US to respond in kind. The treaty collapses and both sides slide back into arms race dynamics.

To followers of geopolitics, this follows a familiar pattern. States often comply with treaties while it suits their interests, then withdraw once the balance shifts: North Korea remained in the Nuclear Non-Proliferation Treaty until it was able to develop nuclear weapons. The U.S. left the Anti-Ballistic Missile Treaty when missile defence became a higher priority. Russia withdrew from the Treaty on Conventional Armed Forces in Europe when it conflicted with its regional aims; the results of which are playing out tragically in Ukraine today. As China gains greater AI capability and hardware sovereignty, its long-term incentive to remain bound by a treaty might be drastically reduced.

I’d predict that this geopolitical impasse (or the belief in such a geopolitical impasse) will be the biggest challenge for a sufficiently ambitious AI treaty. Just as the US, as the current leader, might see little reason to sign a treaty that lets China “catch up”, China could sign the treaty while making plans to defect after achieving self-sufficiency.

What we can do about it

A Narrow Path needs to think earlier about how to maintain incentives against defection. To sustainably bring China and the US on board, a treaty would need a mix of pressure and incentives from the outset. Pressure could come through strict sanctions or trade restrictions that raise the cost of staying out or defecting. Positive incentives could include prestige and influence, access to markets and advanced chips (with Hardware-Enabled Mechanisms to ensure safety), roles in global projects like the global alignment project (GUARD) mentioned in Phase 1 of A Narrow Path, and exclusive use of safety-tested state-of-the-art models.

Early commitments to benefit sharing in the event of economically transformative AGI could also strengthen buy-in. If a nation-state or coalition develops a safe and highly profitable AGI system, all parties would commit to sharing at least some of the benefits, leaving all treaty participants far better off than before. It may sound trite, but the idea that safe AI could allow all of humanity to “win” might be the kind of shared belief needed to sustain such a treaty.

Final Takeaways

Bottom line: a global governance framework for AI needs to be coherent with political economy incentives. Understanding this can help us to think more clearly about what is and isn’t realistically possible, avoid wasted effort on proposals that can’t be enforced, and design solutions to foreseeable problems like those discussed in this post.

Guaranteeing enforcement of a treaty in China won’t be easy. But the worst takeaway from this red-teaming effort would be that establishing effective international governance for AI with China is impossible or unrealistic. First, that conclusion is likely wrong: the US and leading AI labs may currently be a more significant roadblock to progress on AI governance. But also, pragmatically, suggesting that Chinese actors are unwilling or unable to comply could signal bad faith before negotiations begin, and could become a self-fulfilling prophecy.

Without being naïve, I think we can be more optimistic about China’s role in a global AI governance regime. Although A Narrow Path overlooks several political challenges to treaty enforcement in China, some (such as the assumption of independent oversight) could be addressed with modest revisions. And there could even be advantages to China’s centralised system, where strong national mandates and Party integration could embed AI safety commitments within frontier labs. This might avoid a situation like OpenAI’s boardroom dramas, where safety-focused board members and engineers have been edged out. These advantages underscore the importance of securing genuine buy-in from Chinese institutions, a high-level challenge at the heart of any serious attempt to govern AI at a global scale.

Future Work

This is an exercise in how attempts to develop an ambitious and comprehensive treaty for AI might fail because of China-specific factors. Over the next few weeks and months, I hope to build on this by addressing the following questions from a China-centric perspective:

- How a range of other treaty proposals hold up when viewed through the lens of Chinese political incentives and institutional structures. This might include a dive into A Narrow Path’s Phases 1 and 2, and a political economy analysis of the three AI governance strategies outlined by Convergence Analysis: Cooperative Development, Strategic Advantage, and Global Moratorium

- How Chinese AI governance would be impacted by a domestic equivalent of the EU AI Act, particularly in how it addresses supply chains, divergent governance models, and enforcement incentives

- How China’s proposed “global AI cooperation organisation,” announced by Premier Li Qiang at the 2025 World AI Conference, might influence treaty design, and what a credible agreement could look like with China in a leading or co-leading role, drawing on its recent Global AI Governance Action Plan

I plan to look at these questions in a short series of blog posts that should offer grounded insights for policy designers and advocates engaging with China’s approach to AI governance, particularly in areas relevant to existential risk. I hope this work can help bridge technical and geopolitical perspectives in a rapidly evolving field.

I’d love to collaborate with anyone interested, so please contact me at jack.stennett.new@gmail.com or on LinkedIn!

I’d like to thank Apart Research and Control.AI for hosting the hackathon that initiated this project. Apart Labs assisted in funding and supporting the research, without which this work would not have been possible. Jacob Haimes, Tolga Bilge and Dave Kasten provided insightful feedback on the sprint document and initial draft.

1 Model sharding involves dividing a large model into smaller, more manageable pieces or “shards” and distributing these shards across multiple devices or pieces of hardware, which can pose a challenge for compute governance

2 Weight snapshot logging involves periodically saving model parameters during training to create a verifiable history of capability development

3 Transcript verification refers to cryptographically checking detailed training logs to confirm the declared compute use matches reality

4 Chip-level security means here embedding hardware features that monitor, limit, and attest to AI training activity at the processor level

5 “Sub-5 nm” is a marketing term for highly miniaturised, precise semiconductor manufacturing. Chips at this scale achieve much higher transistor density, better energy efficiency, and faster computation per unit area. This tech is pretty much essential for training cutting-edge AI models.